Published Date : November 15, 2016

Have you ever wondered whether your Power Query transformations happen on the data source side (server side) or in local memory (client side)? When you use a relational data source, the query can run on the data source, but that depends on the transformations. Some transformations can be translated to the query language of the data source, and some cannot. As an example, Power BI Desktop recently (I believe in the last few releases) added a feature called Merge Columns. Merge Columns concatenates columns to either create a new column or replace them with the concatenated result. Previously you did the concatenation by adding a new custom column and writing an M expression that joined the columns with the & character. With the new Merge Columns feature this is much easier: you select the columns and apply Merge Columns. This ease comes with a price, a high price I’d say: reduced performance of Power Query and, as a result, of Power BI! Merge Columns doesn’t support query folding, which means it can affect performance badly. In this post I’ll show you how this causes the performance issue and how it can be solved. Note that Merge Columns is only an example here; the same situation might happen with other transformations as well. If you’d like to learn more about Power BI, read the Power BI online book from Rookie to Rock Star.

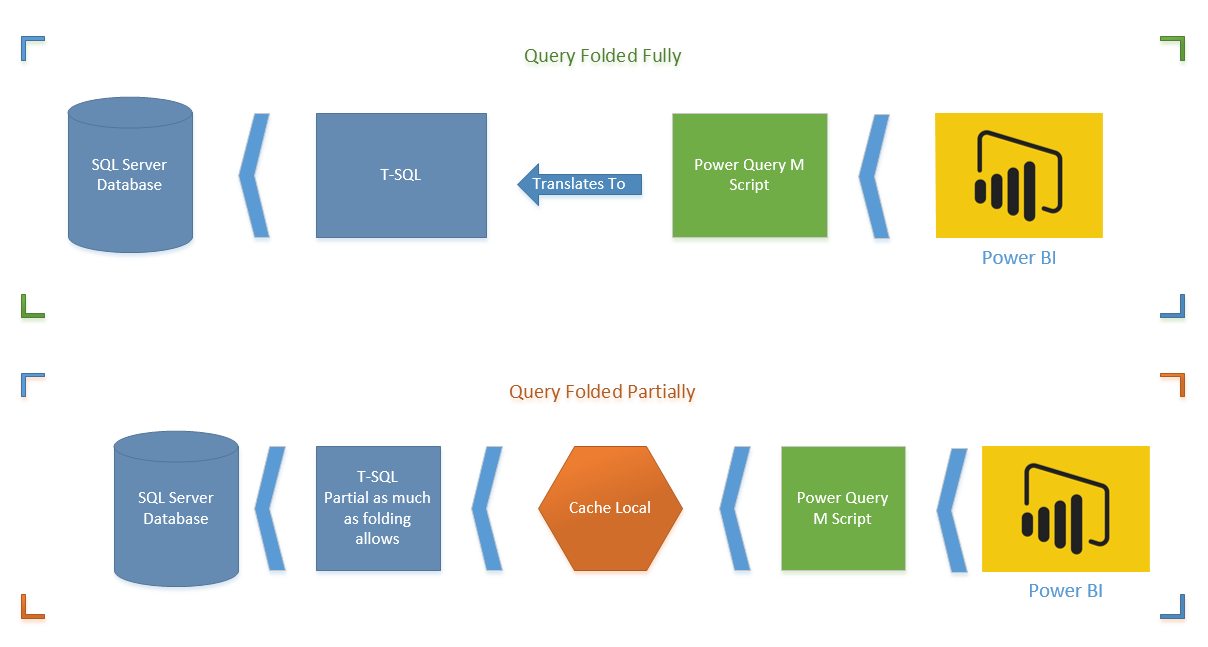

I can’t start talking about the issue without explaining what query folding is, so let’s start with that. Query folding means translating Power Query (M) transformations into the native query language of the data source (for example T-SQL). In other words, when you run a Power Query script on top of a SQL Server database, query folding translates the M script into T-SQL statements and fetches only the final results.

Here is an example of M Script:

    let
        Source = Sql.Databases("."),
        AdventureWorks2012 = Source{[Name="AdventureWorks2012"]}[Data],
        Sales_SalesOrderHeader = AdventureWorks2012{[Schema="Sales",Item="SalesOrderHeader"]}[Data],
        #"Added Conditional Column" = Table.AddColumn(Sales_SalesOrderHeader, "Custom", each if [SubTotal] >= 100 then "0" else "1")
    in
        #"Added Conditional Column"

And here is the folded version of that script, translated to a native T-SQL query:

    select [_].[SalesOrderID] as [SalesOrderID],
        [_].[RevisionNumber] as [RevisionNumber],
        [_].[OrderDate] as [OrderDate],
        [_].[DueDate] as [DueDate],
        [_].[ShipDate] as [ShipDate],
        [_].[Status] as [Status],
        [_].[OnlineOrderFlag] as [OnlineOrderFlag],
        [_].[SalesOrderNumber] as [SalesOrderNumber],
        [_].[PurchaseOrderNumber] as [PurchaseOrderNumber],
        [_].[AccountNumber] as [AccountNumber],
        [_].[CustomerID] as [CustomerID],
        [_].[SalesPersonID] as [SalesPersonID],
        [_].[TerritoryID] as [TerritoryID],
        [_].[BillToAddressID] as [BillToAddressID],
        [_].[ShipToAddressID] as [ShipToAddressID],
        [_].[ShipMethodID] as [ShipMethodID],
        [_].[CreditCardID] as [CreditCardID],
        [_].[CreditCardApprovalCode] as [CreditCardApprovalCode],
        [_].[CurrencyRateID] as [CurrencyRateID],
        [_].[SubTotal] as [SubTotal],
        [_].[TaxAmt] as [TaxAmt],
        [_].[Freight] as [Freight],
        [_].[TotalDue] as [TotalDue],
        [_].[Comment] as [Comment],
        [_].[rowguid] as [rowguid],
        [_].[ModifiedDate] as [ModifiedDate],
        case
            when [_].[SubTotal] >= 100
            then '0'
            else '1'
        end as [Custom]
    from
    (
        select [$Table].[SalesOrderID] as [SalesOrderID],
            [$Table].[RevisionNumber] as [RevisionNumber],
            [$Table].[OrderDate] as [OrderDate],
            [$Table].[DueDate] as [DueDate],
            [$Table].[ShipDate] as [ShipDate],
            [$Table].[Status] as [Status],
            [$Table].[OnlineOrderFlag] as [OnlineOrderFlag],
            [$Table].[SalesOrderNumber] as [SalesOrderNumber],
            [$Table].[PurchaseOrderNumber] as [PurchaseOrderNumber],
            [$Table].[AccountNumber] as [AccountNumber],
            [$Table].[CustomerID] as [CustomerID],
            [$Table].[SalesPersonID] as [SalesPersonID],
            [$Table].[TerritoryID] as [TerritoryID],
            [$Table].[BillToAddressID] as [BillToAddressID],
            [$Table].[ShipToAddressID] as [ShipToAddressID],
            [$Table].[ShipMethodID] as [ShipMethodID],
            [$Table].[CreditCardID] as [CreditCardID],
            [$Table].[CreditCardApprovalCode] as [CreditCardApprovalCode],
            [$Table].[CurrencyRateID] as [CurrencyRateID],
            [$Table].[SubTotal] as [SubTotal],
            [$Table].[TaxAmt] as [TaxAmt],
            [$Table].[Freight] as [Freight],
            [$Table].[TotalDue] as [TotalDue],
            [$Table].[Comment] as [Comment],
            convert(nvarchar(max), [$Table].[rowguid]) as [rowguid],
            [$Table].[ModifiedDate] as [ModifiedDate]
        from [Sales].[SalesOrderHeader] as [$Table]
    ) as [_]

You can see, as an example, how the conditional column in the M script was translated to a CASE statement in T-SQL.

Query folding is good, obviously. Why? Because performance is much better when transformations run on millions of records in the data source, rather than bringing those millions of records into the cache and applying the transformations there.

The first diagram shows an M script that is fully folded (translated to T-SQL). This is the best situation: the server-side operation applies all transformations to the data set and returns only the desired result set.

The second diagram shows query folding that is only partially supported (up to a specific step). In this case the T-SQL query brings back the data as it stands before that step, the data is loaded into the local cache, and the rest of the transformations happen on the M engine side. You should avoid this situation as much as possible.

The question that might come to your mind right now is: can I see the native query that the M script is translated to? The answer is yes. If query folding is supported on a step, you can right-click that step and click View Native Query.

Query folding is enabled by default. However, in some cases it is not supported. For example, if you apply some transformations to a SQL Server table in Power Query and then join it with a web query, query folding stops at the point where you bring in the external data source. That means the transformations before the join run on the SQL Server side; then, before joining to the web query, the data is fetched into the cache and the rest of the steps are carried out by the M engine. You will often need to bring data from different data sources into Power BI, and this is an ability that Power Query gives you. So sometimes you have to step beyond query folding, and there might be no better way of doing that.

There are also some transformations in Power Query that query folding doesn’t support. An example? Merge Columns! Fortunately there are workarounds for this situation. Let’s dig into this in more detail.

Merge Columns concatenates columns in the order in which you selected them. You can also specify the delimiter character(s). So if you want to create a full name from first name and last name, you can select them in the right order and choose Merge Columns from either the Transform tab or the Add Column tab. Let’s see this through an example.

You need to have the AdventureWorksDW database installed on SQL Server. Alternatively, you can use any table in SQL Server that has two string columns which can be concatenated.

Create a new Power BI file in Power BI Desktop, and Get Data from SQL Server with the Import data mode. Get data from the DimCustomer table only, and click Edit. In Query Editor, select First Name, Middle Name, and Last Name in the correct order, and then from Add Column select Merge Columns.

In the Merge Columns dialog, set the Separator to Space and name the new column Full Name.

You will see that the new column is generated and added after all the existing columns. You may also notice that Merge Columns uses the Text.Combine Power Query function to concatenate the columns.
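For reference, the script behind this step looks roughly like the sketch below. The server name ".", the database name AdventureWorksDW and the step names are assumptions based on the walkthrough; the script generated on your machine may differ slightly.

```
let
    // Assumed connection details: local default instance, AdventureWorksDW sample database
    Source = Sql.Database(".", "AdventureWorksDW"),
    dbo_DimCustomer = Source{[Schema = "dbo", Item = "DimCustomer"]}[Data],
    // Merge Columns from the Add Column tab: Text.Combine joins the three values with a space
    #"Inserted Merged Column" = Table.AddColumn(
        dbo_DimCustomer,
        "Full Name",
        each Text.Combine({[FirstName], [MiddleName], [LastName]}, " "),
        type text
    )
in
    #"Inserted Merged Column"
```

The Text.Combine call inside Table.AddColumn is the part that matters for the rest of this post.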

Now, to see the problem with query folding, right-click the Inserted Merged Column step in the Applied Steps section. You will see that View Native Query is disabled.

The rule of thumb is: when View Native Query is not enabled, that step won’t be folded! That means the data will be loaded into the cache up to the step before this one, and the rest of the operations will happen locally. To understand how it works, right-click the previous step, which was Navigation. You will see that View Native Query is enabled there. Click on it, and you can see the T-SQL query for that step, which is as below:

    select [$Table].[CustomerKey] as [CustomerKey],
        [$Table].[GeographyKey] as [GeographyKey],
        [$Table].[CustomerAlternateKey] as [CustomerAlternateKey],
        [$Table].[Title] as [Title],
        [$Table].[FirstName] as [FirstName],
        [$Table].[MiddleName] as [MiddleName],
        [$Table].[LastName] as [LastName],
        [$Table].[NameStyle] as [NameStyle],
        [$Table].[BirthDate] as [BirthDate],
        [$Table].[MaritalStatus] as [MaritalStatus],
        [$Table].[Suffix] as [Suffix],
        [$Table].[Gender] as [Gender],
        [$Table].[EmailAddress] as [EmailAddress],
        [$Table].[YearlyIncome] as [YearlyIncome],
        [$Table].[TotalChildren] as [TotalChildren],
        [$Table].[NumberChildrenAtHome] as [NumberChildrenAtHome],
        [$Table].[EnglishEducation] as [EnglishEducation],
        [$Table].[SpanishEducation] as [SpanishEducation],
        [$Table].[FrenchEducation] as [FrenchEducation],
        [$Table].[EnglishOccupation] as [EnglishOccupation],
        [$Table].[SpanishOccupation] as [SpanishOccupation],
        [$Table].[FrenchOccupation] as [FrenchOccupation],
        [$Table].[HouseOwnerFlag] as [HouseOwnerFlag],
        [$Table].[NumberCarsOwned] as [NumberCarsOwned],
        [$Table].[AddressLine1] as [AddressLine1],
        [$Table].[AddressLine2] as [AddressLine2],
        [$Table].[Phone] as [Phone],
        [$Table].[DateFirstPurchase] as [DateFirstPurchase],
        [$Table].[CommuteDistance] as [CommuteDistance],
        [$Table].[FullName] as [FullName]
    from [dbo].[DimCustomer] as [$Table]

You can see that this is a simple query against the DimCustomer table. What happens in this scenario is that Power Query cannot translate Text.Combine to T-SQL. So the data up to the previous step is loaded into the cache: the query for the previous step (the query above) runs on the database server, the result comes into the cache, and then Text.Combine runs in local memory. Here is a diagram of how it works;

In this example the data set is small, but if the data set is big, not folding causes performance issues. It takes much longer to load the whole data set into the cache and then apply transformations than to do the transformations in the data source and load only the result into Power BI.
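When a step that doesn’t fold cannot be avoided, one way to limit the damage is to put the steps that do fold ahead of it, so that less data has to travel into the cache. The sketch below is only an illustration under assumptions: the connection details, the column list and the income threshold are hypothetical and not part of the original walkthrough.

```
let
    // Assumed connection details: local default instance, AdventureWorksDW sample database
    Source = Sql.Database(".", "AdventureWorksDW"),
    DimCustomer = Source{[Schema = "dbo", Item = "DimCustomer"]}[Data],
    // Column selection and a simple comparison filter are the kind of steps
    // that can be translated into the SELECT list and WHERE clause
    KeptColumns = Table.SelectColumns(
        DimCustomer,
        {"CustomerKey", "FirstName", "MiddleName", "LastName", "YearlyIncome"}
    ),
    // Hypothetical threshold, purely for illustration
    FilteredRows = Table.SelectRows(KeptColumns, each [YearlyIncome] >= 100000),
    // Per the finding in this post, folding stops here; only the reduced
    // result of the steps above needs to be loaded into the cache
    MergedName = Table.AddColumn(
        FilteredRows,
        "Full Name",
        each Text.Combine({[FirstName], [MiddleName], [LastName]}, " "),
        type text
    )
in
    MergedName
```

Right-clicking FilteredRows and checking View Native Query is the way to confirm that the first steps really fold in your environment.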

Now remove the Inserted Merged Column step, go to the Add Column tab, and select Custom Column.

In the Add Custom Column dialog, write the expression below to generate Full Name:

= [FirstName] & " " & (if [MiddleName]=null then "" else [MiddleName]) & " " &[LastName]

This expression uses the concatenation operator, which is &, and also checks whether Middle Name is null. The result on the Power Query side is essentially the same, and it generates the Full Name column like the previous example;

However, it is different for query folding. Right-click the Added Custom step, and this time you will see that View Native Query is available.

The query is the same as before, with a new concatenated column added.
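One small difference is worth knowing. Text.Combine ignores null values, while the expression above always emits both spaces, so a customer without a middle name gets two spaces between first and last name. A possible variant that attaches the space to the middle name is sketched here; it is an assumption that it folds like the original expression (it uses the same & and if constructs), so check View Native Query after applying it.

```
= [FirstName]
    & (if [MiddleName] = null then "" else " " & [MiddleName])
    & " " & [LastName]
```

Note also that & returns null as soon as one operand is null, so if FirstName or LastName can be null in your table they need the same if ... = null treatment.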

    select [_].[CustomerKey] as [CustomerKey],
        [_].[GeographyKey] as [GeographyKey],
        [_].[CustomerAlternateKey] as [CustomerAlternateKey],
        [_].[Title] as [Title],
        [_].[FirstName] as [FirstName],
        [_].[MiddleName] as [MiddleName],
        [_].[LastName] as [LastName],
        [_].[NameStyle] as [NameStyle],
        [_].[BirthDate] as [BirthDate],
        [_].[MaritalStatus] as [MaritalStatus],
        [_].[Suffix] as [Suffix],
        [_].[Gender] as [Gender],
        [_].[EmailAddress] as [EmailAddress],
        [_].[YearlyIncome] as [YearlyIncome],
        [_].[TotalChildren] as [TotalChildren],
        [_].[NumberChildrenAtHome] as [NumberChildrenAtHome],
        [_].[EnglishEducation] as [EnglishEducation],
        [_].[SpanishEducation] as [SpanishEducation],
        [_].[FrenchEducation] as [FrenchEducation],
        [_].[EnglishOccupation] as [EnglishOccupation],
        [_].[SpanishOccupation] as [SpanishOccupation],
        [_].[FrenchOccupation] as [FrenchOccupation],
        [_].[HouseOwnerFlag] as [HouseOwnerFlag],
        [_].[NumberCarsOwned] as [NumberCarsOwned],
        [_].[AddressLine1] as [AddressLine1],
        [_].[AddressLine2] as [AddressLine2],
        [_].[Phone] as [Phone],
        [_].[DateFirstPurchase] as [DateFirstPurchase],
        [_].[CommuteDistance] as [CommuteDistance],
        [_].[FullName] as [FullName],
        ((([_].[FirstName] + ' ') + (case
            when [_].[MiddleName] is null
            then ''
            else [_].[MiddleName]
        end)) + ' ') + [_].[LastName] as [Full Name]
    from [dbo].[DimCustomer] as [_]

This time there won’t be an intermediate cache. The transformation happens in the data source with the T-SQL query, and only the result is loaded into Power BI.

How do you know which transformations fold? Great question. It is important to understand which steps or transformations fold and which don’t. To find out, simply right-click each step and see whether View Native Query is enabled. If it is enabled, query folding is supported for that step; otherwise it is not. Also note that query folding is not supported for data sources such as a web query, CSV files, and the like. At the moment query folding is only supported for data stores that have a native query language. For Web, Folder, CSV… there is no native query language, so you don’t need to worry about query folding.

*Important Note: At the time of writing this post Merge Columns doesn’t support query folding. I have reported this to the Power Query team, and they are working on a fix. Merge Columns is very likely to support query folding soon as a result of that bug fix. However, there will always be some other transformations that don’t support query folding. This post is written to give you an understanding of what kind of issue might happen and how to resolve it.

My advice to you, as a performance best practice, is that when working with a relational data source (such as SQL Server), always check query folding. Sometimes it is not supported for a step, so use another approach for that transformation. Don’t fall into the black hole of not folding, otherwise your query might take ages to run.

Published Date : November 14, 2016

In performance tuning of a Power BI model many things have to be considered, most of them around consumption of CPU and RAM. One of the most basic but important considerations is minimizing memory usage. By default all queries from Query Editor are loaded into the memory of the Power BI model. In this post I’ll show you an example of disabling the load for some queries, especially queries that are used as intermediate transformations to produce the final query for the model. This is a very basic tip, but a very important one when your model grows big. If you want to learn more about Power BI, read the Power BI online book from Rookie to Rock Star.

To run the examples in this post, you need to download the ZIP file of sample files.

By default all queries in Power Query are loaded into the Power BI model. This might be the desired behavior if you are connecting to a properly modeled star schema data warehouse, because normally you don’t need to make many changes to the structure of the queries. However, it brings some issues if you are connected to a transactional data store, some files, a web source, or many other data sources that are not modeled as a star schema. Normally when you get data from a data source, you apply transformations to rows and columns and merge or append queries, and you might end up with 5 tables as final queries out of 10 queries. By default, when you close and apply the Query Editor window, all queries are loaded into the model whether or not you want to use them in your final model.

Every query that loads into the model consumes memory, and memory is our asset in the model; less memory consumption leads to better performance in most cases. I have seen lots of models where people hide the unwanted query from the model. This approach doesn’t help performance, because a hidden query still consumes memory. The best approach is to disable loading before closing Query Editor. Disabling load doesn’t mean the query won’t be refreshed; it only means the query won’t be loaded into memory. When you click Refresh in Power BI, or when a scheduled refresh happens, even queries marked as Disable Load are refreshed, but their data is used as an intermediate source for other queries instead of being loaded directly into the model. This is a very basic performance tuning tip, but very important when your Power BI model grows bigger and bigger. Let’s look at this through an example.

In this example scenario I want to get a list of all files from a directory. There are two types of CSV files in the directory: some Students files and some Course files. Both are CSV files, but with different structures. There might also be other files in the directory, such as Word or HTML files, which I don’t want to process.

The aim is to load the data rows of all students and courses into the model as two separate tables. Instead of fetching files from the folder twice, we use one query to fetch the files and then use it as a reference for the other queries. The referenced query itself doesn’t need to be loaded into the model. Let’s build the solution and see how it works in action.

Open a new Power BI file, and start by getting data from Folder.

Enter the path of the folder that contains all the files (the files in this folder can be downloaded from the ZIP file in the prerequisite section of this post).

Click Edit in the preview shown in the Navigator window to open the folder content in Query Editor. As you can see, there are a number of different files in the folder.

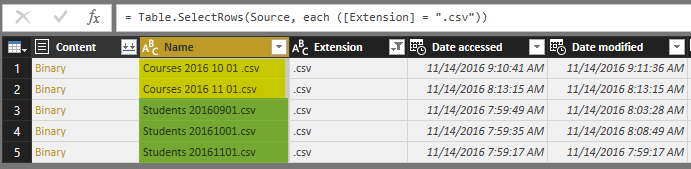

Filter the Extension column to only .csv. Note that both Course and Student files are .csv files, which is what we need.

Now the subset includes Course files and Students files, which differ in structure. We have to apply transformations to each set individually.

Because I don’t want to repeat the process of getting all files from a folder, and I want to split the data into two data sets, one for Students and another for Courses, I’ll generate a REFERENCE from the main query. Right-click the query (called CSVs in my example) and select Reference.

This generates a new query named CSVs (2). This new query is NOT A COPY of the first query; it is only a reference to it, which means that if the first query changes, the source for this query changes too. Rename the new query to Student. Filter the Name column to everything that begins with “Student”.
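In M terms, the CSVs query at this point could look like the sketch below. The folder path is hypothetical, and the Text.Lower call is my own addition so that .CSV and .csv are treated alike; the filter generated by the user interface compares against the exact value you picked.

```
let
    // Hypothetical folder path; replace it with the folder holding the sample files
    Source = Folder.Files("C:\PowerBI\SampleFiles"),
    // Keep only CSV files, comparing the extension case-insensitively
    FilteredCsv = Table.SelectRows(Source, each Text.Lower([Extension]) = ".csv")
in
    FilteredCsv
```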

The reason I use Text Filters specifically, rather than typing in the search box, is that the search box filters the data statically, based on the values that exist in the current data set. If new values (file names) arrive in the folder in the future, that filter won’t take them into account. The Text Filter, however, applies to any data set because it filters dynamically. (I’ll write a post later to explain that in detail.) In the Filter Rows window, type Student as the value for begins with.

Now you will see only the Students files.

Click the Combine Binaries icon in the header of the Content column to combine all the CSV files into one.

After combining binaries, Power Query also imports the CSV into a table and does automatic data type conversion. You can see all three steps in Query Editor.
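The difference between the two filters is easier to see in M. The sketch below uses a small hand-made table with hypothetical file names in place of the real folder listing.

```
let
    // Hypothetical file listing standing in for the CSVs query
    Files = #table(
        type table [Name = text],
        {{"Students 1.csv"}, {"Students 2.csv"}, {"Course 1.csv"}}
    ),
    // Search box style: a fixed list of the values that existed at design time,
    // so a "Students 3.csv" arriving later would not match
    StaticFilter = Table.SelectRows(
        Files,
        each [Name] = "Students 1.csv" or [Name] = "Students 2.csv"
    ),
    // Text Filters > Begins With: a rule that also matches files added later
    DynamicFilter = Table.SelectRows(Files, each Text.StartsWith([Name], "Student"))
in
    DynamicFilter
```

Both filters return the same two rows today; only the second keeps working when new Students files arrive in the folder.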

The data table needs a couple of changes before it is ready. First, set the column names. The first row holds the column names, so use the menu option “Use First Row As Headers” to promote the first row to column headers.

This brings in the column headers;

You also need to remove all the extra header rows from the combined data set. There are some rows with “ID, First Name, Last Name, and City” as their values, which should be removed. You can remove them with a Text Filter of Does Not Equal on the ID column: values should not be equal to “ID”, because every row with “ID” in the first column is a header row and should be removed.

Now you have your cleaned data set for Students. Note that I have also applied a data type conversion on the ID column to whole number;

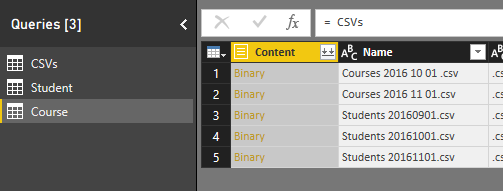
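Put together, the Student query could be written along these lines. This is a sketch under assumptions: it relies on a query named CSVs existing in the same file, the delimiter and encoding options are guesses about the sample files, and the step names are my own rather than the ones the user interface generates.

```
let
    // Reference to the shared folder query (Right-click > Reference)
    Source = CSVs,
    // Keep only the Students files
    FilteredRows = Table.SelectRows(Source, each Text.StartsWith([Name], "Student")),
    // Combine Binaries: concatenate the content of every remaining file
    CombinedBinaries = Binary.Combine(FilteredRows[Content]),
    // Parse the combined content as CSV (delimiter and encoding are assumptions)
    ImportedCsv = Csv.Document(CombinedBinaries, [Delimiter = ",", Encoding = 65001]),
    // Use First Row As Headers
    PromotedHeaders = Table.PromoteHeaders(ImportedCsv),
    // Every additional file contributed its own header row; drop those rows
    RemovedHeaderRows = Table.SelectRows(PromotedHeaders, each [ID] <> "ID"),
    // Convert ID to whole number
    ChangedType = Table.TransformColumnTypes(RemovedHeaderRows, {{"ID", Int64.Type}})
in
    ChangedType
```

Because the first step is just a reference to CSVs, the folder is only described once, which is the reason the intermediate query exists.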

The other entity is Course, which we also need to create as a reference from the CSVs query. Create another reference from CSVs, and name it Course.

Create a Text Filter for Begins With on the Name column with the value “Course”.

And then you’ll have only the Course files. Combine Binaries on that.

Same as Student query, apply transformations in this order;

Here is the final data set for course;

Now, to see the problem, leave the default load behavior unchanged, then close Query Editor and apply the changes.

You will see that after the refresh, three queries are loaded into the model: Student, Course, and CSVs.

Student and Course are the expected tables in the model. However, CSVs is not useful: we have already fetched everything we wanted from that query, and it is only used as an intermediate query for loading Student and Course. Having CSVs as a separate table in our model has two problems: confusion for users, and extra memory consumption.

The main issue is the memory consumption of this extra table. Higher memory consumption normally reduces performance and puts a heavier load on the model. In this example the CSVs table has only a few rows, but this is just sample data; in real-world examples you might have intermediate tables with millions of rows. You need to remove every unused table from the Power BI model to reduce memory consumption.

The first issue, “Confusion for users”, can be solved by hiding the table from report view. You can do that in the Relationships tab of the model: right-click the CSVs table and click Hide from report view. This method HIDEs the table from report view. However, the table still exists, and it consumes memory! It is just hidden. Hiding a table from report view is good for tables that you need for modeling. For example, a relationship table (one that creates a many-to-many relationship) should be kept in the model but hidden, because it creates the relationship but is not useful to the report viewer. Any other table that is not used for relationships and is not used for reporting should be removed before loading into the model.

Go back to Query Editor and right-click the CSVs query. You will see that by default every query has Enable Load checked.

Click Enable Load to disable it. You will see a message saying that any visuals attached to this query will not work after this change, which is totally fine, because this query is not used (and won’t be) in any visualization. The message is: “Disabling load will remove the table from the report, and any visuals that use its columns will be broken.”

Click Continue, and you will see that load is now disabled for the query. The query name is also shown in italics to illustrate that it won’t be loaded into the model. Note that the query will still be refreshed, and if new files arrive in the directory it will pass them on to the Course and Student queries. It just won’t be loaded into the model itself.

You can also see in Query Dependencies, on the View tab of Query Editor, that this query is the source of the other two queries. That view also shows which queries have load disabled.

Now close and apply Query Editor. This time you will see that the CSVs query isn’t loaded into the model. The relationship tab and the report tab contain only two tables: Course and Student.

In summary, you’ve seen how an easy tip can save memory and enhance the performance of a Power BI model. Always remember that Query Editor is your ETL (Extract, Transform, Load) engine. It applies all transformations before loading the data into the model, but once the transformations finish, all queries are loaded into the model and take up memory. By default all queries are enabled to load into the model. Simply change that for queries that are not required in the model.

Published Date : November 3, 2016

The latest update of Power BI Desktop (October 2016) has many features. Two of them are grouping and binning. These features are used to create groups of items and visualize them better in the report. Previously you could do that with Power Query or DAX calculated columns; now it is all possible through the graphical user interface of the report editor. In this post I’ll show you how to create banding (binning) and grouping with these features. If you’d like to learn more about Power BI, read the Power BI online book from Rookie to Rock Star.

To run the examples in this post you need to have the AdventureWorksDW SQL Server sample database installed, or you can get the Excel version of it.

Get Data; Start with Get Data from AdventureWorksDW, and choose tables: FactInternetSales, DimDate, and DimCustomer.

Set the relationship between DimDate and FactInternetSales to be based only on OrderDateKey as the active relationship, and remove the inactive relationships between these two tables.

Binning is grouping a numeric field based on a division. This type of grouping is also called banding. For example, you might have customers with yearly incomes ranging from $10,000 to $100,000, and you want to create bands of $25,000. This will generate about 4 groups of yearly income for you. This is exactly what binning in Power BI does. Let’s look at an example.

Create a Table in the Power BI report and visualize YearlyIncome (from DimCustomer) and SalesAmount (from FactInternetSales) in it. Change the aggregation of YearlyIncome from Sum to Do Not Summarize, as below.

This will visualize each YearlyIncome value with the sales amount associated with it.

Now let’s create groups of people based on their yearly income, categorized in buckets of $30,000. Right-click the YearlyIncome column in the Fields section and select Group.

In the Groups window make sure Bin is selected as the group type (this is the default for numeric and date/time columns), and set the size to 30000. This is the size by which the values of YearlyIncome will be split.

Press OK in the Groups window, and bring the newly generated field (named YearlyIncome (bins)) into the table.

The color coding above shows that YearlyIncome values are grouped by the result of dividing the yearly income by the group size, which is $30,000. If you want fewer groups you can increase the group size, and if you want more detailed groups you can decrease it.
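The arithmetic behind this kind of binning can be sketched in Power Query M. The sample values are hypothetical, and labelling each bin by its lower edge is my assumption about the hidden calculation Power BI creates, not a copy of it.

```
let
    // Hypothetical sample values standing in for DimCustomer[YearlyIncome]
    Source = #table(
        type table [YearlyIncome = number],
        {{10000}, {40000}, {60000}, {90000}, {170000}}
    ),
    BinSize = 30000,
    // Divide by the bin size, round the quotient down, then scale back up
    // to get the lower edge of the bin each value falls into
    AddedBin = Table.AddColumn(
        Source,
        "YearlyIncome (bins)",
        each Number.RoundDown([YearlyIncome] / BinSize) * BinSize,
        type number
    )
    // 10000 -> 0, 40000 -> 30000, 60000 -> 60000, 90000 -> 90000, 170000 -> 150000
in
    AddedBin
```

Changing BinSize shows the trade-off described above: a larger size yields fewer, coarser groups, and a smaller size yields more detailed ones.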

Now you can remove YearlyIncome from the table and change the table to a Column Chart to see which group generates the most revenue.

Binning can be applied to date/time values as well. For example, if you want to see revenue in half-year bands, you can create a group with a bin size of 6 Months. Let’s see the example: right-click the FullDateAlternateKey column (from DimDate), and create the group;

Now create a Bar chart with this new column (named Order Date – 6 Months), and Sales Amount.

You can create other bands or bins by Years, Months, Days, Hours, and Minutes as well.

You can create a group of multiple items in a visualization. This group is created by Ctrl+clicking to select items and then grouping them. As an example, create a Column Chart with English Education (from DimCustomer) and SalesAmount (from FactInternetSales).

Now Ctrl+click to select High School and Partial High School, then right-click and select Group.

You will now see them grouped together, with a legend at the top of the chart showing this group and an “Other” group.

Now create another group for Bachelors and Graduate Degree in the same way.

You now have three groups: Higher Education (Bachelors and Graduate Degree), College (Others), and High School (High School and Partial High School).

Once you have the grouping you can build many nice visualizations with it. One example is a Stacked Column Chart with these groups as the Axis and English Education as the Legend. To do so, change these two fields in the chart and change the chart type to Stacked Column Chart.

Now the chart will look like this;

This shows how much revenue each category generates in total, and also which sub category (English Education) inside each category generates the most or least revenue.

What is happening behind the scenes is that a DAX calculated column (the calculation is not visible in Power BI) is created for each binning. This was exactly the way to implement such a thing previously. Another method is to create the banding through Power Query.

Grouping and binning are part of your model. That means if you create another report or chart you can use the group there. Even if you create calculated tables or other DAX calculations, you can use these new group columns in them.

There are heaps of possibilities that these new grouping features bring to your visualizations. Having these groups as part of your model is also great for a consistent experience across your reporting solution. However, there are always complex grouping conditions that still need a bit of DAX code or Power Query M script.
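As an illustration of the Power Query route, the education groups from this post could be produced with a conditional column like the one sketched here. The sample rows are hand-made, and the column name Education Group is hypothetical.

```
let
    // Hypothetical sample rows standing in for DimCustomer[EnglishEducation]
    Source = #table(
        type table [EnglishEducation = text],
        {{"High School"}, {"Partial High School"}, {"Bachelors"},
         {"Graduate Degree"}, {"Partial College"}}
    ),
    // Map each education level to one of the three groups used in this post;
    // anything not listed explicitly falls into the "College (Others)" group
    AddedGroup = Table.AddColumn(
        Source,
        "Education Group",
        each if List.Contains({"High School", "Partial High School"}, [EnglishEducation])
            then "High School"
        else if List.Contains({"Bachelors", "Graduate Degree"}, [EnglishEducation])
            then "Higher Education"
        else "College (Others)",
        type text
    )
in
    AddedGroup
```

The trade-off is that a group built this way lives in the query rather than in the report editor, so changing it means editing the query and refreshing.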

Published Date : October 10, 2016

What is the difference between a dashboard and a report? This is one of the main questions people ask when they are at the preliminary stages of building a Power BI solution. There is a misconception that a report is a detailed tabular report and a dashboard is a set of interactive visualizations with charts. However, this is not what Power BI reports and dashboards stand for. There are differences between these two main components of a Power BI solution, and understanding them will help you leverage their power in the best way. In this post I’ll explain the differences between the two, and where, when, and why each is best to use. If you are interested in learning more about Power BI, read the Power BI online book from Rookie to Rock Star.

Dashboard: General

Stephen Few’s definition of a dashboard: A dashboard is a visual display of the most important information needed to achieve one or more objectives; consolidated and arranged on a single screen so the information can be monitored at a glance.

Report: General

A report, on the other hand, is any informational work. The information can be in any format: table, chart, text, number, or anything else.

Power BI Report

A Power BI report is a combination of multiple visual elements (charts, texts, values…) on a page that can be inter-related with each other. Data visualized in the report can be sliced and diced with slicers. A Power BI report is fully interactive for the user, and it can be filtered based on some criteria.

Power BI Dashboard

A Power BI dashboard is a high-level view of some of the key KPIs of one or more reports. A dashboard is a day-to-day view of KPIs and provides the navigation point to the detailed reports. A Power BI dashboard isn’t built for slicing and dicing.

You can see that the definitions of a Power BI dashboard and report fit the general definitions of these two components given earlier. Now let’s look closer at the two.

Reports in Power BI can have multiple pages, and each page might contain multiple visualization elements. Slicing and dicing, hovering, and highlighting are all possible in a report. We can drill down through a hierarchical data structure, or select a particular column in a column chart and see the data related to it in other visualization elements. All of this means that a report in Power BI is for slicing and dicing. A report is built for end users to play with different handles (slicers, visuals, or filters) and get what they want. For example, they might want to understand why the Sales Amount in August is lower than in other months, or which product is selling best and how its sales are distributed across branches. Here is an example of a Power BI report;

If you like to know how to create this report, please read this post.

Unique Features of a Power BI Report

A dashboard in Power BI is a navigation point to reports and a very high-level, day-to-day view of the main KPIs of the business. For a particular business need, some KPIs might be required from multiple reports: for example, year-to-date revenue from the sales report, stock in hand from the inventory report, and something from the production report. With a dashboard, visualization elements from multiple reports and pages can be pinned to one main place. This place then works as a navigation point: by clicking on each of these visuals, the user is redirected to the report and page that contains the element. Here is an example of a dashboard;

Unique Features of a Power BI Dashboard

You can simply slice and dice the data in the report with slicers. This is unique to reports; in a dashboard there is no way of using slicers (except by using the “Pin Live Page” option, which pins the whole page to the dashboard).

You can have multiple pages in a report. Navigation between pages is possible through the navigation pane at the bottom of the report. In a dashboard you can have as many tiles as you want, but there is no concept of pages. Everything is on one page, and if the content doesn’t fit into one page, scroll bars will appear (which is not recommended).

In addition to slicers, you can select a particular element in a chart and it will highlight other elements. Users can simply interact with report elements and get more insight from them. In a dashboard, clicking on a tile redirects you to the report/page that built the element.

In Power BI you can have hierarchies, such as Product Group or Calendar, and you can drill down or up through the levels of a hierarchy in some of the visualization elements. However, in a dashboard drill down/up is not possible; a click on the visual redirects you to the report/page that built the element.

Publish to Web is a feature specific to reports. With Publish to Web, a report can be published as a public web page, or embedded in a publicly available web page. Dashboards, at the time of writing this post, cannot be published publicly on the web. To read more about Publish to Web, read this blog post.

Users can explore the data of a particular visual element in the report with the Explore Data or See Records options. These options help users drill down to the record level and see the roots of a particular value in a chart. In a dashboard, only the data of the dashboard (not the data records) can be exported.

Automatic refresh is one of the main benefits of dashboards over reports. A dashboard can be designed so that it stays open for many hours and refreshes automatically (depending on the elements explained later). On the other hand, if you open a report and the data set of that report gets refreshed, you need to refresh the report manually; otherwise it won’t be refreshed.

Well, when I get to this point, many students in my courses ask: why does it behave like that? Why doesn’t it get refreshed automatically like the dashboard? I believe the main reason is scenarios like this: consider an analyst who has opened an inventory report and is checking its numbers against a static report that he/she has in Excel or even on paper. The Excel or paper report is static, and he/she is doing a sanity check to see if the numbers match. If the report were dynamic, nothing could be checked. There might be other reasons as well, but I believe this is the main one.

Power BI Dashboards refresh whenever the data set refreshes. This applies when we import the data into Power BI. If the data set is scheduled to refresh, or if we refresh it manually, the dashboard will be refreshed automatically.

Power BI Dashboards for DirectQuery data sets refresh every 15 minutes.

Dashboards, Reports, and Data Sets in Power BI can be shared through different methods, such as Content Packs and Power BI Work Groups. However, Dashboards can be shared through a basic sharing method as well, in addition to the other methods. With this method, the people the dashboard is shared with will receive an email with the dashboard link.

It is easy to change the size of dashboard tiles, change their order, and add new tiles (Image, Text, Web, and Video) to a dashboard. That said, it is also possible to make changes to a report, but that requires clicking on Edit report, going to edit mode, applying the changes, and saving. A dashboard is like the user’s view of the world, so it can simply be adjusted to what he/she wants to see.

A dashboard can be set as a landing page by setting it as the Featured Dashboard. There can be only one Featured Dashboard. If you set another dashboard to be featured, the previous featured dashboard will be replaced with the new one.

If you haven’t heard about Q&A, I encourage you to read this blog post. Q&A is an engine on top of the Power BI model which responds to your natural English language questions. The Q&A box is only available on top of dashboards (and not all types of dashboards: at the time of writing this post, Q&A is not possible on dashboards that have Row Level Security enabled or whose data sets use DirectQuery).

I’ve mentioned that dashboards refresh automatically. However, this doesn’t mean real-time monitoring. Real-time monitoring means using a PUSH approach: anytime new data is available in the source, it should be shown. It is possible to have real-time monitoring of events with Power BI Dashboards. The data set at the moment can be sourced from PubNub, Azure Stream Analytics, or the REST API. To learn more about real-time monitoring with the REST API read this post, and for Azure Stream Analytics read this post.

You can define alerts for each data-driven tile in the dashboard. Alerts can be as simple as: if a number goes above or below a threshold, send an email to me.

In dashboards you can get some more insight by selecting the Related Insights feature of a tile. This option searches for patterns in the data set and visualizes them automatically.

As you see, dashboards and reports are not exactly the same. There are many major differences between these two. The main difference is that a dashboard is a day-to-day single-page view of the main KPIs, which can be refreshed automatically and can visualize real-time events. A dashboard can be used to navigate to reports. Reports are interactive data visualization elements that users can slice and dice, highlight, and interact with to investigate numbers and insights in more detail. For a great visualization solution with Power BI you would need both; these are complements of each other, not replacements.

Published Date : October 7, 2016

Previously I’ve explained a method of looping through a number of files in a folder which used Custom Functions. This is the preferred method, but it doesn’t always work with Schedule Refresh and a gateway setup, because scheduled refresh doesn’t always support custom functions in the code. So in this post I’ll show you a second method that loops through the files dynamically without using a custom function, and you will see at the end of this post that scheduled refresh works with this method. To clarify the scenario, I will first do the custom function approach, show you the problem with scheduled refresh, and then explain the second method. If you would like to learn more about Power BI, read Power BI online book; from Rookie to Rock Star.

To run the example in this post you need to download the set of CSV files from here: fitbitsourcefilesradacad

In this example we have a number of CSV files which contain a list of my Fitbit activities, one file for each month. They are all under the same folder and all have the same structure. We want to apply a number of similar steps to each file, loop through them, and fetch all the data into a single data set. After building a report on top of that, we want to publish it to the Power BI service and get it refreshed through a gateway configuration. As the files have a similar structure, we are looking for a mechanism to apply the same steps on all files.

You will see two approaches to this. The first one is the preferred approach because it uses custom functions; however, because scheduled refresh doesn’t always support custom functions, there is a second approach which works with automatic refresh. If you are visiting this page at a time when the problem of scheduled refresh and custom functions has been resolved, use the first approach; otherwise use the second.

Open a new Power BI Desktop file, start with Get Data, and select CSV as the source.

Choose one of the files in the Fitbit source folder and continue;

Go to the Edit Queries window by clicking on the Edit button, and you will see the data previewed there.

As you can see in the preview, the first record isn’t useful. It has just a title, “Activities”, so let’s remove it by going to Remove Rows, then Remove Top Rows, and entering 1 in the dialog box;

Now the first row contains only column headers, so let’s promote it to column headers by clicking on Use First Row As Headers.

There are also some blank rows, so let’s remove them with Remove Rows -> Remove Blank Rows.
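The three clean-up steps above can also be sketched outside Power Query. The following is a minimal Python illustration of the same logic, not the M code that Power BI generates; the sample text and the `clean_export` name are my own assumptions that mimic the layout described in this post (a title row, a header row, data rows, and blank rows).

```python
import csv
import io

# Hypothetical sample that mimics the layout described above:
# a title row, a header row, data rows, and blank rows.
SAMPLE = """Activities
Date,Steps,Distance
1/07/2015,8000,5.6
,,
2/07/2015,10000,7.1

"""

def clean_export(text):
    """Return a list of dicts, one per data row."""
    rows = list(csv.reader(io.StringIO(text)))
    rows = rows[1:]                      # Remove Top Rows: drop the title row
    header, body = rows[0], rows[1:]     # Use First Row As Headers
    # Remove Blank Rows: keep rows that have at least one non-empty cell
    body = [r for r in body if any(cell.strip() for cell in r)]
    return [dict(zip(header, r)) for r in body]

records = clean_export(SAMPLE)
```

Each step maps to one line of the function, which is also why these steps are a good candidate for being wrapped into a reusable function.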

Now we’ve done all the clean-up work for this file. We have one row per day with details of the activities in that day. The next step is to loop through all files in the source folder and apply the same steps to them. Fortunately we can use Custom Functions for this. Previously I’ve explained how Custom Functions are helpful for code re-usability, better maintenance, and consistency. Instead of repeating the same steps for each file, it is much wiser to create a function from these steps and call the function for each file. So let’s do it;

To create the function through the GUI, the first step is to create a parameter. You can create a parameter from the menu option or by right-clicking on the blank area in the Queries section.

Create the parameter with the name FullPath, of type Text, and set its default value to the full path (including file name and extension) of one of the CSV files in your folder;

After creating the parameter you should be able to see it with a parameter icon in the Queries pane;

The next step is to get the current query (named fitbit_export_201507 in the screenshot) sourced from the FilePath parameter instead of a static path. Click on the query, and choose settings from the steps in the right-hand side pane;

Change the file path in the settings window to be sourced from a parameter; because you have only one parameter, the FilePath parameter will be selected automatically.

After clicking on OK, you will see the result of the first step, before your clean-up steps are applied, so simply click on the last step to see the final result. If the file path you provided as the default value of the parameter is wrong, you will see an error; otherwise you will see the data of that particular file. Now let’s create the function;

Right-click on the query whose source we just changed (fitbit_export_201507), and select Create Function.

Set the function name as ProcessFile, and leave the parameter as is.

Because the query uses a parameter as its source, it can simply be used to create a function. Now you will see a function listed in the Queries pane as well.

Note that the function uses M (Power Query Formula Language) with lambda syntax, but we have created it all just with graphical user interface options. If you are interested in learning more about the code side of it, read this post. This function now has all the steps generated in the first query. To see the steps you can simply click on Advanced Editor while you have the ProcessFile function selected in the Queries pane.

Don’t be afraid of this code! You don’t need to change anything here. It is just to let you know that the same steps have been copied into the function.

Now you can remove the first query, we don’t need that anymore, steps are already in the custom function.

Now that we have the function ready, let’s get list of files and apply it on all files. Get Data from a New Source, and More.

Choose Folder this time;

And select the folder which contains all CSV files;

After clicking OK here and also on the preview, you will see the list of all files in this folder with their details, such as file name, extension, date created, and folder path. You will also see a column named Content, which holds the binary content of these files (the actual data in each file). I will talk about this column more in the second approach.

For this approach we just need the full file path, which we can generate from Folder Path and Name, so select only these two columns and remove everything else.

Now let’s concatenate these two and create a full file path. Select Folder Path first, then hold the Ctrl key and select the Name column. Go to the Transform tab and choose Merge Columns.

Name the new concatenated column FullFilePath. You don’t need a separator, because Folder Path already ends with a backslash.

This will replace existing two columns with the new FullFilePath concatenated column;

Now we have everything ready for the custom function to be called for every record of this table. Go to Add Column tab, and click on Invoke Custom Function;

In the Invoke Custom Function dialog box, select ProcessFile as the function; the input parameter this time comes from the FullFilePath column that we created a few minutes ago. Name this column Content.

After clicking on OK, in an instant, you will see the new column appear, with a Table in each cell. This is the data table processed for each file. If you click on a blank area of one of the table cells, you will see its data preview in a details pane underneath the preview pane.

We don’t need the FullFilePath column anymore, so remove it. Now the table has just one column, which is a structured column. This means it has a structured value in each cell (in this case a table in each cell). We can now expand this structured column into all underlying columns by clicking on the Expand button. Also remember to uncheck the box for Use Original Column Name as Prefix.

This expand button (or you might call it Magic button now, as it reveals the magic!) combines all data tables into a single big data table, including data rows from all CSV files.
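To make the "invoke the function for every file, then expand" idea concrete, here is a rough Python sketch of the same flow. It is only an illustration under my own assumptions: `process_file` and `load_folder` are hypothetical names standing in for the ProcessFile function and the folder query, and the two tiny files written to a temporary folder are made-up sample data.

```python
import csv
import os
import tempfile

def process_file(full_path):
    """Stand-in for the ProcessFile function: clean one CSV export."""
    with open(full_path, newline="") as f:
        rows = list(csv.reader(f))
    header, body = rows[1], rows[2:]          # skip title row, promote headers
    return [dict(zip(header, r)) for r in body if any(c.strip() for c in r)]

def load_folder(folder):
    """Invoke process_file for every CSV in the folder and expand the results."""
    combined = []
    for name in sorted(os.listdir(folder)):
        if name.lower().endswith(".csv"):
            full_file_path = os.path.join(folder, name)    # Folder Path + Name
            combined.extend(process_file(full_file_path))  # expand table into rows
    return combined

# Demo with two tiny hypothetical files written to a temporary folder.
with tempfile.TemporaryDirectory() as folder:
    for month, steps in (("07", "8000"), ("08", "9000")):
        path = os.path.join(folder, f"fitbit_export_2015{month}.csv")
        with open(path, "w", newline="") as f:
            f.write(f"Activities\nDate,Steps\n1/{month}/2015,{steps}\n\n")
    tracking_data = load_folder(folder)
```

The `extend` call plays the role of the Expand button: one table per file becomes one flat list of rows.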

All we need to do now is some data type changing;

Change the data type of all columns except Date and Distance to Whole Number. Change the data type of the Distance column to Decimal. Finally, change the data type of the Date field to Date. To change the data type of the Date field you need to choose a locale, because the exported files have date values in New Zealand date format. If you change it with a normal data type conversion, Power Query tries to convert the values based on your local system date format, which might not be New Zealand’s format. So right-click on the Date column, and under Data Type choose Using Locale.

In the new dialog box, choose Date as the data type to convert to, and set the locale to English (New Zealand).
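Why the locale matters can be shown with a small Python sketch. I am assuming here that the export uses a day-first format such as 31/05/2016; the function names are hypothetical and this is not how Power Query performs the conversion internally.

```python
from datetime import datetime

def parse_nz_date(text):
    """Parse a day-first date such as 31/05/2016 (assumed export format)."""
    return datetime.strptime(text, "%d/%m/%Y").date()

def parse_us_date(text):
    """Parse the same text as month-first, as a US-locale machine would."""
    return datetime.strptime(text, "%m/%d/%Y").date()

ambiguous = "3/05/2016"
nz = parse_nz_date(ambiguous)    # read as 3 May 2016
us = parse_us_date(ambiguous)    # read as 5 March 2016: silently wrong

# A day above 12 cannot be read as a month, so the month-first parse fails.
try:
    parse_us_date("31/05/2016")
    us_failed = False
except ValueError:
    us_failed = True
```

So with the wrong locale you get either conversion errors or, worse, dates that convert without error but mean a different day.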

After clicking on OK you will see data types converted correctly without any error. As the final step now change the query name to Tracking Data;

Notice that the Date column shown in the above screenshot is converted to the Date data type (you can see the date icon in the column header), but in this window it is displayed in my computer’s locale format, which is English (United States). The way it is displayed doesn’t matter. All that matters is that it is of the Date data type.

Now let’s build a very simple Report with the data. Close and Apply Power Query window, and in the Report pane, add Steps as Total value in a Card Visualization. Also in the formatting section set the Format of data label to display as None (means without any millions, or K, or anything like that).

The other visualization to add is a Column Chart, with Steps as Value and Date as Axis. It will automatically bring in the Date Hierarchy, so remove Quarter and Day from the hierarchy.

After removing Quarter and Day, click on Expand all down one level in the hierarchy in the top left corner of the column chart (screenshot above numbered 2);

Also create a measure to show the last date. Go to Modeling tab, New Measure, and use LastDate function to get the latest date of the date field in the Tracking Data table;

Last Date Processed = FORMAT( LASTDATE('Tracking Data'[Date]) , "YYYY-MM-DD")

Show this new measure as a Card visual in the report.
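If the measure is not clear, this is roughly what it computes, sketched in Python. The dates below are hypothetical values standing in for the Date column of the Tracking Data table.

```python
from datetime import date

# Hypothetical values standing in for the Date column of Tracking Data.
tracking_dates = [date(2016, 4, 30), date(2016, 5, 31), date(2016, 5, 1)]

def last_date_processed(dates):
    """Latest date in the column, formatted as YYYY-MM-DD."""
    return max(dates).strftime("%Y-%m-%d")

label = last_date_processed(tracking_dates)
```

The card simply shows the latest date found in the data as text, which makes it easy to check later whether a refresh has brought in new files.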

We can make this report much richer by adding more insights and visualizations, but let’s keep it simple for now. Save the report and publish it. You can save it with a name like Fitbit Custom Function Method.

Because we want the report to be refreshed from an on-premises folder, we need to set up a gateway. Both Personal and On-Premises gateways can do this. Explaining how to install and configure a gateway is out of the scope of this post; it would make this post VERY LONG! If you want to learn more about gateways, read this post about Personal gateway, or this one about On-Premises Gateway. Here I explain everything from after the successful setup of the gateway, where we need to add a new data source.

We have to create a data source under the gateway for our local folder containing CSV files. Go to Manage Gateways in Power BI Service

Now select your gateway, and add a new data source under it.

Set up the new data source for Folder and set the local folder path there;

Note that the local path should be accessible from the machine that has the gateway installed on it; otherwise it should be a shared path. The Windows username used here should also belong to an account that has read access to the folder we are trying to access.

After successful setup you will see a message clarifying that;

We’ve published the report earlier. Now it’s the time to Schedule it for refresh, and configure it to get its data from gateway. Under Datasets find the Fitbit Custom Function dataset, and click on ellipsis button and choose Schedule Refresh.

In the Schedule Refresh settings section, you will see an error message that says you cannot configure a gateway for this data set. That’s because of the custom function used here. (Note that this happens at the time of writing this blog post; it might be resolved by the time you are reading it.)

The error message is: You can’t schedule refresh for this dataset because one or more sources currently don’t support refresh.

Now you see the problem. It was a long way to show you the issue, but you need to know about it before using many custom functions in your code. Let’s go through the second approach to resolve it.

This approach has some limitations and difficulties; however, at the time of writing this post, it is the only way of getting scheduled refresh working.

With this method we start by getting data from a folder. Open a new Power BI Desktop file, and Get Data from Folder, exactly as you’ve seen in the Get Data From Folder section earlier in this post.

This time, however, we don’t use custom functions; we want to use the binary data of the file content. The Content column includes the binary data. The Content column is all we need, so remove all other columns.

If you click on a blank area in any Binary cell in the content column, you will see the CSV file in preview pane under it.

This Content column is a structured column, and any structured column can be expanded. Expanding a structured column with Binary values will combine them together. Click on the Combine Binaries button on the top right-hand side of the column.

Combine Binaries will combine the content of all files together, and will generate a big list of all data rows. You can also see the automatic steps applied after combining binaries in the steps pane.

Now that we have all data rows, let’s do some cleanup;

As you can see in the preview, the first record isn’t useful. It has just a title, “Activities”, so let’s remove it by going to Remove Rows, then Remove Top Rows, and entering 1 in the dialog box;

Now the first row contains only column headers, so let’s promote it to column headers by clicking on Use First Row As Headers. There are also some blank rows, so let’s remove them with Remove Rows -> Remove Blank Rows.

There are still some additional rows with “Activities” or “Date” labels in their first column. These are the heading rows of each CSV file. After combining the content of all files, these appear in the middle of the data set.

We can remove them by applying a filter on Date column. Click on Filter icon for Date column and from Text Filters choose Does Not Equal.

Choose Does Not Equal, and with an AND condition type in both Activities and Date there.

This will filter all extra labeled rows, and you will only see actual data rows.
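The reason the extra heading rows show up, and how the filter removes them, may be easier to see in a small sketch. This is a Python illustration of the same logic rather than the M steps Power BI generates; the two file contents are hypothetical samples with the layout described in this post.

```python
import csv
import io

# Two hypothetical monthly exports with identical layout.
FILES = [
    "Activities\nDate,Steps\n1/07/2015,8000\n\n",
    "Activities\nDate,Steps\n1/08/2015,9000\n\n",
]

combined = "".join(FILES)                       # Combine Binaries
rows = list(csv.reader(io.StringIO(combined)))
rows = rows[1:]                                 # Remove Top Rows (1)
header, body = rows[0], rows[1:]                # Use First Row As Headers
body = [r for r in body if any(c.strip() for c in r)]   # Remove Blank Rows
# Filter: first column does not equal "Activities" AND does not equal "Date"
body = [r for r in body if r[0] != "Activities" and r[0] != "Date"]
data = [dict(zip(header, r)) for r in body]
```

Removing the top row and promoting headers only takes care of the first file; the title and header rows of every following file stay in the middle of the data, so the Does Not Equal filter is what cleans them up.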

Now apply the same data type conversion steps and build the same report as we’ve done earlier: continue again from the data type conversion step, and build the sample report up to the point of saving it.

Save this Power BI file as Fitbit Combine Binaries, and publish it.

If you have already set up the Data Source for this folder, you don’t need to do it again. Just go directly to Schedule Refresh under Fitbit Combine Binaries dataset.

This time in the Schedule Refresh settings you won’t see any error, and you can simply choose the gateway and apply. Because we haven’t used any custom functions here, there is no error message anymore. All good to go.

Under this section there is another section where you can schedule the refresh to happen daily or weekly, depending on how you want it.

Now let’s test the refresh. Download this new CSV file and paste it in the same folder of other CSV files (the folder that you’ve set up for gateway as a data source); fitbit_export_201605

Refresh the dataset with Refresh Now (or wait until the scheduled refresh happens! I’m not that patient, so I’ll do Refresh Now).

If there are no errors in the connection, gateway setup, and access to the source folder, the refresh finishes successfully. Go to the report and refresh it to see the new data.

You will notice that the data for May has been added. The total number of steps is now over 4 million, and the last processed date is the 31st of May 2016.

Congratulations, you have done the example successfully, and what is much harder, you have finished this very long post!

If the gateway issue with custom functions gets resolved, I’d say the first method with a custom function is definitely the best method, because you have vast flexibility with custom functions. Combine Binaries doesn’t always produce an easy output; with some Excel files it might generate data rows which need many more steps to cleanse the final data. But for now, while the gateway has this issue with custom functions, the only possible way is Combine Binaries.

Published Date : September 26, 2016

You can filter all visuals in Power BI except the Slicer! In fact the slicer itself is a type of filter; however, there are times when the number of items in a slicer is too many, so it is normal to want to filter the items in the slicer itself. Unfortunately the current version of Power BI Desktop doesn’t support visual level filters on slicers, and I’m sure this feature will come soon. However, in the meantime here is a workaround for filtering slicers using calculated tables. If you want to learn more about Power BI, read Power BI online book; from Rookie to Rock Star.

The idea of this blog post came from a question that one of the students in my Power BI course asked me, and I’ve found that this is in high demand on the internet as well. So I’ve decided to write about it.

You might have too many items to show in a slicer. A slicer for customer name when you have 10,000 customers isn’t meaningful! You might be interested only in the top 20 customers, or you might want to pick a few items to show in the slicer. With all other visual types (such as bar chart, column chart, line chart…) you can simply define a visual level filter on the chart itself. Unfortunately this feature isn’t supported for slicers at the time of writing this post. However, the demand for this feature is already high! You can see the idea published here in Power BI user voice, so feel free to vote for it.

The workaround in this post might become dated very soon because I expect the Power BI team to fix this soon; however, in the meantime I believe this workaround will help some people.

There are number of workarounds for this challenge;

I have written a blog post about what Calculated tables are and how to use them for features such as role playing dimensions in Power BI, and fetching filtered views such as top 20 customers. I strongly encourage you to read that post to learn about usages of calculated tables. In summary calculated tables are DAX generated tables, these tables will be part of the model, and can have relationship with other tables in the model.

For filtering data in a slicer, I thought of using calculated tables. I know it won’t be that easy, and I would need to write a bit of DAX instead of picking items from a list, but at least I have the full DAX feature set to create whatever filter I want. Once we create the filtered view as a calculated table, we can create a relationship between that table and other tables, and use it in the slicer. Let’s go through that with an example.

For running this example you need to download and install AdventureWorksDW database from here.

Start with Get Data from AdventureWorksDW database, and choose these tables to import: FactInternetSales, DimProduct, DimProductSubCategory, DimProductCategory, and DimCustomer.

Click on Load, and then build a bar chart with Full Name from DimCustomer as Axis, and SalesAmount from FactInternetSales as Value. Also sort the chart by SalesAmount in descending order.

Then create a slicer with EnglishProductCategoryName from DimProductCategory. Note that if you drag and drop something into the visual level filter it won’t be placed there! In this version of Power BI Desktop, visual level filters are not supported for slicers. But don’t worry, we will solve it with calculated tables.

Go to Data Tab, and from Modeling tab, choose Create Calculated Table

Let’s create a table for filtered list of product categories. As a simple example, let’s show everything except Bikes. I use FILTER function of DAX which gets a table to filter as the first parameter, and the expression as the second parameter.

Now set DimProductCategory as the table, and everything except Bikes as the expression as below;

Here is the DAX code;

Filtered Product Categories = FILTER(DimProductCategory,DimProductCategory[EnglishProductCategoryName]<>"Bikes")

As you can see the filter simply selects everything but Bikes. After creating the table, go to Relationship tab

Create a relationship between DimProductCategory and Filtered Product Categories based on ProductCategoryKey.

Now go back to the Report tab, and in the slicer use EnglishProductCategoryName from Filtered Product Categories.

You can see that the slicer is now showing everything but Bikes. Other elements in the report will show everything if slicer hasn’t filtered them yet. If slicer filter their data then they will show only filtered data.
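For readers who think better in code, here is a small Python sketch of what the calculated table and the relationship do together. The category rows and the `keys_for_selection` helper are hypothetical, made up for illustration; in Power BI the relationship does this propagation for you.

```python
# Hypothetical rows standing in for DimProductCategory.
dim_product_category = [
    {"ProductCategoryKey": 1, "EnglishProductCategoryName": "Bikes"},
    {"ProductCategoryKey": 2, "EnglishProductCategoryName": "Components"},
    {"ProductCategoryKey": 3, "EnglishProductCategoryName": "Clothing"},
    {"ProductCategoryKey": 4, "EnglishProductCategoryName": "Accessories"},
]

# Same idea as FILTER(DimProductCategory, [EnglishProductCategoryName] <> "Bikes")
filtered_product_categories = [
    row for row in dim_product_category
    if row["EnglishProductCategoryName"] != "Bikes"
]

# The slicer lists names from the filtered table only.
slicer_items = [r["EnglishProductCategoryName"] for r in filtered_product_categories]

def keys_for_selection(selected_name):
    """Keys that flow through the ProductCategoryKey relationship for one pick."""
    return {
        r["ProductCategoryKey"]
        for r in filtered_product_categories
        if r["EnglishProductCategoryName"] == selected_name
    }
```

With nothing selected, no key filter is applied, which is why the other visuals still show everything (including Bikes) until the slicer is used.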

The example I’ve shown you here was a very basic filter. DAX is a fully featured language, so you can define very complex filters as well. Think about all the possible filters you can create with calculated tables: things like top 10 customers, or any other filters that you cannot simply achieve with visual level filters, are possible here.

Calculated tables can be created only if you import data into Power BI. They are generated based on DAX functions, and are not available with DirectQuery.

Calculated tables consume memory, but you can reduce this by selecting the minimum number of columns in calculated tables, and with some DAX performance tuning.

In summary, I explained how to create a visual level filter on a slicer using calculated tables. This method might not be as easy as picking some items from a list in the visual level filter section of the report, but it is very powerful because it uses DAX filtering to generate calculated tables. Creating filters such as top 10 customers is possible here with DAX expressions. There are some limitations though: calculated tables are only supported if you import data into Power BI; DirectQuery doesn’t support calculated tables.

Published Date : September 12, 2016

A few weeks ago, I wrote about how the REST API can be used for real-time dashboards in Power BI. Another method for real-time dashboards is using Azure Stream Analytics. This can be combined with Azure IoT and Event Hub to get data from IoT devices. In this post I’ll explain all the steps necessary to push output from a .NET application through Azure Event Hub and Azure Stream Analytics to Power BI. You will see how to create a real-time dashboard with this method. If you want to learn more about Power BI; read Power BI online book; from Rookie to Rock Star.

You need an Azure account to run the sample in this post. An Azure account can be created with a free one-month trial credit. To create an Azure account go to Portal.Azure.com.

You also need Visual Studio 2015 Professional or Community, because a .NET application will be used as the source of the data.

Diagram below shows the architecture of this sample.

IoT devices or applications can pass their data to Azure Event Hub, and Azure Event Hub can be used as an input to Azure Stream Analytics (which is a data streaming Azure service). Azure Stream Analytics can then pass the data from the input, based on queries, to outputs. If Power BI is used as an output, a dataset will be generated in Power BI that can be used for a real-time dashboard.

As a result, anytime a new data point from an application or IoT device comes through Event Hub and then Stream Analytics, the Power BI dashboard will automatically update with the new information.

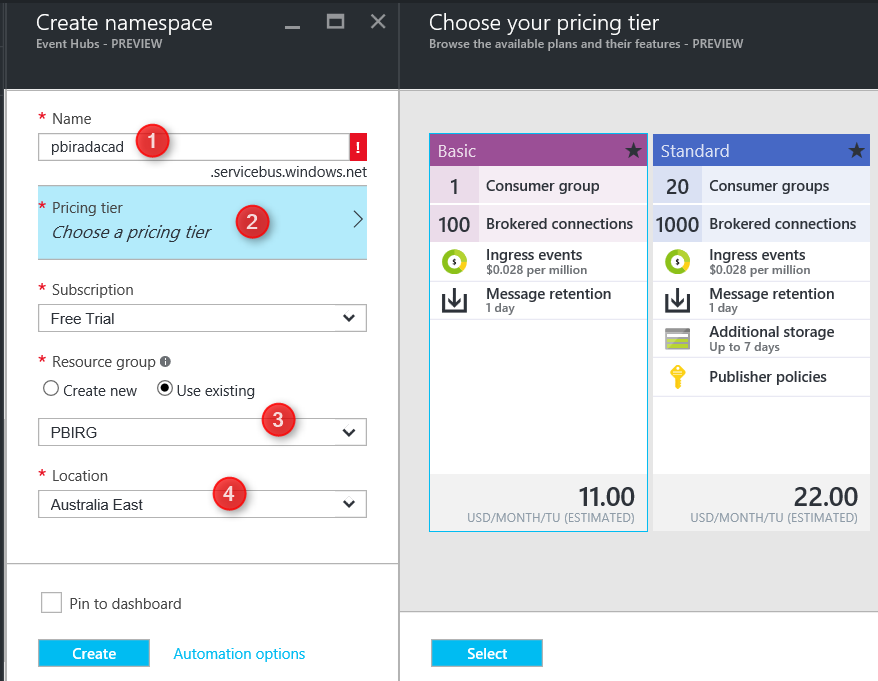

Azure Event Hub is the entry point of the data from applications. Start by creating an Event Hub by going to Portal.Azure.com

In Azure portal, create a New Event Hub from New, and then Data + Analytics

Event Hub needs a Service Bus; this first step creates the namespace for the service bus. Set a namespace in the new blade, for example PBIRadacad. Choose a pricing tier; for this example you can choose Basic. Select a resource group (or create one if you don’t have one), and set a location.

After creating you will be redirected to Event Hubs Namespace Blade. Your Event Hubs namespace can include multiple Event Hubs. Create your first Event Hub here, by Adding an Event Hub.

For the new Event Hub just set a name, for example pbi, and leave the other settings as is. You can change these settings if you want to change the number of partitions or the number of days of retention.

After creation the Event Hub should be listed under PBIRadacad Event Hubs (or whatever name you set for the namespace).

For simplicity we keep all configuration and setting as is. So far, you have created the Event Hub which can be used as the data entry input point. Now Let’s create an Azure Stream Analytics Job.

Stream Analytics is a data streaming technology in the Azure list of services. With this technology you can pass data from an input (such as Event Hub) to one or more outputs. Note that Stream Analytics is not a data store. If you want the data to be stored you have to pass it to a storage output, such as Azure Blob Storage, Azure SQL Database, or Azure SQL Data Warehouse.

Create an Azure Stream Analytics Job from Azure portal under New, and Data + Analytics.

Set a Name, Resource Group, and Location for Stream Analytics Job.

After creating the job, you will be redirected to the job’s blade.

Job above named as DeviceTempratures, yours might be named something else.

From Job Topology click on Inputs, and then Add an Input in the new blade. In the New Input blade, set a name, set Source to Event Hub, choose the service bus namespace you entered earlier in this post (PBIRadacad), and select pbi as the event hub.

Leave event serialization format as JSON and encoding as UTF-8. With setting above, now Stream Analytics reads the input from Event Hub.

Go to Stream Analytics main blade and create an Output this time. Set a name such as PBIOutput, and set Sink to Power BI. This means Stream Analytics will output to Power BI as a data set.

Then click on Authorize to map the Power BI account that you want to use here. You will be prompted to log in with your Power BI username and password. This account can be different from your Azure account.

After authorization, you will see a list of all groups in the Power BI account, and you can choose where the data set will be published (by default it goes to My Workspace, but you can change it to another group). If you want to learn more about groups in Power BI, read this post. Set a name for the data set, for example StreamAnalyticsDS. Notice that if a data set with this name already exists, it will be overwritten. Set a name for the table, for example myTable, and then create the output.

After creating the output and the input you will see them both under the Stream Analytics blade, but you can’t start the job yet. There is one more step.

Stream Analytics passes the data from input to output through a query. Without a query you cannot start a Stream Analytics job. For this example we use a very simple query which selects everything from the input and passes it to the output. Click on the Query item in the Stream Analytics main blade (numbered 1 in the screenshot above).

Change the default query to:

    SELECT
        *
    INTO
        [PBIOutput]
    FROM
        [PBIEventHub]

Notice that the query selects everything from the input, so you have to put your input name after the FROM clause. It inserts the result into the output, so you need to put your output name after the INTO clause. If the names don’t match, you get a failure error message when you start the job.
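Because a name mismatch only shows up when the job starts, it can help to check the names beforehand. Here is a minimal Python sketch of that check. It is not part of Stream Analytics; the helper names are hypothetical, and it assumes the simple SELECT ... INTO ... FROM shape used in this post.

```python
import re

QUERY = """
SELECT
    *
INTO
    [PBIOutput]
FROM
    [PBIEventHub]
"""


def query_endpoints(query):
    """Return (output_name, input_name) from a simple SELECT ... INTO ... FROM query."""
    into = re.search(r"\bINTO\s+\[?([\w-]+)\]?", query, re.IGNORECASE)
    source = re.search(r"\bFROM\s+\[?([\w-]+)\]?", query, re.IGNORECASE)
    if not into or not source:
        raise ValueError("query needs both an INTO and a FROM clause")
    return into.group(1), source.group(1)


def check_names(query, input_names, output_names):
    """List the names used in the query that are not defined on the job."""
    output_name, input_name = query_endpoints(query)
    problems = []
    if input_name not in input_names:
        problems.append("unknown input after FROM: " + input_name)
    if output_name not in output_names:
        problems.append("unknown output after INTO: " + output_name)
    return problems


if __name__ == "__main__":
    # The names on the right are the input and output created on the job.
    print(check_names(QUERY, {"PBIEventHub"}, {"PBIOutput"}))
```

An empty list means the names in the query line up with the job's input and output.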

Don’t test the query. Just save it and start the job. It will take a few minutes for the job to start. Wait and check whether the job started successfully. If it didn’t, the failure might be related to the input, the output, or the query. You have to see the job in the RUNNING state as below.

As I mentioned earlier in this post, I’ll be using a .NET C# console application to pass the data to Event Hub. Let’s create the sample application by opening Visual Studio 2015 Professional. Create a New C# Console Application.

Name the application, and when it opens, go to References in Solution Explorer, right-click, and select Manage NuGet Packages.

Click on Browse, Search for Azure Service Bus, and install it.

After installation, open the App.config file and search for appSettings near the end of the file. The connection string to Azure Service Bus is the value inside this section.

The highlighted line should be changed to your service bus connection string. To find your service bus connection string, go to the Azure portal and find the service bus (we created it in the Event Hub section) under All Resources.

Under RootManageSharedAccessKey you’ll find the primary key and its connection string.

Copy the connection string for the primary key and replace the value in the App.config file with it. Save App.config after the change and close it.
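The value in App.config has to be a full connection string, because the code later in this post passes it to CreateFromConnectionString. As a rough sanity check, here is a small Python sketch that splits a connection string into its parts and reports what is missing. It assumes the usual shared-access-key layout (Endpoint, SharedAccessKeyName, SharedAccessKey); the namespace and key in the sample are placeholders, not real values.

```python
# Parts that a shared-access-key connection string is assumed to carry.
REQUIRED_PARTS = ("Endpoint", "SharedAccessKeyName", "SharedAccessKey")

# Placeholder sample: the namespace and the key are made up for illustration.
SAMPLE = (
    "Endpoint=sb://example-namespace.servicebus.windows.net/;"
    "SharedAccessKeyName=RootManageSharedAccessKey;"
    "SharedAccessKey=PLACEHOLDER_KEY="
)


def parse_connection_string(conn):
    """Split 'Key=Value;Key=Value' into a dict, splitting each pair on the first '='."""
    parts = {}
    for segment in conn.split(";"):
        key, sep, value = segment.partition("=")
        if sep:  # ignore empty segments and segments without '='
            parts[key.strip()] = value.strip()
    return parts


def missing_parts(conn):
    """Return the required parts that are absent or empty."""
    parts = parse_connection_string(conn)
    return [name for name in REQUIRED_PARTS if not parts.get(name)]


if __name__ == "__main__":
    print(missing_parts(SAMPLE))          # nothing missing
    print(missing_parts("just-the-key"))  # all three parts missing
```

If only the key itself was pasted into App.config, all three parts are reported as missing.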

Then right-click on the References folder in Solution Explorer and add a reference to System.Configuration.

Now open the Program.cs file and add these two using directives:

    using System.Configuration;
    using Microsoft.ServiceBus.Messaging;

Also add the code below inside the Main method:

    string ConnectionString = ConfigurationManager.AppSettings["Microsoft.ServiceBus.ConnectionString"];
    EventHubClient client = EventHubClient.CreateFromConnectionString(ConnectionString, "pbi");

    // Declare values that we're about to send
    Int32 unixTimestamp = (Int32)(DateTime.UtcNow.Subtract(new DateTime(1970, 1, 1))).TotalSeconds;
    Random r = new Random();
    int currentValue = r.Next(0, 100);

    // Generate string in JSON format
    string postData = String.Format("[{{ \"ts\": {0}, \"value\":{1} }}]", unixTimestamp, currentValue);

    // Send the Message
    client.Send(new EventData(Encoding.UTF8.GetBytes(postData)));
    Console.WriteLine("Message sent. Press Enter to Continue.");
    Console.ReadLine();

The first line in the code reads the connection string from App.config. The second line creates an instance of EventHubClient with the connection string. Note that this line connects to the Event Hub named “pbi”. If you named your Event Hub differently, enter your own name.

The next three lines create a random integer value along with a timestamp. The line with String.Format generates a JSON-formatted string, and client.Send sends the string in UTF-8 encoding to Service Bus (Event Hub).
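To make the shape of the message concrete, here is a small Python sketch that builds the same kind of payload: a JSON array holding one object with ts (Unix time in seconds) and value (a random integer from 0 to 99, like r.Next(0, 100)). The function name and its parameters are hypothetical and only for illustration; the sketch does not send anything to Event Hub.

```python
import json
import random
import time


def build_payload(now=None, rng=None):
    """Build the JSON text for one data point: [{"ts": ..., "value": ...}]."""
    ts = int(time.time() if now is None else now)  # whole seconds since 1970-01-01 UTC
    rng = rng or random.Random()
    value = rng.randrange(0, 100)                  # 0..99, upper bound excluded
    return json.dumps([{"ts": ts, "value": value}])


if __name__ == "__main__":
    text = build_payload()
    body = text.encode("utf-8")  # the bytes that would go into the message body
    print(text, len(body))
```

Using a JSON library instead of string formatting avoids escaping mistakes if you later add text fields to the message.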

Executing this application passes only one data point to Event Hub. To add another entry you have to run it again. Now the application is ready to run. Let’s run it for the first time.

After the execution we expect Power BI to show something. Let’s see.

Log in to the Power BI service with the account you used in the Stream Analytics authorization section. In My Workspace (or any other group you selected in the Stream Analytics output section) you’ll find the new data set. If you followed the naming I suggested, it should be named StreamAnalyticsDS.

Open the data set and you’ll see the table with the two fields we passed (ts and value) plus other fields added by Event Hub (EventEnqueuedUtcTime, EventProcessedUtcTime, and PartitionId).
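Keep in mind that ts arrives as a plain number of seconds since January 1, 1970 (UTC), not as a date. If you want to read those values as dates while checking the data, a conversion like the following Python sketch works; the function name is hypothetical.

```python
from datetime import datetime, timezone


def ts_to_utc(ts):
    """Convert a Unix timestamp in seconds to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(ts, tz=timezone.utc)


if __name__ == "__main__":
    print(ts_to_utc(1473033600).isoformat())  # 2016-09-05T00:00:00+00:00
```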

As you can see, the structure can be anything sent from Event Hub and Stream Analytics. Now create two simple visualizations. The first is a Card visualization with the distinct count of ts. This shows the number of times we sent a data point (executed the console application) to Event Hub.
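One thing to be aware of: ts has a resolution of one second, so a distinct count of ts matches the number of messages only when no two messages share the same second. The Python sketch below shows the difference with made-up rows.

```python
def distinct_ts_count(rows):
    """Count distinct ts values, the same idea as the card's distinct count."""
    return len({row["ts"] for row in rows})


if __name__ == "__main__":
    # Made-up rows: the first two were sent within the same second.
    rows = [
        {"ts": 100, "value": 5},
        {"ts": 100, "value": 7},
        {"ts": 101, "value": 9},
    ]
    print(len(rows), distinct_ts_count(rows))  # 3 messages, 2 distinct timestamps
```

Running the console application by hand, as in this post, will rarely hit that case, but a faster sender would.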

The second is a Column chart with ts on the Axis and value as the Value of the chart.

Save this report, and Pin these two visualizations into a new dashboard.

Now go to the dashboard. While the dashboard is open, execute the console application. A few seconds after the message is sent, you will see the dashboard show the result. Fantastic, you’ve now built your first Azure Stream Analytics and Power BI real-time dashboard.

A real-time dashboard with Power BI, Azure Stream Analytics, and Event Hub opens the path to many possible solutions. You can analyze Twitter data in real time, or you can pass data from an IoT device through IoT Hub into a real-time dashboard. Can you come up with ideas of what a real-time dashboard with Stream Analytics can do? I’d like to hear your ideas.

Published Date : September 5, 2016

This is not the story of Power BI in Power Point slides. This post is about how to use Power Point integration with Power BI to tell the story of Power BI reports and dashboards through slides, and also to give users features such as commentary. This feature is not new; it was released almost a year ago! But because many people are still not aware of it, I decided to write about it in a post. In this post you will learn;

If you want to learn Power BI, read Power BI online book; from Rookie to Rock Star.

Dashboard and reports used in this example are sourced from Power BI Desktop; The First Experience.

Power BI Tiles is an add-in for Excel, Power Point, and a few other Microsoft Office products, version 2013 SP 1 or later. This component is developed by DevScope. Please note that this is not a built-in Microsoft feature. However, it can be used easily with Microsoft Power BI and Power Point. This component enables Power BI report and dashboard elements to be pinned in Power Point slides. Download this component and install it from there, or from your office project.

Open Power Point, create a blank slide deck, then insert a blank slide, and from the Insert menu click on My Add-ins.

In My Add-ins you might see Power BI Tiles if you have installed it before. Otherwise go to the Store tab and search for Power BI.

After adding this add-in you will be asked to enter the Power BI account to use with it, and to authorize Power BI Tiles to access reports and dashboards in your Power BI cloud storage. After authorization, a box with the list of dashboards in your workspace will appear.

This box is the Power BI Tile added to your slide. You can select any of the dashboards you want. For this example I use the dashboard from the Pubs Data Analysis demo I’ve done before. After choosing a dashboard you will see one of its tiles appear here.

This is only one of the tiles in the existing dashboard. You can navigate between tiles with the two navigator arrow buttons highlighted above.

Here is the dashboard in full view in the Power BI service. As you can see, in the Power BI Tiles add-in we can navigate between tiles individually.

Once you have chosen the tile, you can leave it there. It is already embedded into your Power Point slide. You can now add another Power BI Tile, again from My Add-ins, and this time Power BI Tiles is already listed there. Note that you can build a similar dashboard here in Power Point by adding each item as a tile.

You can see here that I’ve added a title for this slide in Power Point, and also a text box with the text “Psychology has the most sales”. Yes, with this method you can add commentary to Power BI reports and dashboards, and you can tell the story behind the data through the integration of Power BI and Power Point. It is a very simple feature, but really useful.

Under each Power BI tile in Power Point there will be a link to Power BI.

When you click on this link, it will open that Power BI dashboard or report in a browser. This provides you the navigation from a high level story point to the detailed report in Power BI service.

By default, Power BI Tiles are interactive tiles that can be refreshed, clicked, and highlighted. However, if you want a tile as an image only, you can choose that from the top right-hand side of the Power BI tile.

You can navigate to all other workspaces that you are part of, and see all reports and dashboards under them. By default Power BI Tiles looks into “My Workspace” (named Personal Workspace), but you can change it from the top of the Power BI Tile.

You can also bring a public Power BI report (a report that is published to web) into a tile.

If you choose a dashboard, you can pick one element of the dashboard in each tile. But if you choose a report, for example the “Pubs Analysis” report we’ve built in another example, you will see the whole report in the tile, with all report pages and the filter pane.

The view of the report is fully interactive here. For example, if you hover over something or click on something, you will see it respond like any other Power BI report (but in a Power Point slide).

Power BI and Power Point integration makes it much easier to tell the story behind the data. You have the presentation and storytelling features of Power Point, plus the interactive visuals of Power BI, refreshable from the cloud. The good news is that the Microsoft team is working on another integration feature named “Export to Power Point”, which I believe will be released very soon. I’ll put together another post when that feature goes live.

Published Date : August 28, 2016

The line chart is one of the most basic charts, and you can see it in every visualization tool. Power BI, like other visualization tools, has a line chart visualization which is good for showing the values of one or two measures across an axis, which can be a category, a time period, and so on. Recently the Power BI line chart gained the ability to create dynamic reference lines, along with the Analytics pane, which brings more power to this useful chart. In this post I’ll show you an example of using these features in the Power BI line chart. If you are new to Power BI or you want to learn more, make sure to read Power BI; from Rookie to Rock Star.

For running the sample in this post you will need to download and install AdventureWorksDW sample Microsoft SQL Server database.

A line chart can show one or more measures, such as Sales Amount, Total Costs, Quantity of goods sold, and so on.

Depending on how the values vary across a time period, a line chart can show a straight line as a trend. This trend line is good for understanding how products are selling overall, for example whether revenue is going up or down.
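To give a feel for what a straight trend line summarizes, here is a minimal Python sketch that fits a line through a series of values with ordinary least squares. This is an assumption for illustration only; I am not claiming this is exactly how Power BI calculates its trend line, and the sales figures are made up.

```python
def linear_trend(values):
    """Fit y = slope * x + intercept through the points (0, y0), (1, y1), ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points for a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    monthly_sales = [120.0, 135.0, 128.0, 150.0, 161.0]  # made-up numbers
    slope, intercept = linear_trend(monthly_sales)
    print("going up" if slope > 0 else "going down or flat", round(slope, 2))
```

A positive slope means the values are rising overall, even if individual periods dip.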

You might want to show the minimum, maximum, average, or median value of your line chart as separate lines, and compare the value lines with them. These are reference lines, which can be created dynamically based on the values of the measures in the chart.
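Each of these reference lines is just one number calculated from the values in the chart and drawn as a horizontal line. A small Python sketch with made-up values shows the four calculations; the function name is hypothetical.

```python
from statistics import mean, median


def reference_lines(values):
    """Return the level of each reference line for a series of values."""
    return {
        "min": min(values),
        "max": max(values),
        "average": mean(values),
        "median": median(values),
    }


if __name__ == "__main__":
    sales = [10, 20, 60, 30]  # made-up values
    print(reference_lines(sales))
```

Note how the average (30) and the median (25) differ here because one value is much larger than the others.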

Create a new Power BI solution with Get Data from the AdventureWorksDW SQL Server database, and choose the tables DimDate, DimProductCategory, DimProductSubCategory, DimProduct, and FactInternetSales. Click on Load, and then in the relationship tab remove the extra relationships between FactInternetSales and DimDate so that there is only one active relationship, based on OrderDateKey.

Go to Report tab, and add EnglishProductCategory to the Page Level Filters. Filter data to be only Bikes.

The page level filter will filter all visualizations in this page to show only Bikes information. Now add a Line Chart from the visualization pane. Set Values to SalesAmount (from FactInternetSales), Axis to FullDateAlternateKey (from DimDate), and Legend to EnglishProductSubCategory (from DimProductSubCategory).

This line chart now simply shows the three product subcategories of Bikes: Mountain Bikes, Touring Bikes, and Road Bikes. FullDateAlternateKey is a full date column, which in this view is shown at the Year level. This happens because Power BI automatically adds a date hierarchy to date fields. If you want to change this behavior, you can do so in the options.
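Showing a full date column at the Year level means the chart adds up the daily values of each year, once per line in the chart. The Python sketch below does the same roll-up by year and subcategory on a few made-up rows; the function name and the data are only for illustration.

```python
from collections import defaultdict
from datetime import date


def sales_by_year(rows):
    """Sum the amount per (year, subcategory) from (date, subcategory, amount) rows."""
    totals = defaultdict(float)
    for order_date, subcategory, amount in rows:
        totals[(order_date.year, subcategory)] += amount
    return dict(totals)


if __name__ == "__main__":
    # Made-up rows, not taken from AdventureWorksDW.
    rows = [
        (date(2007, 3, 1), "Road Bikes", 100.0),
        (date(2007, 9, 15), "Road Bikes", 50.0),
        (date(2008, 1, 20), "Road Bikes", 75.0),
        (date(2007, 5, 5), "Mountain Bikes", 200.0),
    ]
    for key, total in sorted(sales_by_year(rows).items()):
        print(key, total)
```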