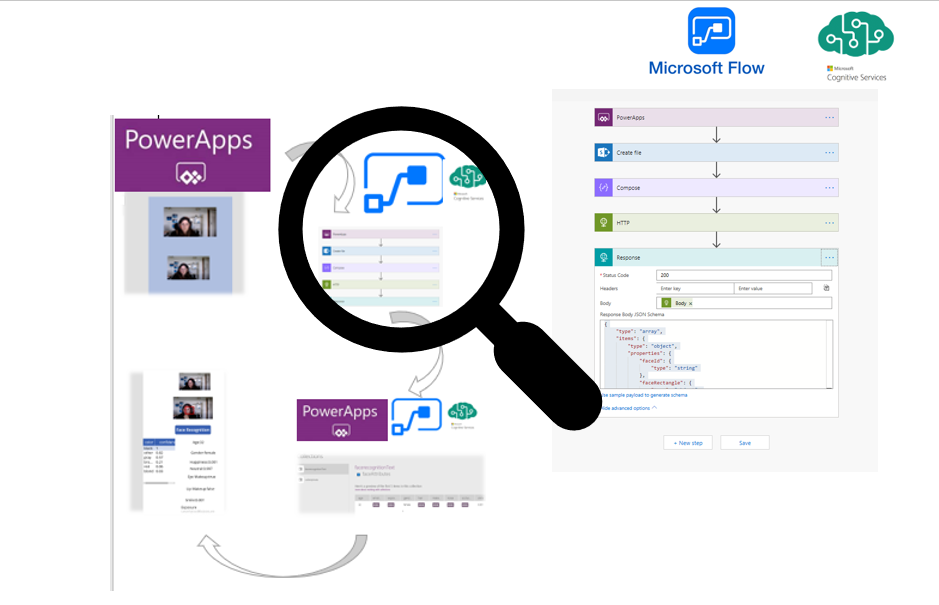

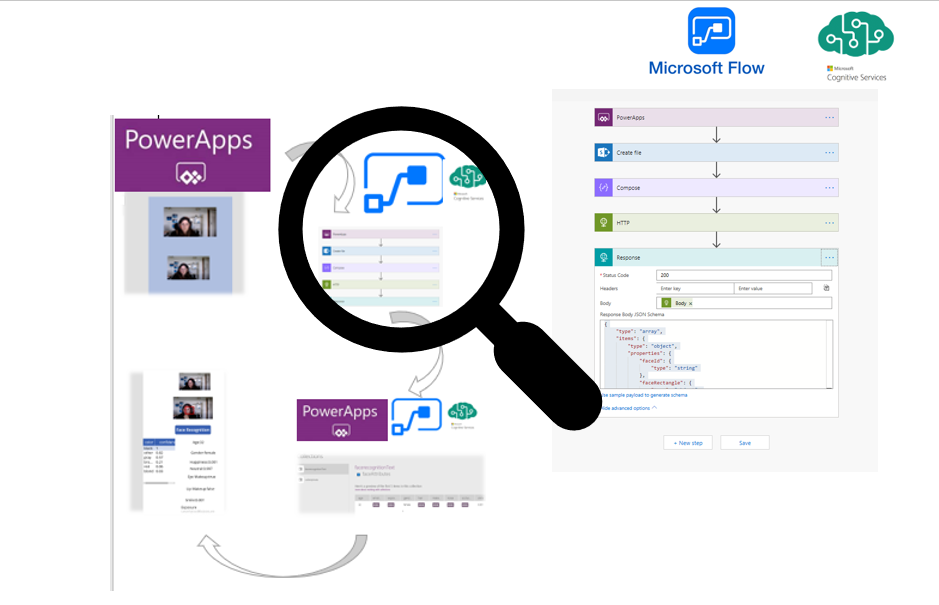

This series explains how to create a simple application for facial recognition.

The last post explained how to create the Power Apps app that takes the photo.

This post explains how to create the face recognition function in Microsoft Flow. The next one will show how to connect the two and display the final result.

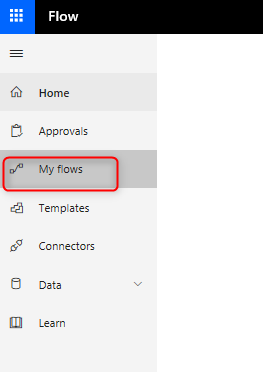

First, you need to log into Microsoft Flow: https://australia.flow.microsoft.com/en-us/

Microsoft Flow is an automation service that can handle different processes across most Microsoft applications.

In this post, the main reason for using Microsoft Flow is to call the Face Recognition service from Microsoft Cognitive Services.

However, before setting up the flow, you need to set up the Cognitive Service in the Azure Portal or Cognitive Services. You can follow the instructions at https://docs.microsoft.com/en-us/azure/cognitive-services/cognitive-services-apis-create-account

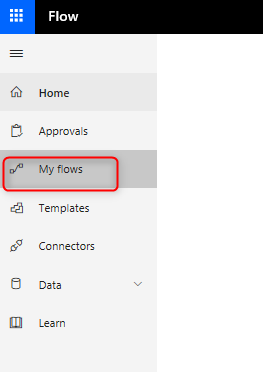

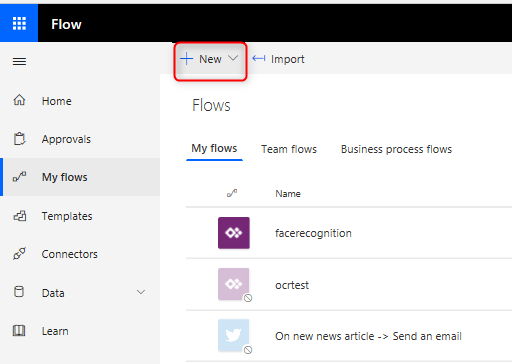

After setting up, click on My Flows:

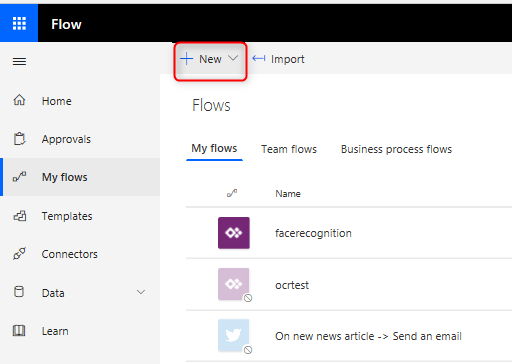

In My Flows, click on New to create a new flow.

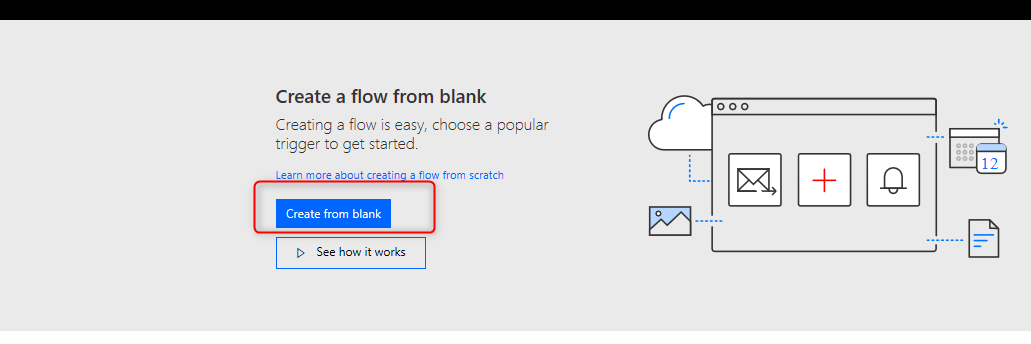

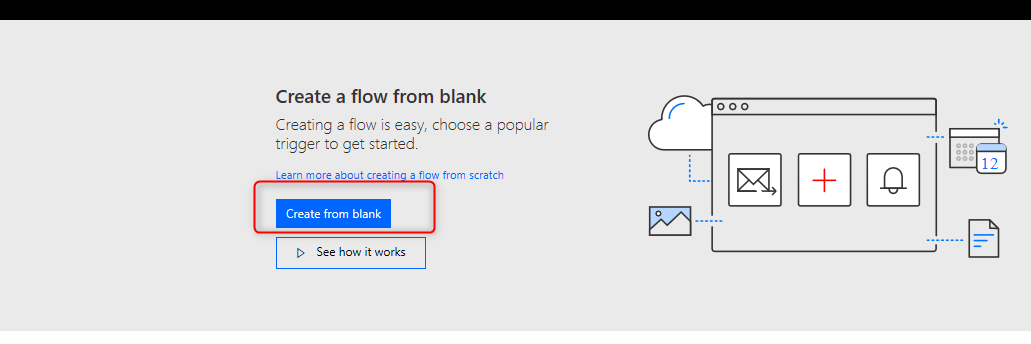

For the new flow, choose Create From Blank.

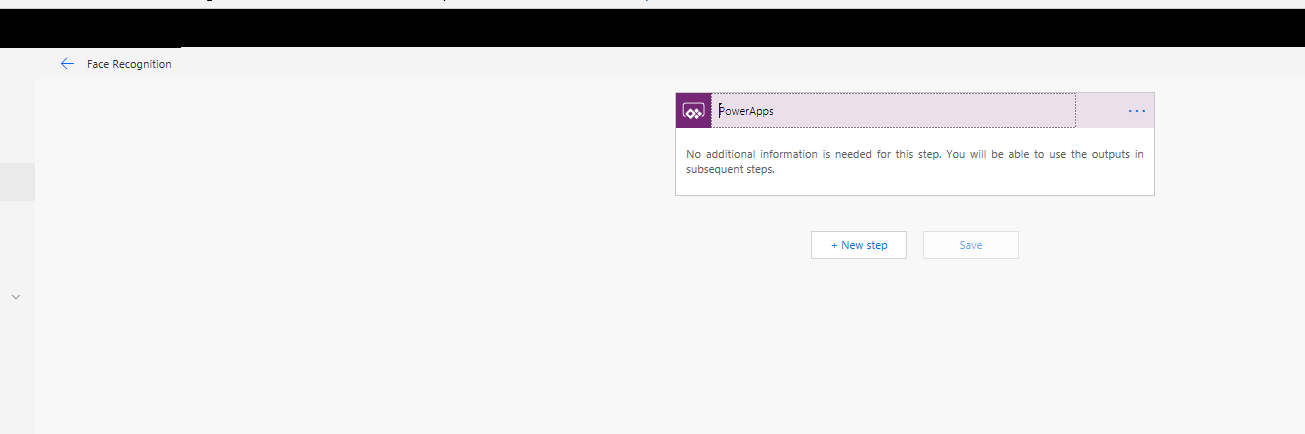

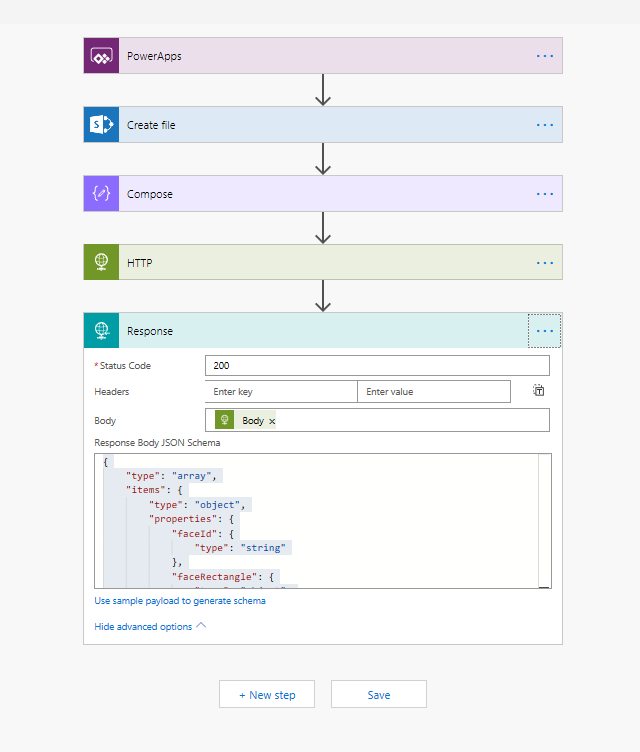

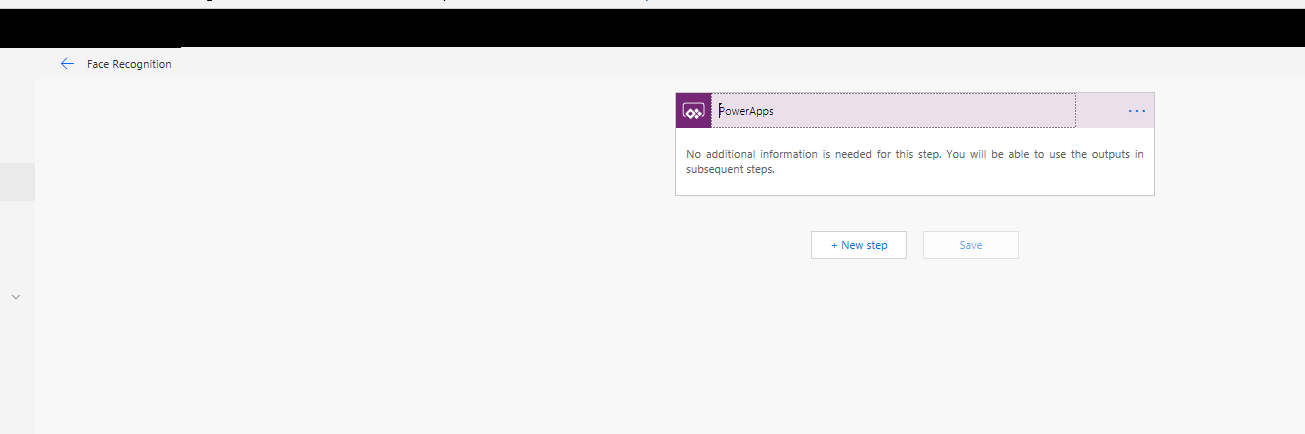

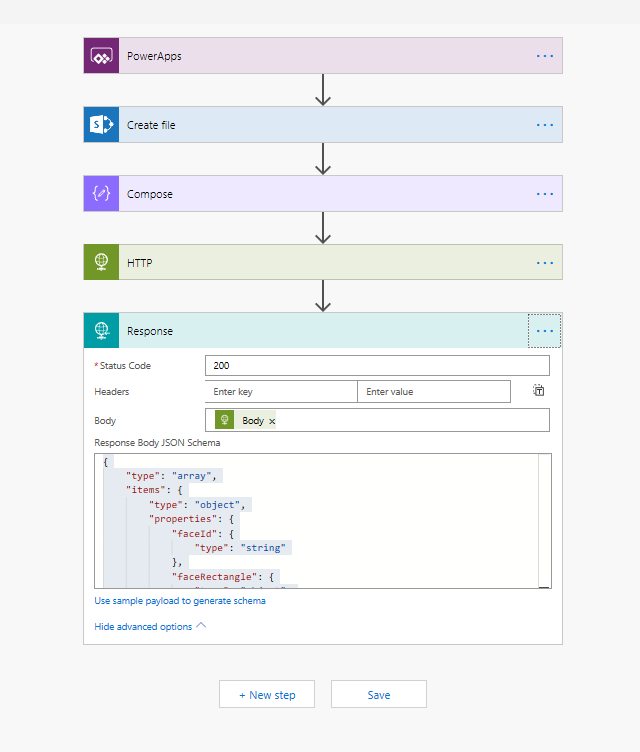

In the new flow, add a trigger. The trigger for this flow is Power Apps (it receives the picture that has been taken of the face).

The Power Apps trigger does not need any attributes. To call the face recognition API, we need to store and convert the picture taken by Power Apps.

There is a component for Face Recognition in Microsoft Flow; however, it can only receive a specific format, and it cannot send the collection back to Power Apps. To solve this problem we need to add more steps and call the API via other components.

As a result, we first need to store the picture in a SharePoint folder by calling Create File, for which we need to provide the Site Address, Folder Path, and File Name. Moreover, we need to send the actual image file from Power Apps under the name Createfile_FileContent.

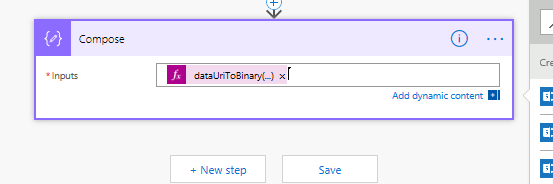

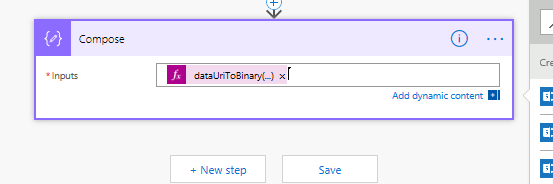

In the next step, we pass it to the Compose component in order to store it in a variable and also pass it on to the HTTP component.

Here in the Compose component, we have to convert the picture to binary format using the function

    dataUriToBinary(triggerBody()['Createfile_FileContent'])

To do that, first click on the input, then in Expression search for the function dataUriToBinary, and for its input choose Createfile_FileContent.

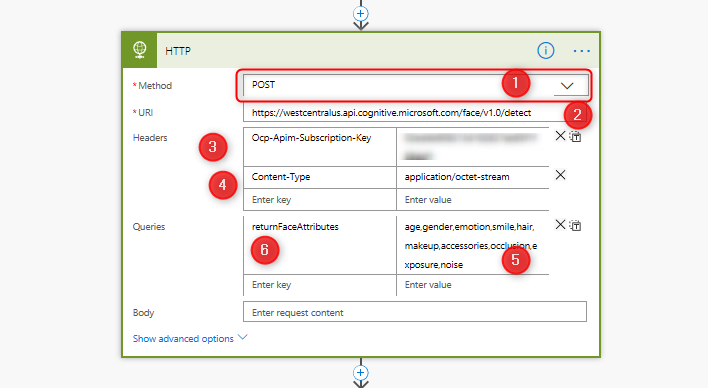

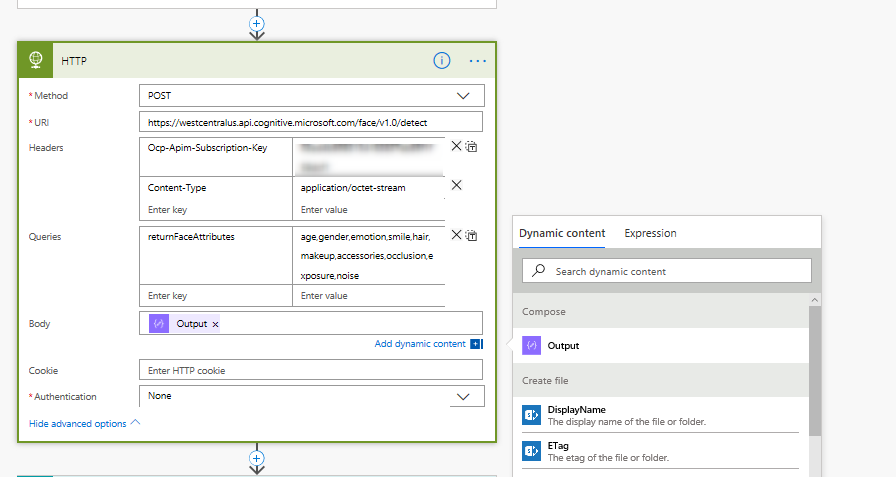

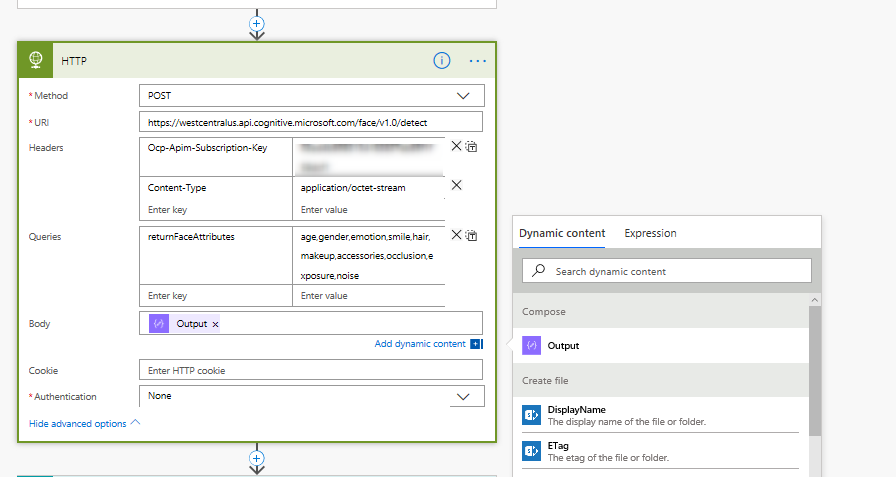
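
To make the conversion step concrete, here is a minimal Python sketch of roughly what dataUriToBinary does, assuming the photo arrives from Power Apps as a base64 data URI. The function name `data_uri_to_binary` and the sample value are hypothetical and only for illustration; this is not the Flow implementation.

```python
import base64


def data_uri_to_binary(data_uri: str) -> bytes:
    """Return the raw bytes carried by a base64 data URI."""
    # A data URI looks like "data:<media type>;base64,<payload>".
    header, _, payload = data_uri.partition(",")
    if not header.startswith("data:") or not payload:
        raise ValueError("not a data URI")
    if not header.endswith(";base64"):
        raise ValueError("this sketch only handles base64-encoded data URIs")
    return base64.b64decode(payload)


# Hypothetical stand-in for the camera image: the 8-byte PNG file signature.
sample_uri = "data:image/png;base64," + base64.b64encode(b"\x89PNG\r\n\x1a\n").decode("ascii")
image_bytes = data_uri_to_binary(sample_uri)
```

The resulting bytes are what the later HTTP step sends as the request body.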

In the next step, we are going to pass the binary file (picture) to a component named HTTP. This component is responsible for calling any API by passing the URL, key, and the requested fields.

Choose a new action, and search for HTTP.

For the headers, choose:

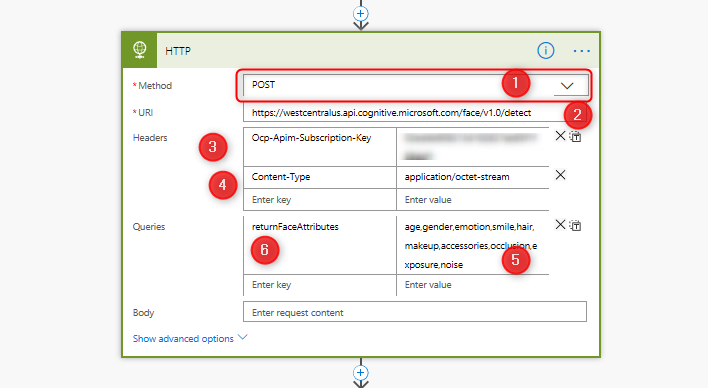

In the next step, in the HTTP component, choose POST for the Method.

URL: https://westcentralus.api.cognitive.microsoft.com/face/v1.0/detect

For headers:

Ocp-Apim-Subscription-Key: put the API key from Azure

Content-Type: application/octet-stream

Then we need to provide the Queries.

The first attribute is

returnFaceAttributes: the attributes that should be returned for the picture, listed below:

age,gender,emotion,smile,hair,makeup,accessories,occlusion,exposure,noise

The last step is to pass the picture in the Body of the HTTP component.

The HTTP component can call any type of API and can be used for calling other web services.

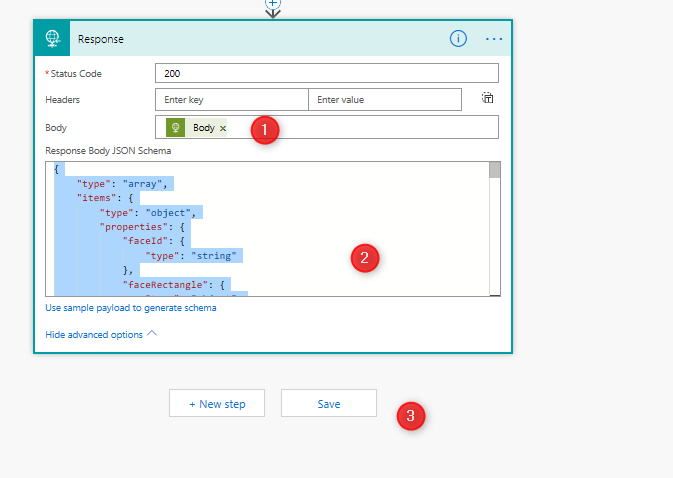

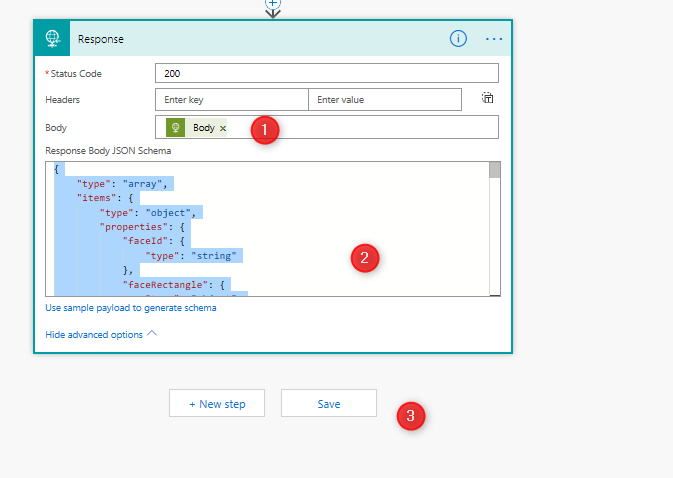
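
For readers who want to see the same request outside of Flow, the settings above can be sketched in Python with the standard library. This is only an illustration under assumptions: the helper name `build_detect_request` and the placeholder key are made up, and the request is assembled but not sent.

```python
import urllib.parse
import urllib.request

# URL used in this post; your own resource may live in a different region.
DETECT_URL = "https://westcentralus.api.cognitive.microsoft.com/face/v1.0/detect"
FACE_ATTRIBUTES = "age,gender,emotion,smile,hair,makeup,accessories,occlusion,exposure,noise"


def build_detect_request(image_bytes: bytes, api_key: str) -> urllib.request.Request:
    """Assemble (but do not send) a POST request mirroring the HTTP action."""
    # Queries section of the HTTP action.
    query = urllib.parse.urlencode({"returnFaceAttributes": FACE_ATTRIBUTES})
    return urllib.request.Request(
        url=f"{DETECT_URL}?{query}",
        data=image_bytes,  # Body: the binary picture
        method="POST",
        headers={
            "Ocp-Apim-Subscription-Key": api_key,
            "Content-Type": "application/octet-stream",
        },
    )


# Placeholder values; replace them with a real image and your own key.
request = build_detect_request(b"<image bytes>", api_key="YOUR-KEY-HERE")
# To actually call the service: urllib.request.urlopen(request).read()
```

Building the request separately from sending it makes it easy to check the method, headers, and query string before spending any API calls.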

Now we need another component to pass the result to Power Apps.

In the new action, search for Response; then for Status Code select 200, for Body choose Body, and for the Response Body JSON Schema paste the code below:

    {
      "type": "array",
      "items": {
        "type": "object",
        "properties": {
          "faceId": {
            "type": "string"
          },
          "faceRectangle": {
            "type": "object",
            "properties": {
              "top": {
                "type": "integer"
              },
              "left": {
                "type": "integer"
              },
              "width": {
                "type": "integer"
              },
              "height": {
                "type": "integer"
              }
            }
          },
          "faceAttributes": {
            "type": "object",
            "properties": {
              "smile": {
                "type": "number"
              },
              "gender": {
                "type": "string"
              },
              "age": {
                "type": "integer"
              },
              "emotion": {
                "type": "object",
                "properties": {
                  "anger": {
                    "type": "integer"
                  },
                  "contempt": {
                    "type": "number"
                  },
                  "disgust": {
                    "type": "integer"
                  },
                  "fear": {
                    "type": "integer"
                  },
                  "happiness": {
                    "type": "number"
                  },
                  "neutral": {
                    "type": "number"
                  },
                  "sadness": {
                    "type": "integer"
                  },
                  "surprise": {
                    "type": "number"
                  }
                }
              },
              "exposure": {
                "type": "object",
                "properties": {
                  "exposureLevel": {
                    "type": "string"
                  },
                  "value": {
                    "type": "number"
                  }
                }
              },
              "noise": {
                "type": "object",
                "properties": {
                  "noiseLevel": {
                    "type": "string"
                  },
                  "value": {
                    "type": "number"
                  }
                }
              },
              "makeup": {
                "type": "object",
                "properties": {
                  "eyeMakeup": {
                    "type": "boolean"
                  },
                  "lipMakeup": {
                    "type": "boolean"
                  }
                }
              },
              "accessories": {
                "type": "array"
              },
              "occlusion": {
                "type": "object",
                "properties": {
                  "foreheadOccluded": {
                    "type": "boolean"
                  },
                  "eyeOccluded": {
                    "type": "boolean"
                  },
                  "mouthOccluded": {
                    "type": "boolean"
                  }
                }
              },
              "hair": {
                "type": "object",
                "properties": {
                  "bald": {
                    "type": "number"
                  },
                  "invisible": {
                    "type": "boolean"
                  },
                  "hairColor": {
                    "type": "array",
                    "items": {
                      "type": "object",
                      "properties": {
                        "color": {
                          "type": "string"
                        },
                        "confidence": {
                          "type": "number"
                        }
                      },
                      "required": [
                        "color",
                        "confidence"
                      ]
                    }
                  }
                }
              }
            }
          }
        },
        "required": [
          "faceId",
          "faceRectangle",
          "faceAttributes"
        ]
      }
    }
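
As a rough illustration of what a consumer of this response sees, the Python sketch below reads a response shaped like the schema above and picks out a few fields per face. The sample JSON is made up for illustration (it is not real API output), and the helper name `summarize_faces` is hypothetical.

```python
import json

# Made-up sample shaped like the schema above; the values are illustrative only.
sample_response = """
[
  {
    "faceId": "00000000-0000-0000-0000-000000000000",
    "faceRectangle": {"top": 10, "left": 20, "width": 100, "height": 100},
    "faceAttributes": {
      "smile": 0.9,
      "gender": "female",
      "age": 30,
      "emotion": {"anger": 0, "contempt": 0.01, "disgust": 0, "fear": 0,
                  "happiness": 0.9, "neutral": 0.08, "sadness": 0, "surprise": 0.01}
    }
  }
]
"""


def summarize_faces(response_text: str) -> list:
    """Pick a few fields per detected face, including the strongest emotion."""
    summaries = []
    for face in json.loads(response_text):
        attributes = face["faceAttributes"]
        emotions = attributes.get("emotion", {})
        summaries.append({
            "faceId": face["faceId"],
            "age": attributes.get("age"),
            "gender": attributes.get("gender"),
            # The emotion with the highest score, if any were returned.
            "top_emotion": max(emotions, key=emotions.get) if emotions else None,
        })
    return summaries


faces = summarize_faces(sample_response)
```

The response is an array with one object per detected face, which is why the schema's top-level type is "array" and why the sketch loops over the parsed result.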

Now we just need to save the flow and test it to make sure it is working properly.

The flow is now created, and the remaining task is to connect it to Power Apps. The next post will show how to connect it to Power Apps and display the facial attributes.

Reference from: https://www.youtube.com/watch?v=KUtdnnYRpo4

Trainer, Consultant, Mentor

Leila is the first Microsoft AI MVP in New Zealand and Australia. She has a Ph.D. in Information System from the University of Auckland. She is the co-director and data scientist at RADACAD, with more than 100 clients around the world. She is the co-organizer of the Microsoft Business Intelligence and Power BI User Group (meetup) in Auckland with more than 1200 members. She is the co-organizer of three main conferences in Auckland: SQL Saturday Auckland (2015 till now) with more than 400 registrations, Difinity (2017 till now) with more than 200 registrations, and Global AI Bootcamp 2018. She is a Data Scientist, BI Consultant, Trainer, and Speaker. She is a well-known international speaker at many conferences such as Microsoft Ignite, SQL PASS, Data Platform Summit, SQL Saturday, Power BI World Tour, and so forth in Europe, USA, Asia, Australia, and New Zealand. She has over ten years' experience working with databases and software systems. She was involved in many large-scale projects for big-sized companies. She is also an AI and Data Platform Microsoft MVP. Leila is an active technical Microsoft AI blogger for RADACAD.

Hi!

Just a little correction that took me sometime to figure it out. For headers you say:

Ocp-Apim-Subscription-Key: put the API key from Azure

Content-Type: application/octet-stream

then we need to provide the Queries:

the first attribute is

returnFaceAttribute: that need to return below components from a picture:

age,gender,emotion,smile,hair,makeup,accessories,occlusion,exposure,noise

There is a missing “S” in this sentence -> returnFaceAttribute

If is not there, the response doesn’t resolve it.

Great tutorial!

Thanks so much, I will put the screenshot as well to avoid that, much appreciated for telling me