Previously I’ve explained a method of looping through number of files in a folder which used Custom Functions. This method is preferred method buy not always working with Schedule Refresh and gateway setup, because scheduled refresh doesn’t always support custom functions in the code. So I this post I show you a second method that loops through the files dynamically, and it doesn’t use custom function, and you will see at the end of this post that schedule refresh works with this method. For better clarification of the scenario I will first do custom function approach, show you the problem with scheduled refresh, and then I’ll explain about the second method. If you would like to learn more about Power BI, read Power BI online book; from Rookie to Rock Star.

Prerequisite

For running example of this post you need to download set of CSV files from here: fitbitsourcefilesradacad

Defining the Challenge

In this example we have number of CSV files which has list of my Fitbit activities, one file for each month. All under the same folder, all same structure. We want to apply number of similar steps to each files and loop through them and fetch the data all into single data set. After building a report on top of that we want to publish it to Power BI service, and get that refreshed through a gateway configuration. As files has similar structure we are looking for a mechanism to apply same steps on all files.

You will see two approaches with this, first one is preferred approach because it uses custom functions, however because scheduled refresh doesn’t support custom functions always, so there is a second approach which will work with automatic refresh. If you are visiting this page at the time that problem of scheduled refresh and custom function resolved, then use the first approach, otherwise use the second.

First Approach: Custom Function

This is Preferred Approach but is not working with Schedule Refresh at the time of writing this post.

Process one File

Open a New Power BI Desktop, Start by Get Data, and Select CSV as a source.

Choose of the files in Fitbit Source folder and continue;

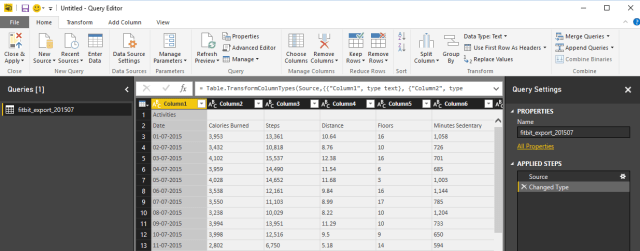

Go to Edit Queries Window with clicking on Edit button, and You will see data previewed there.

As you can see in the preview the first record isn’t useful. it has just a title “Acitivities”, so let’s remove it with going to Remove Rows, Remove Top Rows. and enter 1 in the dialog box;

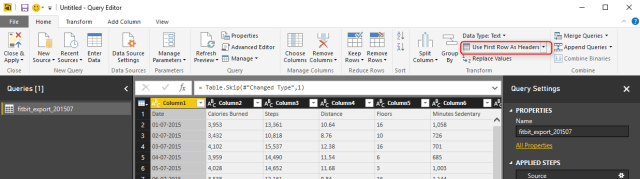

Now the first row only contains column headers. So let’s promote it to column headers with clicking on Use First Row As Headers.

There are also some blank rows, so let’s remove them with Remove Rows -> Remove Blank Rows.

Now we’ve done all clean up work for this file. We have one row per each day with details of activities in that day. Next step is to loop through all files in the source folder, and apply same number of steps on them. Fortunately we can use Custom functions for this. Previously I’ve explained how Custom Functions are helpful in code re-usability, better maintenance, and consistency. Instead of repeating same steps for each file is it much wiser to create a function from these steps, and call the function for each file. So let’s do it;

Creating Parameter

For creating the function through GUI, the first step is to create a parameter. You can create Parameter from Menu option or with right click on the blank area in Queries section.

Create the Parameter with name of FullPath, of type Text, and set a default value to be full path (including file name, and extension) of one of CSV files in your folder;

After creating the function you should be able to see that with Parameter icon in Queries pane;

Creating Function

Next step is to get the current query (in the screenshot named as fitbit_export_201507) sourced from the FilePath parameter instead of static path. Click on the query, and choose settings from steps in right hand side pane;

Change the File path in the setting window to be sourced from Parameter, and because you have only one parameter FilePath parameter will be selected automatically.

After clicking on OK, you will see result of first step before applying your changes, so simply click on the last step to see the final result. if the file path you have provided as the default value of parameter be wrong you will see an error, otherwise you will see the data of that particular file. Now let’s create the function;

Right click on the query that we just changed the source of it (fitbit_export_201705), and select Create Function.

Set the function name as ProcessFile, and leave the parameter as is.

Because the query used parameter as a source so it can be simply used to create a function. Now You will see a function listed in Queries pane as well.

Note that the function uses M (Power Query Formula language) with Lambda syntax. But we have created it all just with graphical user interface option. If you are interested to learn more about code side of it, read this post. This function now has all the steps generated in the first query. To see the steps you can simply click on Advanced Editor while you have ProcessFile function selected in Queries pane.

Don’t be afraid of this code! you don’t need to change anything here. It was just for letting you know that we have same steps copied into the function.

Now you can remove the first query, we don’t need that anymore, steps are already in the custom function.

Get Data From Folder

Now that we have the function ready, let’s get list of files and apply it on all files. Get Data from a New Source, and More.

Choose Folder this time;

And select the folder which contains all CSV files;

After clicking OK here and also on the preview, you will see list of all files in this folder with their details such as file name, extension, date created…. and folder path. You also see a column as Content, which is the Binary format of this files (the actual data in each file), I will talk about this column more in second approach.

For this approach we just need a full file path which we can generate from Folder Path and Name , so only select these two columns and remove everything else.

Now let’s concatenate these two and create a full file path, Select Folder Path first, and then hold ctrl key and select Name column then. Go to Transform Tab and Choose Merge Columns

Name the new concatenated column as FullFilePath, You don’t need to put a separator for it, because Folder path already has an ending backslash in the string.

This will replace existing two columns with the new FullFilePath concatenated column;

Call Custom Function For Each File

Now we have everything ready for the custom function to be called for every record of this table. Go to Add Column tab, and click on Invoke Custom Function;

In the Invoke Custom function dialog box, select the function as ProcessFile, and the input parameter this time comes from the FullFilePath column that we created few minutes ago. Name this column as Content.

After clicking on OK, in an instant, you will see the new column appears which has a Table in each cell. This is the data table processed for each file. If you click on a blank area of one of table’s cells you will see the data preview of that in a details view pane underneath preview pane.

We don’t need FullFilePath column anymore, so Remove that column. Now in the table we have just one column, which is a structured column. Means it has a structured value in each cell (in this case a table in each cell). We can now expand this structured column to all underlying columns with clicking on Expand button. Also remember to uncheck the box for Use Original Column Name as prefix.

This expand button (or you might call it Magic button now, as it reveals the magic!) combines all data tables into a single big data table, including data rows from all CSV files.

All we need to do now is some data type changing;

Data Type Conversion

Change data type of all columns except Date and Distance to Whole Number. Change data type of Distance column to Decimal. and Finally change data type Date field to Date. For the data type of Date field to be changed you need to choose locale. because the exported files has date values in New Zealand Date format, and if you change it with normal data type conversion, Power Query try to convert their data type based on your local system date format, which might not be New Zealand’s format. So right click on Date Column. under Data Type choose Using Locale.

In the new dialog box, choose the data type to convert to as Date, and set locale as English New Zealand

After clicking on OK you will see data types converted correctly without any error. As the final step now change the query name to Tracking Data;

Notice that Date column showed in above screenshot is converted to a Date format (you can see the date format icon in the column header), but it shows in this window to me in my computer locale format which is English (United States). The way that it shows doesn’t matter. All matters is that this is of Date format.

Build Sample Report

Now let’s build a very simple Report with the data. Close and Apply Power Query window, and in the Report pane, add Steps as Total value in a Card Visualization. Also in the formatting section set the Format of data label to display as None (means without any millions, or K, or anything like that).

The other visualization to add is a Column Chart, which Steps as Value, Date as Axis. It it will automatically brings Date Hierarchy, So remove Quarter and Day from the hierarchy.

After removing Quarter and Day, click on Expand all down one level in the hierarchy in the top left corner of the column chart (screenshot above numbered 2);

Also create a measure to show the last date. Go to Modeling tab, New Measure, and use LastDate function to get the latest date of the date field in the Tracking Data table;

Last Date Processed = FORMAT( LASTDATE('Tracking Data'[Date]) , "YYYY-MM-DD")

Show this new measure as a Card visual in the report.

We can make this report much richer with adding more insights and visualizations, but let’s keep that simple for now. Save the report and Publish. You can save it with a name like Fitbit Custom Function Method.

Set up Gateway

Because we want the report to be refreshed from on-premises folder we need to set up a gateway. Both Personal and On-Premises gateways can do this. Explanation of how to install and configure gateway is out of scope of this post, If I want to explain that I will make this post VERY LONG! If you want to learn more about gateways read this post about Personal gateway, or this one about On-Premises Gateway. I explain everything from after successful setup of gateway here, where we need to add a new data source.

New Data Source Configuration

We have to create a data source under the gateway for our local folder containing CSV files. Go to Manage Gateways in Power BI Service

Now select your gateway, and add a new data source under it.

Set up the new data source for Folder and set the local folder path there;

Note that the local path should be accessible from the machine that has gateway installed on it. otherwise it should be a shared path. The windows username used here also should be for an account that has read access on the folder we are trying to access.

After successful setup you will see a message clarifying that;

Scheduled Refresh

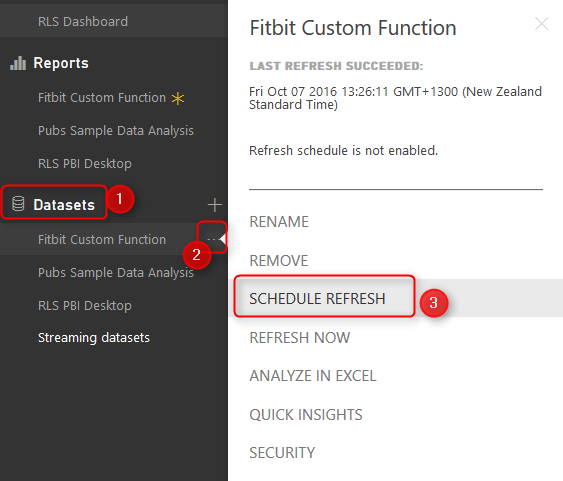

We’ve published the report earlier. Now it’s the time to Schedule it for refresh, and configure it to get its data from gateway. Under Datasets find the Fitbit Custom Function dataset, and click on ellipsis button and choose Schedule Refresh.

In the Schedule Refresh Setting section, You will see an error message that says you can not configure gateway for this data set. That’s because of custom function used here. (Note that this happens at the time of writing this blog post, It might be resolved by the time that you are reading this post)

The error message is: You can’t schedule refresh for this dataset because one or more sources currently don’t support refresh.

Now you see the problem, It was a long way to show you the issue! but you need to know it before using many custom functions in your code. Let’s go through the second approach to resolve it.

Second Approach: Combine Files

This approach has some limitations and difficulties involved, However at the time of writing this post, this is the only way of getting the scheduled refresh working.

Get Data From Folder

With this method we start with getting data from Folder. Open a new Power BI Desktop solution, and Get Data From Folder, exactly as you’ve seen in the Get Data From Folder section earlier in this post.

This time however we don’t use custom functions, we want to use binary data of the file content. Content column includes the Binary Data. Content column is all we need, so remove all other columns.

If you click on a blank area in any Binary cell in the content column, you will see the CSV file in preview pane under it.

Combine Binaries

This Content column is a structured column, and any structured column can be expanded. Expanding a structured column with Binary values Will combine them together. Click on Combine Binaries button on the top right hand side of the column.

Combine Binaries will combine content of all files together, and will generate a big list of all data rows. You can also see automatic steps applied after combining binaries in the steps pane.

Now that we have all data rows. Let’s do some cleanup;

As you can see in the preview the first record isn’t useful. it has just a title “Acitivities”, so let’s remove it with going to Remove Rows, Remove Top Rows. and enter 1 in the dialog box;

Now the first row only contains column headers. So let’s promote it to column headers with clicking on Use First Row As Headers. There are also some blank rows, so let’s remove them with Remove Rows -> Remove Blank Rows.

There are still some additional rows with “Activities” or “Date” labels in their first column. These are heading rows in each CSV file. After combining content of all files these appears in the middle of the data set.

We can remove them by applying a filter on Date column. Click on Filter icon for Date column and from Text Filters choose Does Not Equal.

Choose Does Not Equal, and with an AND condition type in both Activities and Date there.

This will filter all extra labeled rows, and you will only see actual data rows.

Now Apply Same data type conversion steps and build the same report as we’ve done earlier. So continue again from Step Data Type Conversion.

Apply the same steps from Step Data Type Conversion, and Build the Sample Report till saving the report.

Save this Power BI file as Fitbit Combine Binaries. and Publish.

Schedule Refresh

If you have already set up the Data Source for this folder, you don’t need to do it again. Just go directly to Schedule Refresh under Fitbit Combine Binaries dataset.

This time in Schedule Refresh setting you won’t see any error, and you can simply choose the Gateway and apply. Because we haven’t used any custom functions here, so no error message anymore. All good to go.

Under this section there is another section that you can Schedule report to be refreshed, daily or weekly depends on how you want it.

Testing Automatic Refresh

Now let’s test the refresh. Download this new CSV file and paste it in the same folder of other CSV files (the folder that you’ve set up for gateway as a data source); fitbit_export_201605

Refresh the dataset with Refresh Now (Or wait untill schedule refresh happens! I’m not that patient, so I’ll do refresh now 🙂 )

If there be no errors in connection and gateway setup and access to the source folder, then refresh finish successfully. Go to report and refresh the report to see the new data.

You will notice that Data of month May is added. Total number of steps is now over 4 million, and last processed Date is 31st of May 2016.

Congratulations, You have done the example successfully, and much harder; You have finished this very long post 🙂

Which Method is Best?

If the Gateway issue with custom functions resolves, I’d say first method with custom function is definitely the best method. Because you have vast flexibility with custom function. Combine Binaries doesn’t always produce an easy output, with some excel files it might generate some data rows which needs many more steps for cleansing the final data. But for now that gateway has this issue with custom functions, the only possible way is Combine Binaries.

Great work, this will save me huge amounts of time when it comes to delivering reports!

I do have one question: The resulting data set after merging all the files does, as far as I can see, not have any reference to the original file. I need a column in the dataset that would contain the filename of the original csv-file. I would believe that I could extract the filename in some of the first steps in this guide, and add it to a new column. Then when the function is called, It would do that for every single file.

I can however not seem to figure out how to do this. I tried the Csv.Document-function, but it seem to deliver only the content rather than the filename I’m after. Is there any way to do this?

Hi Jan,

You can use the FileName field for that. before combining files with each other; keep File Name, and the file content columns, then expand/combine.

Cheers

Reza

Hi Reza,

first Reply didn’t go through…

Thank you so much for this post, solved a huge problem getting data from our WAGO SPS controllers (energy monitoring).

Do you have any other examples for the use of parameters/functions? I know there is a lot of potential in these two, but I didn’t finally understand how to use it.

I’m always looking forward for your newsletters, they are a source of useful knowledge for me!

Thanks and cheers from Germanym,

Sebastian

Hi Sebastian.

Thanks for your comment 🙂

Yes, I have written another blog post about Parameters and custom functions. here it is

Cheers

Reza