Power BI can connect to many data sources as you know, and Spark on Azure HDInsight is one of them. In area of working with Big Data applications you would probably hear names such as Hadoop, HDInsight, Spark, Storm, Data Lake and many other names. Spark and Hadoop are both frameworks to work with big data, they have some differences though. In this post I’ll show you how you can use Power BI (either Power BI Desktop or Power BI website) to connect to a sample of Spark that we built on an Azure HDInsight service. by completing this section you will be able to create simple spark on Azure HDInsight, and run few Python scripts from Jupyter on it to load a sample table into Spark, and finally use Power BI to connect to Spark server, load, and visualize the data.

This post is a section of Power BI from Rookie to Rock Star online book.

Prerequisite

- For running sample mentioned in this section you need to have an Azure subscription. (You can apply for a free trial as well)

- You need to install Microsoft Spark ODBC Driver on your machine (https://go.microsoft.com/fwlink/?LinkId=616229). This is for connecting from Power BI Desktop.

- For some features of Spark Connection through Power BI website you’d need Power BI Pro account (You can apply for a trial).

A Bit of Introduction

What is Spark? What is HDInsight?

I’ve spoken about Power BI well enough in previous chapters. However you might be new to big data names such as Spark and HDInsight, and would like to know what these are? I say it in the most simple method; HDInsight is the framework for running big data, you can have your big data storage as a distributed storage for massive availability. Spark on the other hand is another big data framework that works great for analytics and big data operation, however it doesn’t work with distributed files. So the real world big data solution has most of the time Spark on top of an HDFS (Hadoop Distributed File System) or Azure HDInsight to gain both scalability and powerful analytics. Spark is an Apache application, and often called as Apache Spark. However Spark can be installed on both Windows and Linux servers. In this sample for simplicity we will use Spark on Linux server.

What is Jupyter?

Jupyter is a live notepad engine for writing and running codes such as Python (it supports mote than 40 languages!) on servers (such as Spark server).

Setup and Configure Spark on Azure HDInsight

Let’s start building the first Spark on Azure HDInsight. You can skip this part if you have an Spark on Azure HDInsight already.

- Login to Azure Portal through https://portal.azure.com.

- Go to New, and then under Data + Analytics choose HDInsight

- In the New HDInsight Cluster definition section set a name for cluster (my sample is mysparkcluster), then set Cluster Type as Spark (it is still in preview stage), then Cluster Operating System as Linux. When you choose Linux it will install on Ubuntu 14.04 LTS.

- Choose one of your subscriptions for Azure (If you have more than one), then assign a resource group. You can choose one of existing resource groups or create a new one.

- Set the Credentials for Spark cluster. set a password for admin user. Then write a SSH username and password. SSH username and password used to connect to spark cluster through command line. In this example we won’t use command line. There is also a limitation on some reserved SSH user names that you cannot use.

- Set Data Source. You can choose an existing storage account, or create a new one. and set the container and location.

- Choose Node Pricing Tiers. In this example because we are not processing large amount of data I’ll do it with couple of nodes. You can see how much this cluster costs you per hour based on your selection of nodes and pricing tier for worker nodes and head node.

- Now Create the HDInsight Cluster.

- It will take about 15 minutes for the Cluster to be created and visible in your Microsoft Azure dashboard.

- Now you have your Spark on Azure HDInsight ready for the next step.

Setup the Sample Table for Spark on Azure HDInsight

In this section we will load a sample data into a table (inside our Spark on Azure HDInsight cluster) using some codes that we run from Jupyter.

- Open Jupyter for your Spark on Azure HDInsight with going to https://<your spark cluster name>.azurehdinsight.net/jupyter

- You will be asked for username and password. Enter the admin username and password we’ve created in previous section.

- Click on New and choose a Python 2 type notebook.

- You can name the document something meaningful and then copy and paste code below there into a cell

-

from pyspark import SparkContext from pyspark.sql import * from pyspark.sql import HiveContext from pyspark.sql import Row # Create Spark and Hive contexts sc = SparkContext('yarn-client') hiveCtx = HiveContext(sc) - Then press Shift+Enter (to run the code and go to the next cell)

- You will see the Jupyter icon showing that the Kernel is busy after running commands.

- Wait for the command to run, and Kernel back to idle state.

- Now add script below in the second cell;

-

# Create an RDD from sample data hvacText = sc.textFile("wasb:///HdiSamples/HdiSamples/SensorSampleData/hvac/HVAC.csv") # Create a schema for our data Entry = Row('Date', 'Time', 'TargetTemp', 'ActualTemp', 'BuildingID') # Parse the data and create a schema hvacParts = hvacText.map(lambda s: s.split(',')).filter(lambda s: s[0] != 'Date') hvac = hvacParts.map(lambda p: Entry(str(p[0]), str(p[1]), int(p[2]), int(p[3]), int(p[6]))) # Infer the schema and create a table hvacTable = hiveCtx.createDataFrame(hvac) hvacTable.registerTempTable('hvactemptable') dfw = DataFrameWriter(hvacTable) dfw.saveAsTable('hvac')Commands above will populate a hive table called hvac from a CSV sample file located on the server.

- After pressing Shift+Enter, and waiting for the kernel to go back to idle mode. you can query all hive tables with below command;

-

hiveCtx.sql("SHOW TABLES").show() - after Shift+Enter you will see the result below;

-

- You can even query hvac table to see the data;

-

hiveCtx.sql("SELECT * FROM hvac LIMIT 10").show() - and the result will be;

-

Now we have table with the data ready for Power BI to work with, Let’s go to the next step.

Connection from Power BI Desktop to Spark on Azure HDInsight

As I said in the very first part this tutorial, you need to install Microsoft Spark ODBC driver to be able to connect from Power BI Desktop to Spark on Azure HDInsight.

- Open Power BI Desktop and go to Get Data Section.

- Choose Azure, and then Azure HDInsight Spark.

- Enter the spark cluster URL as https://<your spark cluster name/azurehdinsight.net

- If you don’t have Microsoft Spark ODBC Driver installed you will see an error as below;

- If you have driver installed you will be asked for the username and password (the admin user that we’ve created for Spark on Azure HDInsight before)

- After successful connection you will see list of tables. Choose hvac simply and then Load.

- That was really simple from Power BI point of view, wasn’t it?!

- Now you can visualize it as you want! for example I’ve created an Area Chart with Time as axis, and average of ActualTemp and average of TargetTemp as values. I’ve changed color of data values as below.

- I’ve also added a slicer (horizontal) for the BuildingID.

- And here is the result.

You can simply publish your report to Power BI website. Let’s now see how you can connect to Spark from Power BI website itself.

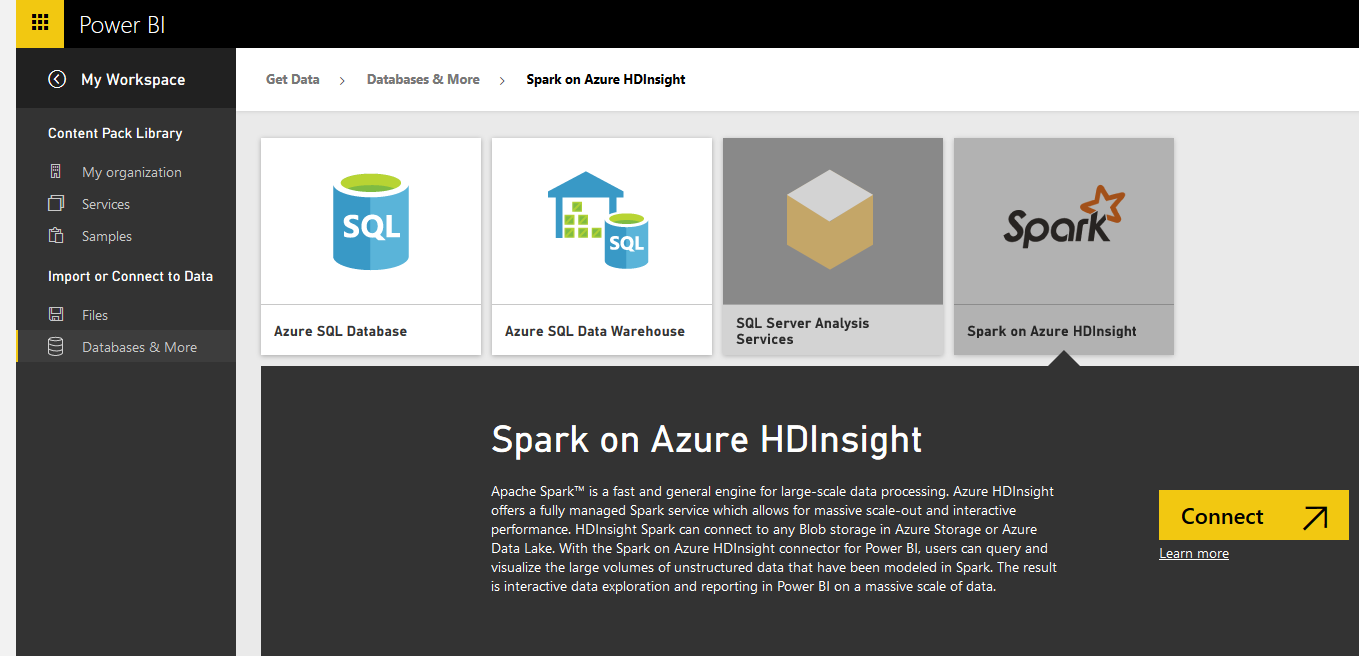

Connection from Power BI website to Spark on Azure HDInsight

- Login to Power BI website and Get Data from Databases, and Choose Spark on Azure HDInsight as the source.

- Type in the server name as https://<your spark cluster name>.azurehdinsight.net and then connect

- Sign in to the server with user name and password

- Now you can build the report you want from the hvac table.

References;

https://powerbi.microsoft.com/en-us/documentation/powerbi-spark-on-hdinsight-with-direct-connect/

https://azure.microsoft.com/en-us/documentation/articles/hdinsight-apache-spark-use-bi-tools/

https://azure.microsoft.com/en-us/documentation/articles/hdinsight-apache-spark-jupyter-spark-sql/

Hi Reza

Thanks for informative article.

Need help as I can’t see “Python 2” option while performing step 4 of “Setup the Sample Table for Spark on Azure HDInsight” section. Appreciate your feedback.

Thanks.

Hi Salman,

What do you see there? any other Python options?

Cheers,

Reza

Hi Reza,

interesting article indeed.

Have you tried Power BI on Spark with somewhat larger datasets?

It seems as the dataset does not to have be very large to make Power BI to choke. I’ve uploaded a rather small file of 50 Mb, about one million rows and Power BI could not handle it. I’ve tested with Tableau and it worked, even with datasets much larger than that.

So I wonder if you have any experience with reporting on larger datasets and Power BI?

Hi Artur,

Power BI works with much larger files, I have worked with files in GB size myself. Not Spark sourced, but from other sources. For Spark I don’t think there be any limitations. if the size is much larger then you can use Live/DirectQuery options.

Cheers,

Reza

Have you experienced the ambiguous error “unable to establish connection” when using the PowerBI service (PowerBI.com) to connect to the cluster? I don’t understand what could be causing the connection to fail – our cluster isn’t behind any custom firewall, all ports are open, authentication is “basic” … Have any ideas?

Austin

Hi Austin,

I haven’t received such error. But first thing I’d check with this is to try some other tools to see if the connection to the cluster is issue or the network/ proxy? or something on Power BI side. I believe it should be outside of Power BI.

Cheers

Reza