I work with Power Query transformations every day these days, and I want to share one simple, but critical caution with you. If you use Power Query a lot, this tip can improve the performance of your transformation significantly. The number of steps that you add in a query counts in the performance of your data transformation (if you have too many steps), I like to show this to you through an example. If you like to learn more about Power BI and Power Query, read the Power BI from Rookie to Rock Star book.

Too Many Variables

This is a sample Power Query file, which in that I do a very simple transformation. The transformation is adding one to the existing number. However, in this sample, we are doing it over thousands of steps! one step at a time, we are adding thousands to a number. The main reason to do it this way is to show you what is the performance you get when you have too many variables (or let’s say steps) in Power Query.

Here is my sample query:

let

a0= 0,

a1=a0+1,

a2=a1+1,

a3=a2+1,

... //

... // each variable used in the next variable with a plus one

... //

a1808=a1807+1,

a1809=a1808+1,

a1810=a1809+1,

a1811=a1810+1,

a1812=a1811+1

in

a1812

The query above takes 15 minutes to run on my Surface Book 2 machine with Core i7 CPU and 16GB memory! The 15 minutes that you cannot touch Power BI through it. it will not respond to your actions, you have to wait for that time to see the result after that.

Oh! That is a really long time for a query that just adds one value in every step, Isn’t it? Let’s see how the resources consumption is in the system with Power BI Desktop and Power Query running. here it is only halfway through:

Uh! Even with a simple calculation like adding one to a number I had over 10GB memory consumption and 70% CPU usage for a long period! What do you think caused it? The number of variables of course. There is nothing else in this query.

Having too many variables, or too many steps, cause performance issues. Power Query allocates memory for every variable, and memory consumption raises significantly. The processor also takes lots of time to process that number of variables through the Power Query Engine.

Of course, this example was an exaggerated example of too many variables. You would never have 1,800 variables. However, this example also was on a variable with a single simple numeric value. In most of the cases, your variables are tables with many data rows and columns. So this can happen in a real-world scenario for you even with hundreds of steps or variables. You need to watch the number of variables in other words.

Too Many Variables will cause performance issue!

What is the Solution?

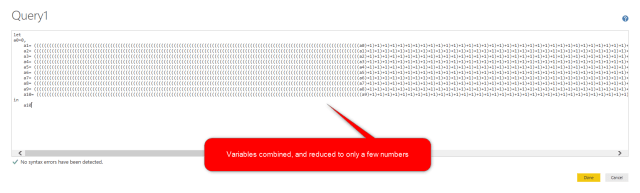

Well, if the number of variables is the cause of the issue, the remedy would be reducing the number of variables in the first place. If I change the query above to this one below, I get the result in less than a second!

The query above runs in less than a second, vs the previous query that took 15 minutes to run! The only difference is the number of variables. So here is the take away for this post:

Watch the number of variables (steps) in Power Query, If too many variables, then best to combine some of them

Important Note: I am not saying that you have to combine all your variables. You will not usually see performance issues with less than a hundred variables, this problem starts to appear when the number goes beyond that. One critical point you need to consider is that if you combine variables together, then debugging or troubleshooting or maintenance of the code would be harder as well. so use this solution only if needed.

Is the 100-variable threshold tip per query or per file?

per query. and not specifically 100+ more variables the slower it will become

Cheers

Reza