Almost any software application benefits from multiple environments, and separating the user’s environment from the developer’s environment is the most common example. Power BI is no exception: having separate environments for different types of users helps in many ways. In this article, I’ll explain how Power BI handles this using Deployment Pipelines.

Why multiple environments?

Before discussing Deployment Pipelines, it is important to understand why you need multiple environments in a Power BI implementation. The best way to understand it is to go through a sample scenario:

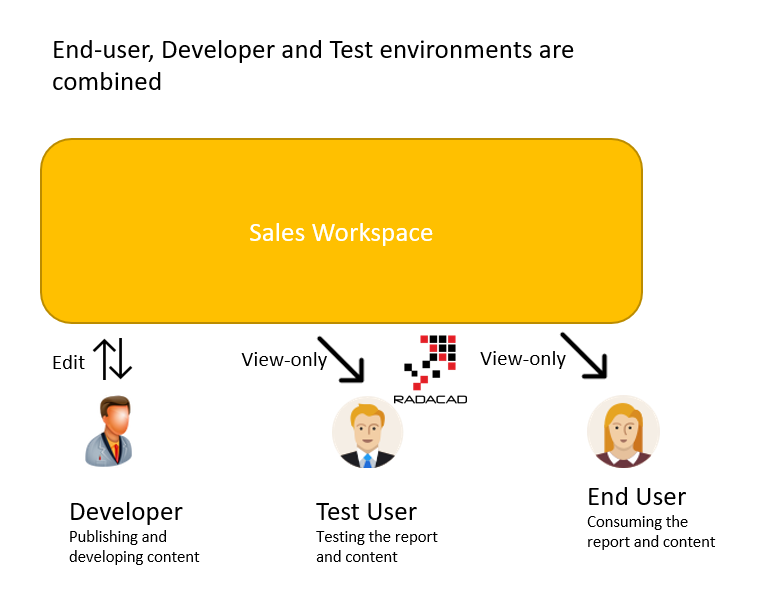

Jack is a Power BI Developer who is part of the Data Analytics team in his organization. The Data Analytics team has created a workspace for Sales reports and asked Jack to publish his reports, datasets, and dataflows in that workspace. Others in the Data Analytics team also have edit access to that workspace. Some test users use the same workspace to check the reports and the results. The end users also connect to the content through the same workspace. The structure looks something like this:

The big problem with the above structure is that as soon as the development team applies a change, both the test users and the end users are affected, even if the change is not final yet. This can frustrate the end users and make them lose trust in the reports. To separate the end-user experience, the Analytics team can use a Power BI App. Here is the scenario with the App:

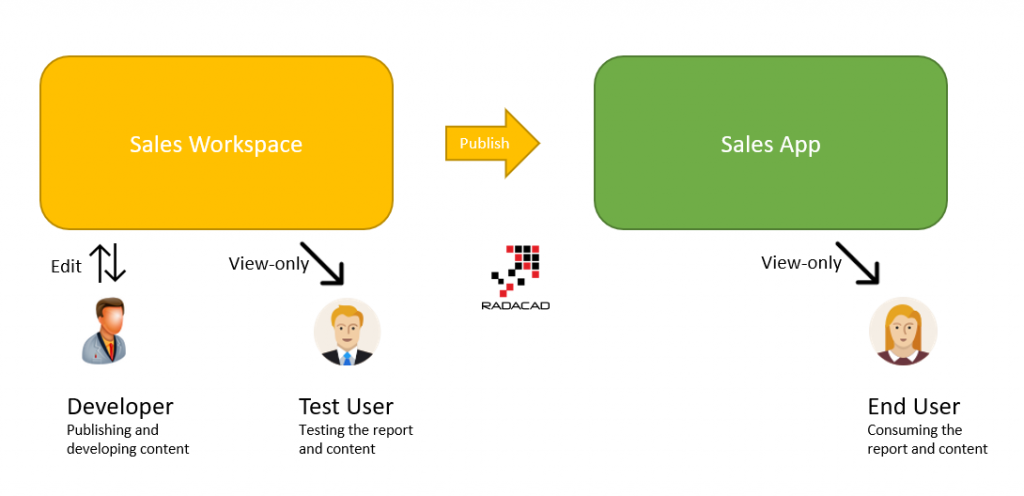

The workspace setup is unchanged: Jack publishes his reports, datasets, and dataflows in the Sales workspace, others in the Data Analytics team have edit access to it, and test users check the reports and the results there. The only difference is that the end users now connect through an App instead of the workspace. The structure looks something like this:

The environment above uses one workspace shared between the developers (Jack and the Data Analytics team) and the test users. It then uses an App on top of that workspace for end users. In this scenario, the developers and end users are separated, but the developers are still not separated from the test users. What if the developer is still trying out some features that are not ready for testing? The test users would be testing content that keeps changing, getting different outputs each time, and getting confused by the test process.

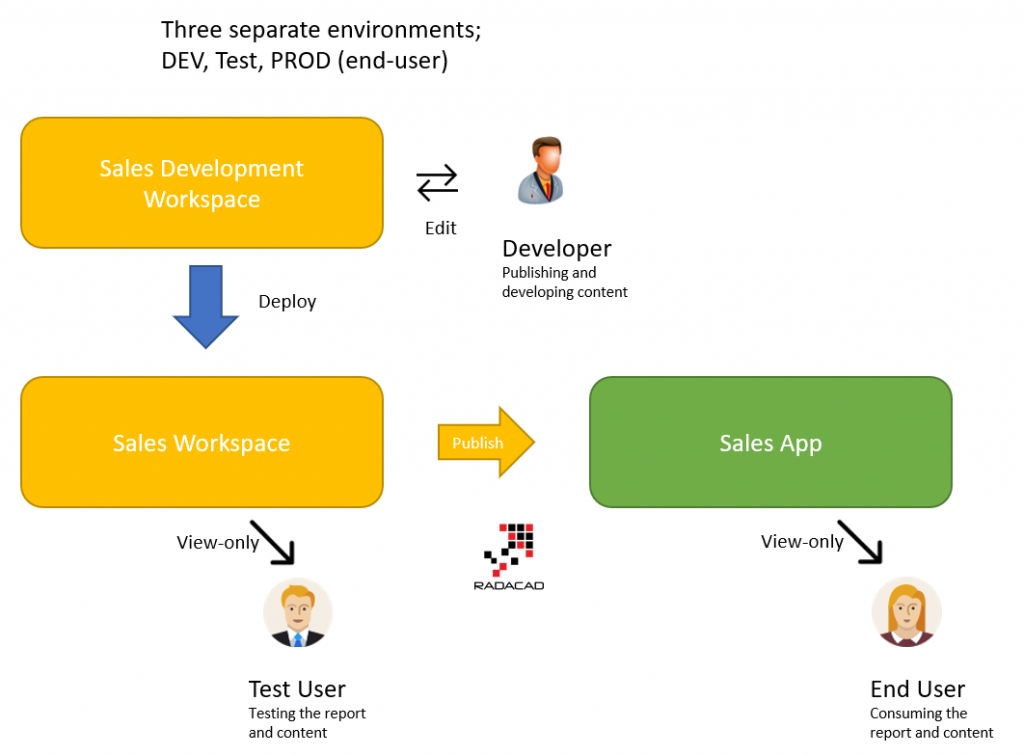

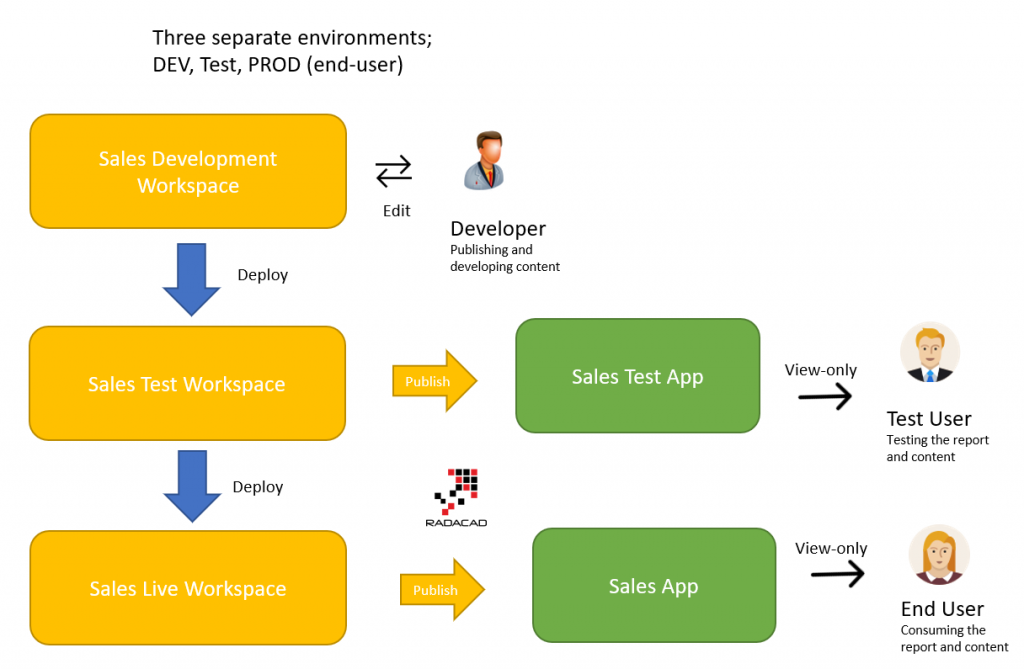

The Developer and Test environments can be separated by using another workspace. Here is the new structure:

The structure above is much better. When the developer makes changes, the test users are not affected. The development team does all its work in its own workspace and, when ready, deploys (or copies) the content into the Sales workspace. In that Sales workspace, the test users can test the content before it is published to the end users.

Although the structure above might look perfect, it still has problems. What if the structure uses a shared dataset? In that case, the reports shown in the Sales App are connected to the dataset in the workspace, so when the dataset is updated, the reports may show the new results (which might not be correct because the test users haven’t tested them yet). So the environment can change to something like this:

The structure above gives the Test environment its own workspace and app, and the Live (or Production or Prod) environment its own workspace and app. This minimizes the risk of unwanted changes appearing in other environments. Isolating each environment in this way makes each one easier to change.

This is why having multiple environments in any software application system is recommended. There are different types of users, and separating their environment would make the change process more reliable and, as a result, lead to better adoption of that software system in the organization.

How many environments?

When I explain the structure of multiple environments, I often get the question: do I always need three environments, or more, or fewer? The answer is that it depends on your solution architecture. Sometimes you may be fine with just two environments, Dev and Live (where the Live workspace is used by the test users). Sometimes you may need four or five environments (depending on the roles and levels of test users in your organization).

The right environment setup has to be chosen carefully for every organization, based on the Power BI solution architecture, the use of shared components such as dataflows, datasets, and datamarts, the culture of data users in the organization, the self-service usage of the content, and many other factors.

What is a Deployment Pipeline?

Now that I have explained why we need multiple environments, it is the right time to discuss Deployment Pipelines in Power BI.

When you set up multiple environments, you need a mechanism to know which environment is which (they may not always be called Test, DEV, PROD, etc.). You also need a process to copy the content from one environment to another (for example, from Dev to Test). You need a process to compare the content in one environment with another, look for changes, and deploy only the changes (for example, comparing the Test and Live environments to see which reports have changed). You may need to change some connections during this process (for example, connect the Test dataset to the test data source and the Live dataset to the live data source). Many more requirements come with having multiple environments. In other words, you need a tool for managing the deployment between multiple environments. This is where the Deployment Pipeline helps.

The Deployment Pipeline is a component of the Power BI Service that enables you to manage multiple environments: define each environment, assign workspaces to the environments, compare the contents of two environments, deploy the changes, keep a history of deployments, roll back a change if needed, change the connections during the deployment process, and more.

If you manage multiple environments in Power BI, Deployment Pipelines are a big help. This is a tool for the deployment team (which is usually a small part of the Data Analytics team in the organization). The Deployment Pipeline is not an end-user feature, but it affects how end users adopt Power BI in the organization.

The Deployment Pipeline is a Premium feature in Power BI. This means the workspaces must be part of a Premium capacity, or you need Premium Per User accounts, to use it.

How does the Deployment Pipeline work?

Let’s now dive into the experience of the deployment pipeline in Power BI and how it works.

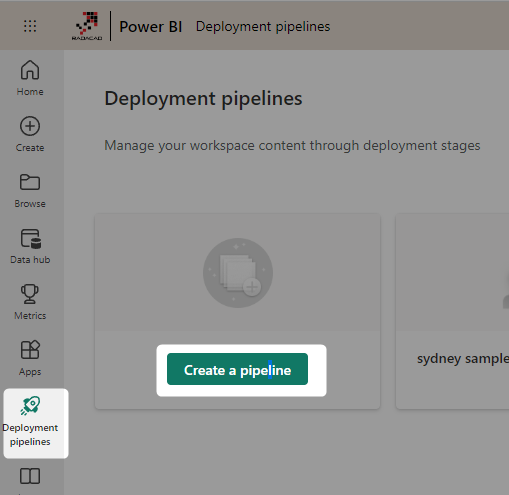

Creating deployment pipelines

To create a deployment pipeline, log in to the Power BI service and choose the option to create a pipeline.

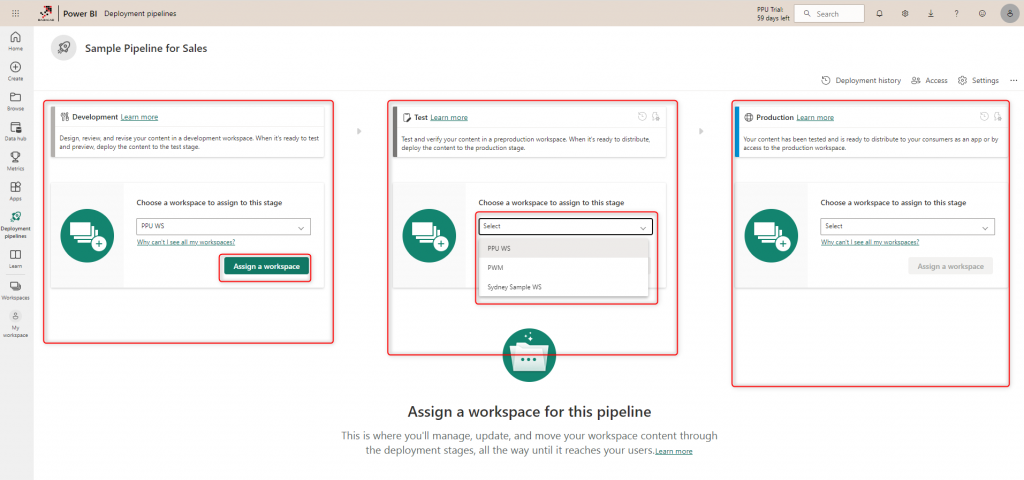

Set a name and description for the pipeline. The next step is to assign workspaces to each environment.

Assign workspace

A deployment pipeline in Power BI comes with three environments only: Development, Test, and Production. You can assign workspaces to these environments by selecting them in the dropdown.

If you don’t see the workspace in the dropdown, it might be because you don’t have a Premium workspace created yet, or because the workspace is already assigned to another deployment pipeline. Remember that one workspace can be used in only one deployment pipeline.

Compare content

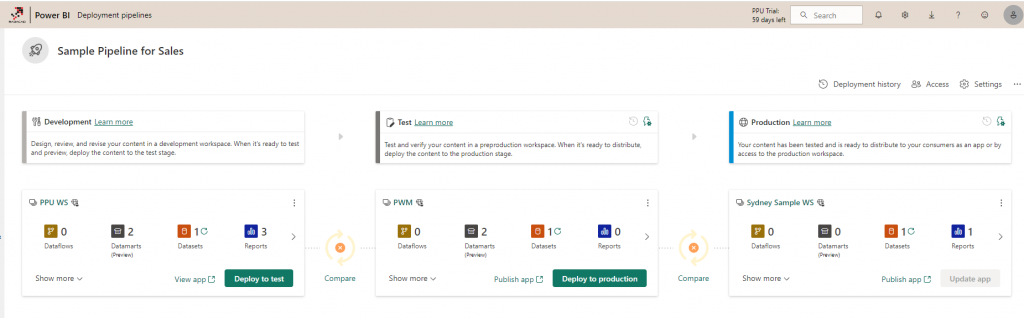

Once the workspaces are assigned, the deployment pipeline summarizes the content in each environment.

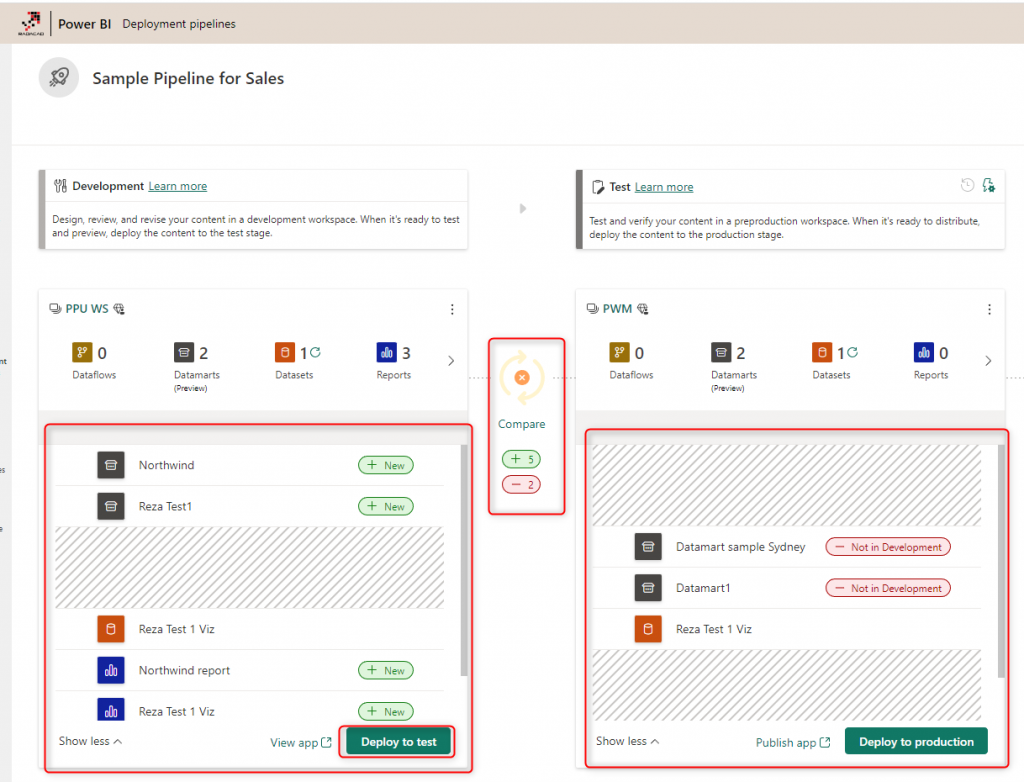

You can then compare two of the environments using the Compare action. The Compare process will give you a detailed output of which content items need adding or updating etc.
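To make the idea of a comparison concrete, here is a minimal sketch of how the content of two environments could be classified. It is not how the Power BI service implements Compare; the `Item` shape, the `fingerprint` field, and the category names are assumptions made up for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """One content item in an environment (hypothetical shape, not the service's schema)."""
    kind: str         # e.g. "report", "dataset", "dataflow"
    name: str
    fingerprint: str  # any value that changes when the item changes, e.g. a hash


def compare_stages(source, target):
    """Classify source items against the target environment by (kind, name)."""
    target_by_key = {(item.kind, item.name): item for item in target}
    source_keys = {(item.kind, item.name) for item in source}
    result = {"new": [], "different": [], "same": [], "missing_from_source": []}
    for item in source:
        match = target_by_key.get((item.kind, item.name))
        if match is None:
            result["new"].append(item.name)          # would be added by a deployment
        elif match.fingerprint != item.fingerprint:
            result["different"].append(item.name)    # would be updated (overwritten)
        else:
            result["same"].append(item.name)         # nothing to deploy
    for key, item in target_by_key.items():
        if key not in source_keys:
            result["missing_from_source"].append(item.name)
    return result


# Made-up sample content for a Development and a Test environment.
dev = [
    Item("report", "Sales Overview", "v2"),
    Item("dataset", "Sales", "v1"),
    Item("report", "Returns", "v1"),
]
test = [
    Item("report", "Sales Overview", "v1"),
    Item("dataset", "Sales", "v1"),
    Item("report", "Old Report", "v1"),
]
diff = compare_stages(dev, test)
```

In this sample, "Returns" is new, "Sales Overview" is different, "Sales" is unchanged, and "Old Report" exists only in the target.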

After the comparison, you can choose to Deploy. If you have compared Development with Test, you can deploy to Test. If you have compared Test with Production, you can deploy to Production.

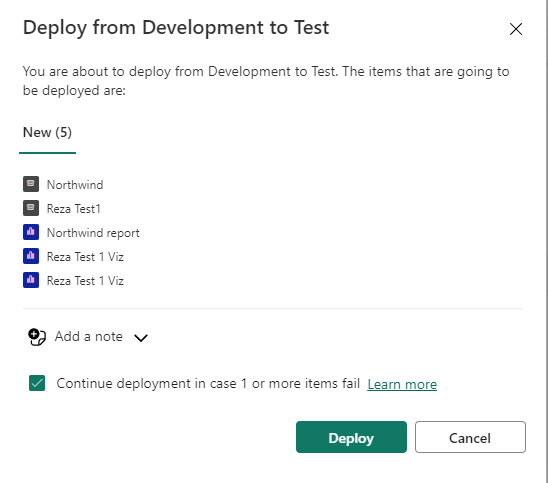

Deploy

The Deploy process is simple to use. You can see the outcome of it as well.

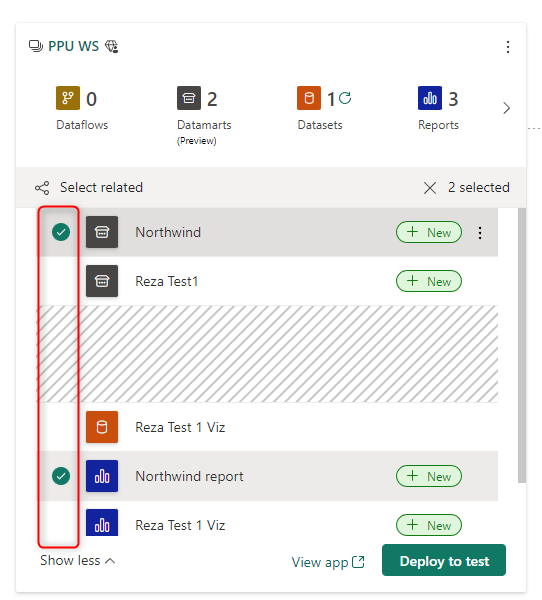

If you want only a few items deployed, select them before clicking the Deploy button.

Once the deployment is done, you will get a report of the outcome. You can also Compare the two environments after the deployment to check whether the changes were applied.

It is important to know that the deployment will OVERWRITE the destination content (if it already exists in the destination).

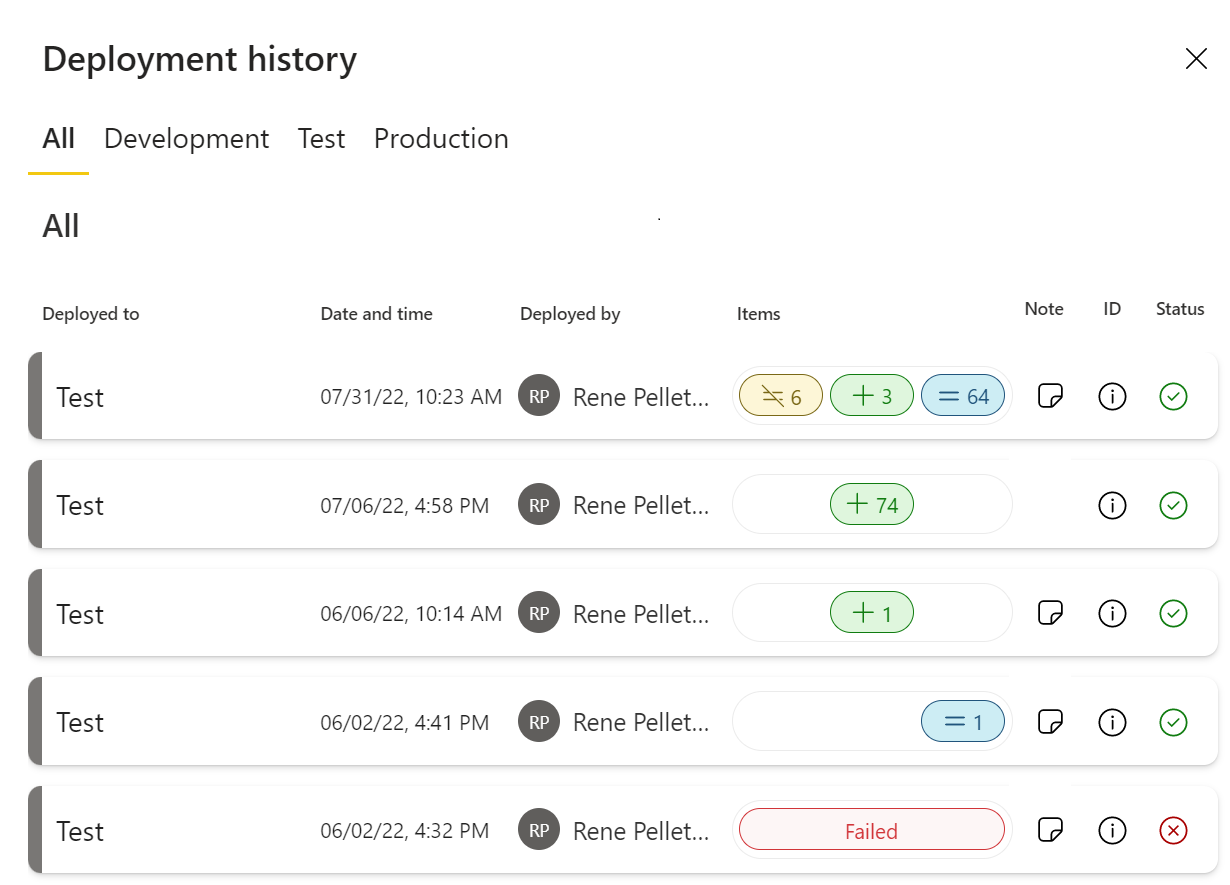

History of deployment

One of the most important things in the deployment process is the ability to see the history of deployments and roll back if needed. Fortunately, this feature exists in the deployment pipelines in Power BI.

Each entry in the history also has a detailed result, which is helpful when you need to check exactly what a deployment did.

Rules and connections

If you want to set up different connection configurations for each environment, you can do that using deployment rules. For example, you can change the connection from the dataset to the data source when you deploy it from one environment to another. This works even better if you use Power Query parameters.
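Conceptually, a deployment rule is a per-environment override that is applied to the content as it is deployed. The sketch below models that idea with Power Query parameters. It is only an illustration: real deployment rules are configured in the pipeline itself, and the rule structure, parameter names, and server names here are hypothetical.

```python
# Hypothetical model of deployment rules: per-environment overrides for
# Power Query parameter values. Names and values are made up for illustration.
DEPLOYMENT_RULES = {
    "Test": {"ServerName": "sql-test.example.com"},
    "Production": {"ServerName": "sql-prod.example.com"},
}


def apply_rules(parameters, target_stage, rules=DEPLOYMENT_RULES):
    """Return the parameter values the deployed dataset would use in target_stage."""
    overrides = rules.get(target_stage, {})
    unknown = set(overrides) - set(parameters)
    if unknown:
        # A rule that names a parameter the dataset does not have is a mistake.
        raise KeyError(f"Rule refers to unknown parameters: {sorted(unknown)}")
    # Overrides win; parameters without a rule keep their source values.
    return {**parameters, **overrides}


dev_parameters = {"ServerName": "sql-dev.example.com", "DatabaseName": "Sales"}
test_parameters = apply_rules(dev_parameters, "Test")
```

Because the data source is driven by parameters, one rule per environment is enough to repoint the dataset, and the source dataset itself is left unchanged.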

Automate the deployment

By default, a user triggers each deployment manually. However, you may want to automate that process. This can be done using the Power BI REST API. You can learn more about the Power BI REST API here.
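As a rough sketch of what such automation could look like, the snippet below builds a request for the REST API’s "Deploy All" operation on a pipeline. It only constructs the request and does not send it. The pipeline ID and access token are placeholders, acquiring a token (for example, through Azure AD) is assumed to happen elsewhere, and the options shown are one possible choice rather than a recommendation.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_deploy_all_request(pipeline_id, source_stage_order, access_token, note=""):
    """Build (but do not send) a request that deploys all content to the next stage."""
    body = {
        "sourceStageOrder": source_stage_order,  # 0 = Development, 1 = Test
        "options": {
            "allowCreateArtifact": True,      # create items missing in the target
            "allowOverwriteArtifact": True,   # overwrite items that already exist
        },
    }
    if note:
        body["note"] = note
    return urllib.request.Request(
        url=f"{API_ROOT}/pipelines/{pipeline_id}/deployAll",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values; replace with a real pipeline ID and token before sending.
request = build_deploy_all_request(
    "00000000-0000-0000-0000-000000000000",
    0,
    "<access-token>",
    note="Scheduled Dev to Test deployment",
)
# To actually trigger the deployment: urllib.request.urlopen(request)
```

A script like this could be run on a schedule or from a release tool, so that deployments no longer depend on someone clicking the Deploy button.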

What if there is no Premium license?

Deployment pipelines are a Premium feature in Power BI. If you don’t have Premium licensing and still wish to have this functionality, you must implement it on your own. Fortunately, the REST API for Power BI can help a lot. You can use it to get the content of one workspace and then compare it with another. You can use the same REST API to download the content from one workspace and then upload it to the destination workspace. You can also change the connection details of the content when publishing it. In short, it is possible to build functionality similar to the deployment pipelines using PowerShell scripts and REST API calls. It would take some time, though, and you would need to maintain the script because the REST API functions might change. It is, however, a method that doesn’t require Premium licensing.
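The download-then-upload approach can be sketched as follows. The article mentions PowerShell; Python is used here purely for illustration. The snippet only builds the REST API URLs for listing reports, exporting a report, and importing a file, and turns a list of source reports into a copy plan. The workspace IDs and the report are placeholders, the plan structure is hypothetical, and sending the requests (with authentication and the file upload itself) is left out.

```python
from urllib.parse import urlencode

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def list_reports_url(group_id):
    """URL that lists the reports in a workspace (GET)."""
    return f"{API_ROOT}/groups/{group_id}/reports"


def export_report_url(group_id, report_id):
    """URL that downloads a report as a file (GET)."""
    return f"{API_ROOT}/groups/{group_id}/reports/{report_id}/Export"


def import_url(group_id, display_name):
    """URL that uploads a file into a workspace, overwriting an existing one (POST)."""
    query = urlencode({
        "datasetDisplayName": display_name,
        "nameConflict": "CreateOrOverwrite",
    })
    return f"{API_ROOT}/groups/{group_id}/imports?{query}"


def plan_copy(source_group, target_group, source_reports):
    """Turn the report list of the source workspace into download/upload steps.

    source_reports is expected to look like the "value" list returned by the
    list-reports call: dictionaries with at least "id" and "name".
    """
    return [
        {
            "download": export_report_url(source_group, report["id"]),
            "upload": import_url(target_group, report["name"] + ".pbix"),
        }
        for report in source_reports
    ]


# Placeholder workspace IDs and a made-up report.
plan = plan_copy("dev-ws", "test-ws", [{"id": "r1", "name": "Sales Overview"}])
```

A real script would call `list_reports_url` for both workspaces first, compare the results, and only plan a copy for the reports that are new or changed.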

Summary

Having multiple environments is a very important part of a Power BI implementation and helps the adoption of Power BI in the organization. You can create separate environments and manage the deployment process between them using Deployment Pipelines in Power BI. Deployment Pipelines are a Premium feature, but they provide many great capabilities that make them a very helpful tool for deployment.

Hi Reza,

Great article and solves a big need on my end.

One question. There seem to be only three available environments (Development, Test, and Production). What if we need additional environments such as Pre-Production or Archive?

Will I need to resort to the REST API?

Thanks,

Jim

Hi Jim

Thanks

Yes, for a customized scenario like that I suggest using the REST API, because the Deployment Pipeline UI won’t support more than 3 environments.

Cheers

Reza

Super helpful, as always. Thanks.