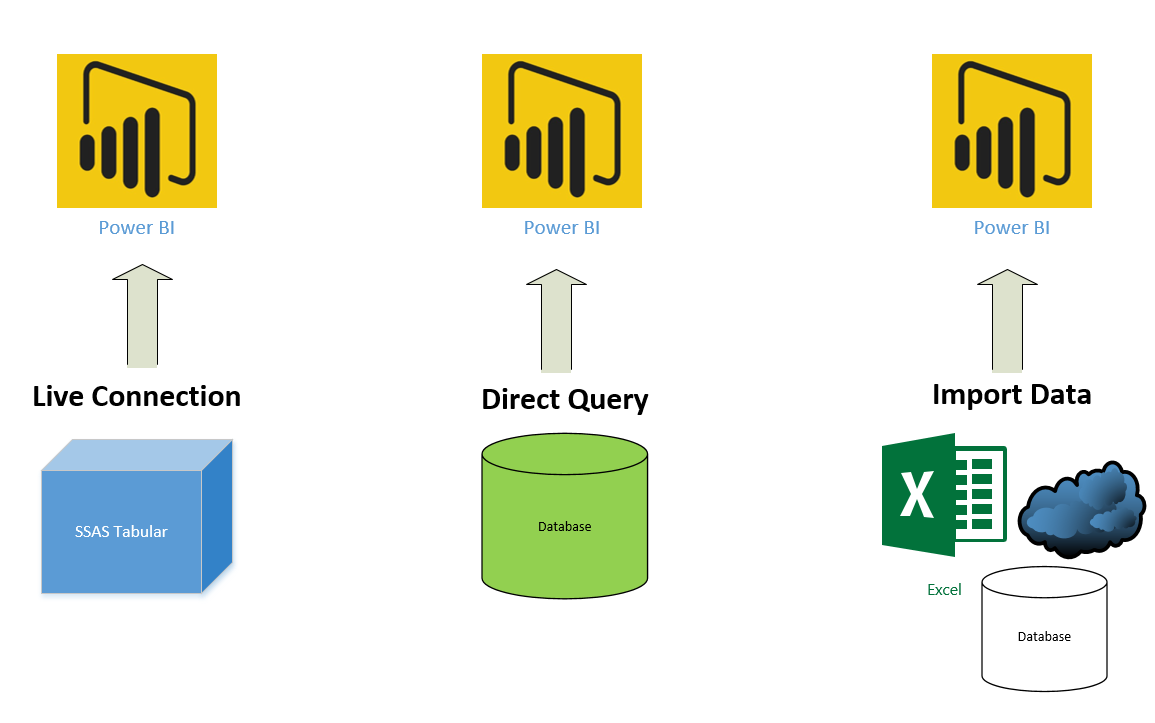

Power BI supports different methods for connecting data. That is why the decision to choose the right method is always a tough decision. You have heard of DirectQuery, Live Connection, Import Data, and there is also a Composite Model. What do these actually mean? and what is the situation in which you should choose one over the other one? What are the pros and cons of each? What are the best practices to choose each? Which one is faster? which one is more flexible? Is DirectQuery and Live Connection actually one thing? or two separate methods? And many other questions. I always get a lot of questions like this in my courses, conference talks, and blog posts. In this post, you are going to get the answer to all questions above. This post helps you to choose the right data connection methodology and architecture for your Power BI solution. If you like to learn more about Power BI; read the Power BI book; from Rookie to Rock Star.

Why is this a tough decision?

If Power BI only had one way to connect to data sources, then everything was easy. You never needed to choose between methods and find the right method for yourself. However, Power BI supports multiple different methods for connecting to data: DirectQuery, Live Connection, Import Data (or some call it Scheduled Refresh), and Composite Model. Many of you might still think that DirectQuery and Live Connection are the same, however, they are different. You will learn later in this post about their differences. Each method has some benefits and also some disadvantages. Depending on the scenario that you are implementing Power BI for, you might choose one way over the others. Changing from one method to another method can be a time-consuming task after a while in the implementation process. So the best would be to choose the right method from the beginning.

Choosing the right method is an important step for your Power BI Solution Architecture, which you need to decide about it usually in the early phases before starting implementation. In this post, I’m going to explain in detail what every method is, and the answer to all questions below;

- What is Import Data/Schedule Refresh?

- What is DirectQuery?

- What is Live Connection?

- What is the Difference between Live Connection and DirectQuery?

- Pros and Cons of Each Method

- Which method is performing best and fastest?

- Which method is more flexible?

- Which method is more scalable?

- What are Architecture Scenarios to use for Each Method?

- What is the Gateway Role?

What is Import Data or Scheduled Refresh?

This method has two names, some call it Import Data, and some call it Scheduled Refresh. Both names explain the behavior of this method. With this method data from the source will be loaded into Power BI. Loading in Power BI means consuming memory and disk space. As long as you are developing Power BI on your machine with Power BI Desktop, then it would be the memory and disk space of your machine. When you publish the report on the website, then it will be the memory and disk space of the Power BI cloud machines.

If you have 1 Million rows in a source table, and you load it into Power BI with no filtering, you end up having the same amount of data rows in Power BI. If you have a database with 1000 tables, however, you only load 10 of those tables in Power BI, then you get memory consumption for only those 10 tables. Bottom line is that you spent memory and disc space as much as you load data into Power BI.

Compression Engine of xVelocity

The very first assumption that you might get after reading the above explanation about Import Data is that; if you have a database with 100 GB, then if you import it into Power BI, you will get a 100 GB file size in Power BI. This is not true. Power BI leverages the compression engine of xVelocity and works on a Column-store in-memory technology. Column store in-memory technology compresses data and stores it in a compressed format. Sometimes you might have a 1 GB Excel file, and when you import it into Power BI, your Power BI file ends up with only 10 MB. This is mainly because of the compression engine of Power BI. However, the compression rate is not always that. This depends on many things; the number of unique values in the column, sometimes data types, and many other situations. I will write a post later that explains the compression engine in detail.

The short read for this part is: Power BI will store compressed data. The size of data in Power BI normally would be much smaller than the size in the data source.

Important Pros and Cons of this method

Power BI Full Functional

With this method, you get Power BI fully functional. You can use Power Query to combine data from multiple sources, or DAX to write advanced time intelligence expressions or visualization. There will be no limitation in the functionality of Power BI with this method. You can use all components.

Size Limitation

With this method, you have a limitation on the size of the model. Your Power BI Model (or let’s say the file) cannot be more than 1 GB (if you are not using Power BI Premium capacity or Premium Per User licenses). You have usually up to 10 GB size in your account, however, every file should be up to 1 GB size. Power BI Premium allows you to have up to 400 GB size of model loaded on the Power BI website. If you are using Power BI Report Server, the size limitation is 2 GB. However, remember that 1 GB in the Power BI file is not equal to 1 GB of data in the source (As mentioned in the compression engine section).

This is the fastest Method

This connection method is the fastest option possible. Data loaded into the memory of the server, and reports queries will be evaluated from the data loaded into the memory. No lags or slowness with this method (as long as you designed your Power BI model with no performance issues).

What is DirectQuery?

DirectQuery is a direct connection to the data source. Data will NOT be stored in the Power BI model. Power BI will be a visualization layer, then query the data from the data source every time. Power BI will only store metadata of tables (table names, column names, relationships…) but not the data. Power BI file size will be much smaller, and most probably you never hit the limitation of the size because there is no data stored in the model.

DirectQuery is only possible through a few data sources. Some of the data sources supporting DirectQuery from Power BI are listed here:

- Amazon Redshift

- Azure HDInsight Spark

- Azure SQL Database

- Azure SQL Data Warehouse

- IBM Netezza

- Impala

- Oracle Database

- SAP Business Warehouse

- SAP HANA

- Snowflake

- Spark

- SQL Server

- Teradata Database

To get the most up-to-date list, look at this post.

Important Pros and Cons of this method

Scaleability: The main Advantage

This method will not have the limitation of size. Mainly because no data is stored in the Power BI file, so you never get any issue with the size of data. You can have data sources with Peta Bytes of data in SQL Server, Oracle, or any other supported data sources and connect to it from Power BI.

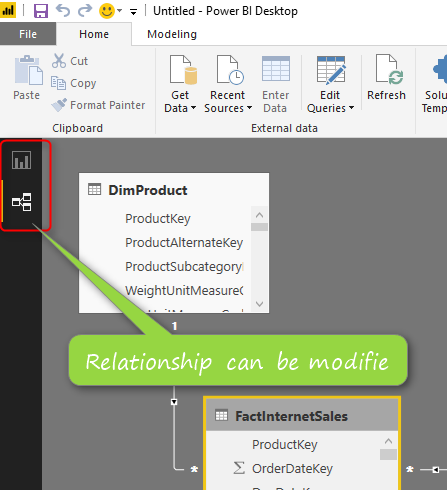

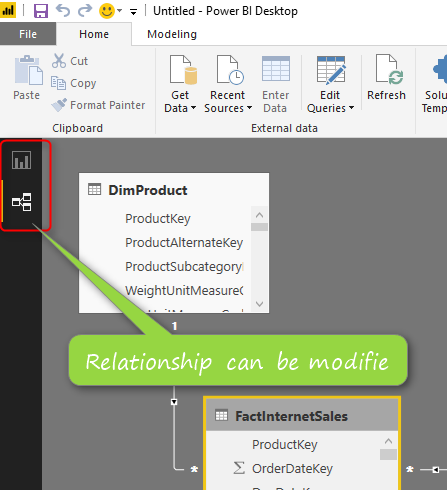

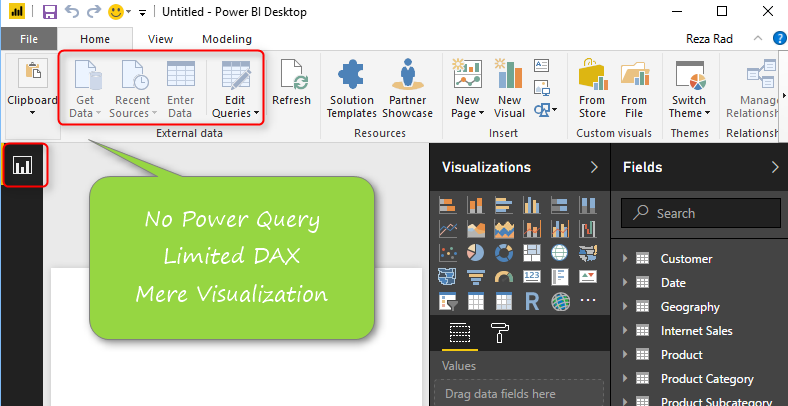

Limited Functionality: Few Power Query Operations, Mainly Visualization

This method will not have the full functionality of Power BI. With this method, you would have only two tabs in Power BI Desktop; Report, and Relationship. You can change the relationship in this mode.

In Power Query, you are limited to a number of operations. majority of operations that cannot be folded, cannot be used. To learn more about query folding read this post. With this mode, however, you have full visualization support.

Slow Connection

A big disadvantage of this method is the connection is slower than other types of connection. Note that every visual sends a query to data source and data comes back. you usually have more than one visual in your report and with slicing and dicing every time you are sending queries to the data source. Performance Considering is a MUST DO in the data source for this model.

Just for a very small example of performance tuning; here is what performance I get when I have normal index on my table with 48 Million records;

A regular select sum from my table with 48 million records takes 4 minutes and 4 seconds to run. and the same query responds in less than a second when I have clustered column store index;

and significantly improved performance when I have a Clustered Column Store index on the same table with the same amount of data rows;

I’m not going to teach you all performance tuning in this post, and I can’t do it because you have to read books, blog posts, and watch videos to learn that. That is a whole different topic on its own. and the most important thing is that; Performance tuning is different for each data source. performance tuning for Oracle, SQL Server, and SSAS are totally different. Your friend for this part would be Google, and the vast amount of free content available on the internet for you to study.

What is Live Connection?

Live Connection is very similar to DirectQuery in the way that it works with the data source. It will not store data in Power BI, and it will query the data source every time. However, it is different from DirectQuery. Live Connection is only supported for these data sources;

- Azure Analysis Services

- SQL Server Analysis Services (SSAS) Tabular

- SQL Server Analysis Services (SSAS) Multi-Dimensional

- Power BI Dataset in the Service

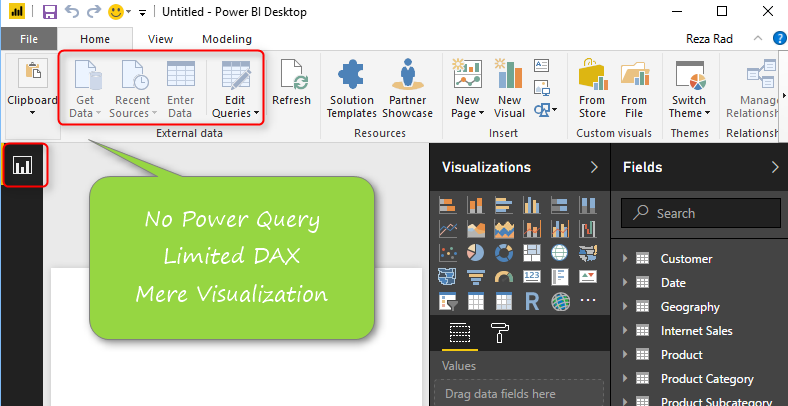

Because these data sources are modeling engines themselves, Power BI only connects to these and fetches all model metadata (measure names, attribute names, relationships…). With this method, you need to handle all your modeling requirements in the data source, and Power BI just surfaces that data through Visualization.

Important Pros and Cons of this method

Big Size Model with OLAP or Tabular Engine

The big benefit of this model is that you can have a big-sized data model, and also you can leverage the modeling layer of SSAS. SSAS Tabular will give you DAX, and Multi-Dimensional will give you MDX. With any of these two languages, you can cover all your calculations and modeling needs. This method has better modeling features than DirectQuery. Because in DirectQuery, there is no DAX or MDX as the analytical language to help. all calculations need to be done on the database side. Sometimes doing calculations on the database side is much more complex than doing it in the analytical expression language.

No Power Query. Just Visualization

The big disadvantage of this method is that you will not have even Power Query simple transformation.

Report Level Measures

You get report-level measures with this type of connection. which gives you the ability to write DAX measures. However, you might want to keep them in the data source to keep your model consistent.

Report Level Measures are a great feature because users can create measures without the need to call the BI developer to do that. However, these measures will not be added to the data set. These are just for the report. So for consistency of your model, you might want to keep measures creation to be part of your SSAS data source model.

Composite Model: The best of both worlds

Power BI enables you to have the DirectQuery sources and Import Data sources combined in one dataset. This gives you the performance and flexibility of the Import Data, and the scalability and the large size of the data of the DirectQuery.

Composite Model not only supports DirectQuery to data sources such as SQL Server and other DirectQuery sources, It also enables you to have DirectQuery to the Power BI Dataset. This enables you to create a chained dataset from the main Power BI dataset.

The Composite Model is bringing many useful features to the DirectQuery Connection, and from the time it has been released, it is highly recommended to build a composite model for any DirectQuery data sources. This can be as simple as keeping the smaller tables in Import Data mode (Such as dimension tables). This also enables you to create aggregated (and Imported) versions of your DirectQuery tables for better performance.

What is the Difference between Live Connection and DirectQuery?

Now you know about all different types of connections, before talking about their pros and cons in detail. Let’s focus more on the difference between Live Connection and DirectQuery;

- DirectQuery is a direct connection to data sources listed above, for example; SQL Server, Oracle, IBM…

- LiveQuery is a direct connection to the analysis services model (Azure AS, SSAS Tabular, Multi-Dimensional, or a Power BI report published in service)

Relationship Configuration

With DirectQuery you can still configure relationships in some cases

With Live Connection, you have no relationship tab. this should be handled in the data source. Because the Analysis Services is a modeling engine, you can build more than just a relationship there, things such as hierarchies, measures, and columns can be created in the data source for the live connection, and then be used in Power BI.

Report Level Measures

With some types of SSAS live connections (to tabular model or Power BI Service), you get report-level measures.

No Power Query in Live Connection

In DirectQuery you still can do simple Power Query transformations. However, in Live Connection Power Query is not available at all. All you can do is change the source data model to another model or another server.

Pros and Cons of Each Method

I have already explained the main highlights of the pros and cons in each section. Here let’s go through all of them together again;

Import Data or Scheduled Refresh

Advantages

- Fastest Possible Connection

- Power BI Fully Functional

- Combining Data from different sources

- Full DAX expressions

- Full Power Query transformations

Disadvantages

- Power BI file size limitation (It is different for Premium and Pro)

DirectQuery

Advantages

- Large Scale data sources supported. No size limitation.

- Pre-Built models in some data sources can be used instantly

Disadvantages

- Very Limited Power Query functionality

- Slower Connection type: Performance Tuning in the data source is MUST DO

Live Connection

Advantages

- Large Scale data sources supported. No size limitation as far as SSAS Supports.

- Many organizations already have SSAS models built. So they can use it as a Live Connection without the need to replicate that into Power BI.

- Report Level Measures

- MDX or DAX analytical engines in the data source of SSAS can be a great asset for modeling compared to DirectQuery

Disadvantages

- No Power Query

- Cannot combine data from multiple sources

- Slower Connection type: Performance Tuning in the data source is MUST DO

Which method is performing best and fastest?

Import Data is the fastest possible option. Data is loaded into the memory of the server and all queries will be resolved immediately.

Live Connection is the next option in this list, especially if SSAS Tabular or Power BI Service is used because these two are in-memory technologies and perform faster than multi-dimensional.

DirectQuery is the slowest type of connection. You have to consider the performance tuning of your data source. However, DirectQuery can be combined with Import Data to boost the performance of the report. And I recommend you to use aggregated import tables to even make things faster when querying big data tables.

So the winner for this part is Import Data. The Import Data parts of the Composite Model go into the same category.

Which method is more flexible?

With Import Data you get the full functionality of Power BI. Full Power Query transformations, and DAX measures as well as visualizations.

Direct Query and Live Connection both are the next on this list because each of them gives you something. DirectQuery will give you a few Power Query options. Live Connection will give you Report Level Measures.

So the winner for this part is again Import Data. However, The Composite Model can have part of the data imported. So the flexibility on that part of the data will be exactly as the Import Data.

Which method is more scalable?

Import Data method has the size limitation of size. So without using Power BI Premium, this method is not so Scale-able.

With DirectQuery and Live Connection, you get better scalability. Data sources support a large amount of data.

So the winner for this part is Live Connection and DirectQuery

What are Architecture Scenarios to use for Each Method?

Import Data for Agility and Performance

Import Data has a fully functional Power BI with a great performance. so If your data set is not a huge data set, then you can easily use this method, and produce reports in a very fast development time frame.

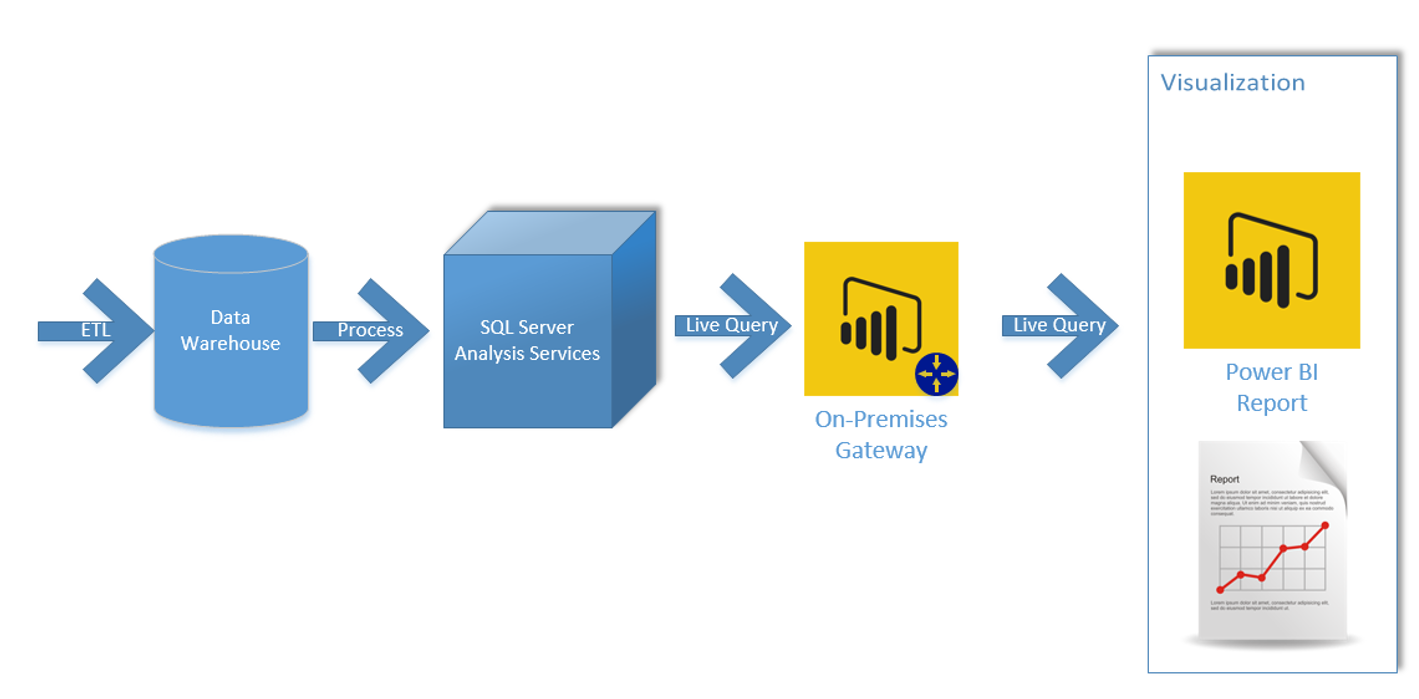

Live Connection for Enterprise Solution

Many enterprises already have pre-built models in SSAS tabular or multi-dimensional. These models can be easily used in a Power BI Live Connection.

Even if your company hasn’t yet started the AAS (Azure Analysis Services) or SSAS solution, and you are dealing with huge data set, this option is better than Direct Query. Because in SSAS you have analytical expression languages of MDX or DAX to cope with lots of calculations and modeling challenges. Here is a sample architecture that can be used with this method;

Live Connection for Team Development

Even if you are not working in an enterprise environment with a dedicated BI team, you may find many benefits in using Live Connection. If you are working on an analytics project with multiple report authors and team members, you will get a lot of benefits by sharing a dataset with a live connection. The dataset can then be consumed by report authors using the Get data from Power BI Dataset using a live connection.

Direct Query for Non-Microsoft Sources

DirectQuery connection is not that much used in Microsoft solution architecture. The main reason is that If you have a Microsoft-based solution architecture, you probably will use SSAS as mentioned above to leverage its analytical engine of it. The DirectQuery mode is mainly used in Non-Microsoft architecture. when the data model is handled in Oracle, IBM, or SAP Hana system or anything like that.

Even for the situations where you use DirectQuery, I strongly recommend not to use pure DirectQuery. Always combine DirectQuery tables with smaller import tables (dimensions or aggregated tables). This normally results in a better performance when querying the data in the reports.

What is the Gateway Role?

Regardless of the type of connection you use (Import Data, DirectQuery, or Live Connection), if the data source is located on-premises, you would need a gateway for it. otherwise not. To learn more about setting up for gateway read my other post.

Summary

In Summary, you’ve learned what are three different types of connection; Live Connection, Import Data, and Direct Query. You’ve learned their differences, pros and cons, and scenarios that each should be used. There are still a lot of details for each method. In this post, I tried to put an explanation of everything to give you a holistic view. I’m sure you will have some questions after reading this. Please don’t hesitate to ask your questions in the comment area below.

Gr8 article , very informative.

Very well explained , this is what i was looking for. This blog helps me to choose right data connectivity option to power BI, Thanks.

Thank you very much Reza for the explanations and examples.

Amazing post!!

I really wish MS chose a different name that Direct Query because of the Direct Query MODE that exists in an SSAS TABULAR model (not to be confused with ROLAP mode of SSAS multi-dimensional). Technically, you could have a LIVE CONNECTION to an SSAS TABULAR MODEL in DIRECT QUERY mode – although I would think these are on-premise, edge use cases rather than the intended normal of POWER BI.

Hi Steven.

Correct. The DirectQuery from SSAS to the data source is different. However, their name is the same. So you can have a Live Connection to SSAS, and the SSAS can have a direct query to the data source. a bit confusing with all naming around.

Great post . Thank you !

This is a great article – I’ve been wondering how DirectQuery would mix with more interesting analytical summaries via DAX. Clearly, it won’t 🙂

Thanks for the great examples!

Nick

Reza, please double check one of your statements regarding the gateway. Your post states that the gateway is required for an on-prem resource regardless of the connection type (import/DirectQuery/Live Connection), but I am able to import into Power BI Desktop without the gateway.

Hi George.

You are able to import it into Power BI Desktop without gateway. However, if you publish it into the service and want to schedule refresh it then you need gateway.

Cheers

Reza

This was a VERY USEFUL post. What a complicated story to explain to people…and you have to hear the story about three times before it makes sense.

Very nice article. So many issues have been clarified.

Nice article, very easy to follow! thanks

How are Azure storage solution mixed into this? Like Azure Storage Account, Cosmos db, etc..?

Some Azure sources support Live Connection (Such as Azure Analysis Services), some support DirectQuery (such as Azure SQL Data Warehouse and Azure SQL DB…), and for some, such as Azure Storage Account you have to choose Import Data. Or use an intermediate storage and then other methods of connection.

What a great article!

Thanks Reza!

Is there a another post or a part 2 of this subject which includes the newly introduced aggregation and composit models?

Yes. here is what I written about Composite Mode:

http://radacad.com/composite-model-directquery-and-import-data-combined-evolution-begins-in-power-bi

For aggregations; stay tuned for the blog series

Cheers

Reza

Great Article!

Such a brilliant write up. Very clear. Thanks

Nice blog. May be you may want to add Data refresh details.

Most dashboard requires up to date data. We cant achieve this with scheduled refresh (as we can configure scheduled refresh 8 times a day). So we have to choose DirectQuery method in this case.

I have written about Data Refresh in another post.

However, if you want to have up to date data, DirectQuery or LiveQuery is one of the methods. You can also use the Real-time dashboard approach. I have written about that in another blog post.

Cheers

Reza

It’s very helpful and resourceful post for a beginner like me. One thing I am confused about. You mentioned in DirectQuery “Cannot combine data from multiple sources” but I found I can use Sql server in Direct Query and then use CSV. So am I missing anything or Power BI updates the feature.

Thanks

Pure DirectQuery won’t allow you to combine data sources. when you bring another data source, you are switched to Composite mode, I wrote about it here.

Excellent Blog – a couple of questions

1. Import Mode (Centralized Gateway Source or Local Source Import)? Assuming recommendation is to centralize data source refresh through the gateway (for reusability vs import mode by each user on their desktop)

2. if our organization has a lot of data in Oracle, S3, Redshift platforms – is it better to centralize in summary tables in redshift with Direct Query or centralize in SSAS with Live connection? any recommendations?

Hi Ash

The centralized data source will reduce a lot of redundant code in the transformation stage, so better in that side. However, it also means that you have to spend probably months to bring all data sources into the centralized data source before it can be used in a report, So it doesn’t work with the purpose of self-service and agile reporting. You have to find the perfect combination between the two.

If you are using a centralized approach, I suggest SSAS Live connection (Especially Tabular SSAS), because SSAS is a modeling tool, and you can leverage DAX functions especially if you use SSAS Tabular.

Clearly an Awesome article read.

Very good explanation! Thanks.

Thank you for great comprehensive explanation!

Could you please tell me, if I transfer my current tabular model from .pbix to SSAS on-premises, will I get increased performance in calculation of really “heavy” measures? Thanks in advance! Great blog!

Hi Egor.

It depends. However, I have to say 80% of the time NO!

There is a reason that your measure is “Heavy” and takes time to run. you have to find that reason. and then you have to fix it. From my experience 95% of the time, that is because of a bad data model, and writing calculations in the wrong place. I have seen models, that a single measure calculation took more than 0.5 hour, and when investigated, seen a lot of row by row operations implemented in the context of an iterator before aggregating it. Moved the row by row operation to the data transformation side, and got the output in less than 10 seconds. Countless scenarios like that.

My advice is to investigate the issue of performance and solve it rather than increasing resources.

Cheers

Reza

Thank you!

You are absolutely right – my measure contains iterator over great amount of rows + additional nested iterator. Following your recommendations, I created additional transformation step in ETL and provide my data model with already pre-calculated column. The effect is amazing! I got speed of light.

Awesome to see your model is performing fast Egor.

Cheers

Reza

Hi Reza,

Very interesting and informative article. I am going to make an energy analysis portal with Power BI but I am not sure what sort of connection (should be live or directquery) and database (??) is the best and fastest one for this. Can you please look at the following scenario and give me your idea?

1. A customer registers over a public website and then under his/her account uploads their Excel energy data (with consistent format) into a web based database (I am not sure about type of the database yet)

2. The database has live connection to my Power BI pro account and when customer finishes their upload, the visual dashboard (shared via embedded code on the same website) is refreshed and shows the latest visual based on the energy consumption time period the customer chooses from a dashboard menu. The visual obviously should be refreshed fast after customer upload to the database finishes up.

Hi Babak

If you want the visual to be automatically refreshed after a new record added to the database, then Live Connection or DirectQuery is not the answer for you! because these two types of connections are not trigger based. With these two types of connection, you will see the new data after clicking on REFRESH page!

Your scenario looks like a real-time scenario option, where you want to trigger the Power BI dataset and dashboard after a new entry is added to the table. I have written about that separately here how to implement a real-time solution using Power BI.

Great article with lots of information.

Awesome one thank you for the wonder full Post.

Great Post Thank You

Thanks Reza for a very explainable and easy to understand article 🙂 Now I’m much more comfortable, when choosing Connection methods in Power BI.

Thanks Ronald,

Glad you found it useful 🙂

Cheers

Reza

Super helpful and saved me a lot of time!

Hi Reza…What are the advantages/disadvantages of using Azure Analysis Services Direct query over Power BI direct query to the same Data Source. Actually I am trying to understand whats the benefit as my data source is Azure SQL DW and I have to implement Direct Query with AAS.

Thanks, Ajit

Hi Ajit

Azure Analysis Services doesn’t work as DirectQuery, It works as Live Connection.

Azure SQL DW works with DirectQuery.

Live Connection is only supported for analysis services. which if you use that approach, it uses the in-memory engine of the analysis services. This engine is super fast compared to the data warehouse engine you are using. but has size limitations depends on the server and resources you use

Cheers

Reza

Excellent explanation

Amazing. Love it.

Very good information shared and no confusion now which method to use when. specially at architectural level it very detailed.

Thank you for your valuable information regarding this. It helped a lot for me.

It was awesome, it clarify me most of the things !!