Does it take a long time to get your data refreshed in your Power BI model? Should you wait for hours for the refresh to finish because you have complex transformations behind the scene? Does the long refresh time make it hard for you to develop your solution? The good news I have for you in this article is; how to use Power BI dataflows to help with reducing the refresh time of your Power BI models. I have previously explained some of the benefits of dataflows, and here is another one in action, let’s see how it can help. To learn more about Power BI, read Power BI book from Rookie to Rock Star.

The Problem: Slow Refresh Time

You have a Power BI file that takes a long time to refresh. The long refresh time can be because the data source is slow, or the set of transformations steps used are heavy and makes the process of data preparation a lengthy process. Having a long refresh time can cause a log of challenges, for example, you are doing a refresh to develop some changes, but due to the long refresh time, your development speed also reduces, and many other reasons. For example, the Power BI report below takes 5 minutes to refresh.

Note that 5 minutes for refresh is not a long refresh time. and I created that sample model for training purpose here. I’m just showing how to make it faster, even for a refresh that takes 5 minutes. You can apply the same method of refresh processes that take hours long.

Now instead of us waiting for a long time to get this refreshing, and seeing a message like below, we want to speed it up;

The Solution: Push the Transformation to Dataflow

I have previously explained about Power BI dataflow and use cases of it, I also explained how to create your first dataflow. If you are new to Dataflow, here is a very brief explanation:

Power BI Dataflow is a set of Power Query transformations running in the Power BI service independent from a Power BI dataset.

A Power BI dataflow can run Power Query transformations, and load the output into Azure Data Lake storage for future usage. And that is exactly, how it can help with reducing your Power BI dataset refresh time.

If your dataset refresh takes a long time because you have applied a set of heavy data transformations in Power Query, then what you can do instead is to push that set of heavy transformations to a dataflow. That way, the transformations happen on a different process, it loads the output into Azure Data Lake storage of Power BI service, and then you can use that output as the input of the Power BI dataset. The diagram below shows what I’m talking about:

Instead of doing the heavy lifting work in Power BI, just push them all to dataflows, and your data refresh time in Power BI dataset would be super fast! That said, you still need to schedule the refresh of the dataflow in the service. However, because that can run on a different schedule than the Power BI dataset itself, then you don’t have to wait for the refresh to finish to get you development work done. Now let’s see an example of such implementation.

Create a Dataflow from Blank Query

I am not going to explain how to create a dataflow, because that needs some prerequisite steps to be done such as creating a workspace version 2, and having the right access to create dataflow and so on. I have written an article about how to create your first dataflow, which you can read here. So let’s start here at the time of choosing what to do with the dataflow creation, first is to create the dataflow;

Choose Define new entities

Choose the data source as a Blank Query;

Copy the Query from Power BI Desktop to Dataflow

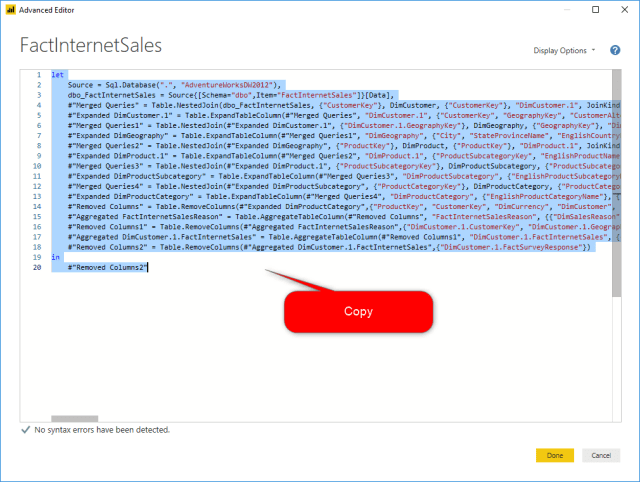

Moving your Power Query transformations from Power BI Desktop to Dataflow is as simple as copy and paste. You can copy the M script from the Advanced Editor of Power BI Desktop, and then paste it in the advanced editor of Dataflow. That’s it. Here is how it works. I open the Power Query in Power BI Desktop using Edit Queries and then selecting the query and going to Advanced Editor;

Copy the M script from there;

Then paste it in Power BI dataflow (under creating a blank query that we did in the previous step, or by using right-click and choosing advanced editor on an existing query);

After pasting it, you might get a message asking about on-premises data gateway (in case, you use an on-premises data source in your script);

The message is: An on-premises data gateway is required to connect.

Then select the gateway;

and click on OK. If your gateway setup is fine, then you should be able to go to the next step. Gateway is another component needed in the Power BI toolset if you are connecting from Power BI service to an on-premises (local domain) data sources. I have written an article explaining everything about the gateway, read it here.

Depends on if you used that step before or not, you might get a message about Editing credentials;

The message is: Please Specify how to connect.

Depends on the data source you are using, set the credential to access to it, and then connect.

Then you should see the data loaded in the graphical editor of Power Query in the dataflow.

You can then click on Close and Save, and Save your dataflow;

Few Notes for Moving Your Queries

If you are moving your queries from Power Query in Power BI Desktop to Power Query in the Dataflow, there are few notes to consider, let’s talk about those here;

- Gateway might be needed.

- You might need moving more than one query to move the transformation process.

- If you have queries sourcing each other, you might end up with creating Computed Entity

Gateway might be needed

In Power BI Desktop, and also the Power Query in the Power BI Desktop, you don’t need a gateway to connect to your local domain (or what we call on-premises) data sources. You just connect to it directly. However, Dataflow is a service feature, and in order to connect to an on-premises data source, it needs a gateway setup. If you are getting data from an online data source, such as Google Analytics, or Azure SQL database, you won’t need a gateway. However, if you are getting data from an on-premises data source, then you would need to have gateway setup, and then select it in the dataflow, like what we did in the previous step.

Gateway setup and configuration is a long process itself, I have written about it in an article; Everything you need to know about Power BI Gateway.

You might need moving more than one query to move the transformation process

Sometimes, In Power Query, you combine tables with each other using Merge or Append (read more about Merge and Append here). In such scenarios, you need to make sure that you get all tables needed into dataflow as well. A script cannot run if all relevant queries to that are not in the same process.

If you have queries sourcing each other, you might end up with creating Computed Entity

If you have a scenario such as what I mentioned above using Append or Merge, or any other scenarios that use the output of one query in another query, then you might end up with the creation of a Computed Entity in Dataflow. Computed Entities are generated when you source from another query, and that query itself is also set as “Enable Load”. Computed Entity is a dataflow-only concept, and does not exist in Power Query in Power BI Desktop.

Creating Computed Entities is good for performance because it will do transformations step by step, using the result of previous transformations which is loaded as an output of another query in the Azure data lake storage. However, Computed Entity is a Power BI Premium-only feature, and if you don’t have premium licensing, then you might find it hard to move your transformations to the dataflow. I have written an article about what Computed Entity is, and also another article about a workaround for Computed Entity using Power BI Pro account.

Schedule Dataflow to Refresh

After creating the dataflow, and saving it. Now you can set it to refresh using Schedule Refresh;

Power BI Desktop Get Data from Dataflow

As the last step of this sample, you need to get data from dataflow using Power BI Desktop. Doing the process in this way, you are getting the data that is already transformed and stored in Azure data lake storage of Power BI dataflows. Start by getting Data from Power BI dataflows;

After logging into the dataflow using your Power BI account, you can choose the workspace that contains the dataflow, then under dataflow, select the entity or entities you want, and then load.

Now Let’s see how long this new Power BI file takes to refresh. Here is the sample refresh length of that file;

I let you do the math to see how faster it is compared to 5 minutes refresh time that you have seen at the beginning of this example. This would show even much more effective if applied on data refresh scenarios that take hours to complete.

Summary

You learned through this article, that you can move your Power Query transformations to Power BI dataflows rather than the PBIX file itself to make the refresh time faster. The method you learned in this article, does make your refresh time faster, but it doesn’t make your Power Query transformations process faster! Using this method, we just move the heavy part of the refresh of Power BI dataset which is for heavy lifting Power Query transformations to a separate process in the Power BI service; Dataflow. That Power Query transformation is still taking a long time to run. However, now it is independent of your dataset. You can schedule that process separately. In other words;

Using dataflow, you can separate the heavy lifting transformations in the ETL (Extract, Transform, Load) process from the refresh of Power BI dataset.

I hope this method helps you in shortening your dataset refresh time if you have any comments or feedback or questions, please don’t hesitate to share it in comments below.

Hi Reza,

Great blogpost, one of the challenges I found with dataflow development is that (as a dev) you still need to download the data to your local .pbix environment before creating a dataset *which is compressed data. You actually see this in Power BI Desktop if you select dataflow as source. (E.g) So a 16 trillion row dataset can be around 7 GB’s. Having multiple fact tables can be time consuming to load initially in your local Power BI Desktop file. Any suggestions or workarounds?

Hi Reza,

Great blogpost! The benefits of a dataflow are really clear! However I see a challenge, in local Power BI Desktop development you then connect to a PBI dataflow (as a data source) if you want to create a new Tabular Model (Power BI dataset). This means that you have to download the data from Azure storage to your local environment. you see this happening every time you connect to a Power BI dataflows object within Power BI Desktop.

What if you have a 50 million/billion fact table? or multiple tables? In order to develop and publish a datamodel you have to download approx 20 GB’s of data to local environment… so in good development practise we should only ‘cap’ large Fact tables in the query editor, and than release the ‘cap’ in the Power BI service.

Any thoughts or suggestions on this topic of local loading of data from ‘dataflows’ to Power BI Desktop?

KR,

Michiel

Hi Michiel

Thanks for your comments.

I answer both of your questions in one 🙂

At the moment getting data from dataflows is only doing it via import. In the future, we MIGHT have the ability to do it using DirectQuery. and If that comes, then it also opens the door for composite models and aggregations.

In the meantime; It is correct. You have to load the entire data into Power BI to process it. However, moving transformations to dataflow still helps, because you just LOAD the data. Transformations is already done in the dataflow.

If you can use features such as Incremental load which is premium only at the moment, you will be able to do it with not loading the entire data each time.

There are other workarounds as well for incremental load, such as loading data into tables, and disabling the refresh of those tables at, etc. all of these are workarounds of course. Because the size of data is so large in your case that preferably needs dedicated compute to work with.

Cheers

Reza

Hi Reza,

According to my experience in the past two weeks trying dataflow, I think it is not so good for projects which data volume is big. Although we need to load data to Power BI in anyway either with dataflow or others, let’s say on-premise, but dataflow is on cloud while data warehouse server is close to my computer, so it can have significant difference. For example, I have one table in DB2 which has more than 10 million rows. When I load it to PBI directly, it only needs couple of minutes, but when I tried to load same data from dataflow to PBI, I couldn’t make it beforeI lose my patience, because the loading data reached 8G already (I don’t remember how long it look). I couldn’t find a way to optimize this with dataflow. Compare to Qlikview which is our current BI tool, Power Bi likes a nightmare (Qlikview save data to harddisk with its own QVD format, and load above data only needs about 30 seconds).

Hi Achates,

There are two things I like to mention regarding your question:

1. Dataflow is a good example of a cloud-based solution. Your Power BI file at the end of the day is going to be published to the Power BI service I assume? so it would be pretty much the same performance as you get with the data flow. If you are just looking at using it in the Desktop, then I would suggest On-prem replacement of the dataflow, which can be SSIS packages running Power Query as a source and storing it somewhere, in a DW for example.

2. Regarding the performance problem you have in general. You probably need to take some actions and increase the performance by reducing the number of columns that you don’t need, filter out part of the data that is not necessary. If the file size is 8GB, I also highly recommend using either Live Connection or Composite model, which you can speed it up with aggregations. Power BI is like driving a Ferrari car, you have to know some mechanics to get it working fast, and when you know it, I can tell you that there won’t be anything faster than that.

Cheers

Reza

Hi Reza,

You said: If you can use features such as Incremental load which is premium only at the moment, you will be able to do it with not loading the entire data each time.

We have premium capacity, but when I tested incremental refresh in Power BI Desktop with a (premium) dataflow entity, it still loads the same amount of data at every refresh (not just the first one). Of course it filters on the Desktop side the date range I want to keep, but network traffic and refresh times remain high.

I got the same results when I did the incremental refresh testing in the Power BI service = from one dataflow to another.

Am I doing something wrong or can you confirm this?

KR

Alex

Hi Alex

What is the data source? and where did you set up the incremental load? only in dataflow? or in Power BI dataset too?

Cheers

Reza

Reza, but what about the refresh time for the dataflow? How long does it take in this example? Only after comparing this time I can see a benefit, if exists. Otherwise, it doesn’t make sense to refresh de dataset if the dataflow did not refresh.

Correct?

Bests!!

The refresh time of the dataflow is still similar to the original refresh time we had in Power BI dataset. Because we haven’t changed anything in the data transformation. It is the same transformation running elsewhere.

However, the benefit of this approach is that you do not have to WAIT for your refresh to finish to do something. the refresh of Power BI is fast, you just need to make sure that the dataflow refreshes on the periods you want it too. It is a matter of separating the data transformation scheduling from the loading schedule.

Reza,

Great article, I appreciate the time you’ve taken to illuminate all these functionalities. We’re currently working off a Power Query // Excel // Sharepoint environment to build Shadow IT data warehousing for project financial management. Is there an update to Power Query in Excel that will allow access to these dataflows in the future? This would massively improve performance in a big way by pushing hundreds of SP access queries to the datalake instead of Sharepoint and Excel APIs.

Hi Julius

Thanks for your feedback.

I believe it will be very likely. But I don’t know any timelines for that.

It is also worth noting that using Dataflows allows reuse of the transformed data among multiple datasets, so the time saving benefit is now multiplied.

Hi Jerry

Exactly. one of the good points of having dataflow

Cheers

Reza

Thanks for all of the great info that you provide! You are one of my go to sites when I need power bi info.

Have you any idea about why a dataset refreshes using on premise gateway without issue but the same data in a dataflow does not?

I built a dataflow to include the same data that currently exists in one of my datasets. I have both the dataset and the dataflow refreshing daily (at different times) via on premise gateways(personal and enterprise editions respectively). The refresh of the original dataset is consistent and takes about six minutes to refresh. The dataflow refresh has been inconsistent at best and successful refresh duration is between nine and twenty three minutes. When it fails it is always one of two tables (or sometimes both) that cause the problem – Error: AzureBlobs failed to get the response: ‘The request was aborted: The request was canceled. It appears to “time out” on an entity when the duration of the refresh exceeds about eleven minutes. These are small tables from our Access database and should never take eleven minutes to run.

Thanks for any insights that you might have in this regard!

Lori

Hi Lori

I would say this should not happen usually unless there is something special about it. Please correct me if I’m wrong, I think you are not using Computed or Linked Entity, and your model is all running under Power BI Pro account? I’d like to see what transformations used, so if it is possible, you can send me an email with the M script of entities, then I can have a look. Are both dataflow and dataset running on the time that the data source are available? or maybe dataflow runs on a pick time?

Cheers

Reza

Hi Reza,

I wanted to know if there os a reporting capabillity on the Dataflow itself, something like reporting on the last refreshed date of a dataflow , how many failures etc.

Where and how can i find this data of a dataflow and report to ?

Any suggestions will be greatly appreciated.

Hi Valar

You can see this information in the workspace under each dataflow. There is not a single report that shows you last refresh time of all dataflows by the way. you need to go to each and see it. we might add this feature into Power BI Helper 🙂

Cheers

Reza

Hi Reza,

Thank is article simplified some ways for me to copy and paste from Power BI desktop editor to BI dataflow although am not a data scientist, but I have a problem if you can advise me, I have cube in AX2012 am using it from 8 months ago

i moved to dataflow a month ago when I got timeout error from Power BI desktop dataset

The dataflows was taking around 20 minutes to get the data from SQL , suddenly its jumped to two hours and its give me again timeout error, the table has around 250K to 300k row is bi has a limitation for such this number .

Thanks,,,

Hi Mohamed

If your dataflow is now taking much longer, without you changing any codes, then something is wrong in the source database. Maybe the load on the source database is too high? or you are reading data at a time that the source is not operating well. or something happened on the server that lacks some resources. investigations should be done on the source server and db

Cheers

Reza

Hi Reza,

Thanks for the wonderful gift of your website.

Please what advice would you give as a workaround in the case where I keep receiving ‘We couldn’t parse the input provided as a DateTimeZone value’ in Power BI service.

I have made use of dataflow, following your blog passionately, in order to make refresh or update faster, the data in question has to do with some IoT which is being generated in minutes, presently a couple of million rows now, and it is increasing. Now the field of Date/Time has kept on giving the above errors ever since. I have tried all sorts of ‘helps’ online nothing has worked. I understood that Power BI service doesn’t support various time zone yet, but as much as I tried to set it as DateTimeZone option from that particular column, while trying to refresh, it keeps on throwing that error in return. The whole data with that particular Date/Time field is from cloud storage stored as ‘Text’, but converting it to Date/Time, and making it to refresh or update so has been impossible.

I tried to do it from Power BI Desktop, and copy query to dataflow, it wouldn’t complete without the error. I tried to do it from dataflow(BI Service), and connect it to Desktop, that error will ensue. I don’t know what else to do, but I know there ‘s always a way out.

Hi Dare.

Do you need the entire data from this field? I mean the data and time is needed, but do you also need the time zone information? what is the sample value for this field?

Cheers

Reza

Thanks Reza for this great post. My question would be on the opposite: Is there a way to copy the code from Dataflow back to Power BI Desktop? 🙂

Thanks.

Of course you can do that.

It is the same Power Query M script which you can use anywhere. as long as you have access to the data source.

Cheers

Reza

Hi Reza,

Appreciate the depth of the article. I am having some issue with moving over the querys to dataflows. My current model in desktop contains 4 fact and 8 dimension tables. The original Pbix model was connecting directly to SQL Server and when published to the premium workspace was taking between 10 – 14 min to refresh the entire model. I moved the queries to dataflows (total time for dataflow refreshes was 8 min, so saw some improvement there) and pointed the model queries to the dataflow entities. What I am observing is refreshing the updated model is now taking aprox 30 – 35 min after the dataflows have been refreshed. Is there a setting which needs to be updated in Power BI or in the Gen 2 storage which is affecting this, or is there something else I need to do to speed this up. Thanks in advance for any help!

Hi Andrew

Do you test it in PBI Desktop get data? or after publishing it in the service? the PBI Desktop might be slower because of the internet connection

Cheers

Reza

Hi Reza, I have a question here. I am using dataflows to transform my data which is coming from REST API. Data is refreshed in the dataflow using the incremental refresh(Although not sure since my data source does not support query folding.

Now I am a little bit confused here, I understand that when I bring the data into Power BI desktop it will import the entire data set which might create an issue when the data expands. ( I am assuming around 10,000 new records to be added hourly in the dataset)

How can I make sure that my model works fine? Can I also do an incremental refresh on the dataset coming from the dataflow?

Hi Raks

You can definitely do incremental refresh from dataset side as well, Usually it makes sense to have it in both sides, the dataflow and the dataset.

Dataflow doesn’t support Query folding yet, which makes the incremental refresh process a bit unnecessary, but this would change very soon.

Cheers

Reza

Hey Reza,

Several of my scheduled data flows are running twice/day (when they are only scheduled to run once). If we tested with others even they are facing the same problem with dataflows. Happening twice schedule refresh instead of one schedule refresh

Hi Rahul

Have you contacted Microsoft support team about it?

Cheers

Reza

Hi Reza,

Once we’ve established our dataflow, do you know of a way to capture the refresh date/time of the dataflow in a report/dataset?

Currently using PowerBI.Dataflows(null) in PQ to bring in basically all other relevant metadata for my dataflows like workspaceid, dataflowid, entity etc. but frustratingly don’t see refresh time in there.

My current work around is to just create an Entity in each Dataflow with DateTime.LocalNow and pull that into my dataset.

Thanks!

Hi Scott

I’d say easiest would be creating that entity with the LocalNow PQ function in the dataflow that you mentioned.

another way is to use REST API calls to the dataflow (either through PowerShell, or .NET), and get the refresh history. However, that requires other components and can’t be done just with pure Power BI.

Cheers

Reza