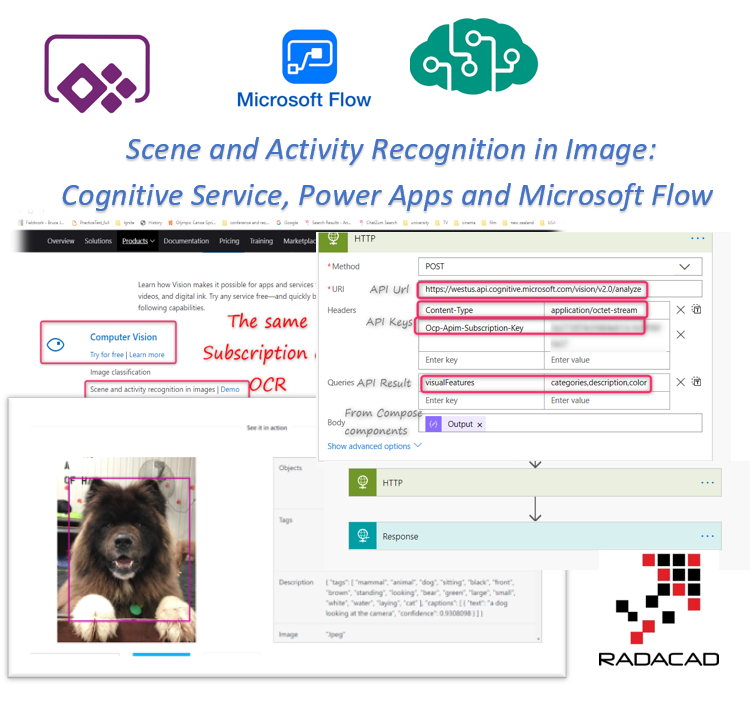

There are many ways to embed the Cognitive Services APIs in applications such as Power Apps with the help of Microsoft Flow.

In previous posts, I showed how to create a face recognition application and how to create an OCR application that converts an image to text.

https://radacad.com/face-recognition-application-with-power-apps-microsoft-flow-and-cognitive-service-part-4

In this post, I am going to talk about one of the interesting APIs in Cognitive Services, named Analyze an image.

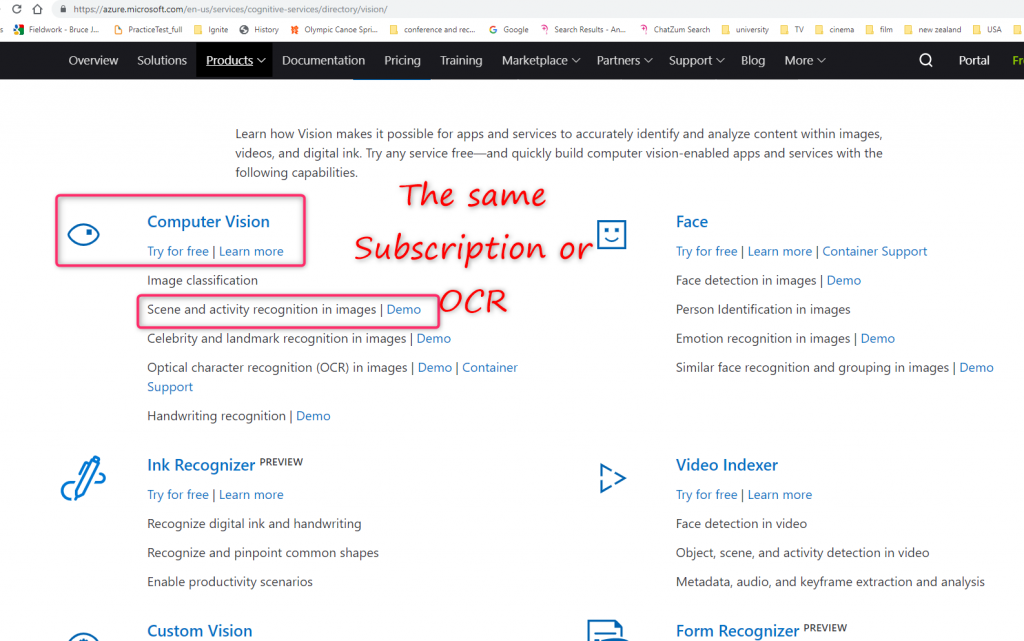

For a demo, navigate to the Cognitive Services website.

Then click Computer Vision, and then click Scene and activity recognition in images.

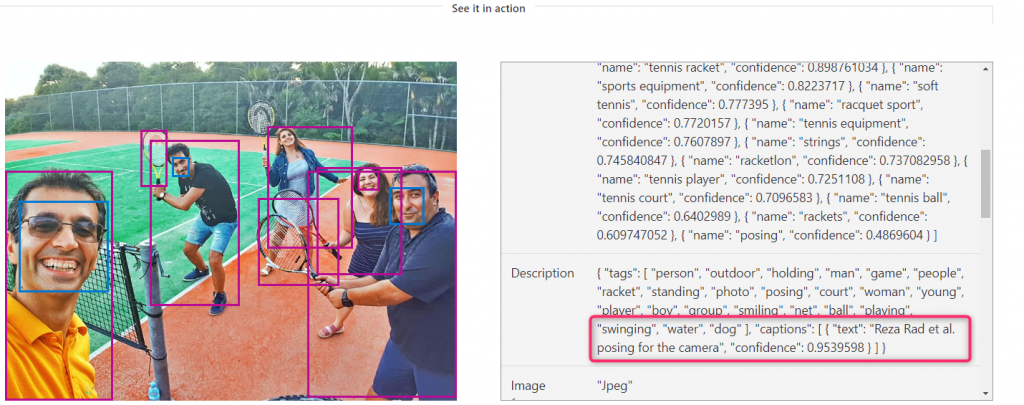

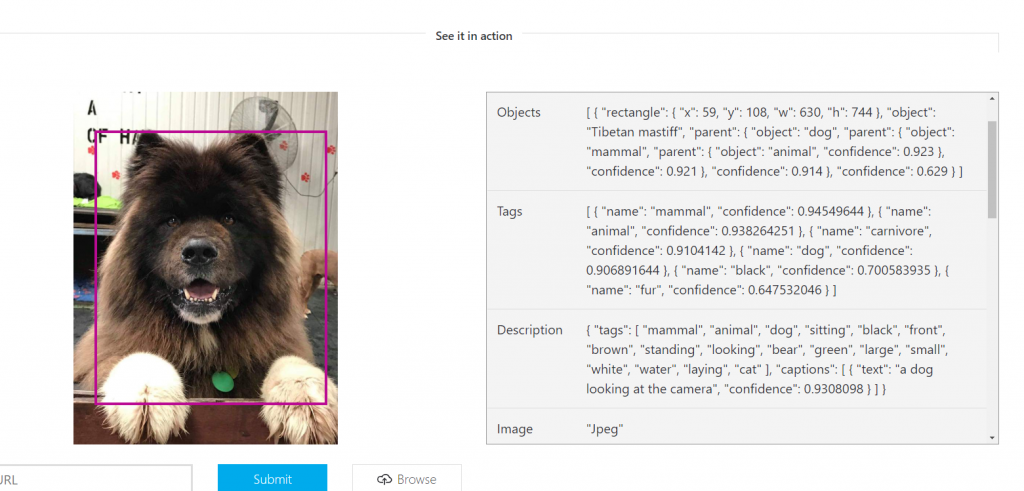

To see how it works, click on the demo and upload a picture. I did the same and uploaded a picture of my dog posing for the camera at doggy daycare.

Look at the result on the right side.

The object list identifies animal -> mammal -> dog, each with a confidence score.

Tibetan Mastiff scores 0.62. I would say that is a similar breed but not the same one, which is why the confidence is low.

Dog scores 0.92, which is totally correct.
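To make the confidence values concrete, here is a minimal Python sketch that keeps only the labels above a chosen confidence. It assumes the result is a flat `tags` list of `name`/`confidence` pairs; the `confident_tags` helper and the 0.8 threshold are my own choices for illustration, and the sample holds only the two scores quoted above.

```python
from typing import Dict, List

# Sample shaped like the demo output (assumed shape);
# it contains only the two scores quoted in this post.
sample_result = {
    "tags": [
        {"name": "dog", "confidence": 0.92},
        {"name": "Tibetan Mastiff", "confidence": 0.62},
    ]
}


def confident_tags(result: Dict, threshold: float = 0.8) -> List[str]:
    """Return the tag names whose confidence is at or above the threshold."""
    return [
        tag["name"]
        for tag in result.get("tags", [])
        if tag.get("confidence", 0.0) >= threshold
    ]


print(confident_tags(sample_result))
```

With the default threshold only the dog label survives; lowering the threshold to 0.5 would also keep the Tibetan Mastiff guess.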

Now let's test another one. As you can see, this one also detects objects such as tennis players and tennis rackets, and even some people, such as Reza Rad, who is popular on social media!

Now I am excited to use this API in my application. The process I explain here can be applied to other web services as well.

The process is the same as for face recognition:

https://radacad.com/create-power-apps-for-taking-photo-part-1-face-recognition-by-power-apss-microsoft-flow-and-cognitive-service

https://radacad.com/create-microsoft-flow-part-2-face-recognition-by-power-apss-microsoft-flow-and-cognitive-service

https://radacad.com/face-recognition-application-with-power-apss-microsoft-flow-and-cognitive-service-part-3

Now I will summarise the process

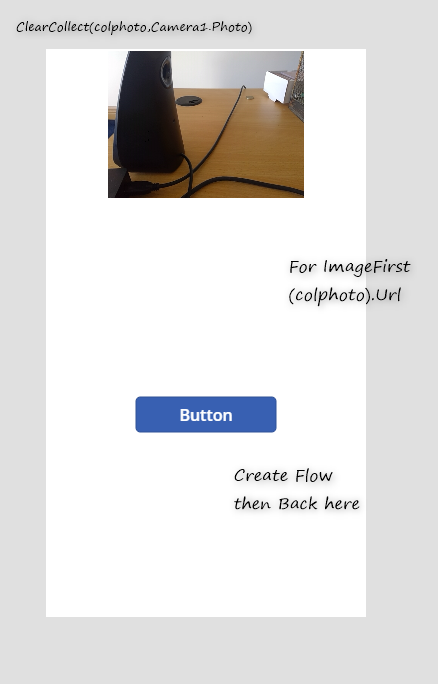

1- Power Apps

Create an application in Power Apps using the mobile app format. It takes a photo and passes the photo to Microsoft Flow.

Create a Power Apps application to take a photo.

For this scenario, I use the Community Plan: https://powerapps.microsoft.com/en-us/communityplan/

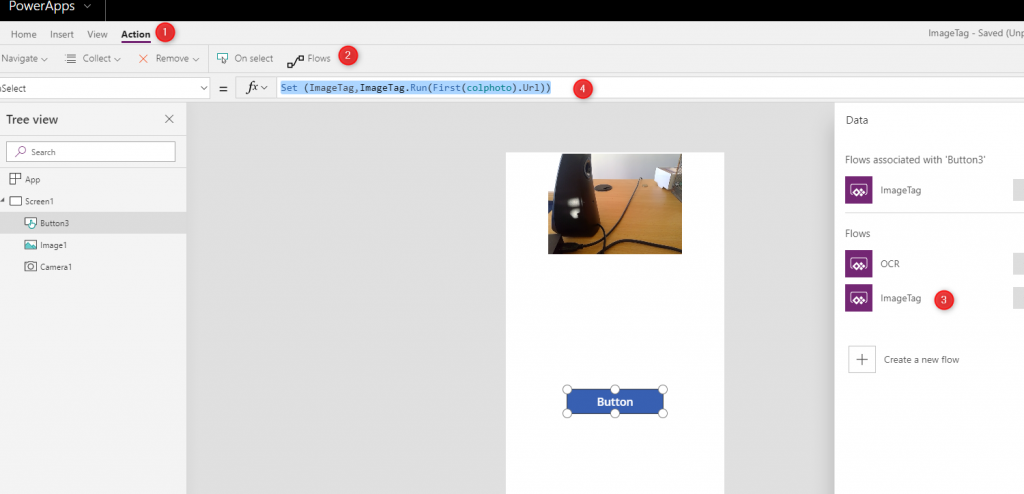

Create the first draft of the app with a Camera control and an Image control, as below (for more detail, see Post 1 and Post 3).

2- Microsoft Flow

Now we need to create the flow.

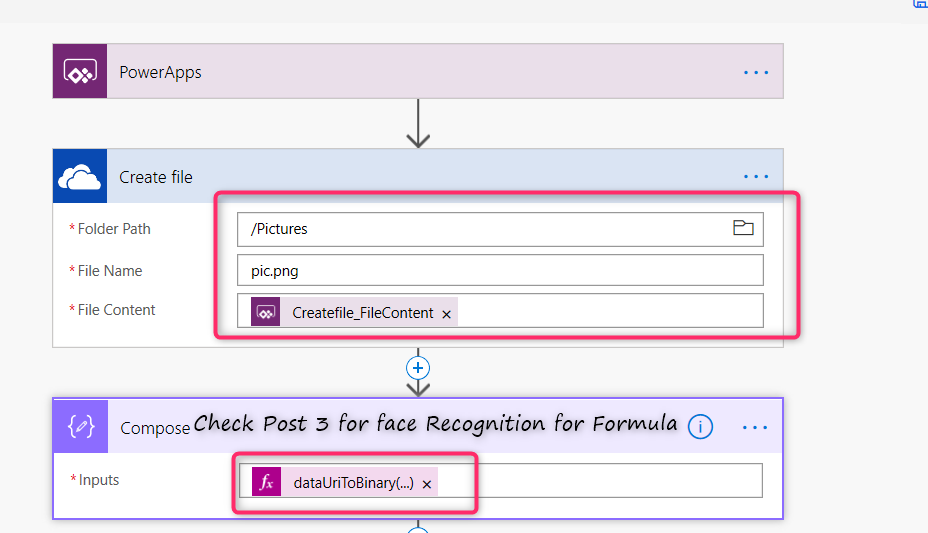

To create the flow, we follow the same process:

1- Log in to Microsoft Flow

2- Choose the blank template

3- Add the Power Apps trigger

4- Store the data in OneDrive or SharePoint

5- Next, store it in a Compose action using dataUriToBinary

This is the same process we use to get the picture from Power Apps.
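To show what the conversion in step 5 does conceptually, here is a rough Python sketch of turning a base64 data URI into raw bytes. It is only an illustration of the idea, not how Flow implements it; the `data_uri_to_binary` function name and the tiny fake payload are assumptions made for this example.

```python
import base64


def data_uri_to_binary(data_uri: str) -> bytes:
    """Decode a base64 data URI (data:<mime>;base64,<payload>) into raw bytes."""
    # Split the "data:image/png;base64" header from the encoded payload.
    header, _, payload = data_uri.partition(",")
    if not header.startswith("data:") or not payload:
        raise ValueError("not a data URI")
    if not header.endswith(";base64"):
        raise ValueError("only base64-encoded data URIs are handled in this sketch")
    return base64.b64decode(payload)


# Made-up payload standing in for a camera photo.
example_uri = "data:image/png;base64," + base64.b64encode(b"fake-image-bytes").decode("ascii")
print(data_uri_to_binary(example_uri))
```

The bytes that come out are what an API expecting a binary image body needs, which is why the photo is converted before it is sent on.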

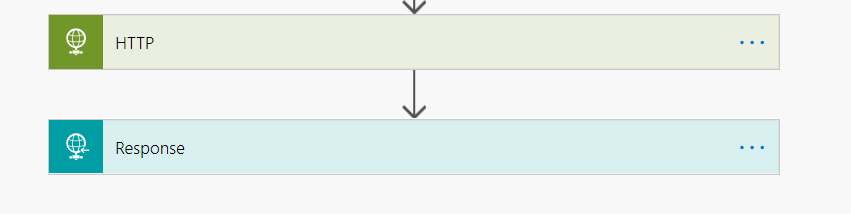

3- Two components to call any API

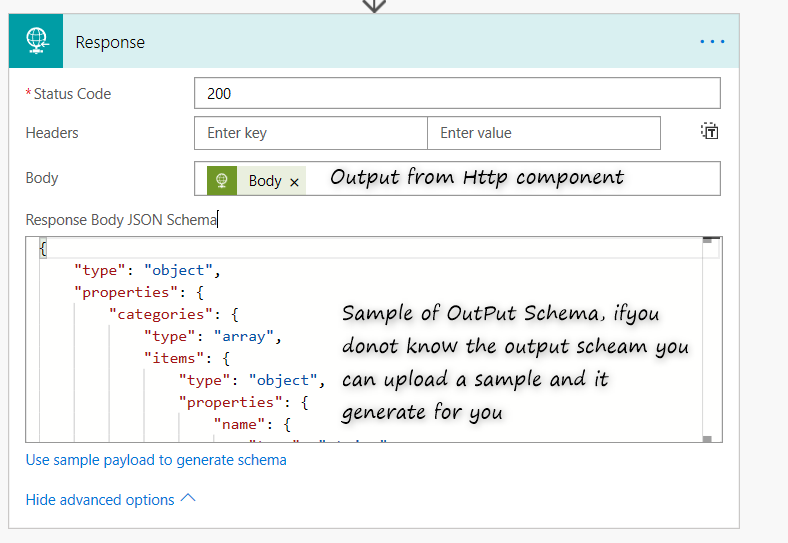

Now we use two components in Flow: an HTTP request component and a Response component.

With these two nodes, you are able to call most APIs.

For this scenario, I checked the Cognitive Services documentation for image tagging and activity recognition and set up the two components accordingly.

First, the HTTP component:

This component is responsible for calling the API, using the API URI, the key, and what it needs to return.

For this example, I only cover the image tag API, but you will see the same scenario for the Face API in Posts 1 to 4.
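For readers who want to see the same call outside Flow, here is a hedged Python sketch that builds the HTTP request without sending it. The `Ocp-Apim-Subscription-Key` header and the `visualFeatures` query parameter are the ones the Computer Vision Analyze Image API uses; the host name, the `v3.2` version in the path, the placeholder key, and the `build_analyze_request` helper are assumptions for illustration, so check them against your own resource.

```python
import urllib.parse
import urllib.request


def build_analyze_request(endpoint: str, key: str, image_bytes: bytes,
                          features: str = "Tags,Description") -> urllib.request.Request:
    """Build (but do not send) a POST request for the Analyze Image API."""
    query = urllib.parse.urlencode({"visualFeatures": features})
    # Assumed API version in the path; your resource may use a different one.
    url = f"{endpoint.rstrip('/')}/vision/v3.2/analyze?{query}"
    headers = {
        "Ocp-Apim-Subscription-Key": key,            # the API key
        "Content-Type": "application/octet-stream",  # raw image bytes in the body
    }
    return urllib.request.Request(url, data=image_bytes, headers=headers, method="POST")


# Hypothetical endpoint and key, used only to show the shape of the request.
req = build_analyze_request(
    "https://example-region.api.cognitive.microsoft.com",
    "YOUR-KEY",
    b"fake-image-bytes",
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it; with a real endpoint and key, the response body is the JSON that the Response component hands back to Power Apps.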

The Response component is similar, but you need to provide the JSON schema of the output. If you do not have it, copy a sample of the output you get, and Flow generates the output schema from it.
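To give a feel for how a schema can be derived from a sample output, here is a small Python sketch that infers a basic JSON schema from a sample value. It is a simplified approximation of the idea, not Flow's actual generator; the `infer_schema` helper and the sample payload are assumptions for this example.

```python
from typing import Any, Dict


def infer_schema(value: Any) -> Dict[str, Any]:
    """Infer a minimal JSON-schema-like description from a sample value."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if isinstance(value, str):
        return {"type": "string"}
    if value is None:
        return {"type": "null"}
    if isinstance(value, list):
        schema: Dict[str, Any] = {"type": "array"}
        if value:
            # Simplification: describe the items by the first element only.
            schema["items"] = infer_schema(value[0])
        return schema
    if isinstance(value, dict):
        return {
            "type": "object",
            "properties": {k: infer_schema(v) for k, v in value.items()},
        }
    raise TypeError(f"unsupported sample value: {value!r}")


# Assumed sample output for an image tag call.
sample_output = {"tags": [{"name": "dog", "confidence": 0.92}]}
print(infer_schema(sample_output))
```

The result has the same `type` / `properties` / `items` layout as the schemas pasted into the Response component.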

Save the flow and go back to Power Apps. In Power Apps, you need to write a line of code for the button to connect the flow to the app: first click on the button, then click Action, then Flows, find the flow, and write the code below for it.

Each API returns a specific data type.

For instance, in the Face API the output schema looked like this:

    {
      "type": "array",
      "items": {
        "type": "object",
        "properties": {
          "faceId": {
            "type": "string"
          },
          "faceRectangle": {
          ...

But for the image tag