Dataflow is the data transformation service in Power BI, and also some other Power Platform services. Data Warehouse is the cloud storage and also compute engine for data. I often get this question that: “Now that we have dataflow in Power BI, should we not use the Data warehouse? What are the differences? which is better? When to use what?” This article and video, explains answer to these questions.

What is Dataflow?

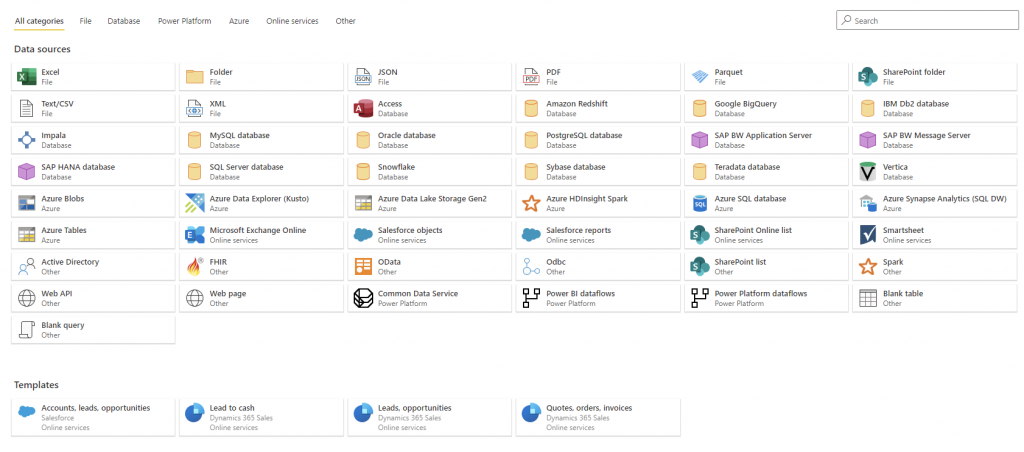

I have written some articles about dataflows in the past and I recommend you to read them to understand the full picture. In a nutshell, dataflow is a Power Query process that runs in the cloud independent from any Power BI reports.

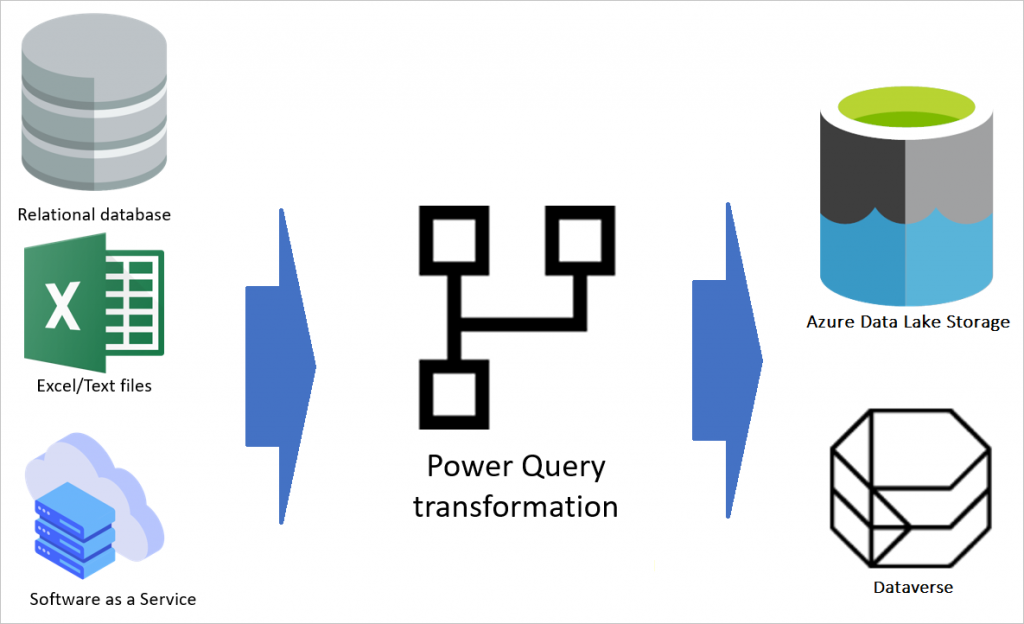

Dataflow includes the data transformation engine (Powered by Power Query), and the storage (Powered by Azure Data Lake Storage Gen2, or Microsoft Dataverse – the new name for Common Data Services).

What is a Data Warehouse?

The reason I didn’t say Azure SQL Data Warehouse, or Azure Synapse, or SQL Server Data Warehouse or etc, is that there are variations of technologies that organizations use as their data warehouse. Some chooses cloud-based (Azure SQL Data Warehouse, Synapse, Azure SQL Database), Some users on-premises (SQL Server Data Warehouse, or other non-Microsoft tools and services), and some use hybrid plans.

A Data Warehouse is a place to store the dimensional data for the purpose of reporting and analytics. The data from this storage often will be used by an analytical technology (such as Power BI).

The Differences

Now that we have a generic definition of the two terms, let’s talk about the differences.

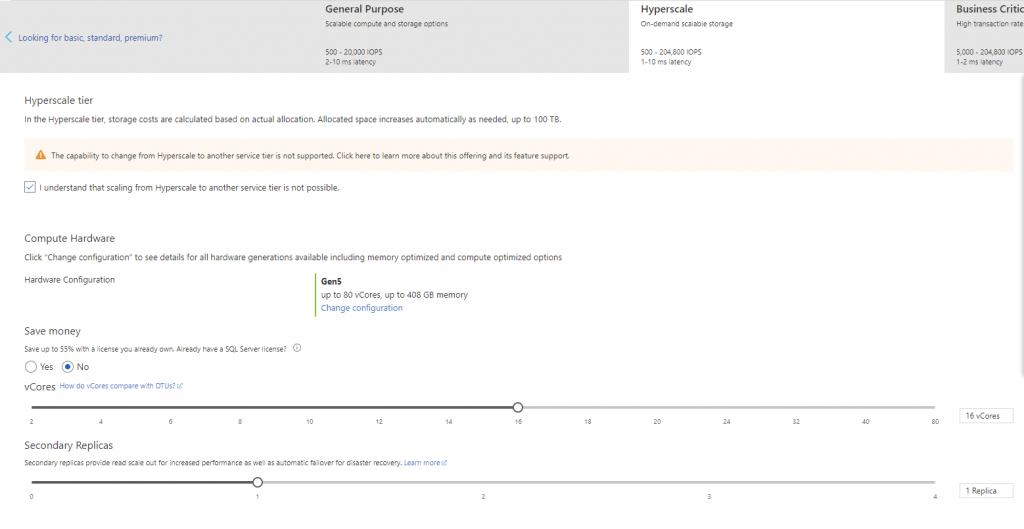

Data Warehouse is Scalable

Using technologies such as Azure SQL Data Warehouse means you can use scalable compute and also storage for the data and also querying it. You can have bigger storage or compute power if needed. This is also true in some cases of using on-premises technology, however, you are bound to the hardware specifications of your server mostly.

Dataflows can use scalable storage if you choose the option of bringing your own Azure Data Lake Gen2 storage. For the compute, you can choose to leverage the enhanced compute engine. However, the scalability option that you get with dataflow is much more limited than what we have in technologies such as Azure SQL Data Warehouse or Synapse.

Dataflow is user-friendly

Dataflow, like many other things in Power BI and Power Platform, designed to be user-friendly. With low or little knowledge of data transformation, you would be able to build a dataflow solution. There is a simple graphical interface for doing the transformations (which uses the Power Query engine), and scheduling refresh and other parts are all designed very simple.

Data Warehouse however, requires a developer touch. You can’t expect someone beginner to use it and build a data warehouse or even artifacts of it in a few hours.

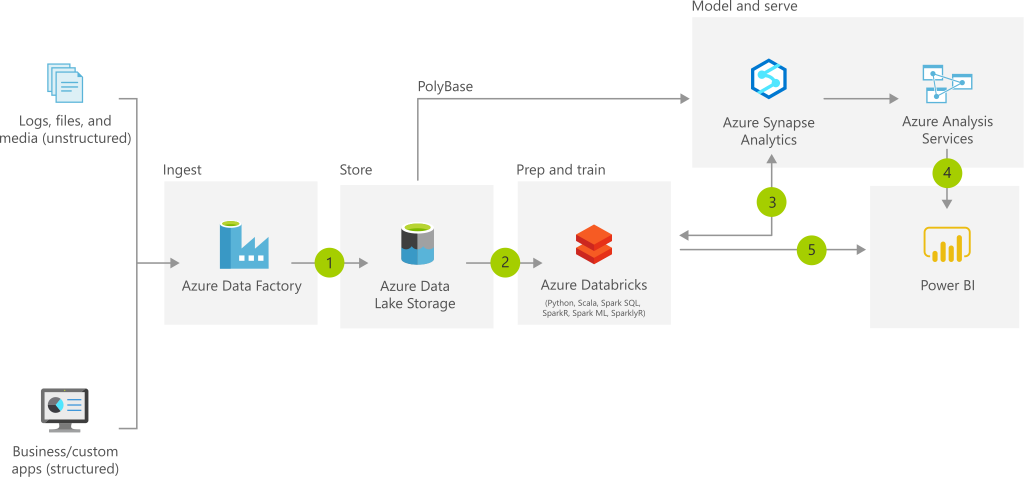

Data Warehouse is not enough by itself (often)

A data warehouse is the storage and also a compute engine. However, it does not have the data transformation engine often. If you are use Azure Data Warehouse or Azure Synapse, then very likely you need to use Azure Data Factory, and sometimes even combined with other services such as Azure Databricks to do the transformation.

Dataflow is all-in-one

Dataflow is designed to be all-in-one. Behind the scene, it uses Azure Data Lake Storage Gen2 or Dataverse (Common Data Services) as the storage engine. However, this interaction is seamless from the developer’s point of view (if you don’t bring your own storage).

Dataflow can be cheaper

Talking about licensing and costs are not simple here. Because we need to go through each specific scenario case by case. However, overall, an example can explain it. If an organization is already using the Power BI Premium license, then they will have dataflow at no additional cost. If the same organization wants to use Azure Data Warehouse or Data Factory or other services, they need to pay additional costs.

This doesn’t mean that dataflow always comes cheaper. As mentioned, there are difference scenarios to consider, and in some other scenarios, something else might come as a cheaper option.

Data Warehouse needs a developer’s touch

To work with a data warehouse, you need to have a developer’s knowledge. There are many components that need to work beside each other, each component might need learning a language or tricks. You might need to know about T-SQL and some other languages. You also need to know how to do things with Azure Data Factory, etc. The knowledge required to work with a data warehouse is the knowledge that takes time to build and that is why it requires normally a developer’s touch.

Data Warehouse has a longer learning curve

For Dataflow: You just need to learn Power Query

Dataflow is for citizen data engineer

Dataflow on the other hand is designed for someone with low or little knowledge of the development to build a model. The whole Power Platform suite came with the promise of enabling citizen developers to do things for themselves. Dataflow is also one of the technologies that you can expect a citizen data engineer or wrangler to build a solution with that.

Dataflow development can be fast

Data Warehouse is designed for Enterprise, administration, and team development

Data warehouse is designed for customization, scalability, team development, administration and many other elements of a fully-fledged BI system. You can have someone doing the data transformation, someone else taking care of the storage, and someone looking and optimizing things. You can control the administration in a detailed granular level.

Data Warehouse technologies took years to build and through that period, they became team friendly. Dataflow is not there yet. there are limitations on the administrations, team work and other parts here and there. However, considering the purpose of dataflow (which is for citizen developer), this still fits the purpose.

Buying a car vs Building it using components

If I want to some up everything in one place, this is what it is. The analogy of dataflow vs data warehouse, is like buying a car vs buying the components and building something customized.

If you want to have a car, get from a place to another, there are not many obstacles in the road, it is a normal and simple route, you would go and buy a car. that is the sensible thing to do. Why building it?!

But if you are taking a very long road, with many obstacles, you probably need a car that drives fast, but also has a strong base, you might need this to fly sometimes because there is no road (use a bit of imagination here ;)). In that case, there might not be a car to buy to do it for you. You can, however, buy components (such as engine and other things). Then using the skilled team that you have, build what you want, something that can even fly if needed 😉

Final word: If you are looking for an answer, here it is: “Can I build a data warehouse using dataflows?”, Short answer is “Yes, you can”, but then if you ask “Would it be as powerful and as scalable and as customizable as doing it with other technologies”, then the answer is “No, of course not”. “So, should I always use Data Warehouse instead of dataflow”, “Absolutely not. It all depends”.

Video

Please let me know your thoughts and questions in the comments below, I like to hear what you think.

In fact I have used it . YOY data is save and no incremental refresh required for last few months data. Works with Pro (NO Premium ) But U summarized all very well.

Thanks Anand

I think one important difference is with dwh, you can more Easily use the dwh as an integration HUB to 3rd part software. With daraflows, you are

More locked to ms software.

Hi Terje

that is a good point.

Although, in dataflow, using analytical dataflows, you can write into your own Azure data lake storage, and use that as a source for other software. But I agree that it doesn’t come with many variable options.

Cheers

Reza

Thanks for sharing! I’ve been looking for this comparison since dataflows was launched.

Currently, we are using dataflows with Premium lincense and enhanced engine, instead of a data Warehouse and, in our case, this article validate that it is a correct approach.

Hi Jess.

I am glad this is helpful.

Yes, dataflow is good for many scenarios. It all depends. Thanks for sharing your scenario.

Cheers

Reza

Hi Reza. Is it not worth mentioning that Azure Data Lake Storage (or just dataflow storage) comes in two flavours – 1. standard/text file and 2. premium dataflows where you have a cached SQL version of the data including column store indexes? In theory at least, the latter should offer a significant performance gain. Great article, but not sure about the car anology TBH.

Hi Ragnar.

What you are mentioning is what is available through the ENHANCED COMPUTE ENGINE. This feature behind the scene is doing what you are talking about. Although, that is a good performance boost. However, it never is comparable to the scalability of a data warehouse service and spreading the compute across multiple spark clusters (for example). The dataflow team are working on bring more compute scalability. However, the point here is that dataflow will be always some steps behind data warehouse for those scenarios. the promise of the dataflow is to bring that to citizen developers.

Cheers

Reza

Hi Reza, Thanks for sharing the article. Is there anyway you can build dataflow once in Dev environment and deploy it to UAT an Prod?

Hi Ali

you can export the JSON metadata of the dataflow from DEV workspace, and import it in UAT or PROD.

Cheers

Reza