Power BI Datamart is integrating well with other components of the Power BI ecosystem (such as workspaces, sharing, deployment pipelines, endorsements, sensitivity labels, etc). In this article and video, I’ll explain how Power BI works with other features and services in Power BI implementation. If you are new to Power BI Datamarts, this article explains what it is and its use cases and this article gets you through the Datamart editor and your first experience with it. You can also learn about the components of the Power BI Datamart from this article.

Video

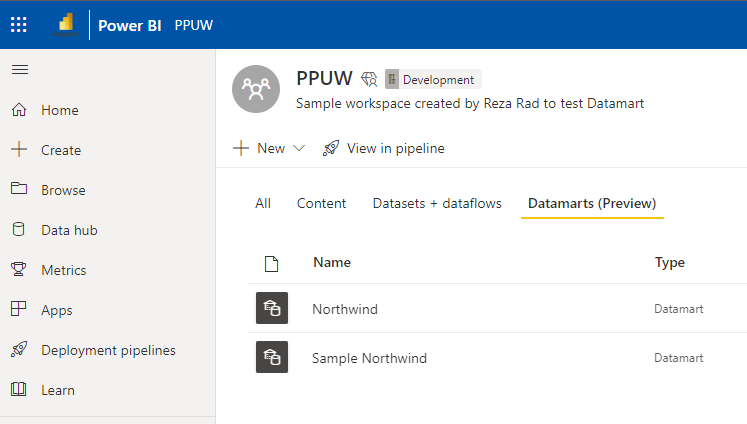

Power BI Datamart is part of a workspace

A Datamart is created (like many other objects in Power BI; Dashboard, Report, Dataset, and Dataflow) inside a workspace. This means it comes with all the abilities that workspaces have to offer for governance. For example, in a workspace, you can define a set of users as Contributors or Members of that workspace, so that they can contribute to building that Datamart. To learn more about access levels in a Power BI workspace, read this article.

This also means that the Datamart created in one workspace can be shared to be consumed by the objects of other workspaces (such as reports in other workspaces consuming the Datamart in this workspace). The design of the workspace structure can play an important role in the re-usability of the Datamart throughout the organization. This article explains what things you need to consider for such a design.

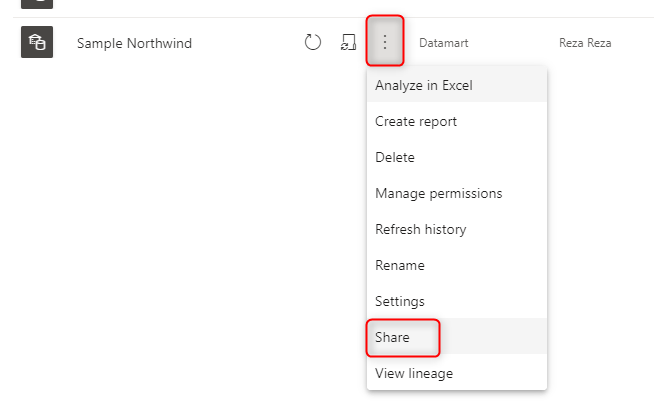

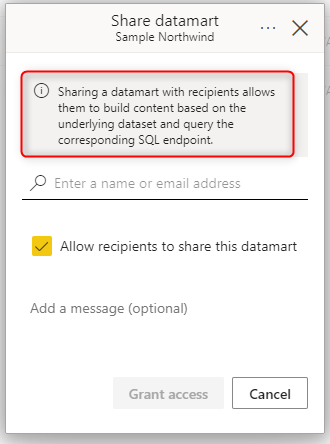

Sharing for Datamart users

Not only you can give the view access to the users of the Datamart (those users who are not changing the Datamart but wants to build content such as report and dashboard using the data of Datamart) through the Workspace roles such as Viewer. You can also, share a Datamart individually with users.

When you share a Power BI Datamart individually, users will be able to read data from it and build reports using it, but they cannot change it (unless they have contributor or higher-level access in the workspace);

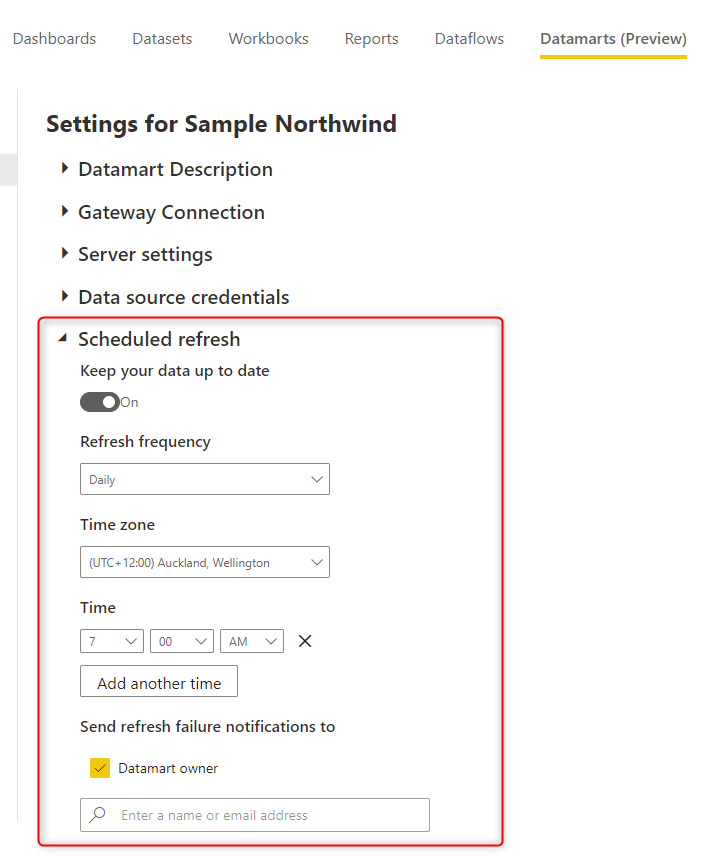

Schedule Refresh

To schedule the refresh of a Datamart, you need to set it at the Datamart (not on the Dataset associated with it).

This will be the time that the Dataflow refresh happens, meaning that based on the screenshot above, at 7:00 AM the Dataflow will read data from the OData source and loads it into the Azure SQL Database. Right after that, the data gets processed into the Power BI Dataset. You don’t need to set up two different refreshes, just one scheduled refresh takes care of it all.

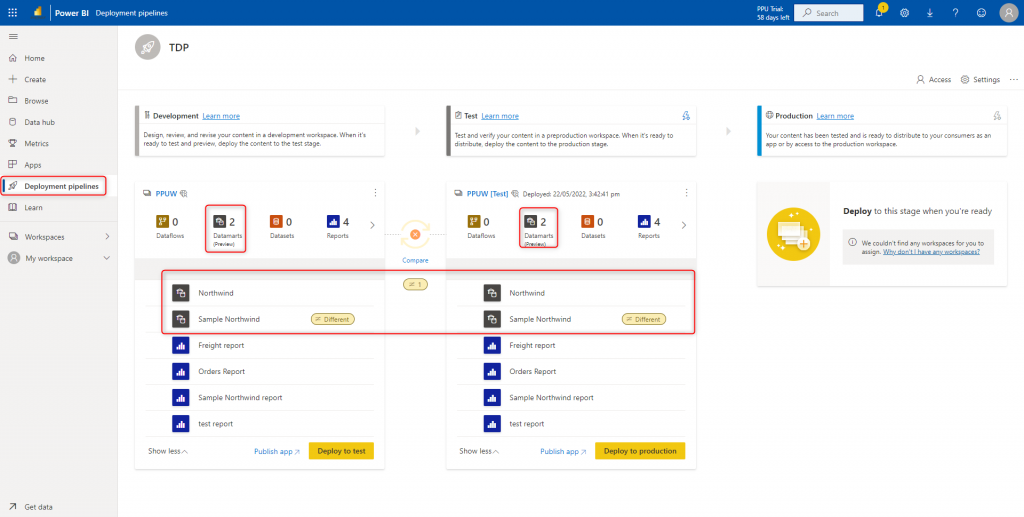

Deployment Pipelines

Power BI Datamarts, like other objects (Datasets, Reports, and Dataflows) can be part of a Deployment Pipeline. A deployment pipeline is a way to push the changes from an environment like the development environment to the Test environment and later on to the Production environment.

Endorsement and Sensitivity Labels

You can set the Endorsement for Power BI Datasets associated with the Datamart, and you can set sensitivity labels for them. The Sensitivity labels can help to be sure that even if the data is shared with someone who should not have access to this data, the information won’t be exposed.

Summary

Although Power BI Datamart is the newest component added to the Power BI ecosystem, the integration of that with other services throughout the Power BI is seamless. In this article, you’ve learned that Power BI Datamart is part of a workspace, can be shared through the workspace or individually, will be part of the Deployment pipelines, and can have the sensitivity labels.

Here are my Power BI Datamart article series for you to learn about it;

- Power BI Datamart – What is it and Why You Should Use it?

- Getting Started with Power BI Datamart

- Power BI Datamart Components

- Power BI Datamart Integration in the Power BI Ecosystem

I provide training and consulting on Power BI to help you to become an expert. RADACAD team is helping many customers worldwide with their Power BI implementations through advisory, consulting, architecture design, DAX support and help, Power BI report review and help, and training of Power BI developers. If you need any help in these areas, please reach out to me.

Thank you! Just need to make sure I’m clear – the Datamart Scheduled Refresh will also refresh dataflows? Scheduled refresh settings on the dataflow(s) can be disabled?

Hi Jason

Datamart is not exposing the dataflow separately to you. so you cannot really go and set the schedule refresh only on dataflow. When you set schedule refresh, you are setting it for the entire process, which is the dataflow from source to azure sql db, and then from azure sql db to the dataset import

Cheers

Reza

Thanks for all the explanations. What happens when you import data in a datamart from an existing dataflow? Is the data then stored twice within Power BI?

And would it be a best practice to first import data into dataflows and then use that data in several datamarts to avoid doing the same transformation steps on the source data in multiple datamarts?

As I mentioned, the dataflow part of the datamart is not exposed yet.

this means you cannot edit or change it separately from the datamart.

You can get data from another dataflow, but that means that the data will be populated from where the data of that dataflow is stored (ADLS) and then datamart will have another dataflow inside to build the load process into the Azure SQL DB etc.

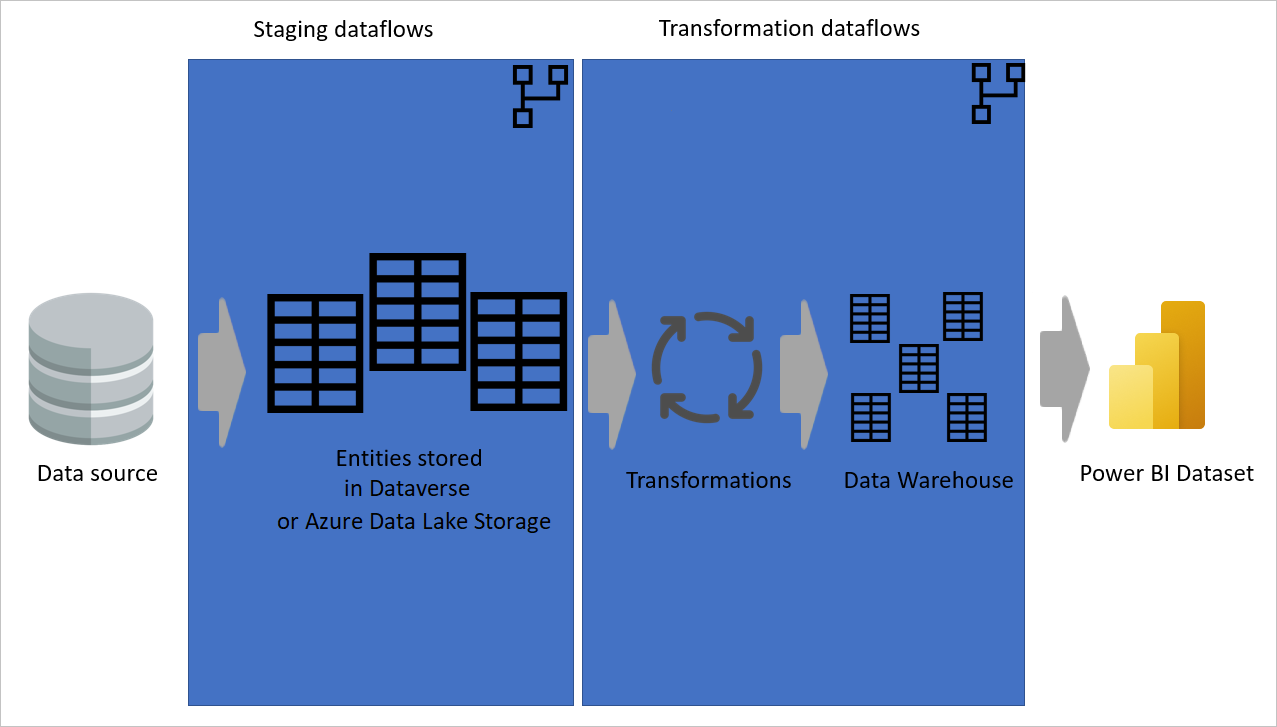

It would definitely help to have another layer of dataflows. I have written some articles about how you can have layers of staging and transformations with multiple dataflows. those can then be the source for the Datamart. I do recommend such approach.

Hi Reza,

Amazing set of article you wrote about Datamarts!

I have just read it all! Thanks for this great content!

Two questions that came to my mind:

It’s not clear to me which storage type of the dataset built on top of the datamart is. I thought Direct Query at first (because of the RLS being defined at the Azure SQL DB level), but then you mentioned that the datamart refresh would trigger the dataset refresh, suggesting that it could be at least mixed, if not import. Any idea about that?

Also, did you try to tune the dataset a little bit with Tabular Editor? E.g. calculation groups or partitioning?

Hi Nicolas

thanks for your kind words

The dataset will be using IMPORT data from the Azure SQL Database. So it means it has all the power you can use in aggregations, DAX, etc.

However, I haven’t yet tried to edit it using Tabular Editor or another tool to see how it works.

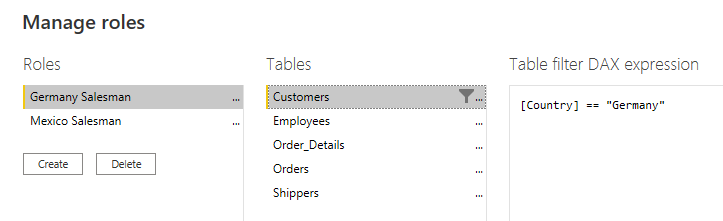

The RLS setting is just duplicated in both places (in Azure SQL DB and also in the dataset). There are in fact two different places that it sets it. But the Datamart editor helps you doing that in just one place.

here you can see how it is done in the dataset associated with the datamart;

Thank you.

“When you set schedule refresh, you are setting it for the entire process, which is the dataflow from source to azure sql db”

To me, this sounds like the datamart refresh also refreshes dataflow data, which I do not understand how as dataflows can be used in many datamarts that may have many unique refresh schedules (interfering w/incremental refreshes, etc.).

Am I thinking about it wrong?

Hi Jason

This is a good question.

As I mentioned, the dataflow part of the datamart is not yet exposed. when it becomes available (hopefully in the future), then you can re-use it in multiple places.

However, I have written an article in the Microsoft Dataflow series and explained that you can have layers of dataflow for staging and transformations, You can do your transformations in there and then the datamart’s dataflow be just the ingestion part of it.

Hi, just wondering if all end users need PPU or Premium Capacity in order to view reports built using a Datamart as the source? Or is it possible to just have the developer with a PPU, then end users with Pro?

Based on what I been told, the report connected to the Power BI Datamart’s dataset can be consumed by Pro users with no problem.