Do you have a Power BI dataset that gets data from a dataflow? have you ever thought; “can I get the dataset refreshed only after the refresh of dataflow completed and was successful?” The answer to this question is yes, you can. One of the recent updates from the data integration team of Power BI made this available for you. Let’s see in this blog and video, how this is possible.

The scenario

If you are using both dataflows and datasets in your Power BI architecture, then your datasets are very likely getting part of their data from Power BI dataflows. It would be great if you can get the Power BI dataset refreshed right after a successful refresh of the dataflow. In fact, you can do a scenario like below.

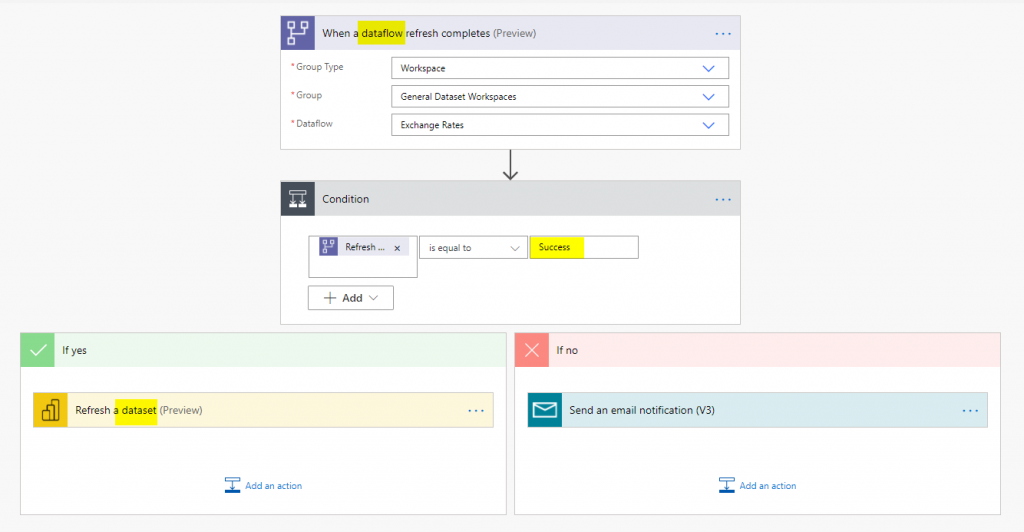

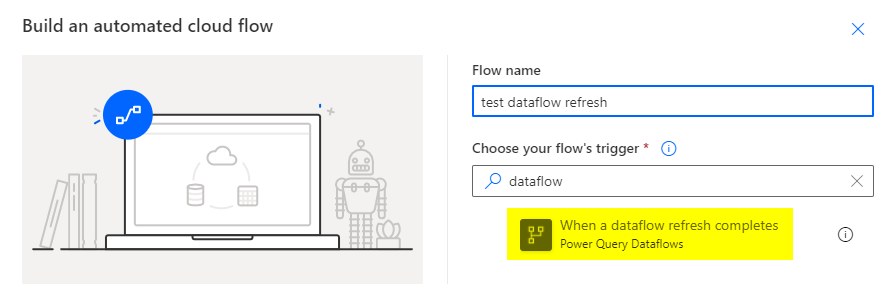

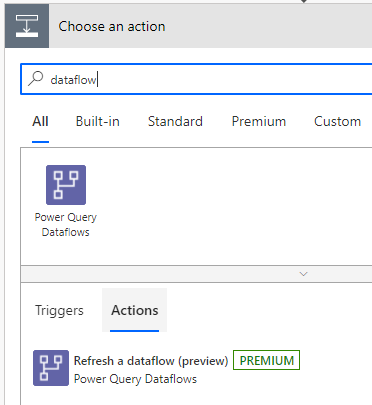

Power Automate connector for dataflow

Power Automate recently announced availability of a connector that allows you to trigger a flow when a dataflow refresh completes.

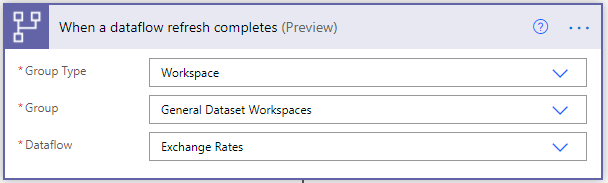

Choosing the dataflow

You can then choose the workspace (or environment if you are using Power Platform dataflows), and the dataflow.

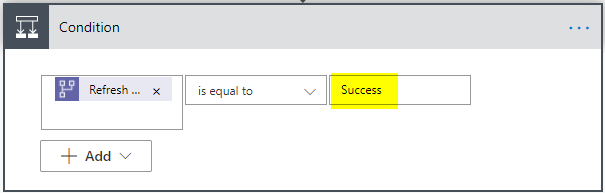

Condition on success or fail

The dataflow refresh can succeed or fail. You can choose the proper action in each case. For doing this, you can choose the result of refresh to be Success.

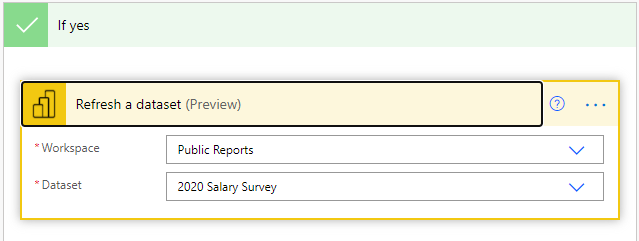

Refresh Power BI dataset

In the event of successful refresh of the dataflow, you can then run the refresh of the Power BI dataset.

Refreshing Power BI dataset through Power Automate is an ability that we had for sometime in the service.

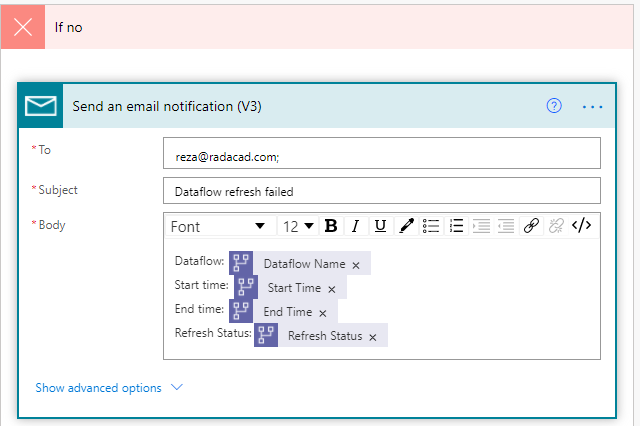

Capture the failure

You can also capture the failure details and send a notification (or you can add a record in a database table for further log reporting) in the case of failure.

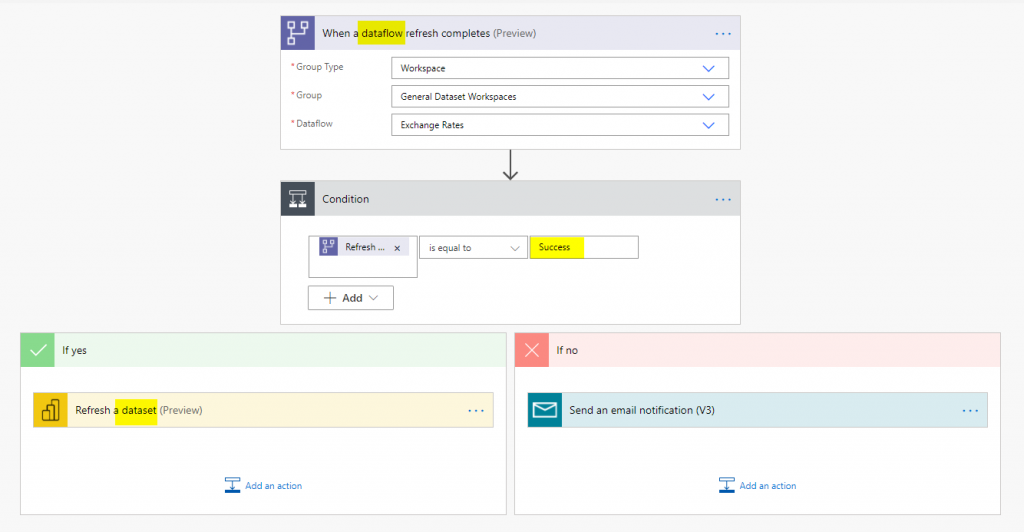

Overall flow

The overall flow seems a really simple but effective control of the refresh as you can see below.

My thoughts

Making sure that the refresh of the dataset happens after the refresh of the dataflow, was one of the challenges of Power BI developers if they use dataflow. Now, using this simple functionality, you can get the refresh process streamlined all the way from the dataflow.

Dataflow refresh can be done as a task in the Power Automate as well. Which might be useful for some scenarios, such as running the refresh of the dataflow after a certain event.

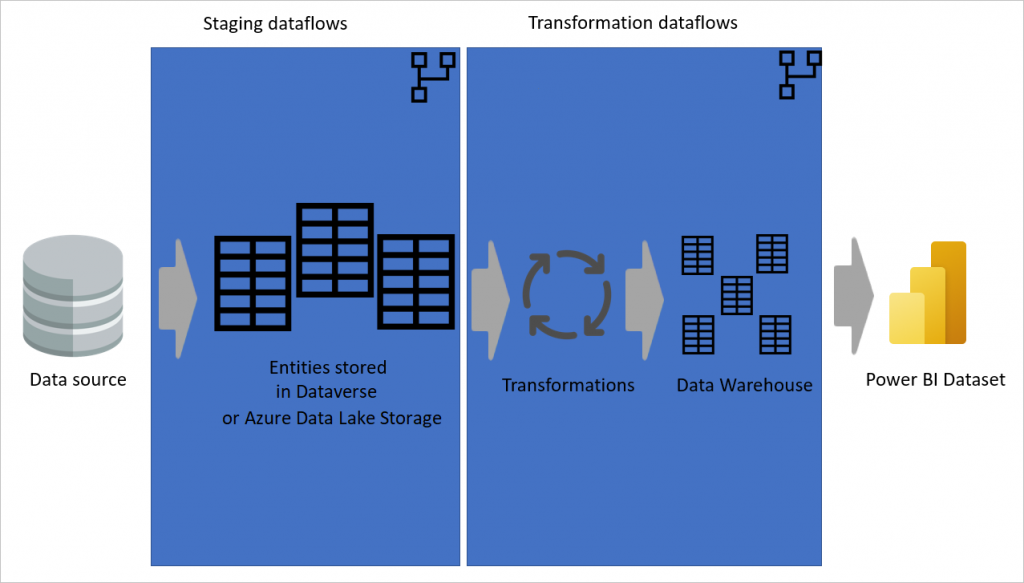

This is not only good for refreshing the Power BI dataset after the dataflow, it is also good for refreshing a dataflow after the other one. Especially in best practice scenarios of dataflow, I always recommend having layers of the dataflow for staging, data transformation, etc, as I explained in the below article.

Although, dataflow is not a replacement for the data warehouses. However, having features like this helps the usability and the adoption of this great transformation service.

Do you think of any scenarios that you use this for? let me know in the comments below, I’d love to hear about your scenarios.

I implemented these dataflows as soon as I saw the release. Very useful for my use case.

I know it is a preview feature, but there were a few things I wish it did or that I could figure out how to address.

First, I wish the connectors more consistently supported Dynamic Values. Dynamic Values would be useful for populating notices and keeping things clear. Dynamic Values for the Refresh a Dataset connector could then support use of conditional logic on success/failure applied. Dataflows are the origination for most issues, but conditional notice would offer peace of mind.

Second, my flows seem to inconsistently wait for refreshes and updates to complete before proceeding to the next flow step. I will often get my “success notices” before the PBI dataset refreshes have completed. Doesn’t seem to affect the function but doesn’t seem consistent with the connector’s intended design.

Last (and not a dataflow connector issue), my process is initiated by loading data files to SharePoint. I first tried to build around the “When a file is created or modified in a folder” SharePoint Connector, but that connector would execute before the upload of the largest files completed, causing issues. Adding delay steps did not seem to help either.

I ended up settling for a manual trigger for now.

I also incorporated the steps to build a Dataflows Monitoring Report (https://docs.microsoft.com/en-us/power-query/dataflows/load-dataflow-metadata-into-dataverse-table). I could see this being useful for larger scale adoption of this automation.

That said, I publish to a PBI Dataset and [Duration] would make the monitoring more useful, but I could not figure out how to reverse engineer from the published example or address given the limitations of working with a dynamic dataset. Any suggestions?

Overall, though, a useful and appreciated feature.

Hi Jose

Thanks for sharing the details. Interesting to know about those. And I guess like what you mentioned that this (hopefully) will be addressed before GA. I would also like the connectors to consider when the upload is finished.

regarding your question about the monitoring report/dataset. What are you trying to reverse-engineer? have you got the log already in the dataverse?

Cheers

Reza

Reza,

Specifically, I am trying to figure out how to calculate Duration from the available Start and End Dynamic Values. I am using the Publish to a PBI Dataset connector and don’t see any means to calculate it on data publishing or while retrieving the data for reporting.

Dynamic datasets don’t seem to allow calculated fields or DAX measures.

Really appreciate all your articles and insights.

Joe

you can use Q&A for calculations 🙂 that is the trick to do it in a streaming dataset 😉

Cheers

Reza

Hello Reza, I would like to ask 2 questions regarding dataflows in shared capacity workspace.

1. In a shared capacity workspace, is there any restriction on the maximum size of the default dataflow storage (not the bring your own lake)? For example – the dataset in shared capacity workspace can be maximum 1GB (post compression)

2. In shared capacity workspace, does the 10GB shared capacity limit of the workspace include the dataflow size?

3. In a shared capacity workspace, suppose I bring my own data lake, then does the 10GB shared capacity of the workspace apply or can my dataflow have data as much as supported by the lake capacity I purchase?

4. Is there any compression applied on the data that is stored by the dataflows?

Thanks.

Hi.

1. I am not aware of any limit on the size of the dataflow, which would make sense. Because one dataflow can include multiple entities, and each entity can have multiple CSV files. your main limitation is the size based on your Power BI license.

2. the 10GB would be your total space to use, including dataflows. If dataflows takes half of it, then you have the other half for Power BI datasets etc.

3. when you bring your own data lake, then there is no limitation on the size of dataflows. as big as your azure subscription allows

4. nothing that I am aware of. they are stored as CSV files.

Cheers

Reza