Using Power BI is simple. However, using it properly needs a good architecture. In a multi-developer environment, the architecture of a Power BI implementation should allow multiple developers to work on the same solution at the same time. On the other hand, the Power BI architecture should be designed so that different layers of that can be decoupled from each other for better integration. In this article, I explain how Dataflows, Datamarts, and Shared Datasets can help build such architecture in Power BI. To learn more about Power BI, read the Power BI book from Rookie to Rock Star.

Video

Challenges of a Single PBIX file for Everything

Before I start explaining the architecture, it is important to understand the challenge and think about how to solve it. The default usage of Power BI involves getting data imported into the Power BI data model and then visualizing it. Although there are other modes and other connection types, however, the import data is the most popular option. However, there are some challenges in a model and a PBIX file with everything in one file. Here are some;

- Multiple developers cannot work on one PBIX file at the same time. Multi-Developer issue.

- Integrating the single PBIX file with another application or dataset would be very hard. High Maintenance issue.

- All data transformations are happening inside the model, and the refresh time would be slower.

- The only way to expand visualization would be by adding pages to the model, and you will end up with hundreds of pages after some time.

- Every change, even a small change in the visualization, means deploying the entire model.

- Creating a separate Power BI file with some parts it referencing from this model would not be possible; as a result, you would need to make a lot of duplicates and high maintenance issues again.

- If you want to re-use some of the tables and calculations of this file in other files in the future, it won’t be easy to maintain when everything is in one file.

- And many other issues.

Suppose you are the only Power BI developer in your organization and are working on a small model that won’t grow into multiple Power BI files in the future. In that case, you can keep going with having everything in one file. However, I do not recommend everything in one file if the scenario is different.

Dataflow to Decouple the Data Preparation Layer

Power Query is the tool for data preparation in the world of Power BI. Based on my experience and what I have seen from other implementations, you will need to spend 70% of your time on data preparation for a proper data model. That means if you are doing a Power BI implementation for ten months, then seven months out of that was spent on data preparation with Power Query! You don’t want that time to be wasted and duplicated for another work. You want to maintain the efforts you have made for your data preparation.

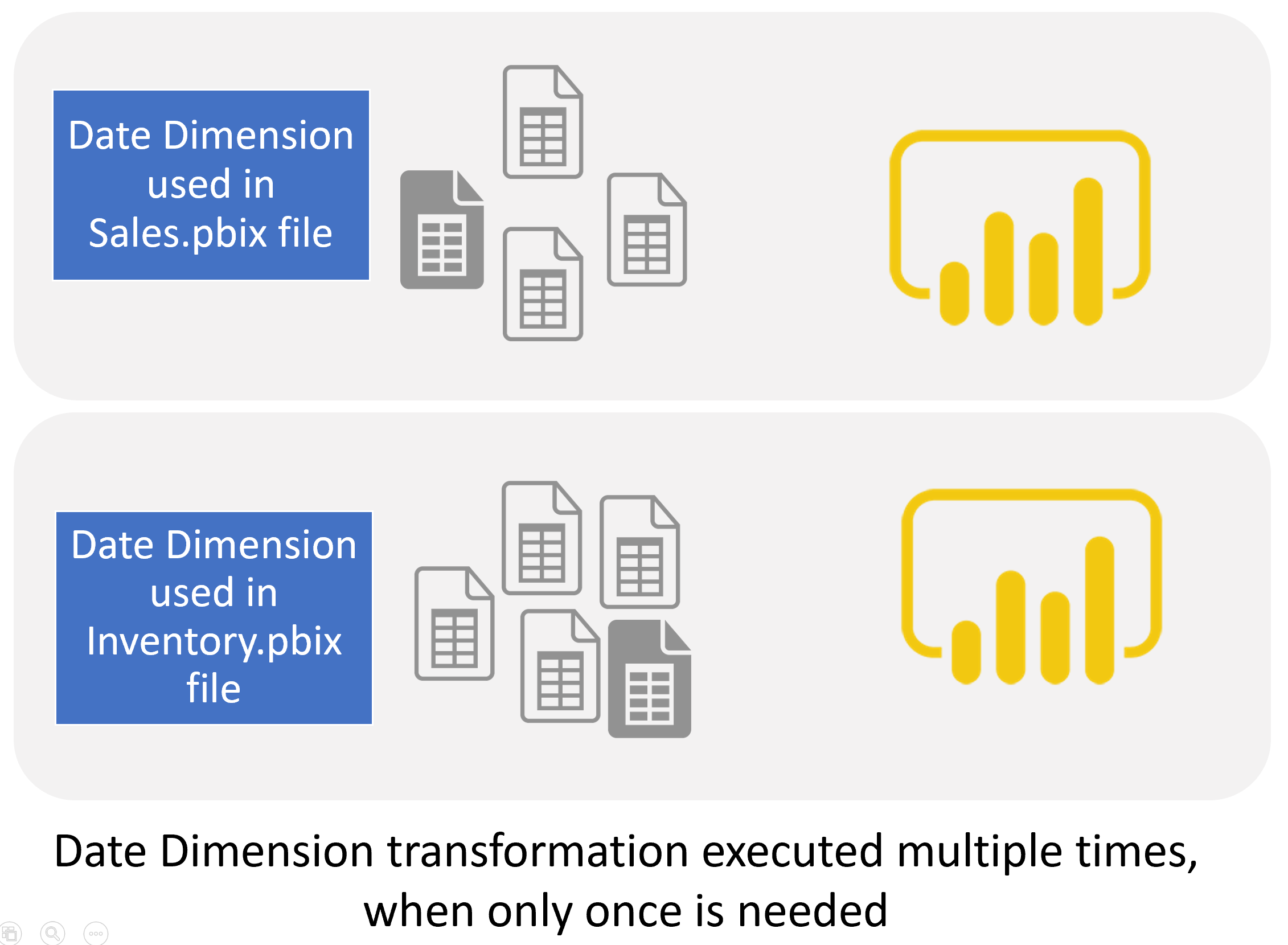

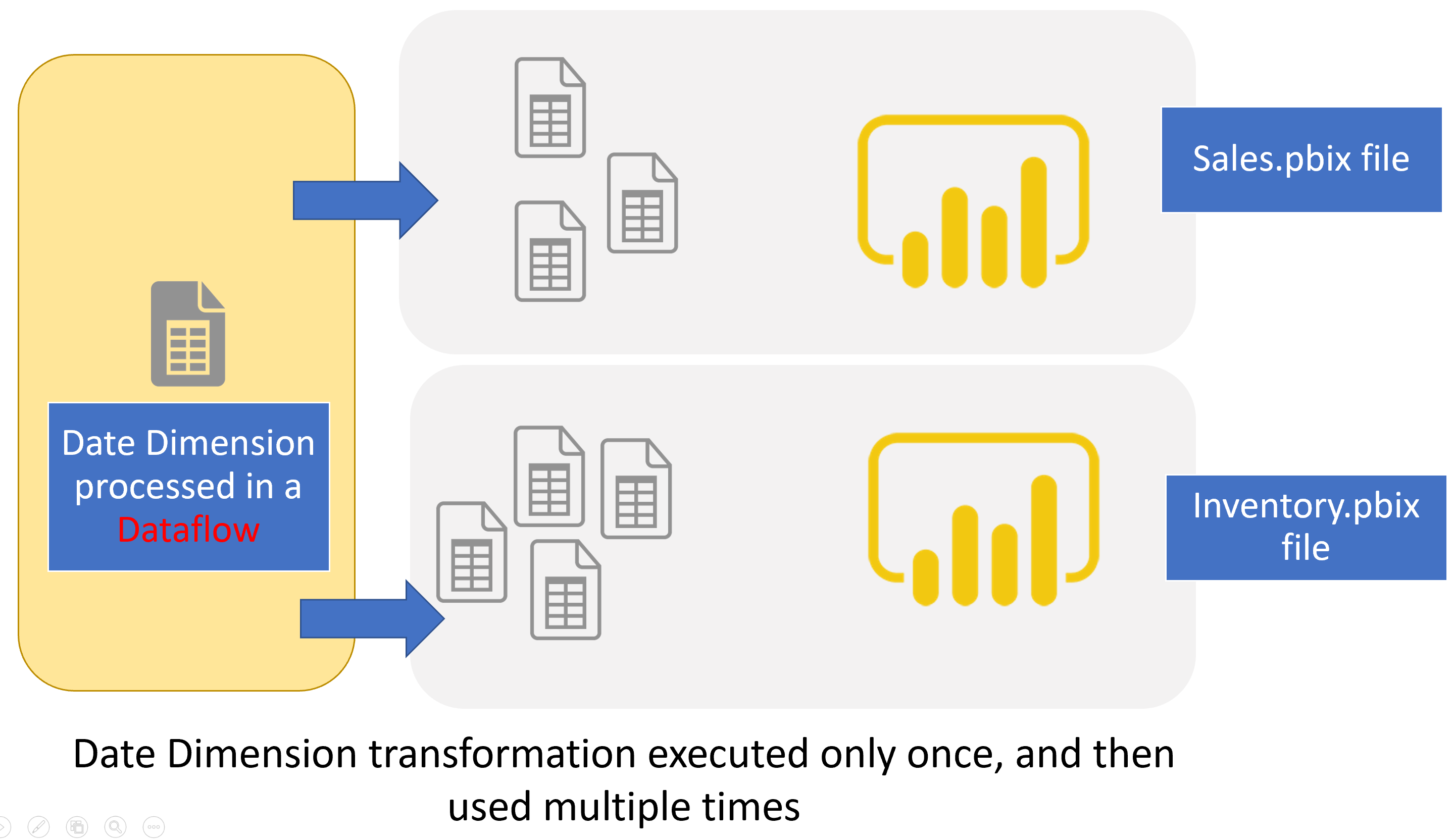

Instead of doing your data preparation as part of a Power BI file, which will link that work only to that file, you can do it through Power BI dataflow. Power BI Dataflow is running Power Query independently from Power BI files. The independence of Dataflow from a Power BI file means you can do data preparation for one entity only once and use it multiple times! In other words, you can use Dataflow to decouple the Power BI system’s data preparation (or ETL) layer from the Power BI file. After doing the data preparation using Dataflow, then Power BI files can get data from it using Get Data from Dataflow.

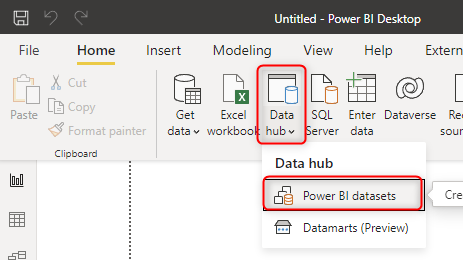

Here is the diagram of the usage of Dataflows with multiple Power BI files:

I have written many articles about Dataflows, which I strongly recommend you to read;

- What are Dataflow and Use Case Scenarios of that in Power BI?

- Creating your first Dataflow

- What are Computed Entity and Linked Entity?

- Workaround for Computed Entity and Linked Entity in Power BI Pro

- How to Use Dataflow to Make the Refresh of Power BI Solution Faster

- Move your Shared Tables to Dataflow; Build a Consistent Table in Power BI

Shared Dataset for the Data Modeling Layer

Although Dataflow creates a shared data source for Power BI models, still, if you want to create multiple Power BI models, you then need to set field-level formatting inside the Power BI file. You need to create DAX calculations inside each file and create hierarchies and any other modeling requirements inside the Power BI model. These settings and formatting cannot be done in the Dataflow. These are parts of the Power BI Dataset.

Instead of doing all the modelings and calculations in each Power BI file separately, you can leverage the Shared dataset concept. The shared dataset concept means you create a Power BI file with a model only and no visualization pages.

A shared dataset then can be used in multiple Power BI reports as a centralized model. You won’t need to duplicate your DAX calculations, hierarchies, and field-level formattings using the shared dataset. The shared dataset will act like the modeling layer of your Power BI solution.

Thin Power BI Reports, Paginated reports, or Analyze in Excel.

Now that you have the Power BI data model in a shared dataset, you can create Power BI reports that gets data from that shared dataset. These reports will create a live connection to that dataset. These are called thin reports.

You can create multiple thin reports getting data from the shared dataset. This enables multiple report visualizers to build visualizations at the same time.

Not only can you create Power BI reports on top of the shared dataset, but you can also create Paginaged reports, or even in Excel connect to the same Power BI dataset.

Power BI Architecture with Dataflow, Shared Dataset, and thin reports

As a result, here is the multi-layered Power BI architecture I recommend using. This architecture hasn’t considered Datamart (I’ll explain that later in this post) because the licensing for Datamart might require a different setup.

In this architecture, we are using layers as below;

- Dataflow for the ETL or data preparation layer

- Shared dataset for the data modeling layer

- Get data from Power BI dataset for the visualization layer (thin reports), paginated reports, or Analyze in Excel

Benefits of a multi-layered Architecture

Every architecture plan has pros and cons. Let’s discuss some of the benefits of this architecture.

Decoupling data preparation layer using Dataflow

The development time you put using Power Query will be preserved and can be used in other Power BI models. As the Dataflow stores the data in Azure Data Lake. Decoupling the data preparation from the modeling means that you can apply changes in the data transformation with the minimum effect on the other layers (modeling). Moving shared data tables to Power Query dataflow is a recommended approach that I wrote about it in another article.

A multi-layered architecture without the need for other developer tools

You can use SSIS or Azure Data Factory, or other ETL tools to take care of the data preparation. However, the power of Power BI resides in its simplicity of it. Everyone with fair knowledge of Power Query can use dataflow too. You don’t need to be a C# or SQL Server developer to work with that tool. When you build the shared dataset, you are still using Power BI to build it. All the skills you need are within the Power BI skillset.

Multi-Developer Environment

The proposed architecture supports multiple developers simultaneously on one Power BI solution. You can have multiple ETL developers (or data engineers) working on Dataflows, data modelers working on the shared Dataset, and multiple report designers (or data visualizers) building reports. They don’t need to wait for each other to finish their work and then continue. They can work independently.

In this environment, everyone can do the job they are skilled best on. The Dataflow developer requires Power Query and M skills but not DAX. The Data modelers need to understand the relationships and DAX, and the report visualizer needs to understand the art of visualization and tips and tricks on how to build informative dashboards.

Re-use of Calculations and Modeling using Shared Dataset

Having a centralized model will reduce the need for writing DAX calculations repeatedly. You will do the modeling once and re-use it in multiple reports. On the other hand, you are sure that all reports are getting their data from a reconciled and fully tested model, or in other words, it is a gold data model.

Minimum redundancy, Maximum Consistency, Low Maintenance

A solution implementation that reduces the need for duplicating the code (using Dataflows and shared Dataset), and re-using the existing code (using get data from Dataflow and Datasets), will be highly consistent. Maintenance of such implementation would be possible with minimal effort.

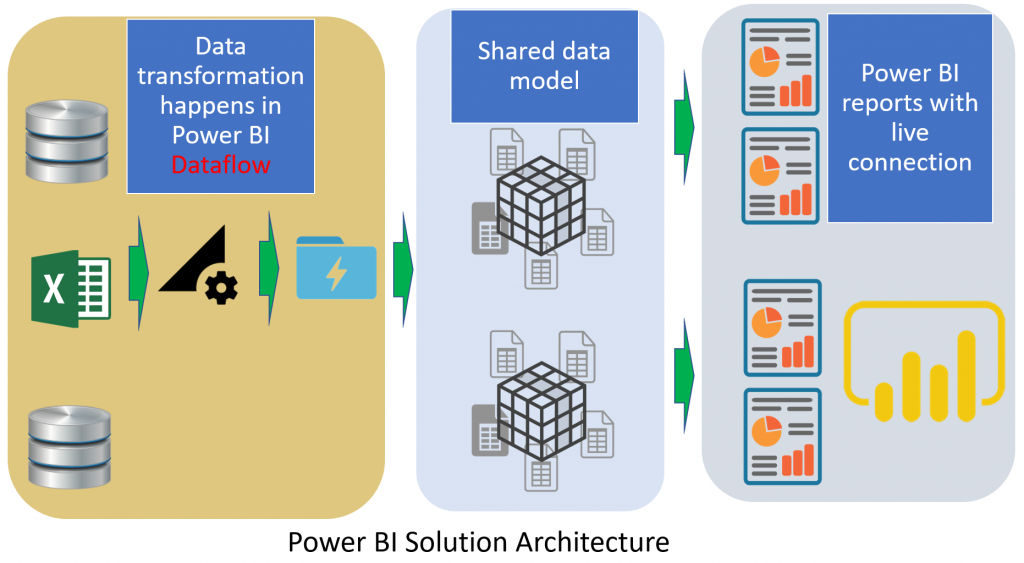

Enhancing the architecture using Power BI Datamart

Power BI Datamart is one of the recent components added to the Power BI ecosystem. This component can take the architecture of Power BI implementation to the next level by bringing one unified UI to build the Dataflow, the data warehouse using Azure SQL Database, and the shared Dataset. Because Datamart requires premium (PPU or Premium capacity) licensing, I did not explain it in the original version of the architecture.

If you have the required licensing, I would recommend using Datamart, which will bring the Datawarehouse as the fourth layer of the Power BI architecture.

I have explained Datamart in some other articles, which I recommend you to study here;

- Power BI Datamart – What is it and Why You Should Use it?

- Getting Started with Power BI Datamart

- Power BI Datamart Components

- Power BI Datamart Integration in the Power BI Ecosystem

Layered Architecture for Dataflow

The architecture mentioned above includes three (or four if you use Datamart) layers. However, this can be expanded into many more layers in a real-world situation. The Data preparation layer, which is done by Dataflow, can split into multiple layers itself. I have explained this concept in my article here. I copied the related parts of that below;

*The below is referenced from my article here: https://docs.microsoft.com/en-us/power-query/dataflows/best-practices-for-dimensional-model-using-dataflows*

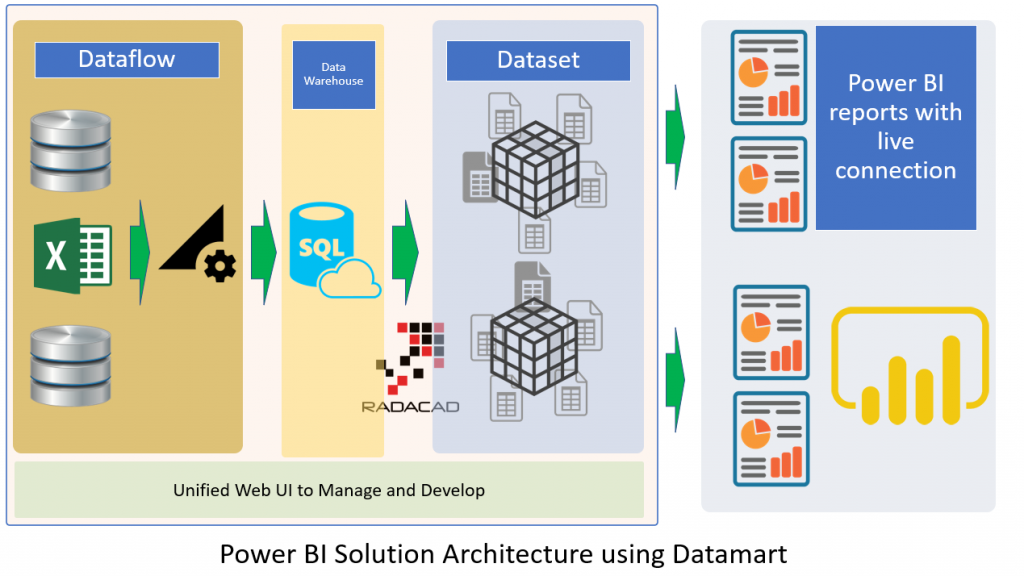

Staging dataflows

One of the key points in any data integration system is to reduce the number of reads from the source operational system. In the traditional data integration architecture, this reduction is made by creating a new database called a staging database. The purpose of the staging database is to load data as-is from the data source into the staging database on a regular schedule.

The rest of the data integration will then use the staging database as the source for further transformation and converting it to the dimensional model structure.

We recommended that you follow the same approach using dataflows. Create a set of dataflows that are responsible for just loading data as-is from the source system (and only for the tables you need). The result is then stored in the storage structure of the Dataflow (either Azure Data Lake Storage or Dataverse). This change ensures that the read operation from the source system is minimal.

Next, you can create other dataflows that source their data from staging dataflows. The benefits of this approach include:

- Reducing the number of read operations from the source system, and reducing the load on the source system as a result.

- Reducing the load on data gateways if an on-premises data source is used.

- Having an intermediate copy of the data for reconciliation purpose, in case the source system data changes.

- Making the transformation dataflows source-independent.

Image emphasizing staging dataflows and staging storage, and showing the data being accessed from the data source by the staging dataflow, and entities being stored in either Cadavers or Azure Data Lake Storage. The entities are then shown being transformed along with other dataflows, which are then sent out as queries.

Transformation dataflows

When you’ve separated your transformation dataflows from the staging dataflows, the transformation will be independent of the source. This separation helps if you’re migrating the source system to a new system. All you need to do in that case is to change the staging dataflows. The transformation dataflows are likely to work without any problem because they’re sourced only from the staging dataflows.

This separation also helps in case the source system connection is slow. The transformation Dataflow won’t need to wait for a long time to get records coming through a slow connection from the source system. The staging Dataflow has already done that part, and the data will be ready for the transformation layer.

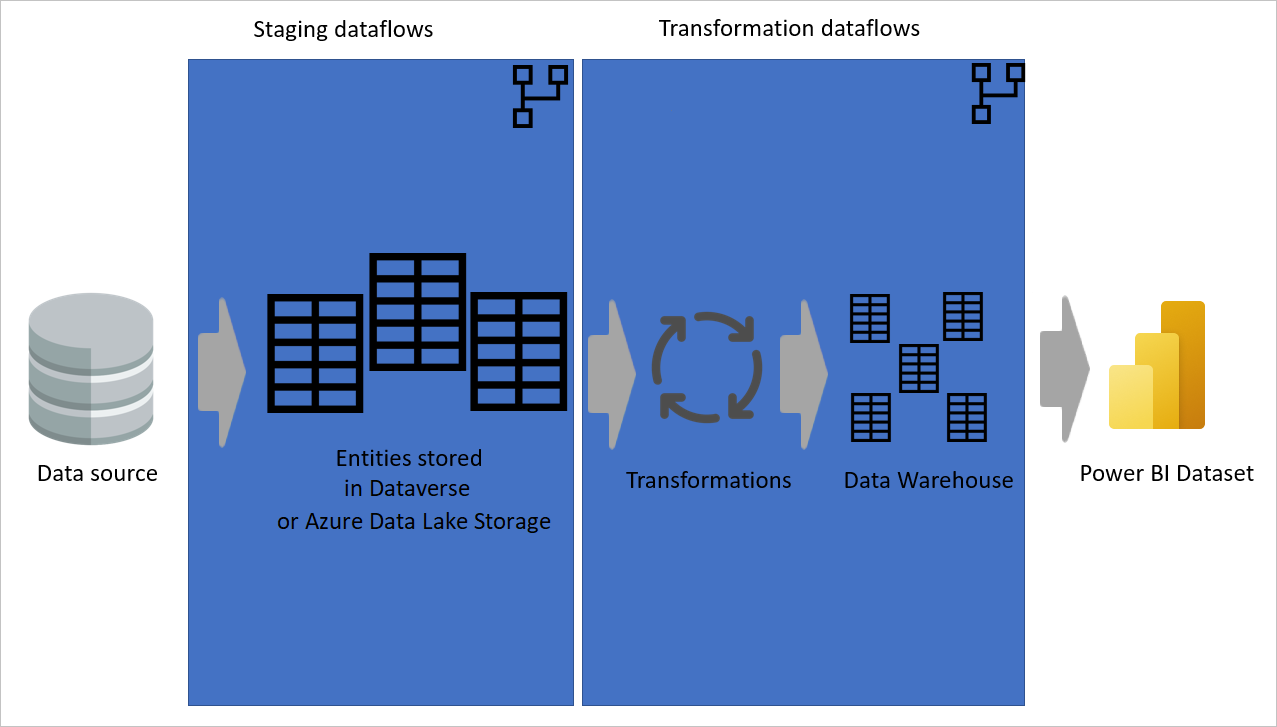

Layered Dataflow Architecture

A layered architecture is an architecture in which you perform actions in separate layers. The staging and transformation dataflows can be two layers of a multi-layered dataflow architecture. Trying to do actions in layers ensures the minimum maintenance required. When you want to change something, you just need to change it in the layer in which it’s located. The other layers should all continue to work fine.

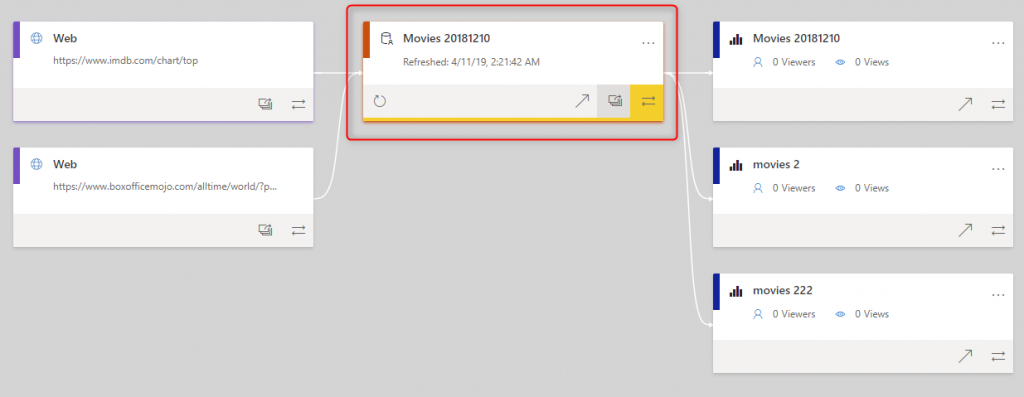

The following image shows a multi-layered architecture for dataflows in which their entities are then used in Power BI datasets.

The Dataflow layered architecture is not only helpful if you are using the 3-layered architecture, it is also helpful if you use the 4-layered architecture with the Datamart. The Datamart can get data from dataflow that are ingesting data from some other transformation dataflow, which are then getting data from the data source using some staging dataflows.

*The above is referenced from my article here: https://docs.microsoft.com/en-us/power-query/dataflows/best-practices-for-dimensional-model-using-dataflows*

Chained Datasets

Power BI Datasets can also be implemented in multiple layers. Chained datasets do this. Chained Datasets are datasets that are using DirectQuery to Power BI Dataset. These chained datasets can have further modeling or combining data from other sources in them. This is particularly helpful for scenarios where data analysts use a centralized model built by the BI team and extend it to a smaller chained model for their use cases.

As you see in the examples above, the Dataflow and the Dataset can become multiple layers each. The number of layers in the architecture is not an important factor. Designing the layers in a way that leads to less maintenance and more re-usable objects and components is the critical thing to consider when you design the architecture of a Power BI solution.

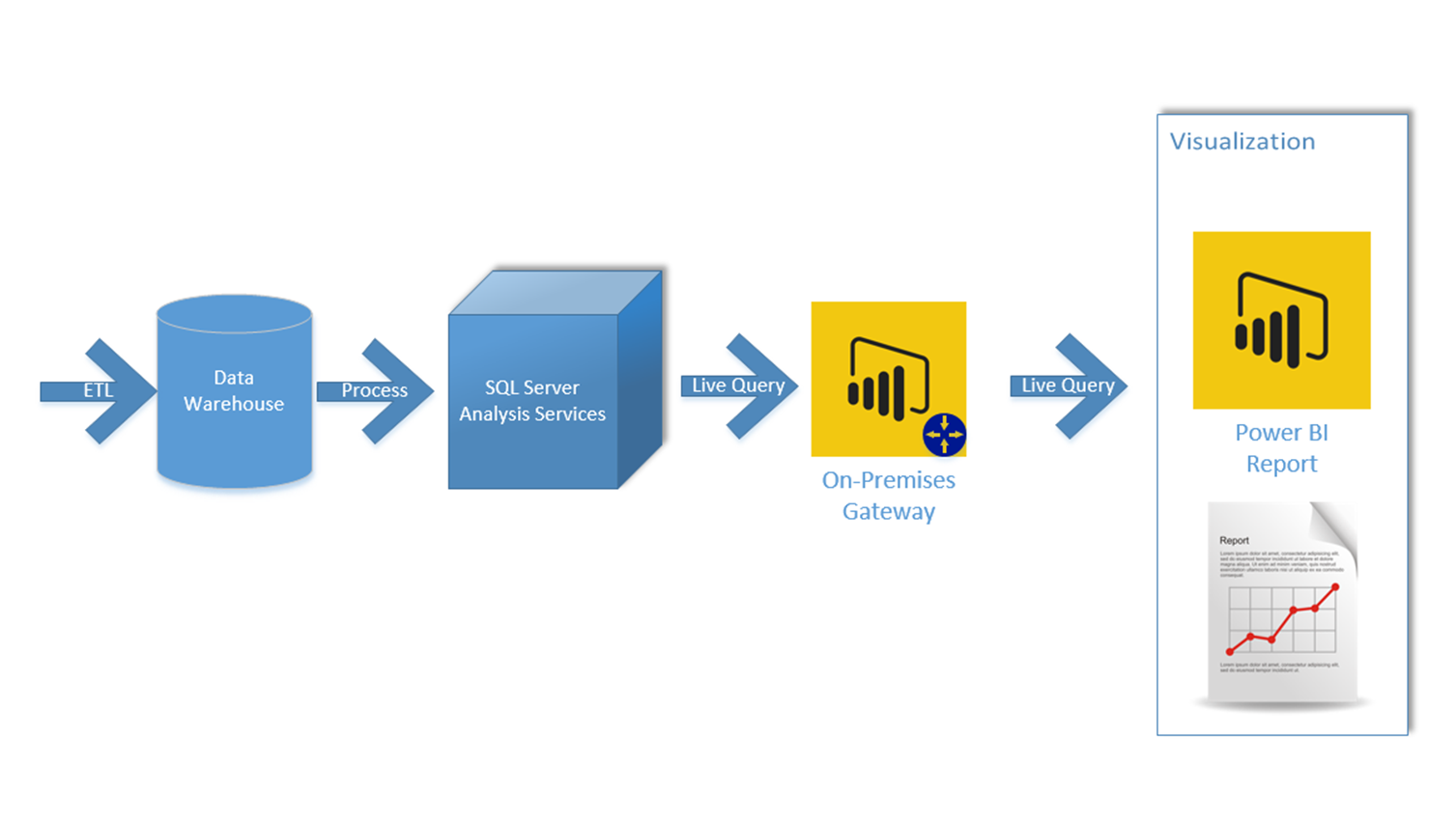

What about Enterprise Architecture?

You might already use Power BI in an enterprise solution using Azure Data Factory or SSIS as the ETL tool, and SSAS as the modeling tool and Power BI live connection as the visualization tool. Such architecture is similar to what I mentioned here already. If you already have that architecture in place, I recommend you to proceed with that.

Summary

Implementing a Power BI solution is simple. However, maintaining it is not! You must have a proper architecture if you want low maintenance, highly scalable, consistent, and robust Power BI implementation. In this article, I explained the benefits of a proposed architecture that leveraged Dataflow for the data preparation layer and shared Dataset for the data modeling layer. The architecture can also be enhanced using Power BI Datamart. The proposed architecture will have the minimum maintenance efforts; it is highly scalable. And I strongly recommend you consider using it in your implementations.

Do you have any thoughts about this architecture or any questions? Please let me know in the comments below.

Hey Reza, thanks for another one nice article!

One question: Since you don’t pay any extra cost for Dataflow, how many data (size of data in MB or GB) can you load and transform with Dataflow since all data are stored in Data Lake Storage.

Hi Yiannis.

It would be the maximum size that you get in your Pro account. Also, consider that the 10GB size in your pro account is not all for dataflows. part of that is used for datasets.

Cheers

Reza

Hi Reza,

It may also be worthwhile just to cover off the differences in capabilities in Dataflows between those offered in Premium and Pro licensing.

I see a lot of the value in Dataflows as being lost due to not being able to offer computed or linked entities without the Premium licensing and for a large number of small to medium organisations, they are finding the cost of entry to Premium too high.

Hi Andrew.

I might write a blog post about that. In the meantime, here is my workaround approach for computed entity

Cheers

Reza

Hi Reza, thx for this insight. Not that it would be best practice of any kind but more of an “options and possibilities” type of question: The model.json file in a cdm folder already presents the ability to define relationships between gen2 dl data objects which gets picked up automatically. To what extent will it also be able integrate abilities that are now described as a pro for shared datasets e.g. dax measures . Now that I think of it could you actually have multiple model.json files pointing to the same data objects in various cdm folders?

Hi

Thanks for mentioning that. I didn’t want to talk about the details of setup in Dataflow in this post, as it was highlights of the architecture.

Yes, true, the relationship helps in that essence. However, it has nothing to do then with calculations in the shared dataset. You still have to do those in the PBIX file that is for the shared model.

Cheers

Reza

Great article!!! Thank you.

Although I don’t think it is truly multi developer environment. Only one developer can work at a single time, it is not like multiple developer can work at the same time. May be my definition multi-developer environment is different than wht is mentioned in this document or I’m totally wrong.

Keep writing these amazing blogs…

Hi Parry.

Multiple developers can work at the same time because they are working on different objects. at the time that the data developer is building dataflows, the data modeler can build the shared model and calculations, and at the same time report designers can build visualizations, because they are all working on different environments. It is not the same file. The only one that doesn’t allow this is that the shared data model has to be created in one PBIX file. I agree that having something like version control that has the ability to Merge the code would be helpful and make it even better in a multi-tenant developer environment. However, this architecture by itself is already a multi-developer environment.

Cheers

Reza

Hi Reza,

Thanks for this great wrap-up. One point that should be noted: Unless you have PowerBI Premium, shared datasets can only be used inside a single workspace for the moment. Hopefully the XMLA endpoint becomes also available for PowerBI Pro soon. Then this allows you to create a workspace with the enterprise model that others get only read-only access.

Hi.

Shared datasets across multiple workspaces are on its way 🙂

Cheers

Reza

Thank you for the post Reza, It’s very useful! But I have a question: isn’t it possible work in the same dataflow two or more developers yet? We have several issues with that limitation, only someone who has admin rights and has created them can work on them :S

Hi Jose.

Dataflows are a new feature in Power BI. so many updates are coming that way, I’m sure of it. In the meantime, my suggestion is this: the most time-consuming part of creating dataflows is the transformation part itself. multiple developers can use Power BI Desktop and Power Query in there to create their transformations, then copy the script and send it to the person who has access to create the dataflow.

Cheers

Reza

This architecture will be great if/when Datasets will be available across all workspaces 😉

Because for now they are not it is not so easy 😉

But, Shared Datasets are planned to be implemented 😀

Hi Marcin.

Yes, shared datasets across multiple workspaces will be available very soon.

Cheers

Reza

Hi Reza,

Thanks for all your insights.

I am trying to use the data flows now, but I am a bit skeptical about reaching the 10GB allocated to our current Pro license. We do not have large data sets tho, but it can become larger over time…

Is there any way that we can work out or project the space it would consume? I just do not want to develop on this and the have to switch back to do it file by file.

Thank you

Hi Oscar

if you are concerned about the space, I’d recommend using your Azure Data Lake subscription. It would be definitely much cheaper than premium. and it won’t consume the space of Power BI pro for dataflows

Cheers

Reza

Hi Reza!

Thank you for this article.

When I read this, I wonder where is the benefit of using tools like Azure Data Factory or Azure Analysis Services when you have a Premium subscription, since the ETL part is covered by Dataflows and the Modeling part by the Shared Dataset part. Is there any drawback compared to these solutions?

Hi Yas

Good question!

At the time of writing this comment, I’d say using ADF and Azure AS would give you more computing power to work on a larger dataset and work with big data.

for example, using ADF, you can expand and scale up to more compute if needed.

dataflows and shared dataset, on the other hand, are great for having everything with one service: Power BI.

Dataflow is still new and hasn’t got those features. however, I am sure this will change soon in the next few months.

Cheers

Reza

Hi Raza Thanks for sharing knowledge.

I agree with you,but power bi premium only allow 10GB data set size right?

In our case dataset size will grow more than 10GB, if we use only one dataset, so we are using multiple datasets which have size around 1 GB. What would be the best solution in that case. we are thinking to take SSAS to azure and do live connect.What are your thoughts?

Power BI size limitation of 10GB recently lifted. you can size it up to 400GB (depend on the capacity you get of course)

Cheers

Reza

Hi Reza, looking at using Power Apps and Common Data Service as a source for Power BI, but I need to append data to an existing entity with data. Everything I’ve tried and can find infers that you can only overwrite the existing data rather than add to it.

“If you use Power Query to add data to an existing entity, all data in that entity will be overwritten”.

I’m looking to append the data in CDS upfront.

Are there any ways around this?

Many thanks

Hi Scott. Are you talking about dataflows or datasets? if dataflows; are you talking about Power BI dataflows or Power Platform dataflows?

Cheers

Reza

Great article! What are your thoughts on using the online report editor to enable multiple visualization developers? I know there are definite limitations with the editor. However, if the model and Power Query development are separate, does the viz developer need everything Power BI Desktop offers? We’ve done some testing and you can definitely have multiple developers editing separate pages in the same report and even developers making changes to the same page. Besides not having Power Query or any DAX capabilities, we also found you don’t have an Image Visual, Performance Analyzer and Themes. Also, if you want to download the PBIX, after you’ve edited online, you have to have the most recent version of PBI Desktop.

Doing the visualization in the service is a viable option. You mentioned some of the limitations already. the benefits is that report visualizer can work with it with any machine even without having Power BI Desktop.

Cheers

Reza