In Microsoft Fabric, Data Factory is the workload for ETL and data integration, and the Data Pipeline is the component of that workload that orchestrates the execution flow. A pipeline contains activities, and you can define the order in which those activities run. In this article and video, you will learn about the execution order and output states in a Data Pipeline and how they can be used in real-world data integration scenarios.

Data Pipeline

A Data Pipeline is a component of Data Factory in Microsoft Fabric. It lets you implement scenarios in which you need to control the execution of multiple tasks. Tasks in a pipeline are called activities. Many activity types are available, for example the Dataflow activity, the Semantic Model refresh activity, and the Office 365 Outlook (email) activity.

To learn more about Data Pipeline, read the articles below:

- Get started with Data Pipeline

- Using Data Pipeline to refresh Power BI semantic model after the refresh of Dataflow

- What is the Data Factory

Sequence of Execution

Activities can run in parallel or sequentially. To define a sequence of execution, you can connect the output of one activity to another.

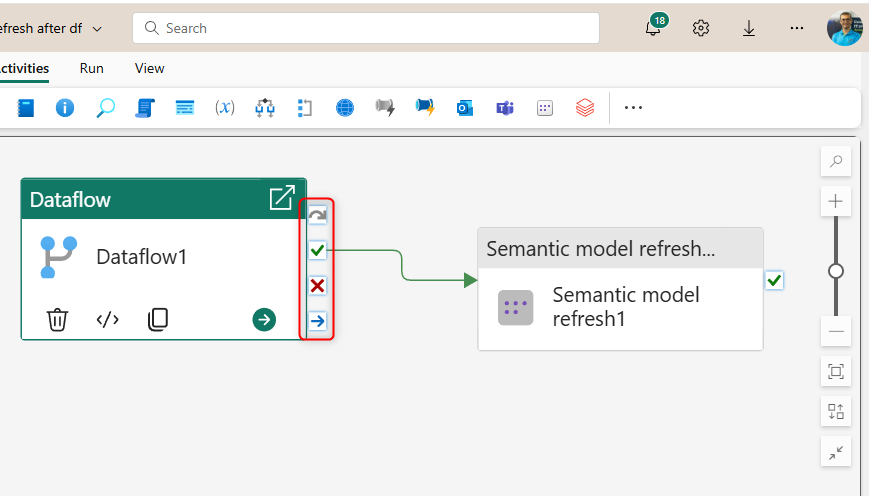

Sequential execution

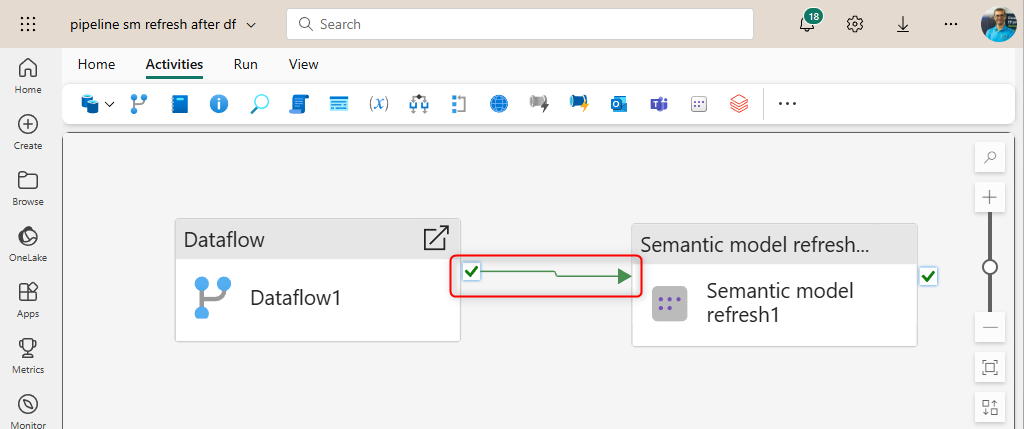

The arrow above between Dataflow1 and the Semantic Model refresh activity means that the Semantic Model refresh activity will run only AFTER the Dataflow activity reaches a specific output state. That connection is what defines the sequence of execution.

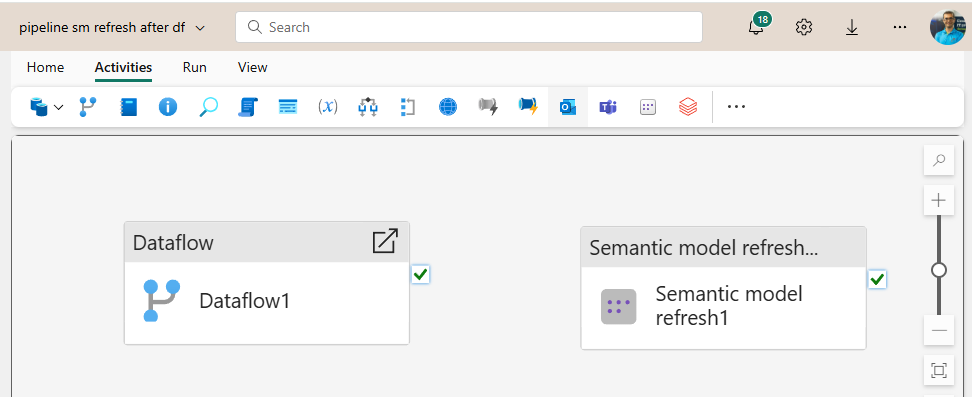

Parallel execution

But if there is no connection between the two activities, they won’t wait for each other; they will run in parallel.
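The difference between the two layouts can be sketched in a few lines of Python. This is only an illustrative model, not how Fabric stores or runs a pipeline: the dictionaries and the `ready_to_start` helper are hypothetical names made up for this example, and output states are ignored for now (an upstream activity simply counts as "finished").

```python
# Hypothetical model: each activity maps to the list of activities it waits for.
# An empty list means nothing is connected to the activity's input.
sequential = {
    "Dataflow1": [],
    "Semantic model refresh": ["Dataflow1"],  # arrow from Dataflow1
}
parallel = {
    "Dataflow1": [],
    "Semantic model refresh": [],  # no arrow between the two activities
}


def ready_to_start(depends_on, finished):
    """Return unfinished activities whose upstream activities have all finished."""
    return sorted(
        name
        for name, upstream in depends_on.items()
        if name not in finished and all(u in finished for u in upstream)
    )


print(ready_to_start(sequential, set()))          # ['Dataflow1']
print(ready_to_start(sequential, {"Dataflow1"}))  # ['Semantic model refresh']
print(ready_to_start(parallel, set()))            # ['Dataflow1', 'Semantic model refresh']
```

With the connection in place, the refresh only becomes ready once Dataflow1 has finished; without it, both activities are ready from the start.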

Output State

Each activity in the pipeline has four output states: On success, On failure, On skip, and On completion.

The On success and On failure output states are self-explanatory: the next activity starts only if the current activity completes successfully or fails, respectively.

On completion means the activity has completed, either successfully or with a failure. This is useful for things such as a logging activity, which should run regardless of the result and isn’t important to the execution of other activities.

On skip applies only in specific scenarios; for example, in an IF condition, only one of the parts of the IF (either THEN or ELSE) is executed, and the other part is SKIPPED. We usually use this output state to send information to another activity for logging purposes.

When you connect any of these output states to the next activity, the next activity will only run when the current activity completes with that state.
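As a rough mental model, each output state can be seen as a set of final statuses that it accepts. The sketch below is an assumption-laden illustration: the status labels (`Succeeded`, `Failed`, `Skipped`) and the `connection_fires` helper are names chosen for this example, not an API of Fabric.

```python
# Which final status of the current activity satisfies each output state.
# The status labels are illustrative names chosen for this sketch.
SATISFIED_BY = {
    "On success": {"Succeeded"},
    "On failure": {"Failed"},
    "On completion": {"Succeeded", "Failed"},
    "On skip": {"Skipped"},
}


def connection_fires(output_state, status):
    """Return True if an activity ending with `status` triggers the connection."""
    return status in SATISFIED_BY[output_state]


# A Dataflow that failed: the On success branch stays idle, the On failure branch runs.
print(connection_fires("On success", "Failed"))     # False
print(connection_fires("On failure", "Failed"))     # True
print(connection_fires("On completion", "Failed"))  # True
```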

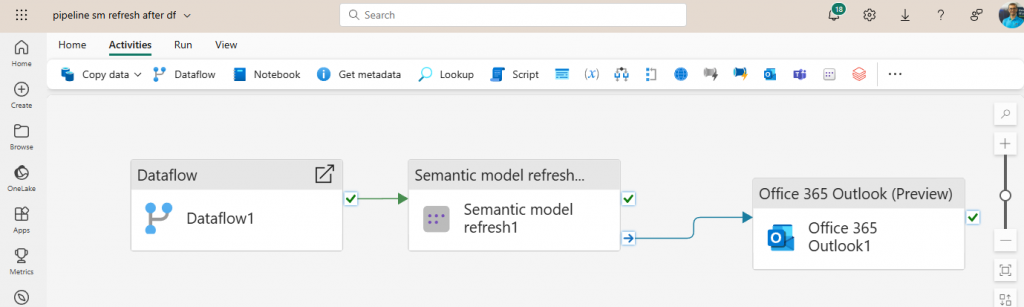

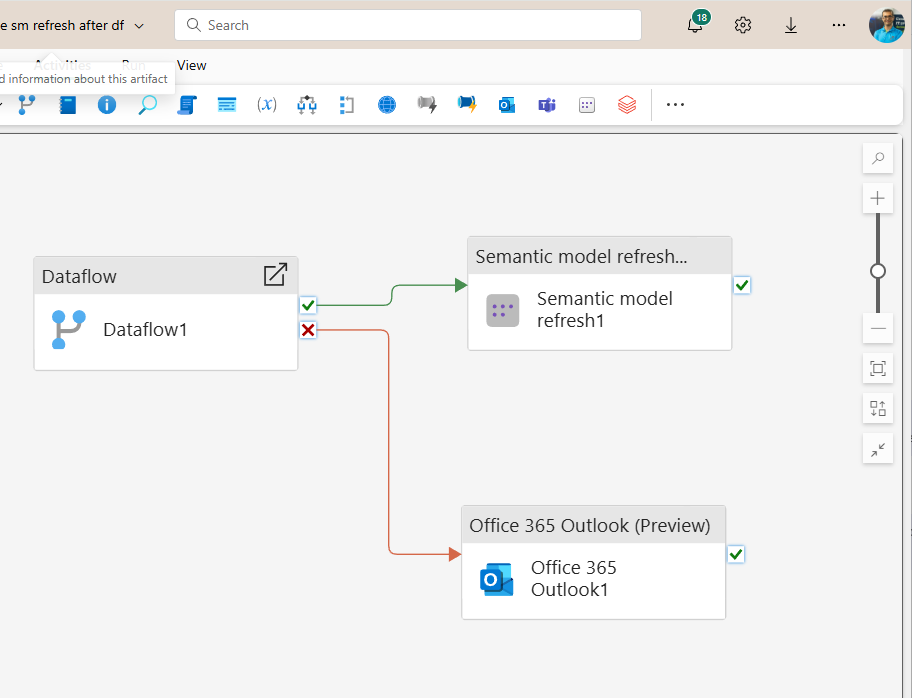

The screenshot above means that if Dataflow completes successfully, then Semantic Model refresh happens; otherwise, if it fails, an email will be sent by the Office 365 Outlook activity.

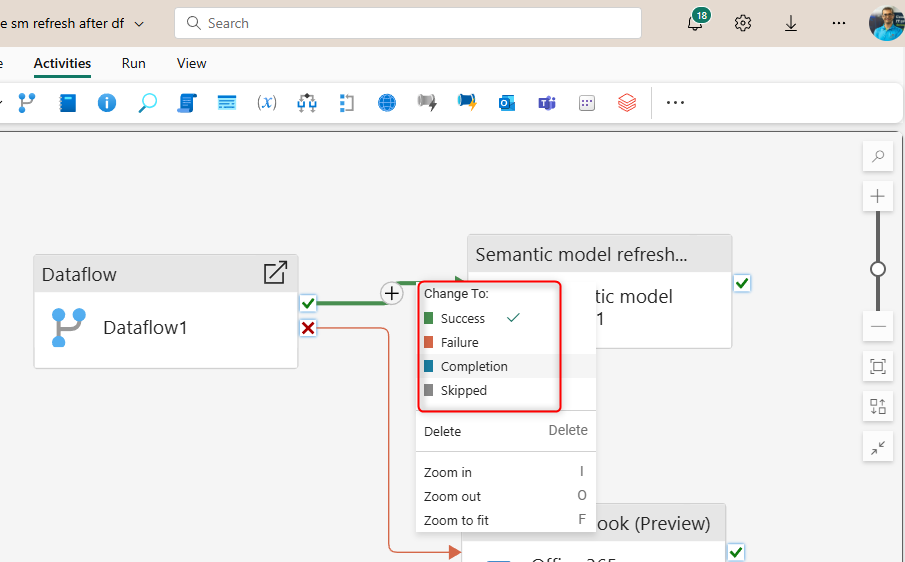

You can also change the output state of a connection by right-clicking on the line between the activities and choosing a different state.

Multiple inputs to one Activity

You may have a situation where multiple activities connect to the same activity. In that case, the next activity executes only after ALL of the connected activities have completed with the output states set on their connections.

In the screenshot above, the Office 365 Outlook activity will only run when all three other activities are completed, meaning Notebook1 and Dataflow1 have to succeed, and Notebook2 has to be completed. Only after all of these are finished with the mentioned states will the execution of the Office 365 Outlook activity start.

In other words, when one activity has multiple inputs, the output states of all those inputs are combined with a logical AND: the subsequent activity executes only when every one of them is met.
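The AND behaviour for the Notebook1, Dataflow1, and Notebook2 example can be sketched as follows. This is a simplified model under stated assumptions: the status labels and the `should_run` helper are hypothetical, and an activity that has not finished yet is represented by simply being absent from the `statuses` dictionary.

```python
# Final statuses accepted by each output state (illustrative labels).
SATISFIED_BY = {
    "On success": {"Succeeded"},
    "On failure": {"Failed"},
    "On completion": {"Succeeded", "Failed"},
    "On skip": {"Skipped"},
}

# Inputs of the Office 365 Outlook activity: (upstream activity, output state).
outlook_inputs = [
    ("Notebook1", "On success"),
    ("Dataflow1", "On success"),
    ("Notebook2", "On completion"),
]


def should_run(inputs, statuses):
    """Logical AND over all inputs; an activity missing from `statuses` has not finished."""
    return all(statuses.get(name) in SATISFIED_BY[state] for name, state in inputs)


# Notebook2 may fail, because its connection is On completion.
print(should_run(outlook_inputs, {"Notebook1": "Succeeded", "Dataflow1": "Succeeded", "Notebook2": "Failed"}))  # True
# Notebook1 failed, so its On success condition is not met.
print(should_run(outlook_inputs, {"Notebook1": "Failed", "Dataflow1": "Succeeded", "Notebook2": "Succeeded"}))  # False
# Notebook2 is still running, so the email has to wait.
print(should_run(outlook_inputs, {"Notebook1": "Succeeded", "Dataflow1": "Succeeded"}))  # False
```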

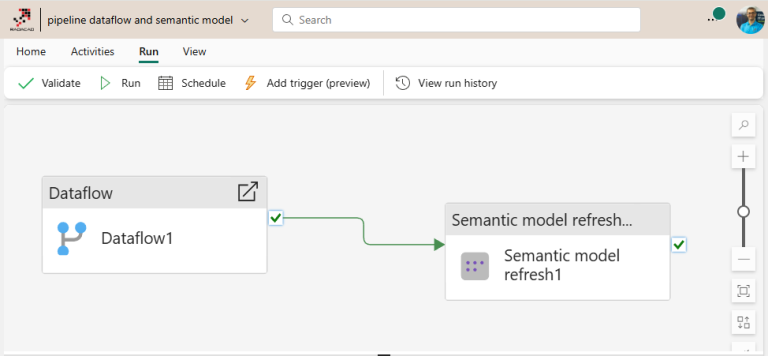

Refresh of Power BI Semantic Model after refresh of Dataflow

A common use of the execution order is a simple setup that refreshes the Power BI Semantic Model right after the Dataflow (or Dataflows) completes successfully.

Please read this article to know more about it.

An example real-world scenario

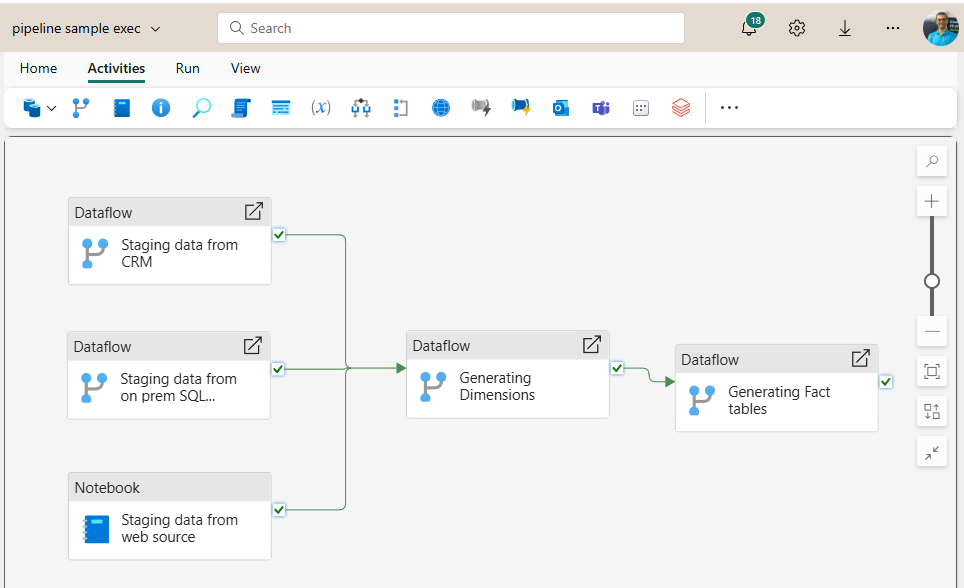

The above can be helpful in situations where you want multiple activities to run in parallel and then lead to the execution of another activity. For example, you want the staging of data from various sources to run in parallel, but once all the data is ready, you want to process the dimension and fact tables in sequence. The screenshot below shows such a process.

In the above, we want the staging process to start simultaneously from all three sources (SQL Server, CRM, and web source), but depending on the source’s performance and volume of the data, each may finish at a different time. We want to generate dimension tables only after all the staging activities are finished because the data isn’t complete before that. Once the dimension tables are fully populated, then we want the fact tables to be populated. That is what the pipeline above does.
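The same shape (parallel staging, then dimensions, then facts) can be simulated in plain Python with `asyncio`. This is only an analogy to show the ordering, not a Fabric pipeline: the activity names and sleep durations are made up, and every activity is assumed to succeed.

```python
import asyncio


async def run_activity(name, seconds, log):
    """Stand-in for a pipeline activity: wait, then record that it finished."""
    await asyncio.sleep(seconds)
    log.append(name)


async def run_pipeline():
    log = []
    # Staging from all three sources starts at the same time; each source
    # may finish at a different moment.
    await asyncio.gather(
        run_activity("Stage SQL Server", 0.03, log),
        run_activity("Stage CRM", 0.01, log),
        run_activity("Stage web source", 0.02, log),
    )
    # gather() returns only after ALL staging activities have finished,
    # so the dimension load never sees partially staged data.
    await run_activity("Load dimension tables", 0.01, log)
    # Fact tables are loaded only after the dimension tables are populated.
    await run_activity("Load fact tables", 0.01, log)
    return log


log = asyncio.run(run_pipeline())
print(log)
```

The order of the three staging entries in the log depends on how long each source takes, but the dimension and fact loads always come last, in that order.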

Summary

This article explained some of the principles and concepts behind the execution order of activities in the Data Pipeline. Activities can run in parallel or sequentially. You can choose which output state of the first activity leads to the execution of the next activity. When output states from multiple activities are connected to one subsequent activity, that activity starts executing only after ALL of those activities have finished with the chosen states.

Here are some links to study more: