Although this seems to be a simple thing to do, it is not a setting that you can turn on or off. If you have a Dataflow that does the ETL, transforming and preparing the data, then you need to refresh the Power BI semantic model after the Dataflow refresh. Only when both the Dataflow and the semantic model have refreshed successfully, in that order, will the report show the up-to-date data. Fortunately, in Fabric, this is straightforward to set up. In this article and video, I’ll explain how.


Dataflow for ETL Layer

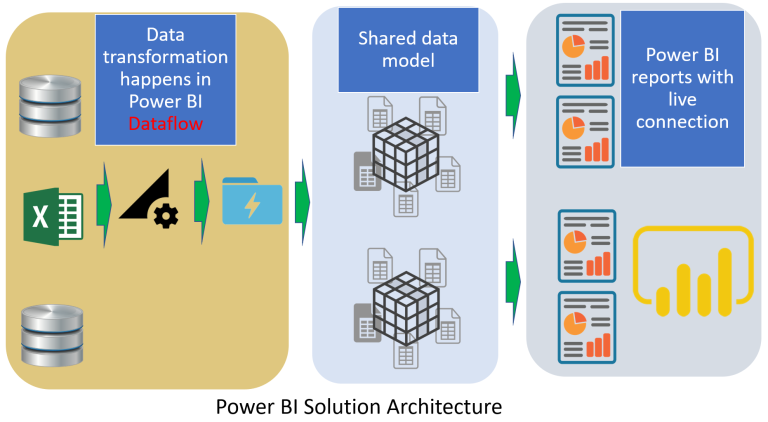

Dataflow is a component that separates data transformation and data preparation from the Power BI model. Using Dataflow, you can get data from different sources, transform it using Power Query transformations, and load the results into a destination. Dataflow Gen1 stores its output either in ADLS Gen2 in CSV file format or in Dataverse, while Dataflow Gen2 supports multiple destinations such as Lakehouse, Warehouse, Azure SQL Database, KQL Database, etc.

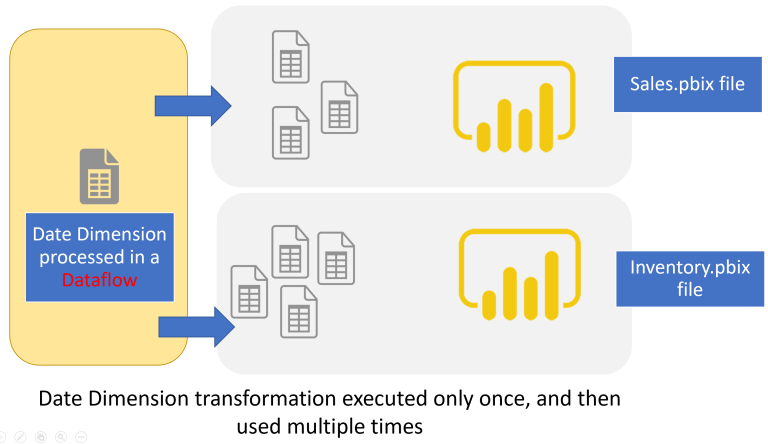

One of the best practices in Power BI architecture is a multi-layered architecture, where Dataflow does the ETL. This approach helps in many situations: for example, multiple semantic models can use the same table generated by the Dataflow, and the Dataflow developer can work in parallel with the data model developer.

When you follow this approach and build a Dataflow, the Dataflow must be refreshed to load the up-to-date transformed data into the destination, ready for the Power BI model.

Here are a few links to learn more about Dataflow:

- What is Dataflow and its use cases

- Dataflow Gen2 in Microsoft Fabric

- Dataflow Gen1 vs Dataflow Gen2

- Multi-layered architecture for Power BI

Power BI Semantic Model

The Power BI Semantic Model used to be called the Power BI Dataset. It holds the connection to the data source, the tables, and their data. Calculations such as DAX measures are also added to the semantic model. A Power BI report is just a thin visualization layer that reads data from the semantic model. Behind the scenes, the semantic model keeps the data in a file format and loads it into memory for faster processing in a tabular engine called VertiPaq.

When used in Import mode, the Power BI Semantic Model must be refreshed for the report to show the most up-to-date data.

To learn more about Power BI Semantic Model, read this article;

Refresh the Semantic Model after the Dataflow

When you use Dataflow in your Power BI implementation, you need to ensure that the Dataflow is refreshed before the Semantic Model. These two steps must run in the following order:

- Refresh Dataflow(s)

- Refresh Power BI Semantic Model

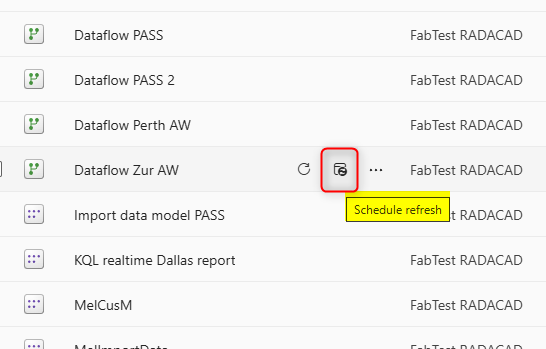
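The ordering requirement above can be sketched in a few lines of Python. This is only an illustration of the logic, not how Fabric implements it: `refresh_in_order` and the two `fake_*` functions are hypothetical stand-ins for the real refresh operations.

```python
from typing import Callable, List


def refresh_in_order(
    refresh_dataflow: Callable[[], bool],
    refresh_semantic_model: Callable[[], bool],
) -> str:
    """Refresh the dataflow first; refresh the model only if that succeeded."""
    if not refresh_dataflow():
        # The model would only re-import stale data, so stop here.
        return "dataflow failed; semantic model not refreshed"
    if not refresh_semantic_model():
        return "semantic model refresh failed; report data is stale"
    return "report data is up to date"


# Hypothetical stand-ins that record the order in which they were called.
calls: List[str] = []


def fake_dataflow_refresh() -> bool:
    calls.append("dataflow")
    return True


def fake_model_refresh() -> bool:
    calls.append("semantic model")
    return True


result = refresh_in_order(fake_dataflow_refresh, fake_model_refresh)
print(calls, result)
```

The report is up to date only when both steps succeed in this order; if the first step fails, the second one is never attempted.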

You can schedule the refresh of each of these individually, but the refresh settings have no option to start the Semantic Model refresh once the Dataflow refresh has finished. However, there is an easy solution.

Data Pipeline

The Data Pipeline is a component of Fabric Data Factory that orchestrates the execution of activities, such as sending emails or running SQL code. There are many different types of activities to choose from. The pipeline lets you set the execution order and specify which output state of one activity (for example, success or failure) triggers the next activity.

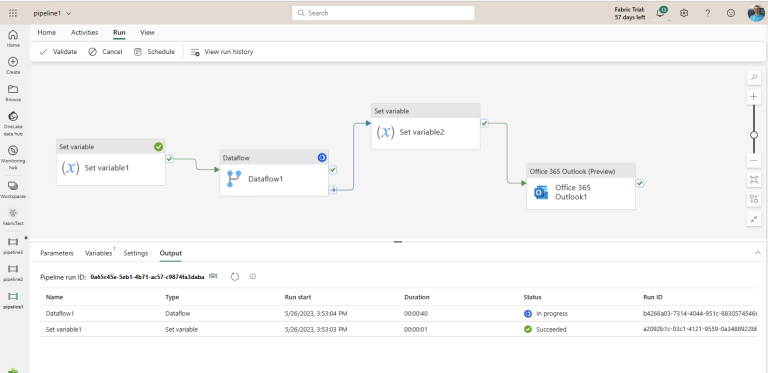

Here is an example of a data pipeline:

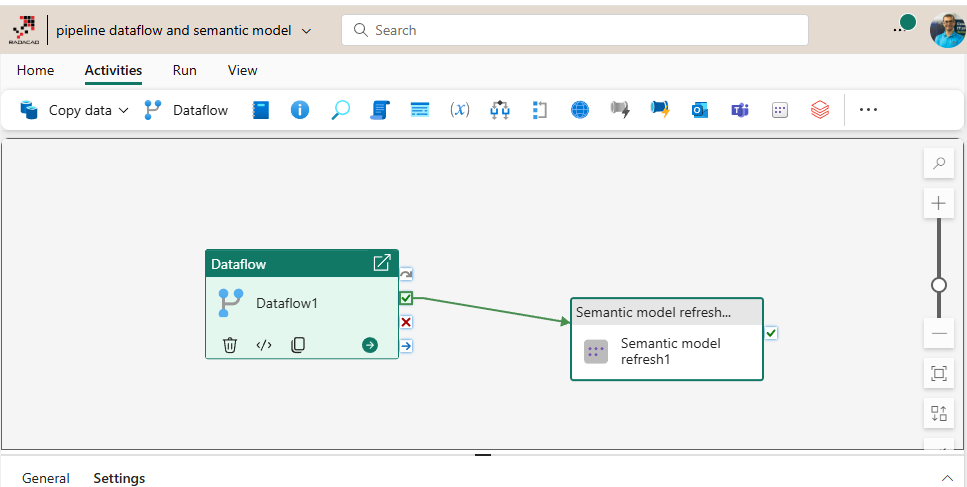

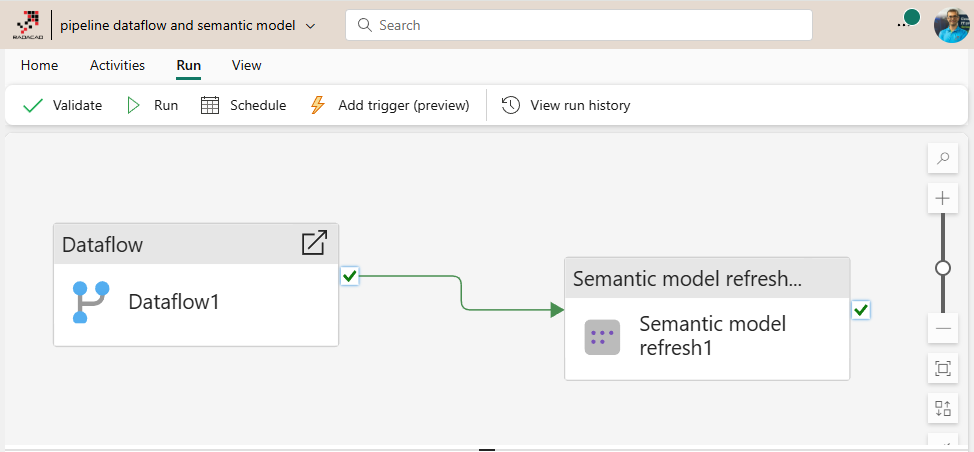

Data Pipeline gives us the solution to this problem. You need just two activities: a Dataflow activity and a Semantic Model Refresh activity that runs upon successful execution of the first. Then, you schedule the pipeline itself. There is no need to schedule the Dataflow or the Semantic Model refresh separately, because both run as part of the pipeline.

Here are the steps to set this up in the Fabric portal:
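To make the dependency concrete, here is a minimal sketch of what such a two-activity pipeline could look like as data. The `dependsOn` / `dependencyConditions` shape follows the Data Factory pipeline JSON convention; the activity `type` strings and the `typeProperties` keys are assumptions for illustration, so check the JSON view of your own pipeline for the exact names. `build_pipeline_definition` and `execution_order` are hypothetical helpers.

```python
from typing import Dict, List


def build_pipeline_definition(dataflow_name: str, model_name: str) -> Dict:
    """Describe a pipeline with two activities chained on success."""
    dataflow_activity = {
        "name": "Refresh dataflow",
        "type": "RefreshDataflow",  # assumed type name
        "dependsOn": [],
        "typeProperties": {"dataflow": dataflow_name},  # simplified placeholder
    }
    model_activity = {
        "name": "Refresh semantic model",
        "type": "PBISemanticModelRefresh",  # assumed type name
        # Run only when the dataflow activity ends in the Succeeded state.
        "dependsOn": [
            {"activity": "Refresh dataflow", "dependencyConditions": ["Succeeded"]}
        ],
        "typeProperties": {"semanticModel": model_name},  # simplified placeholder
    }
    return {"properties": {"activities": [dataflow_activity, model_activity]}}


def execution_order(definition: Dict) -> List[str]:
    """Return activity names in an order that respects dependsOn."""
    activities = definition["properties"]["activities"]
    remaining = {
        a["name"]: {d["activity"] for d in a["dependsOn"]} for a in activities
    }
    ordered: List[str] = []
    while remaining:
        ready = [n for n, deps in remaining.items() if deps <= set(ordered)]
        if not ready:
            raise ValueError("circular dependency between activities")
        for name in ready:
            ordered.append(name)
            del remaining[name]
    return ordered


definition = build_pipeline_definition("Sales ETL", "Sales Model")
print(execution_order(definition))
```

Because the ordering lives in the pipeline definition, only the pipeline needs a schedule; neither activity needs its own.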

- Go to the Fabric portal and select Data Factory

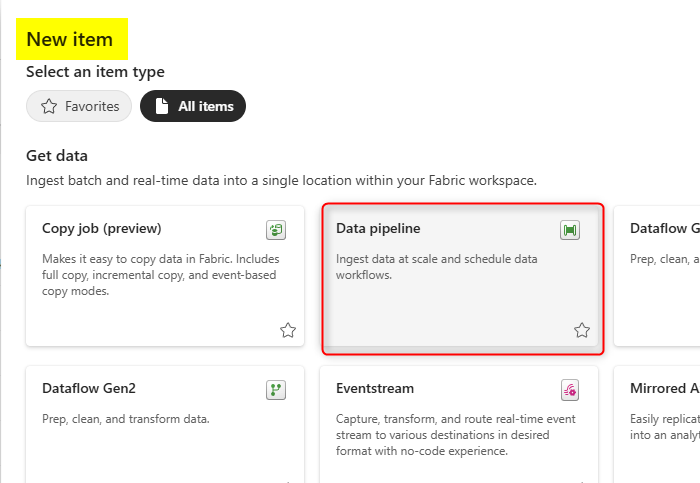

- Create a Data Pipeline

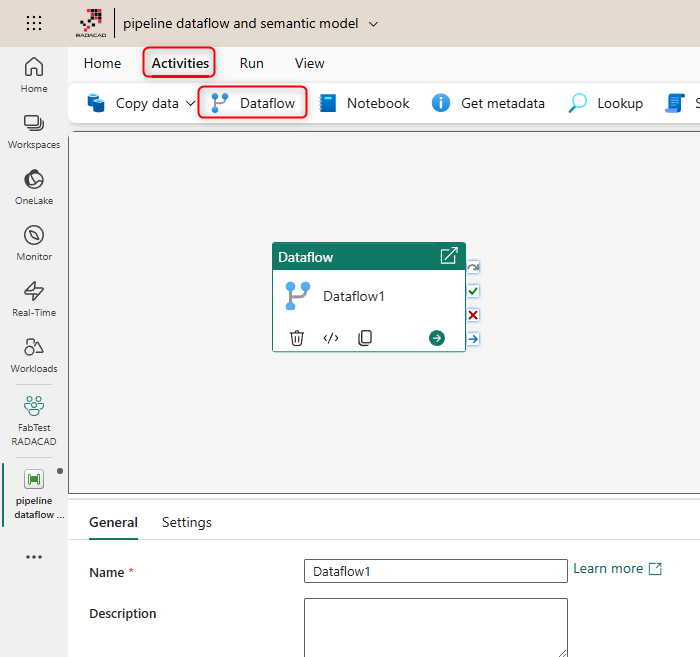

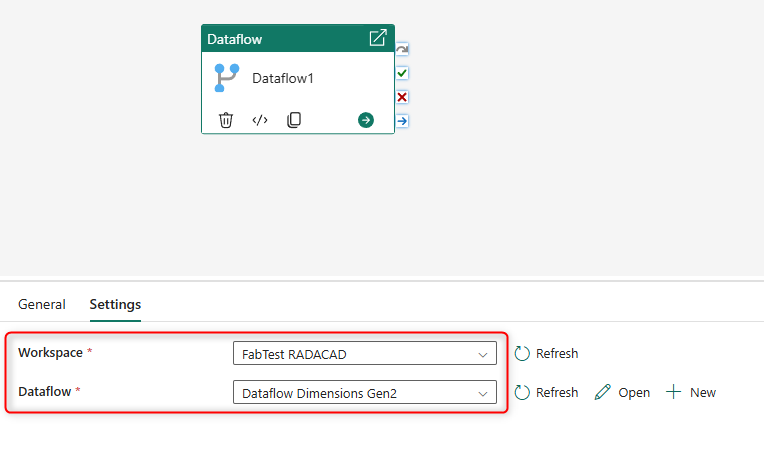

- Add a Dataflow activity

- Select the Dataflow you want to run in this activity

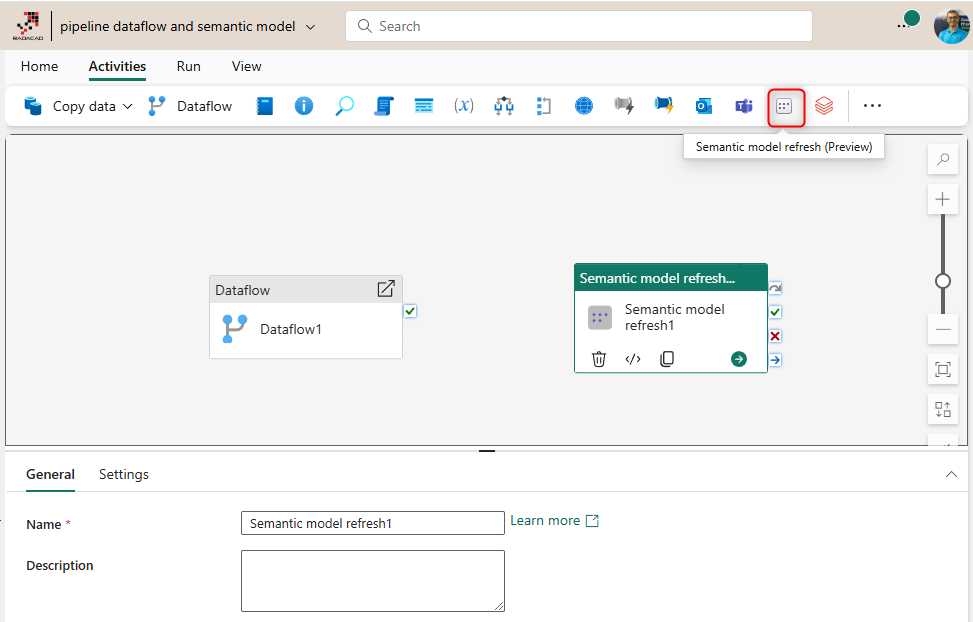

- Add a Semantic Model Refresh activity

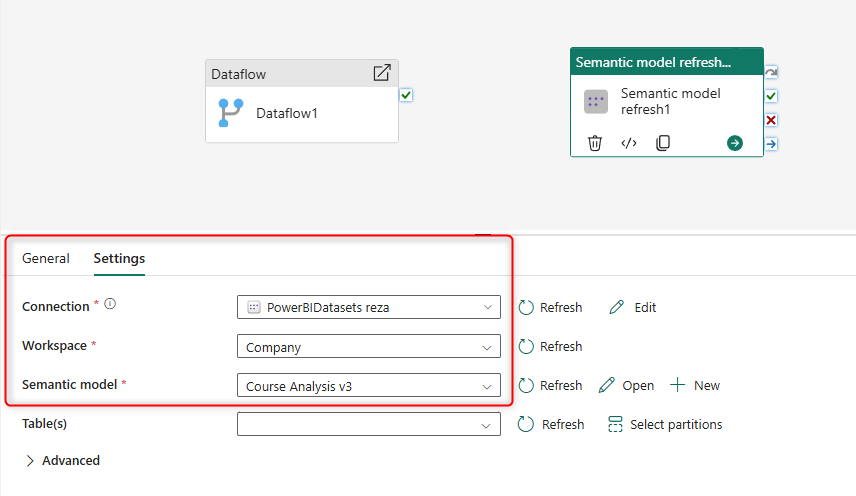

- Set the connection and choose the Semantic model you want to refresh

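- Connect the success output of the Dataflow activity to the Semantic Model Refresh activity, so that the refresh runs only after the Dataflow refresh succeeds.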

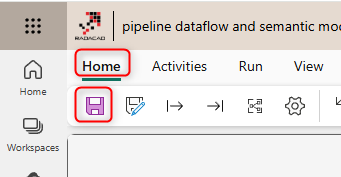

- Save the pipeline

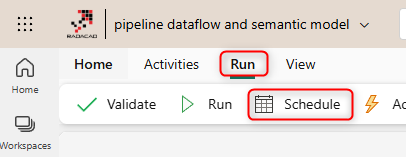

- Schedule it to run

This is what the pipeline looks like;

Data Pipeline has many other activities that can enhance this process even further, such as a more detailed notification set up with the Office365 or Teams activity, or an action such as running a notebook to perform a machine learning training process.

Summary

Microsoft Fabric enables many practical scenarios for Power BI development. One of them is the Data Pipeline, which makes it easy to refresh the semantic model right after the Dataflow refresh succeeds. The same pipeline can also run a notebook, send an email notification, etc.

Here are some helpful links mentioned in this article which can help you to study more;