I have written previously about the use cases of Dataflow in Power BI, and one of them was using one Power Query table in multiple Power BI reports. However, I that article just covered the surface, and I still see many solutions duplicating Power Query scripts in multiple files which brings a lot of inconsistency issues. In this article, I’m going to explain in details what I mean by keeping a single instance, the one version of the truth, for your Power Query table through Dataflow, and build a consistent table in your Power BI solutions. To learn more about Power BI, read Power BI book from Rookie to Rock Star.

What is Power BI Dataflow?

Dataflow is Power Query scripts running on the cloud and storing the result in Azure Data Lake storage. I would highly recommend you to read this article to know what Dataflow is and what are use case scenarios for it. I also encourage you to read this article to learn how to create a dataflow. I also recommend reading a few other articles about dataflow as below;

- What is Dataflow and Use Case Scenarios of that in Power BI?

- Creating your first Dataflow

- What is the Common Data Model and Why Should You Care?

- What is Computed Entity and Linked Entity?

- Workaround for Computed Entity and Linked Entity in Power BI Pro

- How to Use Dataflow to Make the Refresh of Power BI Solution Faster

Shared Table in Power Query

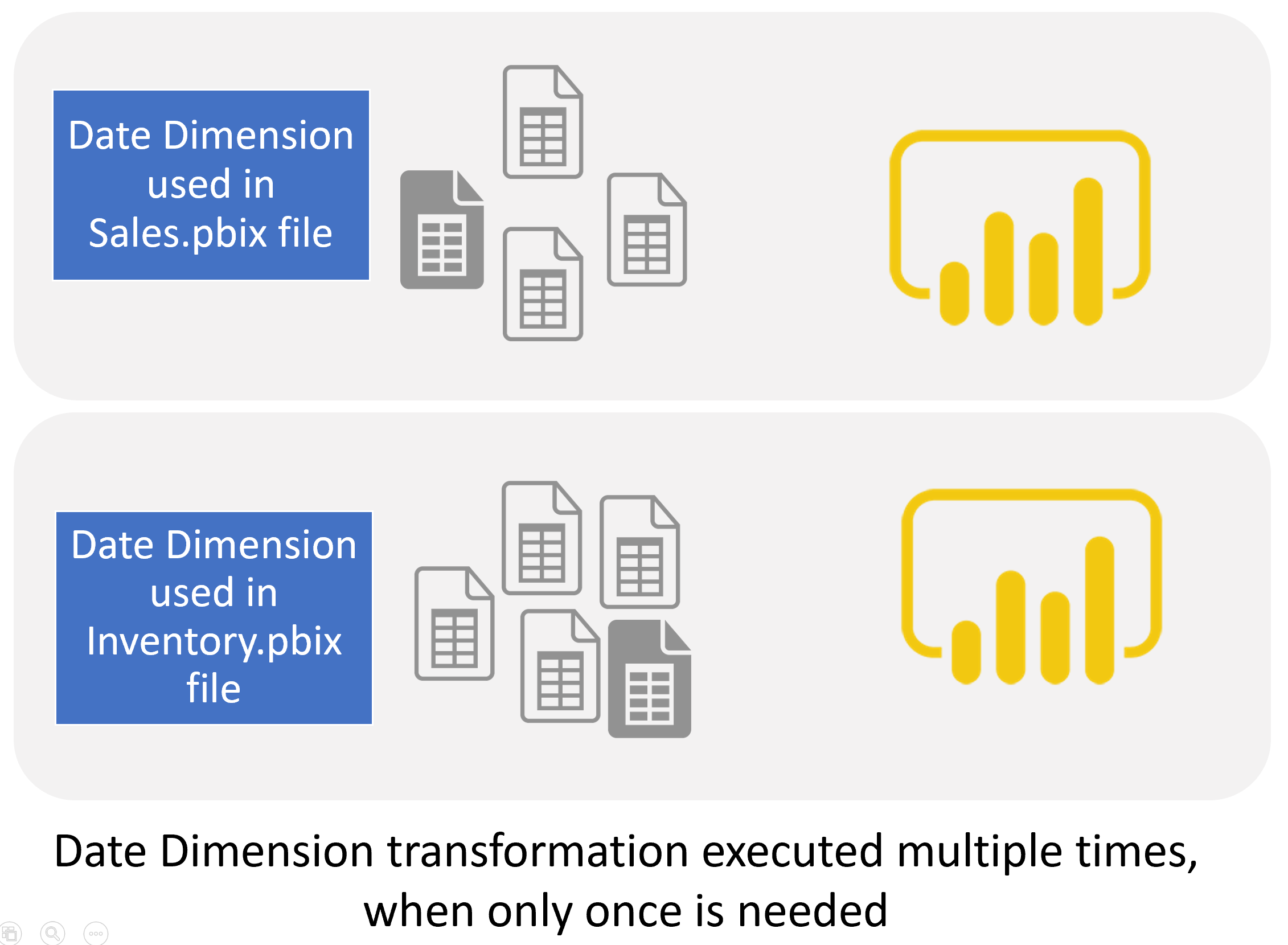

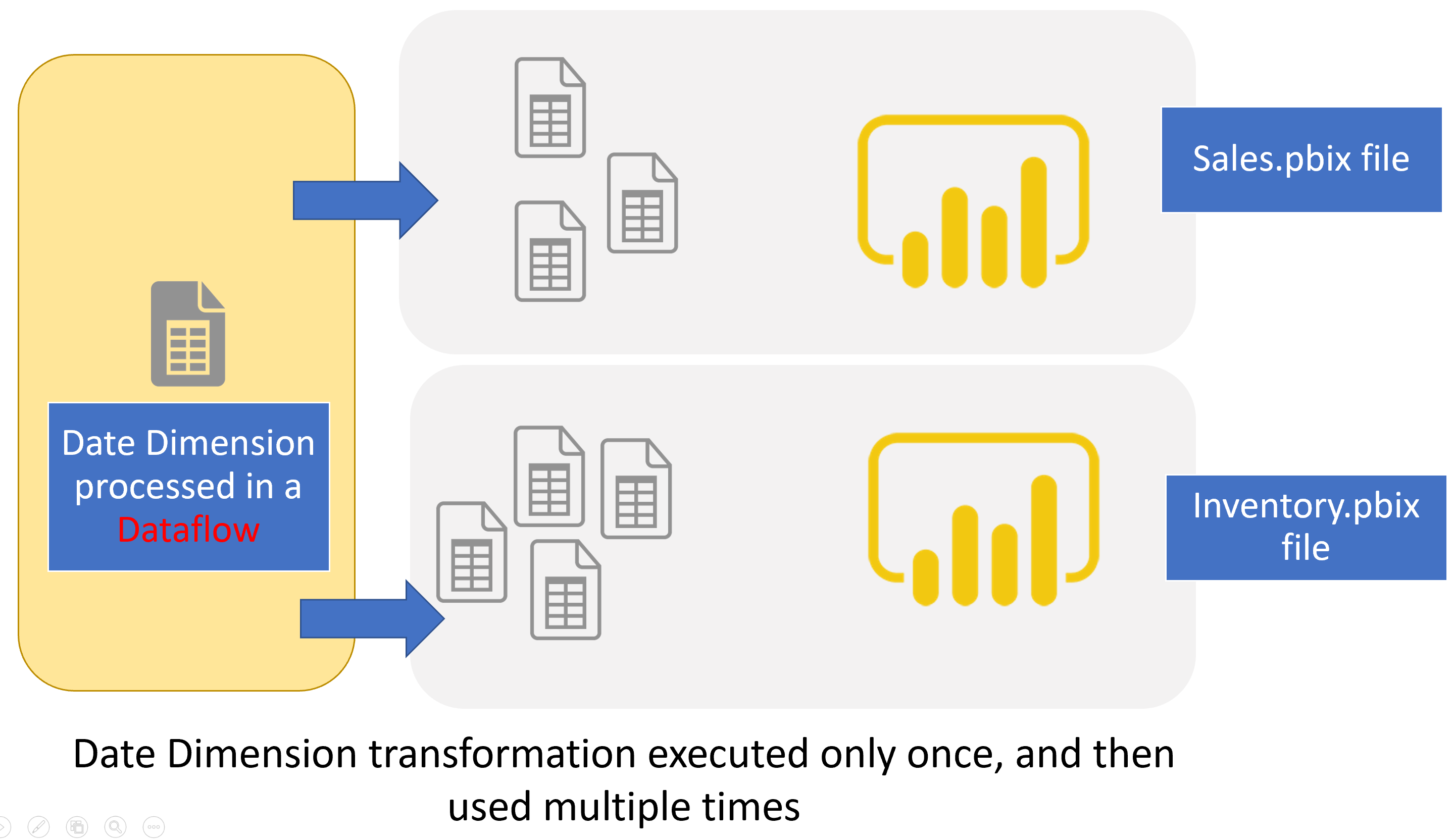

It happens often that you work with multiple Power BI (*.pbix) files at your day to day job, and it also happens that you might need one table to be available in two or even more separate files. Customer, Date, Product, Vendor, and etc are all examples of tables that might be needed in multiple files. You might use the Product table in the Sales.pbix file for sales analysis, and also in the Inventory.pbix file for warehouse inventory analysis. The diagram below shows a Date table that is used in two different *.pbix files;

Date dimension is not the only shared table you may have. Product, Customer, Sales transactions, Vendor, Warehouse, and many other tables might be needed in multiple files, and as a result, can be shared tables. You need to know the right way to handle these tables.

Any Power Query table that you may use in multiple *.pbix file is a shared table.

Bad Way: Copying

Shared tables between multiple files happen, and it is important how you deal with them. There is a bad way, and a good way, let’s check the bad way first;

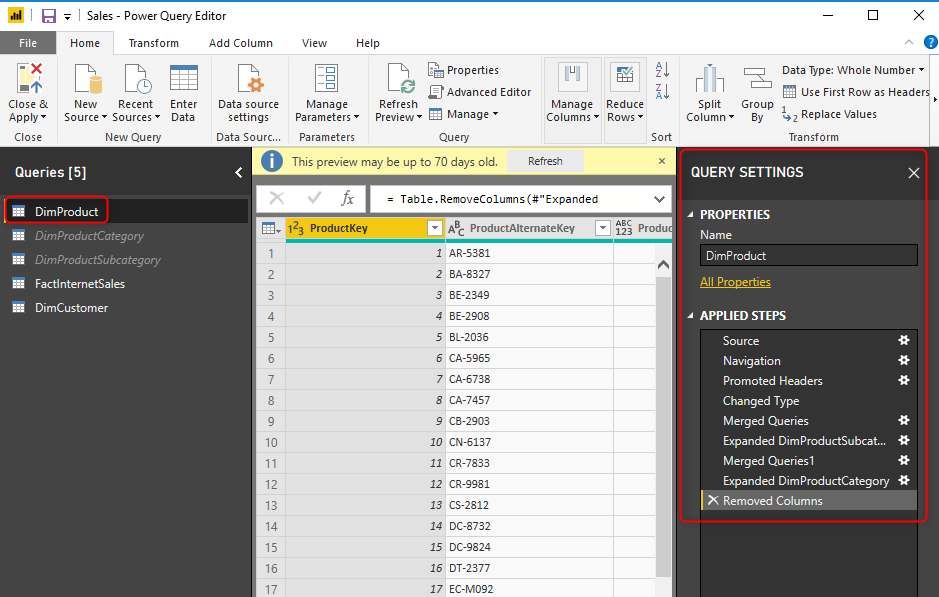

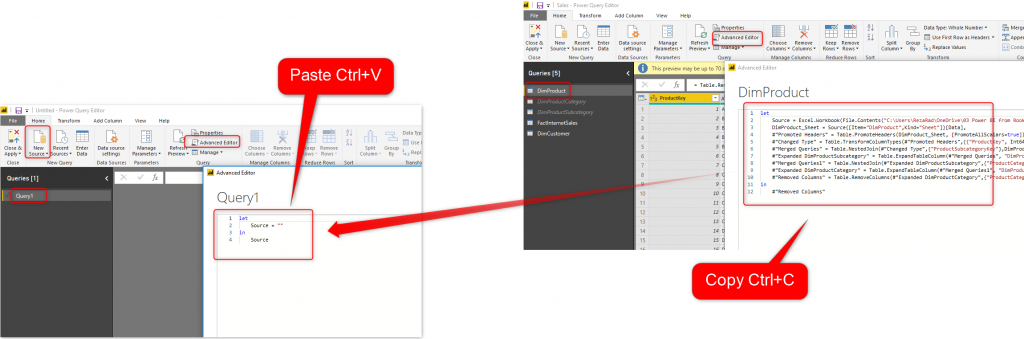

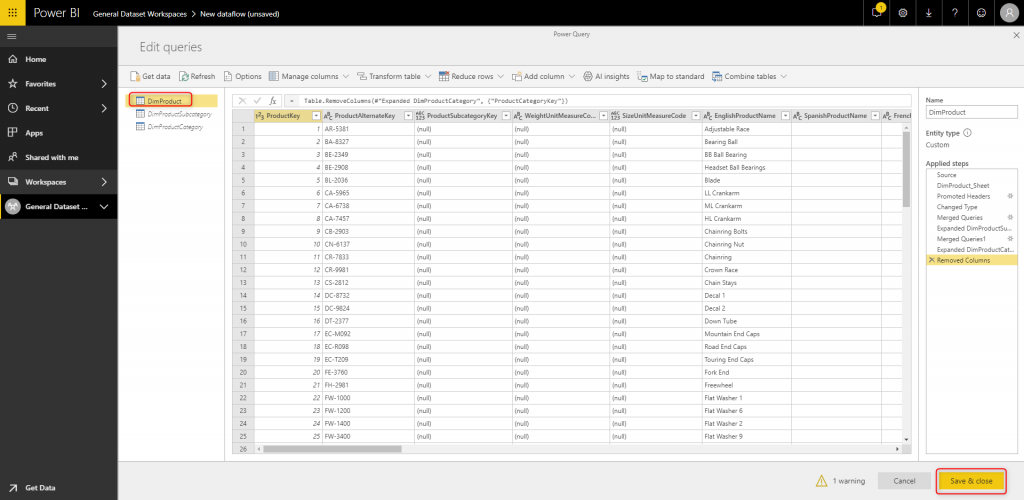

What do you do in those scenarios? probably copying the Power Query M script from the Advanced Editor of one of the files, and then pasting it into the other file as a new blank query. It works, but it brings a whole lot of complications and issues with it. In the example below; I have created a flatten dimension using a combination of three tables of DimProduct, DimProductSubcategory, and DimProductCategory with Merge in Power Query. I have this table in my Sales.pbix file, and the table is called DimProduct.

DimProduct already includes a lot of applied steps.

Now I want to have the same DimProduct in the Inventory.pbix file. what I will do is to copy the M script from Advanced Editor of one file, and then paste it into the other file!

It is easy, but here come challenges;

What if you want to edit transformations on this table after some time?

You need to go to one of this (which you may call it the original), apply changes, then copy and paste again into the other file!

What if there are more than two files?

What if the DimProduct is needed in not only Sales.pbix and Inventory.pbix, but also in BOM.pbix, Customer Retention.pbix, and many other files. Do you copy it across all these? and you won’t miss anything?

There is no Single Version of the Truth for this Code

As soon as you create copies, you will lose the single version of the truth. Now your code is everywhere, and you have to maintain it everywhere! There is no single place that you change the code and all references get fixed automatically. There is no single version of the truth for your code. As a result, the maintenance of this code is very high.

Good Way: Maintain a Single Table

Now that you know some of the issues of the bad way of copying, let’s talk about a good way of implementing it. A good way of implementing this would be keeping a single copy of that shared table, and any other shared tables. When you keep one single copy, then that would be the source of all other tables. when you change that, all other tables will change automatically. Dataflow is not the only way that gives you a single copy. you can do it using other methods too;

- Running a separate ETL (extract, data transformation, and load) process and storing the output in a shared data sources. For example, using ADF or SSIS to run transformations, and then store the output in a SQL Server database.

- Creating a Power Query Custom Connector which does all the transformation on the table and creates it ready for you.

- Using Dataflow.

The first two methods are the BI developer methods of doing things, and the fact is that probably 90% of Power BI users are not BI developers. On the other hand, some of them involve other tools and services (such as SQL Server, ADF, SSIS), which you might not have. Dataflow, however, is a perfect method, that despite being a good solution, it is also easy to implement for even a business user with a small knowledge of Power Query. And that is the method we are talking about here.

Dataflow for Shared Table

The highlight of the process is that dataflow will run the Power Query script, and it will store the output in Azure Data Lake storage, and then using Get Data from Dataflow in Power BI, we get data sourced from that single table. You can use that table then in multiple *.pbix files, as much as needed. If you want to change the transformation, there is only one place to change; the dataflow script of that table. This would be a consistent, low-maintenance, and robust solution.

Now let’s see how you can do that in action;

Create the dataflow with the shared Table

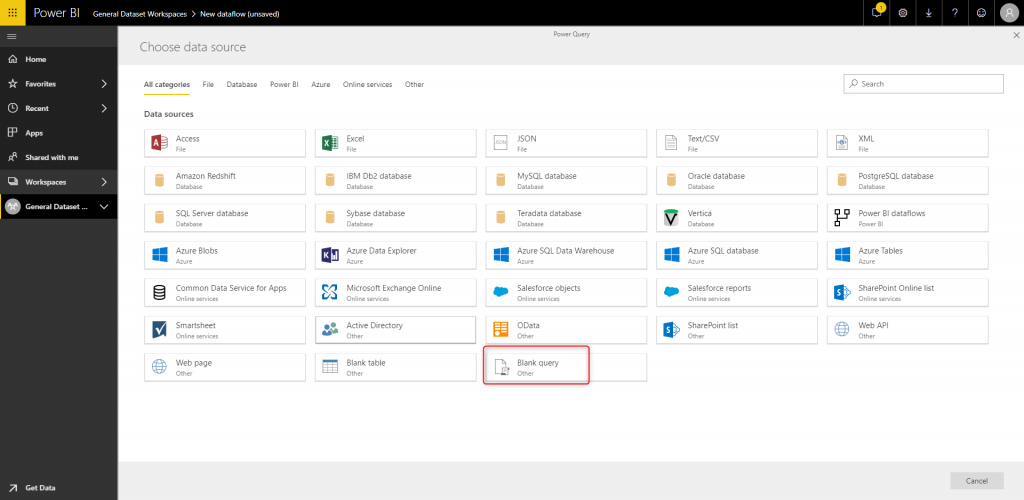

Start by creating a dataflow (if you don’t know how? this article explains it in details), then choose a Blank Query as the source;

Then copy and paste the Power Query M script from the Power Query in Power BI Desktop, to this place;

If your data source is an on-premises (local domain) data source, then you do need to select a gateway. Read here to learn all about the on-premises gateway. Note that, the Power Query script might run fine in Power BI Desktop without needing the gateway, because the data source is located on the same network, but in the dataflow, if the data source is a local domain data source, it would need the gateway, as the process now is running on the service. for online, cloud-based data sources, you don’t need a gateway.

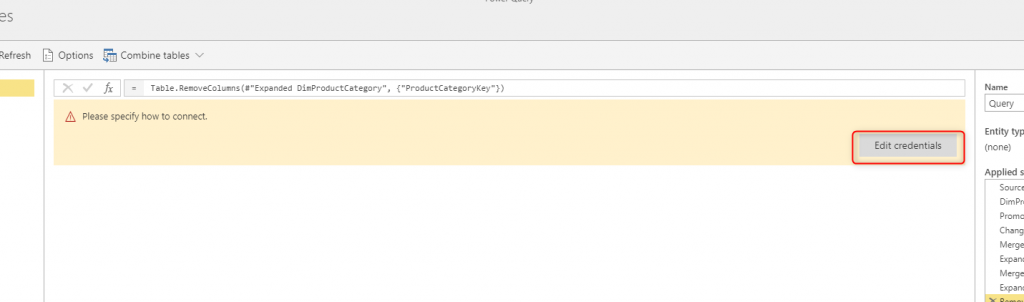

Then you might be asked to set up the credentials for the data source, which then you need to set up.

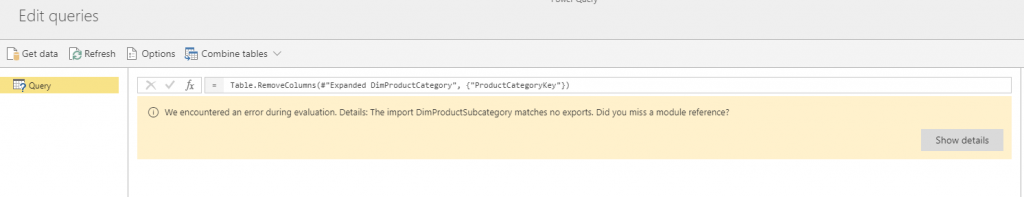

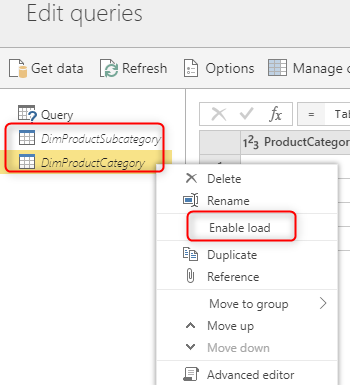

If your table is referencing other tables, make sure you bring those through the same process too;

Make sure that you get all other queries needed, and then rename them exactly to the name that the main query is referring to.

Also; if you just have Power BI Pro account, and you don’t want to hit the limitation of Computed Entity in Dataflow for Premium, then uncheck the Enable Load for those queries, I explained about a workaround for Computed Entity in the Pro account here.

Then refresh your main query, and make sure the name of that query is something you want it to be, and then Save and Close.

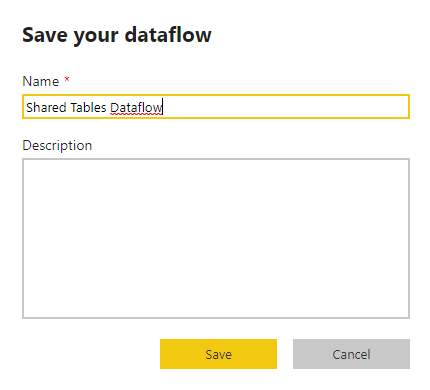

Then save the dataflow.

Note that one dataflow can have multiple shared tables in it.

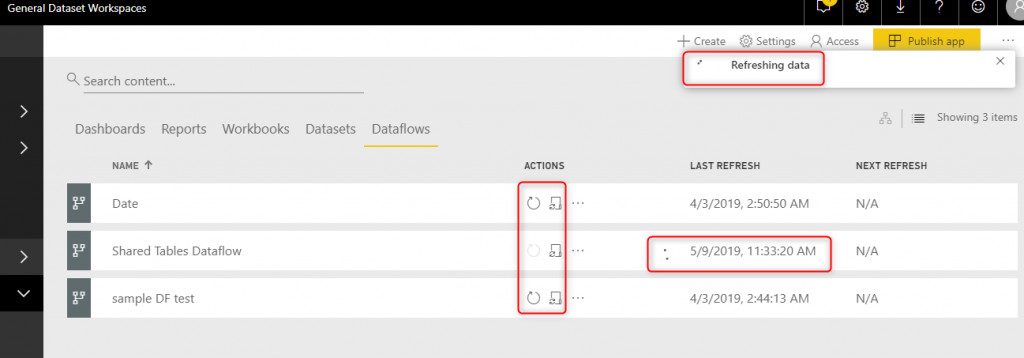

And finally refresh the dataflow; (you need to set a scheduled refresh, later on, to keep this table always up-to-date)

Use the Dataflow table in Power BI files

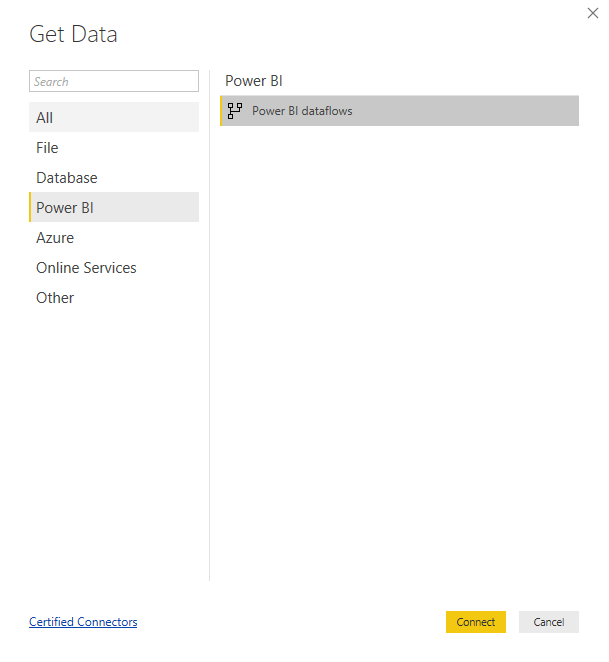

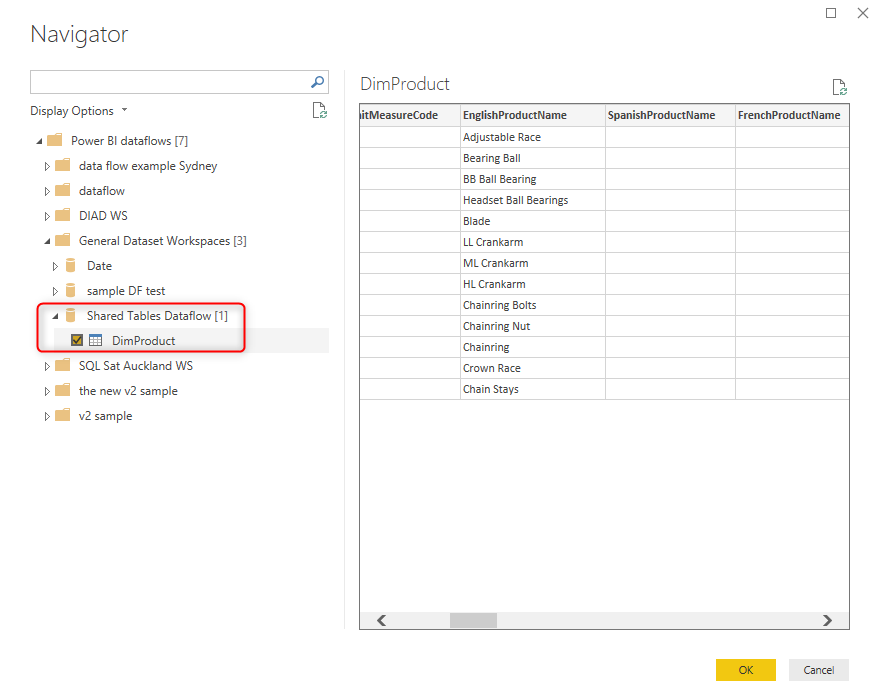

The last step of this implementation is to Get Data from the dataflow that contains that table. In Power BI Desktop, use Get Data from Dataflow

Then set up the connection to the dataflow using Power BI account, and then select the table;

You can do this process in as many as Power BI files you have. They will be all referencing to the dataflow table.

What are the benefits of this method?

- Using Dataflow is much easier than services such as Azure Data Factory, or tools such as SSIS. You don’t need to be a developer to implement this.

- You don’t need any extra licenses or services. It is all part of your Power BI Pro account. (for some specific types of entities, you might need premium, however, here is a workaround).

- You have a single version of the truth for your shared table in the dataflow. Any changes can be done in the dataflow script now.

- The transformation step is now separated from your *.pbix data refresh, and this can make the refresh faster.

- You can use the table generated by the dataflow in *.pbix files in other workspaces too.

Summary

Dataflows have many benefits, I have written only about a few of those so far in my articles. This article was focusing on creating a consistent transformation code using Power BI dataflows. You learned a low-maintenance way of creating shared tables between multiple Power BI files. The method explained here, doesn’t need any extra licenses or services, and is easy enough to be done by anyone with a little bit of Power Query knowledge. I strongly recommend you to read other Power BI Dataflow articles here. If you have any questions, don’t hesitate to ask in the comments below.

- What is Dataflow and Use Case Scenarios of that in Power BI?

- Creating your first Dataflow

- What is the Common Data Model and Why Should You Care?

- What is Computed Entity and Linked Entity?

- Workaround for Computed Entity and Linked Entity in Power BI Pro

- How to Use Dataflow to Make the Refresh of Power BI Solution Faster

Hi,

My colleague and I both created a dataflow entity each for Date Dimension, not too different from the one you demonstrated in your 4-step process. I am able to view and use both entities in Power BI Desktop, but my colleague is not. He is owner and admin of PBI and of one of the dataflows. Is there any reason this could be happening?

does he/she have access to the workspace that this dataflow is located on?

Cheers

Reza

I just ran into this. Have you checked desktop versions? The people who had an older version of Power BI Desktop application could not see the entities. When we all upgraded to the most recent release, all users could now see the entities and select them.

Hi Mick

Yes, I can guess that would be an issue, because Get Data from Dataflows is not available in many old version of the Desktop.

Cheers

Reza