The default configuration for the Power BI dataset is to wipe out the entire data and reload it again. This can be a long process if you have a big dataset. Hybrid tables in Power BI keep part of the data in DirectQuery, and the rest is imported for data freshness and performance. In this article, I explain how you can set up an incremental refresh in Power BI and its requirements. You will also learn about Hybrid tables in Power BI. Incremental Refresh is not just in Power BI datasets but also in Dataflows and Datamarts. In this article, you learn to load only part of the changed data instead of loading the entire data each time. To learn more about Power BI, read the Power BI book from Rookie to Rock Star.

Video

What is Incremental Refresh?

When you load data from the source into the destination (Power BI), there are two methods: Full Load or Incremental Refresh. Full Load means fetching the entire dataset each time and wiping out the previous data. When I say the entire dataset, I mean after all Power Query transformations because there might be some filtering in Power Query; whatever data loads into the Power BI dataset in this sentence is considered the entire data.

If the dataset is small, or the refresh process is not taking a long time, then the full load is not a problem. The problem happens when the dataset is big or the refresh process takes longer than an acceptable timeframe. Consider you have a large dataset including 20 years of data. From that dataset, probably the data from 20 years ago won’t change anymore, or even the data from 5 years ago, sometimes even a year ago. So why re-processing it again? Why re-loading data that doesn’t update? Incremental Refresh is the process of loading only part of the data that might change and adding it to the previous dataset, which is no longer changing.

Partitioning

Incremental Load will split the table into partitions. The quantity of the partitions will be based on the settings applied at the time of Incremental refresh. For example, if you want to have the last year’s data refreshed only, a yearly partition will likely be created for every year, and the one for the current year will be refreshed on a scheduled basis.

Hybrid Tables

If you need data freshness (near real-time) on a big table, there is an option for you. You can have your table designed to keep both DirectQuery and import data in one table. The DirectQuery part is to ensure the near real-time data, and the Imported part is to ensure the best performance in Power BI.

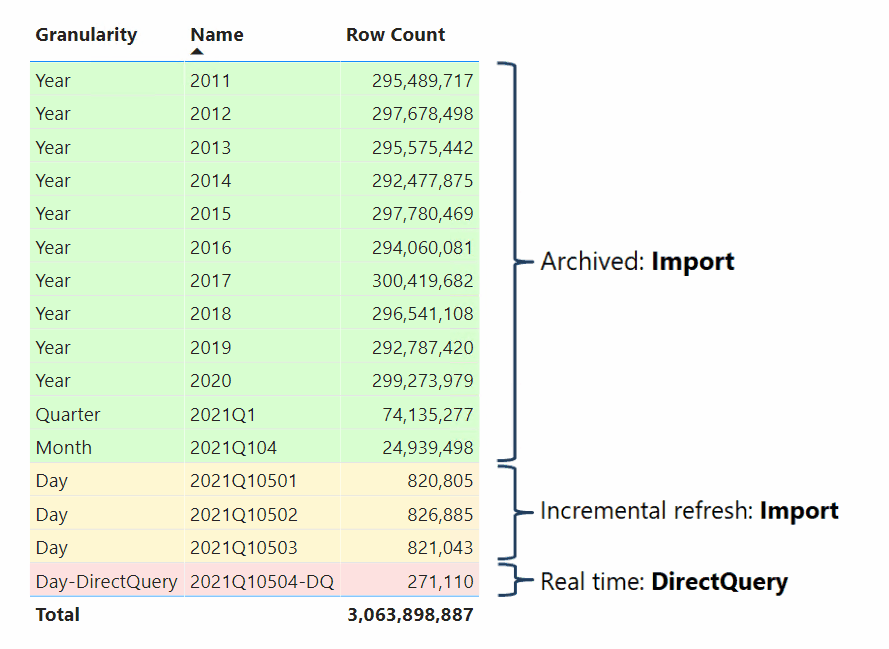

Hybrid tables are partitioned, so their most recent partition is a DirectQuery from the data source, and their historical data is imported into other partitions. This is not a Dual storage mode. It is a table that part of it is imported, and part of it is DirectQuery. Hybrid tables can only be applied on a table that incremental refresh is set on it. The image below shows how a hybrid table might have the structure of the data behind the scene.

Requirements: What do you need to set up?

Table with Date Field(s)

To set up the incremental refresh, you need to have a table (or more) with date field(s). The date field is the field that will have an impact on the partial refresh of the data. For example, Let’s say you have a FactSales table. You want to load all sales made earlier than a year ago just once, but everything from a year ago to now regularly. So you need to have a date field in your table for it. Most of the time, this field can be created date, modified date, order date, publish date, etc. You need to have a field like that as of date data type.

A data source that supports query folding

Query folding means that the Power Query transformations will be translated to the data source language (such as T-SQL when querying from SQL Server). Although you can implement Incremental Refresh on any data source, even if it is not supporting query folding, It would be pointless to do it for such data sources.

The main point of Incremental Refresh is that Power BI just reads the data that is recent and changed rather than reading the entire data from the source. With a data source that supports query folding (such as SQL Server or any other database system), that is possible because Query folding happens, and Power BI only queries the recent part of the data. However, If your data source doesn’t support query folding, For example, it is a CSV file. Then Power BI, when connected to it, reads the entire data anyway.

Licensing Requirement

Incremental Refresh doesn’t need a Premium or PPU license. You can even set it up using a Power BI Pro license. However, Hybrid tables require a Power BI Premium capacity or PPU.

Limitations: Things to Know Beforehand

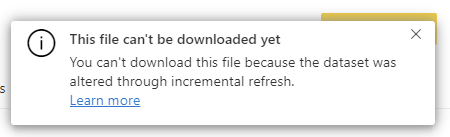

A limitation that can be important is that after setting up the incremental refresh, you cannot download the PBIX file from the service anymore because the data is now partitioned. It also makes sense because the data size is probably too large for downloading.

How to Setup Incremental Refresh

Setting up Incremental Refresh has some steps in Power BI Desktop and then in the Power BI Service. Let’s check them one by one.

Parameters in Power Query

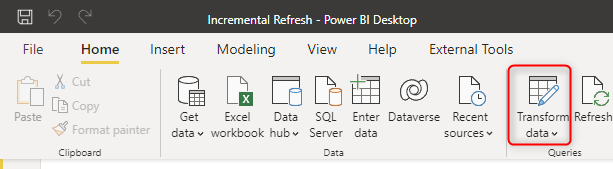

You need to use Power Query parameters to set up the incremental refresh in Power BI. You need to create two parameters with the reserved names of RangeStart and RangeEnd (Note that Power Query is a case-sensitive language). Go to Transform Data in your Power BI Desktop solution,

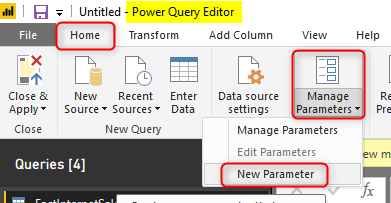

And then click on New Parameters.

Then create two parameters of DateTime data type, with the names of RangeStart and RangeEnd, and set a default value for each too. The default value can be anything, but the name and the data type should be as mentioned here.

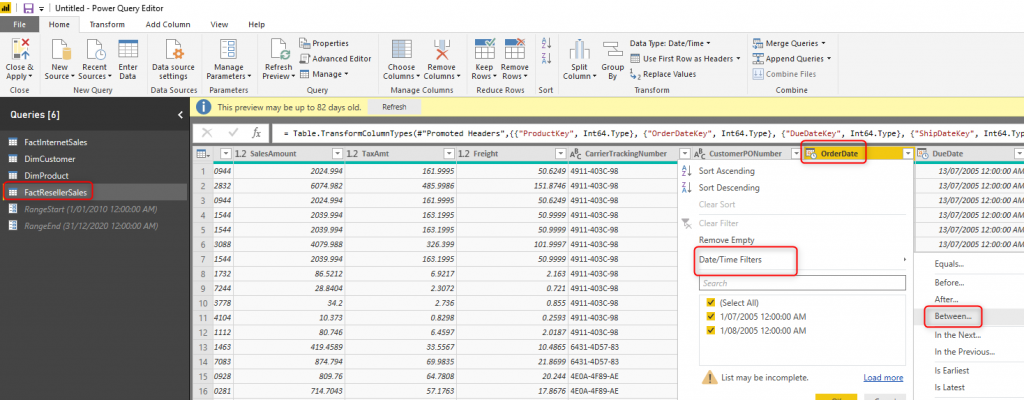

Filter Data Based on Parameters

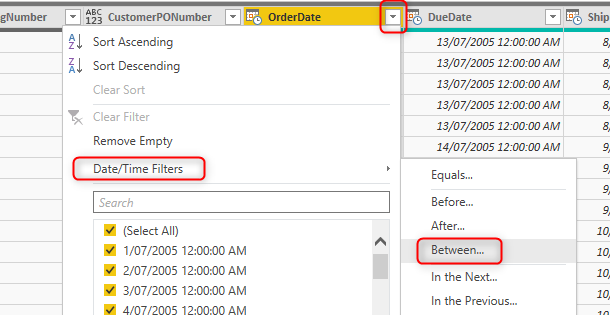

After creating the two parameters, you need to filter the data of the date field based on these two parameters. For example, in the FactInternetSales, I can filter the OrderDate using the column filtering as below;

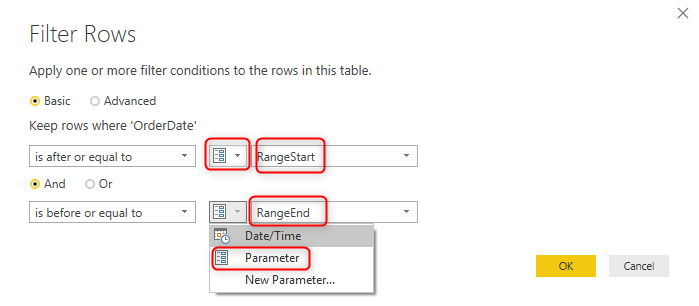

You can use the Between filter using the RangeStart and RangeEnd parameters as below;

After this action, your data in the table will be filtered based on the default values you set for the RangeStart and RangeEnd parameters. However, don’t worry about that. These two parameters will be overwritten with the configuration you make in the Incremental Refresh setting of the Power BI Desktop.

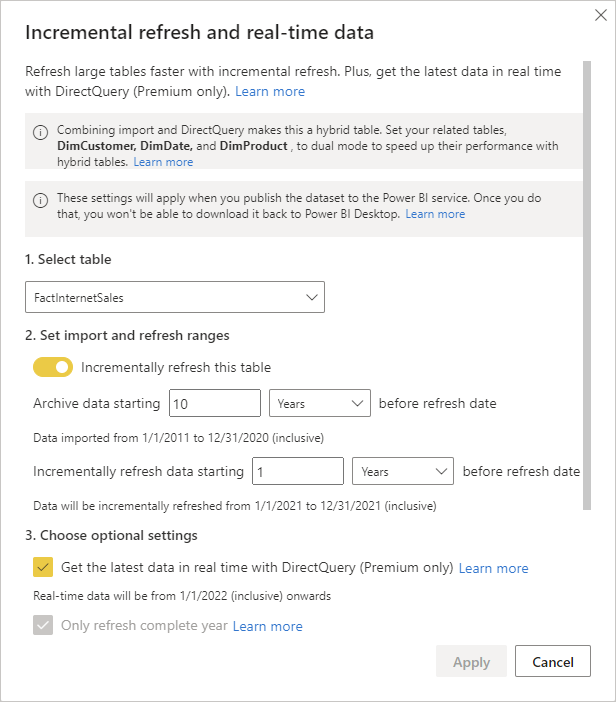

Power BI Desktop Incremental Refresh Setup

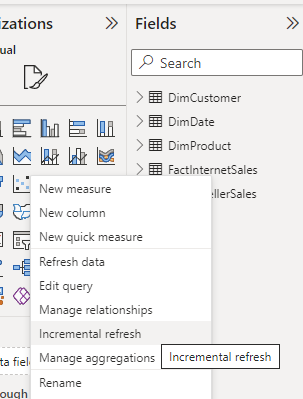

The final step in Power BI Desktop is to close&apply the Power Query Editor window and set up the incremental refresh setting for the table. You have to right-click on the table in the Power BI Desktop and select Incremental Refresh.

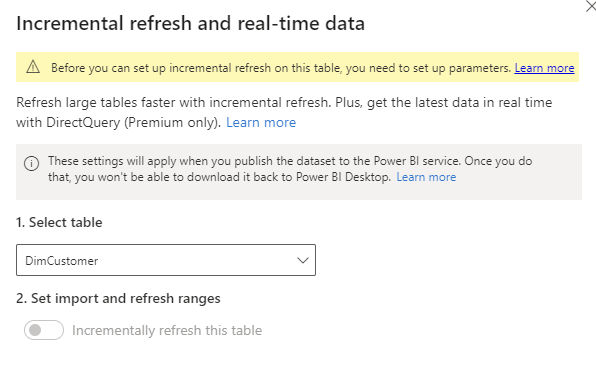

In the Incremental Refresh settings window, you can choose the table first. If the table is a table that doesn’t have the two parameters of RangeStart and RangeEnd used in filter criteria, then you won’t be able to do the setting for it. For example, DimCustomer won’t give me the option to do the incremental refresh settings.

However, I can do the settings for FactInternetSales because I did filter the OrderDate field of this table based on the parameters. There are two things to notice at this step: If your data source comes from a data source that supports query folding, then Incremental load works best. If not, it is not recommended to use it. Most of the time, huge data comes from a relational data store system, which supports query folding.

Another thing to note is that there is an option for “Get the latest data in real-time with DirectQuery”, which requires Premium or PPU licensing. And finally, you have to know that you cannot download a PBIX file from the service if it has an incremental refresh setup.

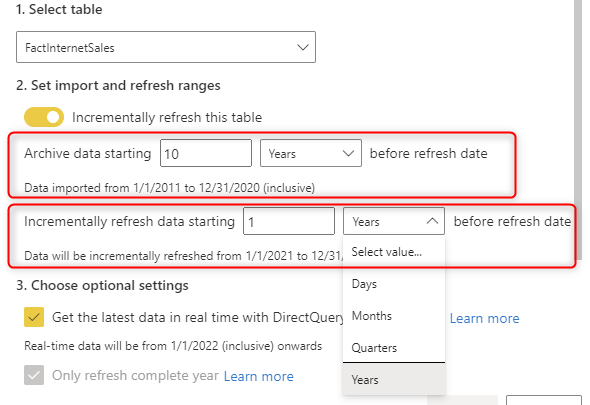

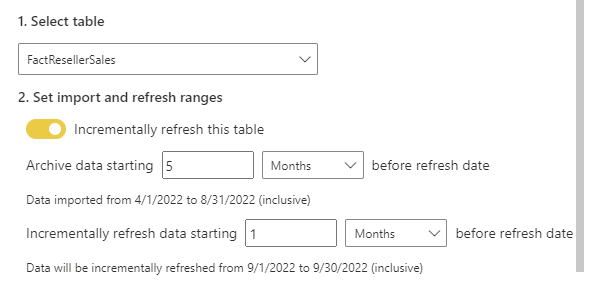

Configuration for the incremental refresh is easy. You just set up the amount rows to Store (load only once, and store it) and the amount rows to Refresh (re-load every time);

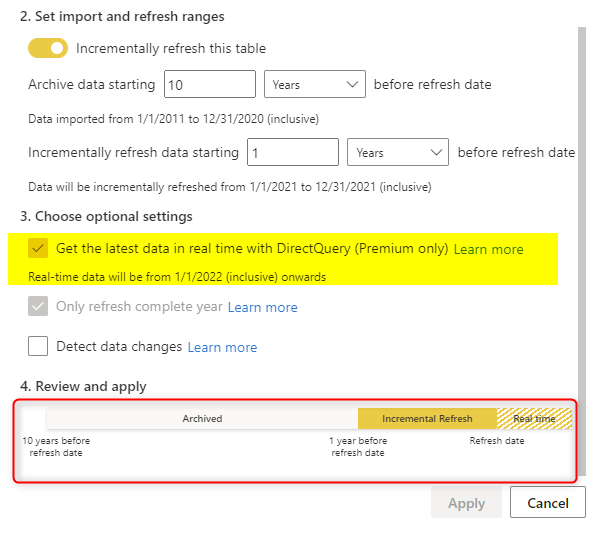

Hybrid table setup

You have one more configuration if you want to have your table set up as a hybrid table. This requires a Premium or PPU license. Select the “Get the latest data in real-time with DirectQuery” option. And your dataset has to be published into a Premium or PPU workspace. Once you set this configuration, You can see the period of real-time data and also a diagram showing the timeline of your setup.

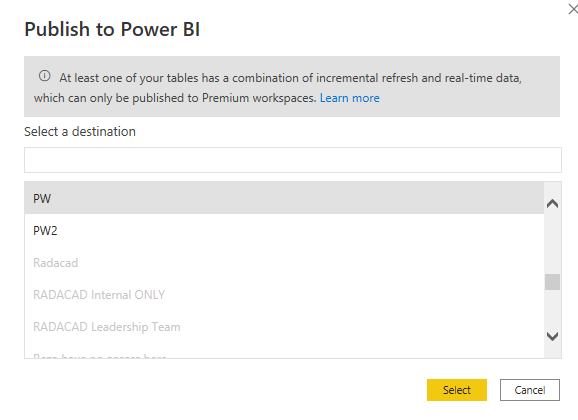

After enabling this on your dataset, your dataset can only be published to a Premium or PPU workspace.

Incremental Refresh for Multiple Tables

You can set up the incremental refresh for multiple tables. You don’t need more parameters; the two parameters of RangeStart and RangeEnd are enough. You just need to set up the filter in any other tables you want.

Then you need to set up the configuration in the Power BI Desktop for each table;

Note that although we are using the same parameters of RangeStart and RangeEnd, you can have different configurations of Store and Refresh for the incremental refresh setting for each table.

Publish to Service

After setting up everything, you can publish the Power BI file to the service. Note that if you used Hybrid tables, you could only publish it to Premium workspaces.

How do the partitions look like

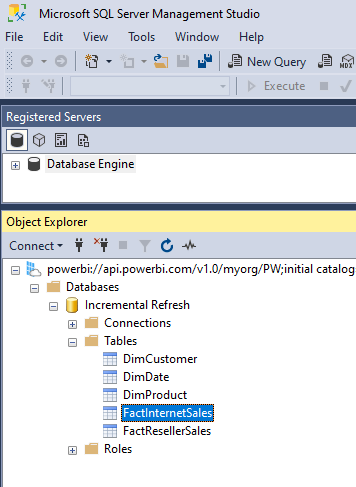

Now that you have set up the incremental refresh and the hybrid table settings, you can check out the partitions in the Power BI dataset. The method I used to show this to you only works if you have a Premium or PPU workspace. You can use the XMLA endpoint to connect to the dataset using the SQL Server Management Studio (SSMS) and see the partitions on a table.

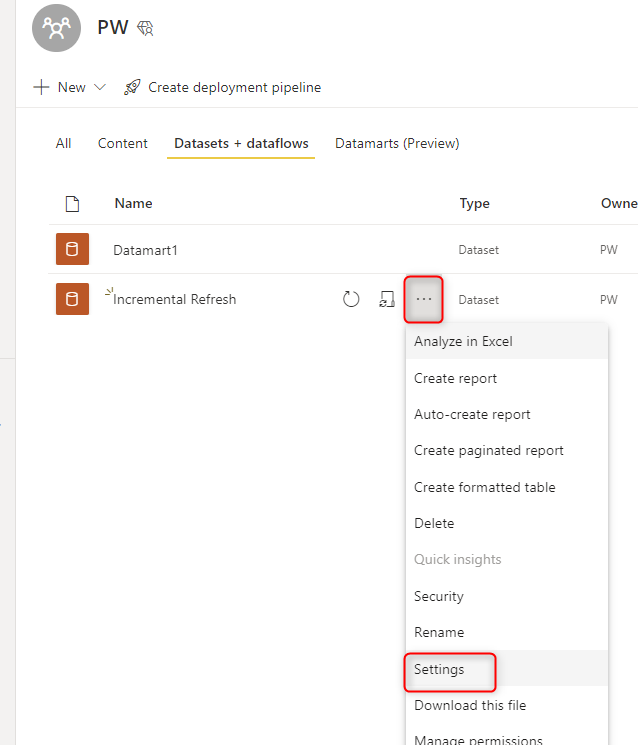

In the Power BI Service, go to the settings of your dataset;

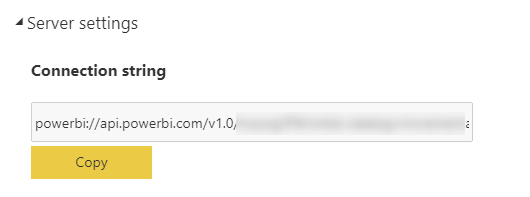

In the Dataset settings, expand Server settings and copy the connection string (if you don’t see this section, then perhaps your workspace is not a Premium or PPU workspace);

Open SSMS, create a connection to Analysis Services and paste the connection string as the server name, set authentication as Azure Active Directory – Universal with MFA, and they type your Power BI email as the user name. After authentication, you can see your dataset in the SSMS. Expand the tables.

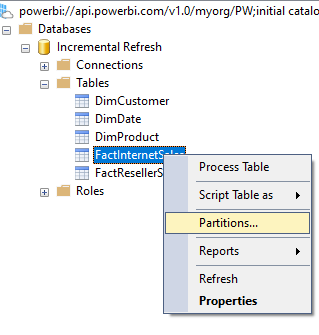

Right-click on a table (such as FactInternetSales) and select Partitions.

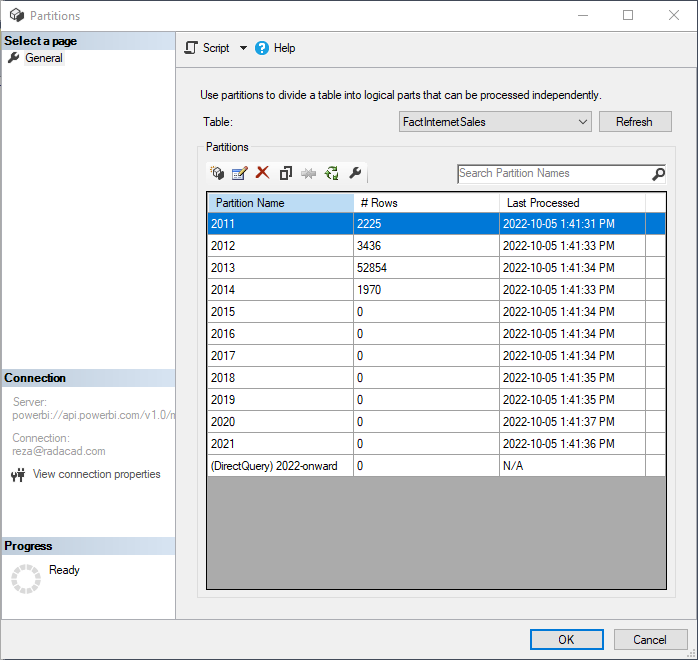

Then you will be able to see the partitions;

If your table has only one partition, then perhaps it doesn’t have an incremental refresh set up on it. If your table has the Hybrid table settings, then the last partition will be a DirectQuery partition (Like what you see in the above screenshot)

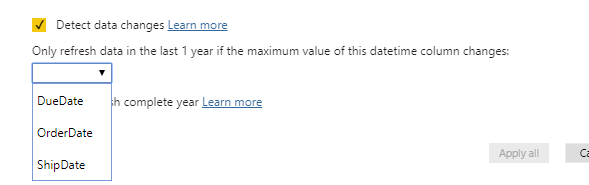

Detect Data Changes

Incremental Refresh will make the part of the dataset to refresh much smaller, and as a result, the process would be much faster. However, there is still one better way to do that. Suppose you have a modified DateTime (or updated DateTime) in your table. In that case, the incremental refresh process can monitor that field and only get rows whose date/time is after the latest date/time in that field in the previous refresh. To enable this process, you can enable the Detect Data Changes and then choose the modified date or update date from the table. Notice that this is different from the OrderDate or transaction date. And not all tables have such a field.

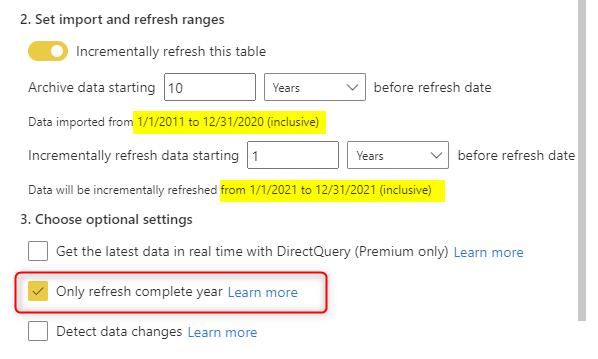

Only Refresh Complete Period

Depending on the period you selected when you set up the incremental refresh, you can choose to only refresh when the period is complete. For example, in the FactInternetSales, I set the refresh period to Year. And then I can set the option to Only Refresh the Complete Period, which means even If we were in Feb 2019, it would only refresh the data up to Dec 2018 (because that is the latest date that we have a full complete year in it);

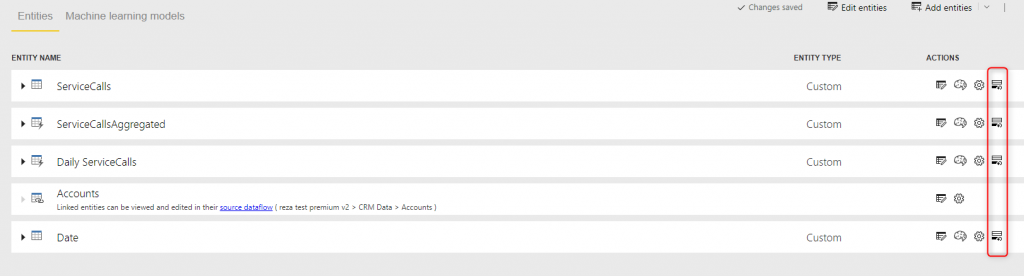

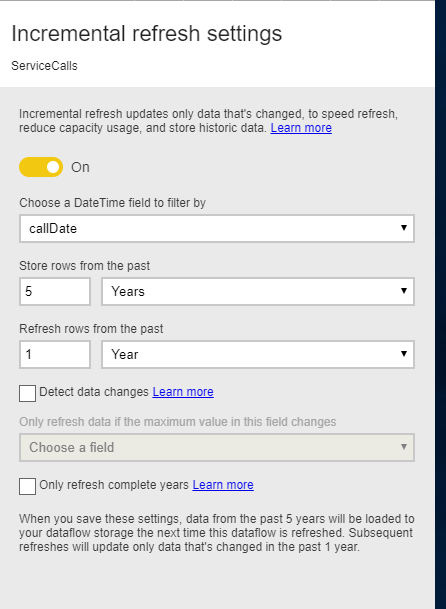

Incremental Refresh for Dataflows or Datamarts

Dataflows are ETL processes in the cloud for the Power BI service. You can also set up the Incremental Refresh for Dataflows. And it is even easier to do it for the dataflow. You don’t need to create the RangeStart and RangeEnd parameters there. Just go to the Incremental dataflow Refresh setting directly.

You can see that the same set-up of the incremental refresh setting is available for the dataflow;

A similar setting can be done on the Datamart for an incremental refresh.

Summary

Setting up the incremental refresh in Power BI means loading only part of the data regularly and storing the consistent data. This process will make your refresh time much faster. However, there are some requirements for it. You need to have a date field in your table. It is recommended only when the data source supports query folding. You can do this configuration on a Power BI dataset or in Power BI dataflow or Datamarts. If you do this on a dataset, then after publishing the dataset, you cannot download the PBIX file.

Hybrid tables can be an addition to your Incremental Refresh setup. They are helpful in having the most up-to-date data using the DirectQuery partition while the historical data is stored as Import in other partitions. From the Power BI point of view, these are all part of a single table.

Have you ever considered using Incremental Refresh? If you haven’t done it so far, let me know why in the comments below, and I’m always happy to help you with that.

Really insightful! Thank you Reza. Do you know if this feature is available to Power BI Report Server?

Hi Sebastian.

Not yet, However, we have to see, maybe it comes up one day 🙂

Cheers

Reza

Hi Reza, great article. So as you can’t download the pbix you just need to make sure you save a local copy and redeploy it when you want to make an update? At the moment of pbix update (redeploy to service) the dataset will have to do a full refresh once again before it will start using incremental refresh? Thx for letting me know. J

Hi Jan,

with changes in the structure, you would need to re-load the data again.

Cheers

Reza

Hi Reza, I’m also interested in this topic.. what do you mean with “change in structure”? I’m trying to setup the incremental refresh on a model (visual + very large dataset, only a small part of it being downloaded in the pbix file) and I’m facing two issues:

1) The very first loading of the model is taking a lot of time and always ends up with this error message: Before the data import for finished, its data source timed out. Double-check whether that data source can process import queries, and if it can, try again. Is there some tricks to avoid this error during the very first upload?

2) What would happen when changing something in the visual or something in the dataset (e.g. adding a new table or a new measure)?

Thank you

Francesca

Hi Francesca,

I would highly recommend in your scenario (that even the initial load is taking a long time) to decouple the ETL and the rest of the model using dataflows. You can then set up your dataflow to have Incremental Refresh. and also in your dataset set up incremental refresh.

You can then use the shared dataset concept of having Power BI getting data from your dataset. that way visualization changes, won’t affect the dataset at all.

and if you make any changes in the dataset as adding measures or relationships etc, it would only affect your dataset, not the dataflow. isolating layers like what I mentioned here is going to be the best option in your scenario I believe.

Cheers

Reza

Hi Reza,

Did you try to work with incremental upload from SAP BW? there data extraction is based on MDX query and it is not so straight forward as you described.

1. With power query

The fact that parameters are used the is very helpful as we can converts is value as an input entry for MDX query. So technically we can work with that but in case we want to combine data from multiple tables, every time we are changing data model we have to publish data set again and every time refresh historical data for all tables (not only for those where model is changed). Also we cannot Refresh data for one particular table. All or nothing… So we thought that Data Flow would help us…

2. Data Flow

Different way of working, no parameters… date only so we cannot use our solution with parameters. And even if we have a data source with date column then the data format in SAP BW is different than in Power BI, so the incremental upload is not possible at all.

If you have any experience with SAP BW or a smart solution how we can use incremental upload in data flow I’d really appreciate if you could share with us.

Thanks

Dariusz

Hi Dariusz

I am not sure if Query folding is supported with SAP BW. I assume not. If no query folding, then the incremental load won’t make the refresh process that much different, because the data will be loaded fully from the source to the engine of Power Query every time, and filtered there.

One way is to create a dataflow for previous periods manually, run it once. and then create the rest in a new dataflow.

Cheers

Reza

Hi Reza,

My Fact data tables include dates in the form of a number that have a relationship with a dimdate table.

Example: Invoice Date is in the form of 20190919 with the date being 09/19/2019. Am I able to complete the incremental refresh using this type of column or can i created a calculated column that puts it in date form?

Thanks,

Your Start and End range parameters HAVE TO BE date format. so better to create a new column (can be done in Power Query easily) to generate the date from YYYYMMDD format

Cheers

Reza

Hi Reza,

Did you try to set up an incremental refresh in Power BI for a data source that does not support query folding?

In my case data source for Power BI is Elastic Search. As there is no native connector we implemented REST API endpoint + power query M script that query data in batches using elastic scroll API.

Is it possible to somehow combine custom data source which is API endpoint and incremental refresh?

How about a solution that power query M script would call my API endpoint with parameters RangeStart and RangeEnd? An additional question is if the received data batch will override existing data or just add new entries.

Thanks

Ewelina

using Incremental Refresh with a data source that doesn’t support query folding can be done, but won’t be ideal.

imagine this scenario: we have 10 billions of rows in a data source. we only fetch rows from the last year. if the data source supporting query folding, we just get that part of the data and load it. if the data source doesn’t support folding, we have to read the entire data anyways, load it into temporary memory, then do filtering and load only the last one year. because in this case, we have loaded the entire data in the temporary memory, then it would be easier if we do a full load than the incremental load. that is why it is a preferable approach with sources that supports query folding.

is the custom connector you build, doing query folding?!

Cheers

Reza

I built a dataflow with multiple Excel files being read from Sharepoint folder. the data flow works perfectly.

however it fails the moment i try to do incremental refresh. I have 3 date time fields in the data ( Date Modified, Date Created and Date of transaction). i tried multiple combinations, i even changed the date modified and date created but it fails every time. is there something i am doing wrong?

Incremental Refresh works best on the data sources that support Query folding. Excel isn’t one of them.

So there won’t be a point of doing it anyway, because every time it has to scan the entire Excel file.

Cheers

Reza

Hi, Reza – my data is from a transaction file in DB2 from an AS400. The date is split into pieces: a separate column for CT, YR, MO, DY. I asked the DBA to add a date column but request denied. My connection is through ODBC. I have used MS Query to get the data into Excel, and I think I see a way to generate a date field in the SELECT statement. But MS Query is not a valid source for Power BI. Is it feasible to use SELECT statement like that for Power BI?

Hi Jeff

If you have a T-SQL statement (Select statement), then you can use that when you get data in Power BI. For SQL Server, Under Advanced Options (in the window of Get Data from SQL Server), you can write the SQL Command

Cheers

Reza

Hi Reza,

Can you please confirm how can we verify the data tracking is working.

Regards,

Srikanth

Hi Srikanth

You will get an email when the scheduled refresh of your dataset fails (either with the incremental refresh or without)

You can, however, add some more logic in your report, to show you the number of all transactions, or the latest transaction imported, etc to be able to reconcile it with the source system if you want. but that needs to be custom developed by you.

Cheers

Reza

Hi Reza,

I have implemented the incremental refresh as mentioned above. some how, the incremental refresh is taking more time (90mins) then regular refresh(30mins). FYI, i am refreshing 1 day and storing data for 4months. Please let me know, if you need more info.

Thanks,

Arey

Hi Arey

What is the data source you are getting data from? if the data source, doesn’t support query folding, then the Incremental Load won’t really change the process much. for example, if you are getting data from Excel file, then Incremental Load won’t make sense at all.

Cheers

Reza

Hello,

Thanks for the clarification about incremental refresh.

After implementing the incremental refresh, can we take back the incremental refresh procedure?

Best regards.

Beyza

What do you mean?

DO you mean can you change it back to non-incremental and full load? if that is the question, yes, you just change the switch of incremental load off, and then the full load happens.

Cheers

Reza

Hello again,

I have another question about RangeStart and RangeEnd parameters.

In my case I need to create these parameters according to MAX date and Second MAX date. The range is specified as the difference between the MAX date and previous (second) MAX date. I thought that I can create two calculated columns first, and then I can use these calculated columns in parameter creation. But of course I’m not sure if it’s right or not.

I’ve tried to write query on parameter creation but when I choose the Query option on parameter page, it’s not active. I can not write anything on it. Do you have any solution suggest?

Thanks.

Hi Beyza

what is the reason for calculating the max of a date field? is that because so you can only load those records that have updated or changed AFTER the last date? if yes, Incremental Load is doing that automatically. check the options window of the incremental load. you can choose a createddate/updateddate or something like that as a date field to watch.

Cheers

Reza

Hi Reza,

Thanks for the article, I am trying to implement incremental refresh but one I publish on the service that refresh is just pulling out data that sits on my initial range of 2 months while the period that I setup at incremental refresh police is suppose to look for 22 months.

Looking at my native query I am in about how the service will replace the parameters values from 2 for 22 months, as the seems to be hard-coded on the M query.

Any guess please ?

select [_].[TS_NAME],

[_].[LOC_KEY],

[_].[DATE],

[_].[value]

from [wrc].[wrc_v_pbi_PAP_Daily_Water_Use] as [_]

where [_].[DATE] > convert(datetime2, ‘2019-12-30 00:00:00’) and [_].[DATE] <= convert(datetime2, '2020-02-25 00:00:00')

Thanks

Hi Douglas

what configuration did you make for your incremental refresh? do you have a screenshot?

Cheers

Reza

Hi, does Incremental Refresh in Desktop only work when you import the data file, or can it be done on a direct query?

Cheers,Matt

Hi Matt

There is no need for an incremental refresh when using Direct Query. Incremental refresh means when the data is LOADED, it doesn’t load fully, it loads just the new or changed data. In DirectQuery mode, there is no loading process happening. the data will be queried each time for the visualization. same for the live connection.

Cheers

Reza

Hi Reza,

Hope you are well.

I’m trying to implement incremental refresh but unfortunately data refresh is taking much longer than it was before. Do you know if a SQL view in Azure DB data source supports query folding?

Many thanks!

It should fold.

Try opening the SQL profiler and monitoring the queries to see what query sent to the source, is it with the date fields in where clause?

Cheers

Reza

Many thank Reza for confirming.

I can see my query running with a where clause in it. 4 queries altogether running at the same time with different data ranges – which looks correct! Only issue now is it’s taking much much longer than usual. I suspect my Last Updated Date Time column needs to be indexed at least?

It is a good idea to have an index on the column that you have in your where clause, which is your datetime column. that might make it faster.

Cheers

Reza

Hello,

And thank you for a very informative article, as usual.

Wanted to ask what is your recommendation about incremental refresh on a data source that does not support query folding, e.g. reading from OData.

Thanks.

OData actually supports query folding (depends on the transformation)

But if the data source doesn’t support incremental refresh; then there won’t be a point of doing Incremental refresh at all, I say just leave the dataset normal without it.

imagine that I have a data source with 10M rows, and no query soldering supported. Even if I implement incremental refresh on this dataset, the process will access the 10M rows and read it every time anyways, then it will filter it. which there is no point.

Cheers

Reza

Hi Reza,

It is possible the same increamental refresh concept applies to pulling data using stored procedures?

for instance, I have a few stored procedues:

1. mysp_LoadSales @startdate, @Enddate, @LastXDays…

2. mysp_LoadSaleDetails @startdate, @Enddate, @LastXDays…

3. mysp_LoadSaleDeliveryDetails @startdate, @Enddate, @LastXDays…

In 2 & 3, the Sales table will always be used to filter the SaleDetails & SaleDeliveryDetails.

in 2:

select …

from Sales s

join SaleDetails sd on sd.SaleID = s.SailID

where s.CreatedDate between @StartDate and @EndDate

in 3:

select …

from Sales s

join SaleDeliverDetails sdd on ssd.SaleID = s.SailID

where s.CreatedDate between @StartDate and @EndDate

The reason I had to use stored procedules because the underlying data model was designed very poor. Pretty much like logging which logs various thing. I had to do some work inside of the stored procedure to return what is needed.

I use sales concept just want to simplify my case.

According to how power BI adding the dates to the query, i don’t see this is going to work for stored procedures, but want to confirm with you if I am right. And what is the better approach to get increamental refreshing work when I have to use stored procedures?

Thanks a lot.

Michael

Hi Michael

Have you traced the SQL script sent to the database using SQL profiler to see what is sent there? is there no usage of the stored proc in that code?

Cheers

Reza

Hello Reza, how are you?

Very nice Article.

Quick question: is there any way to access the database created using the incremental refresh?

Thanks a lot!

Best Regards,

Hi Joao

Power BI dataset is an in-memory database. you can connect to it using Power BI itself, SQL Server Development Tools, SSMS, Excel, and community tools such as Tabular Editor, DAX Studio and Power BI Helper

Cheers

Reza

Hi

I try to do it in a Folder connection (Folder has many Files), but does not working, what should be do to get it work

Hi Luis

the best Incremental refresh outcome is for the data sources that support query folding. I don’t think folder connection supports that. This means even if you can get the incremental refresh working, still the process has to load the data into the memory to process it. and it won’t help much in shortening the data refresh time.

Cheers

Reza

Hi Reza,

The article is quite helpful. Thanks a lot. I managed to follow the steps you mentioned however, PBI desktop still says “Before you can do incremental refresh, you need to set up parameters” which I did for sure for the query flowing from Power Query into Power BI desktop.

I got multiple dates in the table, can that make any difference. Or That query is an append query from other 15 queries which have been web API calls. Not sure if that’s making any difference. Do you mind sharing your thoughts. That would be a great help.

And by the way, the Close and Apply process an hour worth of time as it makes API calls to all those queries and sometimes, its very quick (that time, it doesn’t want to make API call again). Any specific reason why that may happen? And does it save the loading process as I have recently un-enabled all those 15 queries API queries in Power Query.

Look forward to hear from you.

Thanks again,

Gaurav

Hi Gaurav

With the API calls the query folding doesn’t happen all the time. it depends on the API. that is the reason why refreshing takes so long some time. Even if you could Implement incremental refresh, it might still need to bring the entire data into the cache first (it worth checking though and see if it supports query folding or not)

Cheers

Reza

Nice Explanation.. Thank you . Still bit confusion about the current date in RangeStart and RangeEnd while creating parameter . Is the dates latest date of Order column ?

That current date when creating the parameter is just a value to start with. It won’t do anything in this case, because you are setting the values of both parameters through the incremental refresh settings in the Power BI Desktop.

Cheers

Reza

Thank You for the informative articles. Is incremental refresh available with Power BI Embedded?

Power BI datasets are just shared in the embedded way, they support Incremental refresh like a normal dataset.