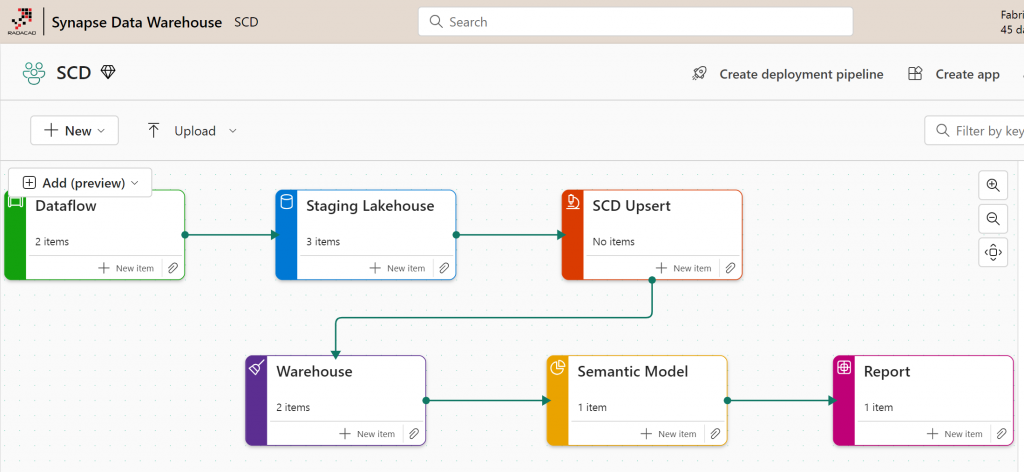

In the previous article, I explained SCD (Slowly Changing Dimension) and its different types. In this article, I’ll show you how to implement SCD Type 2 (one of the most common types) using Microsoft Fabric and Power BI. This article includes using Lakehouse, Dataflow, Warehouse, Data Pipeline, SQL Stored Procedures, Power BI Semantic model, and report in Microsoft Fabric.

Video

Introduction to SCD and Types of it

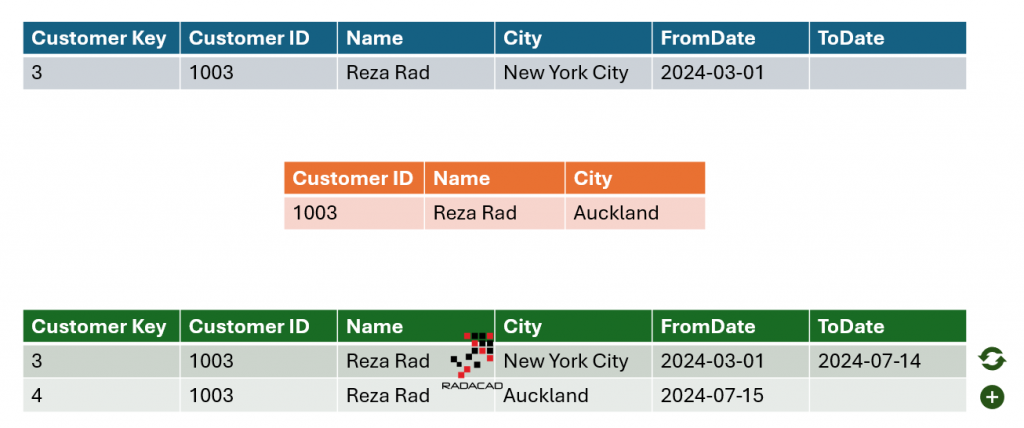

Slowly Changing Dimension (SCD) is when the attributes of a dimension member change and the way you handle the change in the data warehouse. Type 2 of SCD will keep all the history of changes using two columns of FromDate and ToDate in the dimension table. Read my previous article here to learn more about SCD and its types.

The Structure for Dimension Table

There are three important columns needed for every dimension table that needs SCD Type 2;

- Surrogate Key

- FromDate

- ToDate

The Surrogate key is the primary key column in the dimension table. This is different from the primary key in the source system. If you use the primary key of the source system as the surrogate key, then using that key column in the fact table can’t point out the changed value based on SCD Type 2. So it is important that this be another key column whose values are usually auto-incrementally created during the ETL process.

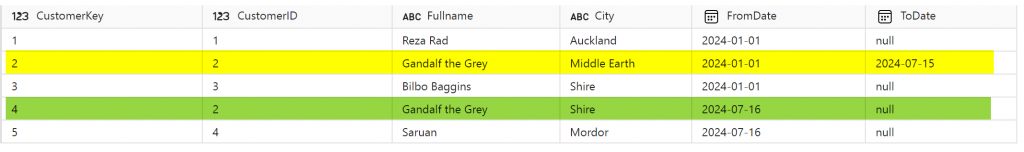

The FromDate and ToDate columns specify the period in which the values of dimension members are effective. For example, the image below shows that the customer, Reza Rad, was in New York City from March 1st to July 14th, 2024, and in Auckland from July 15th to the current date. The value of ToDate is nullable, and for the most current record, it will be null (blank).

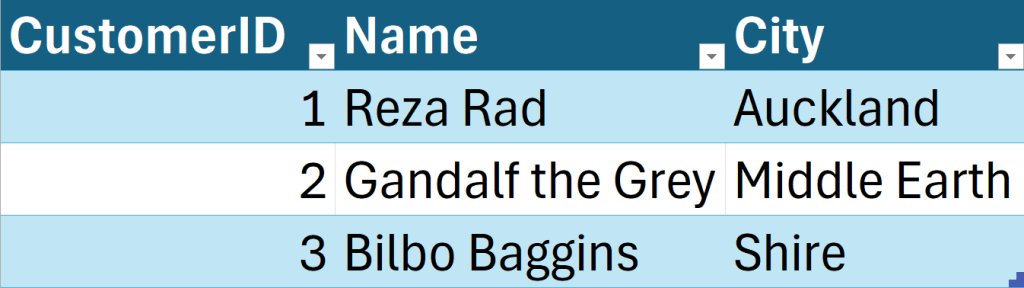

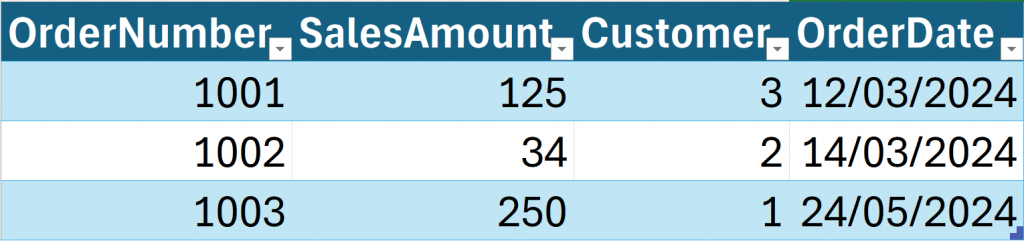

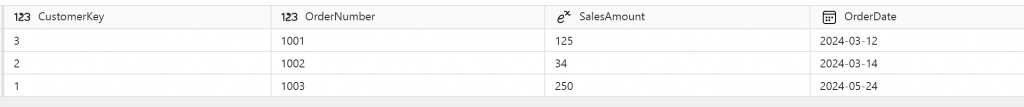

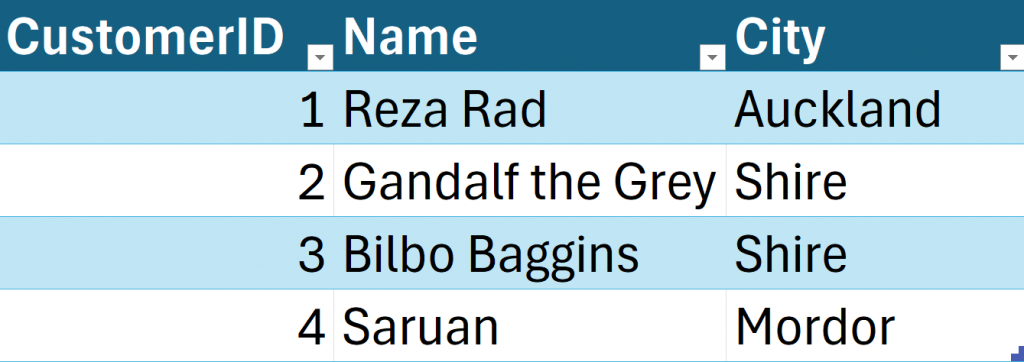

Example Source Tables:

Here is the example source table for the customer

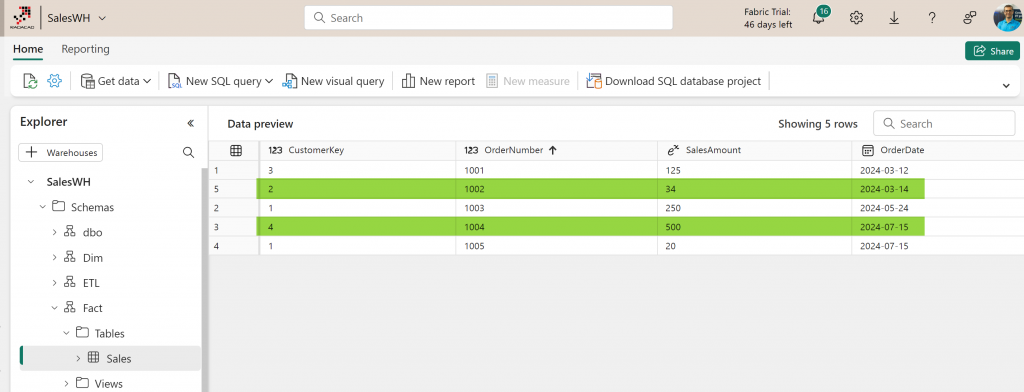

Here is the example source table for the sales transaction

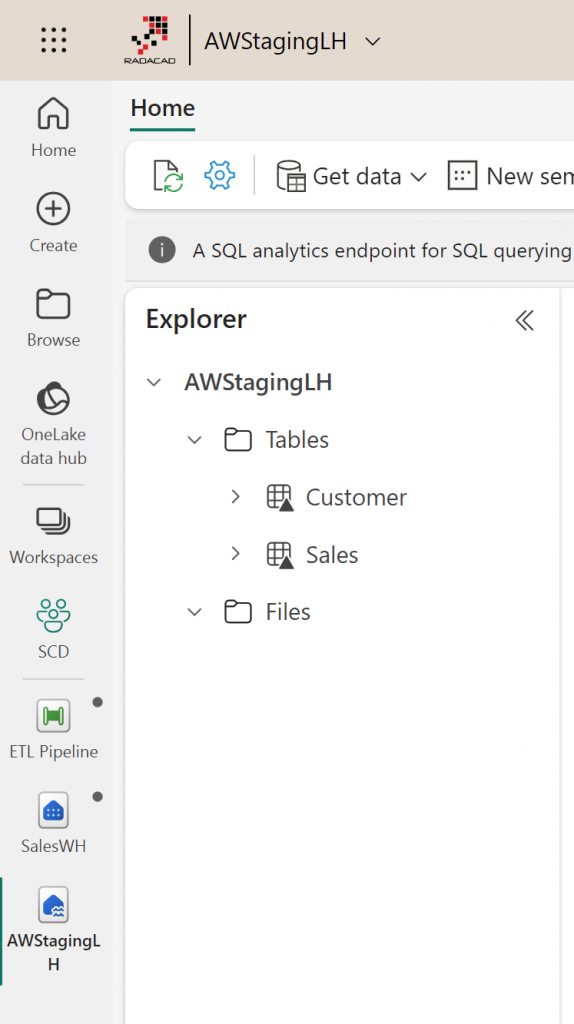

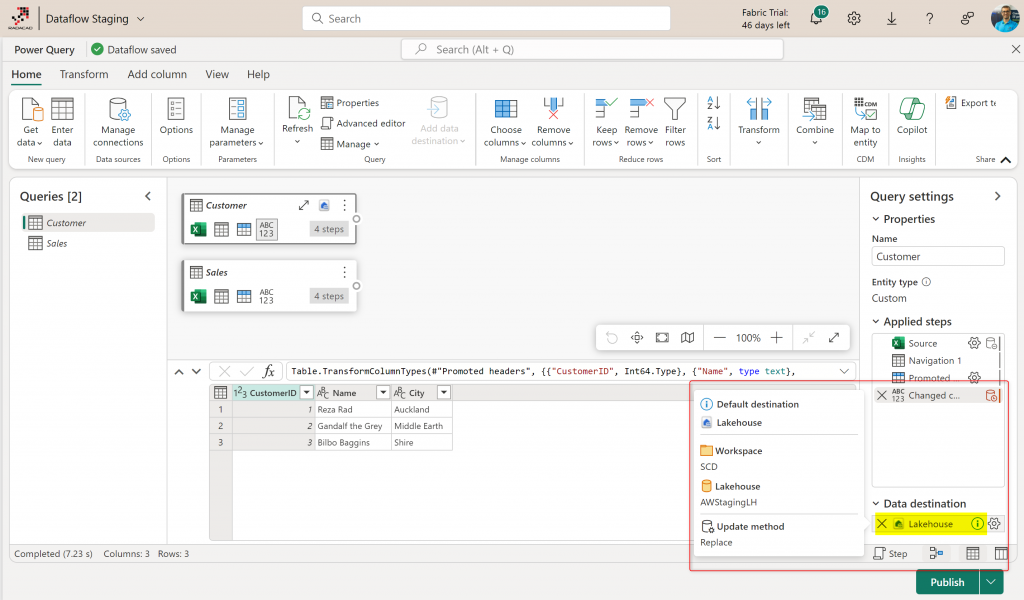

Lakehouse and Dataflow for Staging

Building a staging Lakehouse is not mandatory for this process but is very helpful. The method I will explain uses staging Lakehouse. A Staging Lakehouse will have the data of source tables exactly as-is copies without any changes or modifications.

Please note that the Dataflow comes with staging Lakehoues by default, but I created a custom staging Lakehouse for this example to clarify the process.

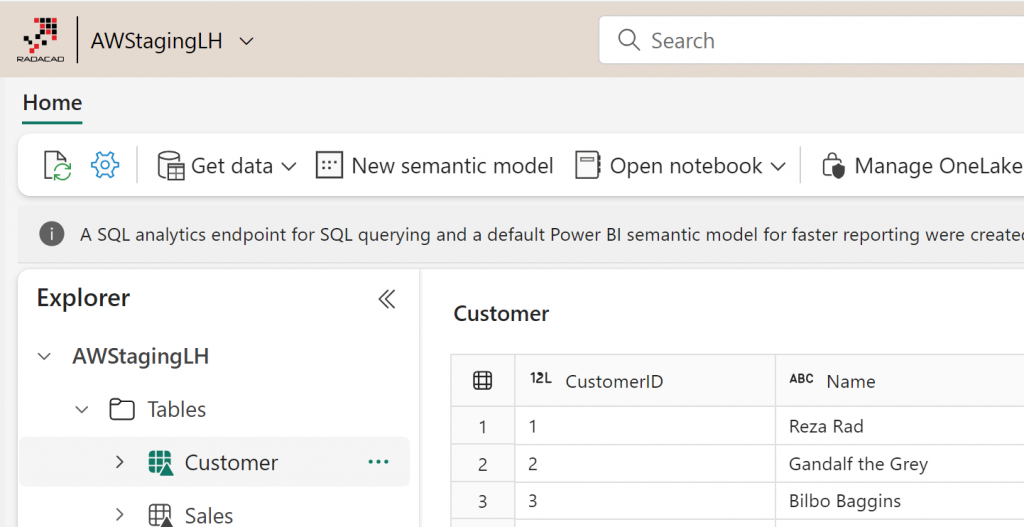

Then, I used a Dataflow Gen2 to load data into the staging Lakehouse.

The staging Lakehoues will have the two tables loaded in it as below.

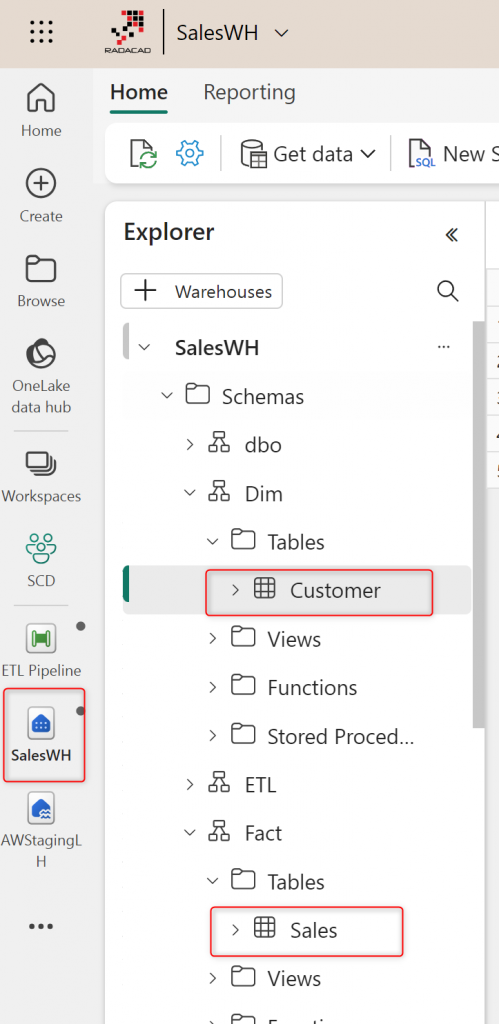

Warehouse for the Star Schema

In this example, I used a Warehouse for the star schema. We could have used the same Lakehouse for it using a different schema, too, but I created it separately to clarify things better.

Building Customer Dimension

The script below is used to create the Customer Dimension

CREATE TABLE [SalesWH].[Dim].[Customer] ( CustomerKey int NOT NULL, CustomerID int NOT NULL, Fullname VARCHAR(200) NOT NULL, City VARCHAR(200) NOT NULL, FromDate Date NOT NULL, ToDate Date NULL ) GO ALTER TABLE [SalesWH].[Dim].[Customer] ADD CONSTRAINT PK_DimCustomer PRIMARY KEY NONCLUSTERED (CustomerKey) NOT ENFORCED; GO

The three columns CustomerKey (surrogate key), FromDate, and ToDate are part of the definition.

Building Fact Sales

The script below is used to create the Fact Sales

CREATE TABLE [SalesWH].[Fact].[Sales]

(

CustomerKey int NOT NULL,

OrderNumber int NOT NULL,

SalesAmount DECIMAL NOT NULL,

OrderDate DATE NOT NULL

)

GO

The Fact table uses the CustomerKey (surrogate key) for relating to the Customer dimension, not the Customer ID (which is the key in the source system, in other words, natural key)

UPSERT: SCD Type 2 for Customer Dimension

Here comes the most important part of the implementation. We need a script that checks for change detection in the customer’s source table. In case the change is in City (that is our SCD Type 2 attribute), it will apply a UPSERT. UPSERT means Updating the old record, setting the ToDate, and inserting the new record.

Here is the script for UPSERT; this script is created as a stored procedure.

CREATE PROC [ETL].[UpsertDimCustomer]

AS

BEGIN

–an start date for the first load

Declare @FirstLoadDate DATE

set @FirstLoadDate=’2024-01-01′

Declare @MaxCustomerKey INT

select @MaxCustomerKey=isnull(max(CustomerKey),0) from SalesWH.Dim.Customer

–insert into SalesWH.Dim.Customer(CustomerID,Fullname,City,CustomerKey,FromDate)

— update

update SalesWH.Dim.Customer

set ToDate=Convert(Date,dateadd(day,-1,GetDate()))

where CustomerKey in

(

select

dm.CustomerKey

/*dm.CustomerKey,stg.CustomerID,stg.Name,stg.City,

ROW_NUMBER() OVER(ORDER BY stg.CustomerID ASC)+@MaxCustomerKey AS CustomerKey,

Convert(Date,GetDate())*/

from AWStagingLH.dbo.Customer stg

left outer join SalesWH.Dim.Customer dm

on stg.CustomerID=dm.CustomerID and dm.ToDate is null

where stg.City<>dm.City

)

— get the max ID again

select @MaxCustomerKey=isnull(max(CustomerKey),0) from SalesWH.Dim.Customer

— insert

insert into SalesWH.Dim.Customer(CustomerID,Fullname,City,CustomerKey,FromDate)

select stg.CustomerID,stg.Name,stg.City,

ROW_NUMBER() OVER(ORDER BY stg.CustomerID ASC)+@MaxCustomerKey AS CustomerKey,

case @MaxCustomerKey when 0 then @FirstLoadDate else Convert(Date,GetDate()) end as FromDate

from AWStagingLH.dbo.Customer stg

left outer join SalesWH.Dim.Customer dm

on stg.CustomerID=dm.CustomerID and dm.ToDate is null

where dm.CustomerKey is null

END

GO

*Note that this script can be written in many different ways; this is just one way.

Insert new rows in the Fact Table.

The next important thing is that the Fact table’s record gets added using the right CustomerKey. This is done using the join to the Customer dimension based on CustomerID (natural key) and the dates-between filter to check for the period in which that customer record is effective.

Here is the script for adding new records to the fact table:

CREATE PROC [ETL].[InsertNewFactSalesRecords]

AS

BEGIN

declare @LastETLDate Date

select @LastETLDate=max(OrderDate) from SalesWH.Fact.Sales

insert into SalesWH.Fact.Sales(CustomerKey,OrderNumber,SalesAmount,OrderDate)

select dim.CustomerKey,fact.OrderNumber,fact.SalesAmount,fact.OrderDate from AWStagingLH.dbo.Sales fact

inner join SalesWH.Dim.Customer dim

on fact.Customer=dim.CustomerID and fact.OrderDate between dim.FromDate and isnull(dim.ToDate,’9999-12-31′)

where OrderDate>isnull(@LastETLDate,’1900-01-01′)

END

GO

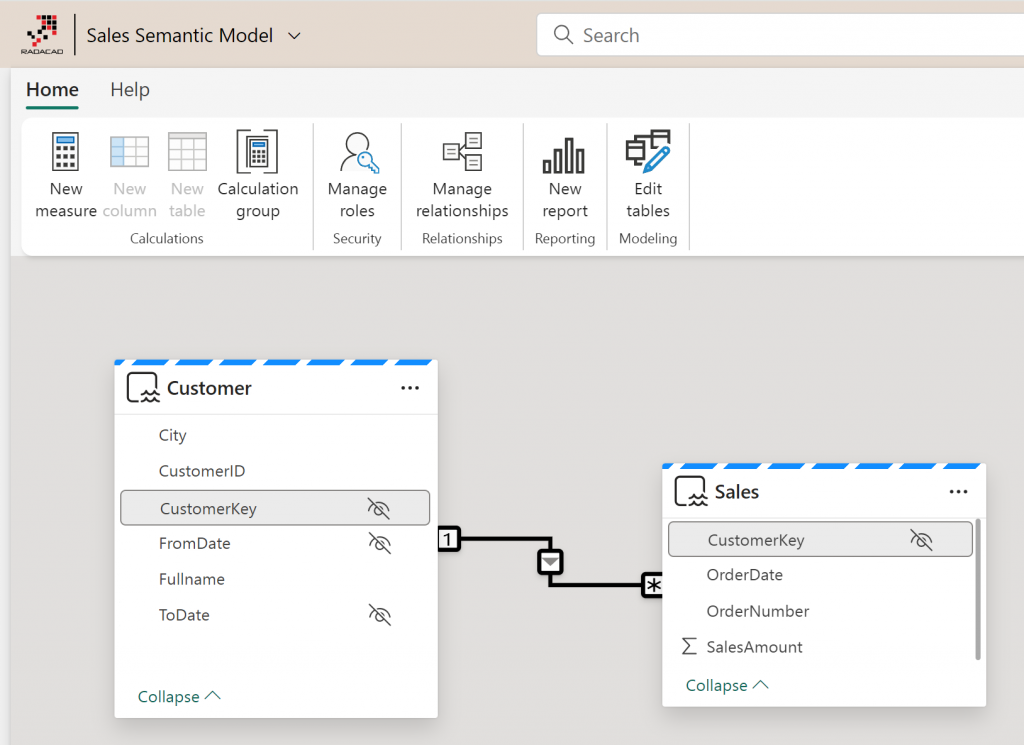

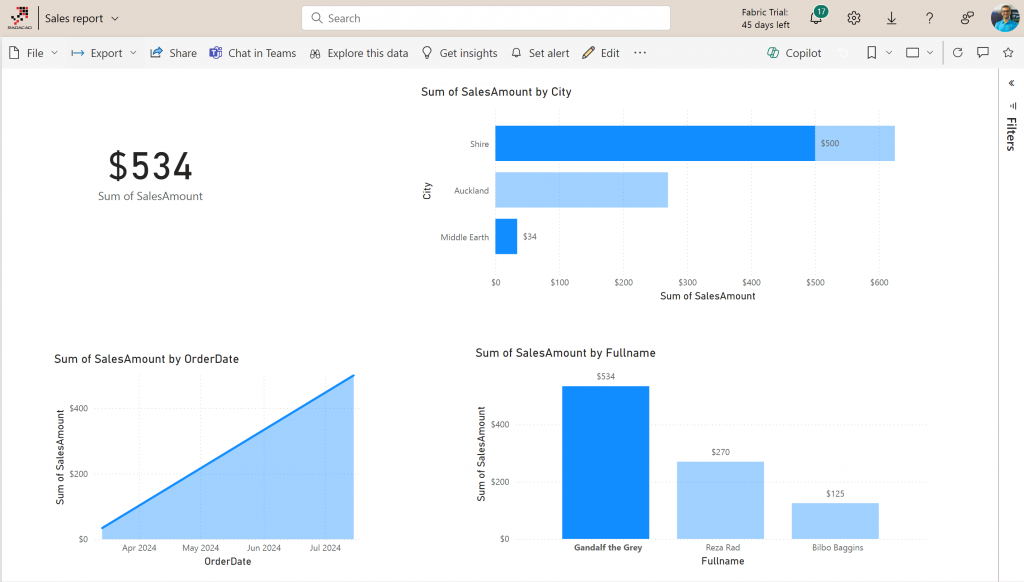

Power BI Semantic Model

Then, a simple custom semantic model is created on top of the Warehouse. The two tables are connected using the CustomerKey (surrogate key). The CustomerKey, FromDate, and ToDate columns are then hidden in the model editor as they are technical columns and not needed in the reporting.

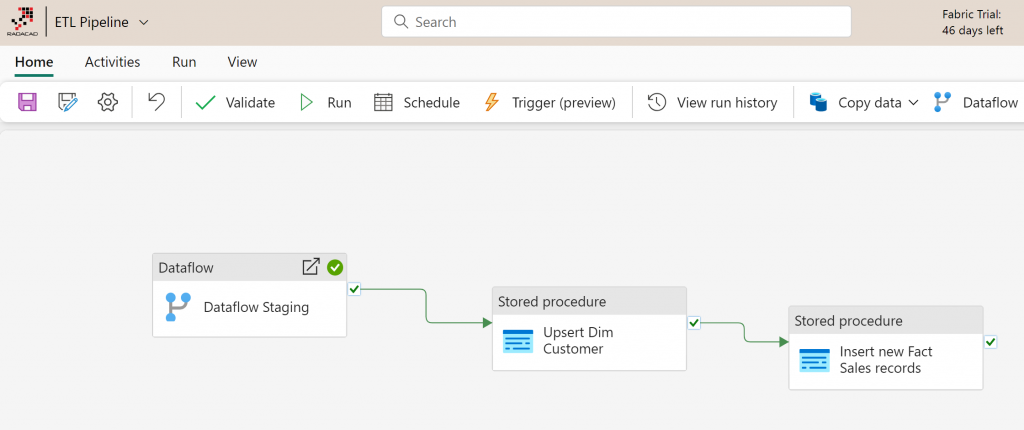

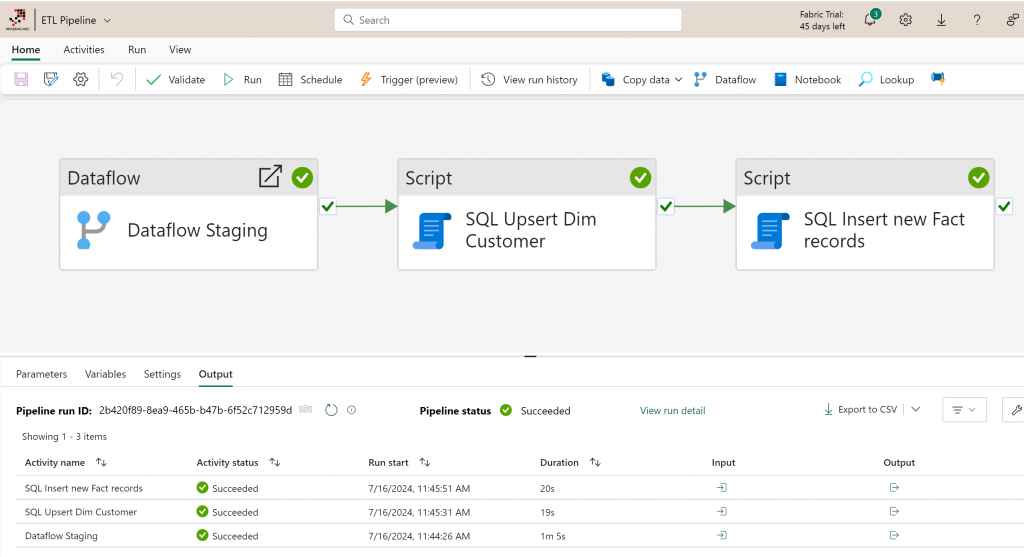

Pipeline to Orchestrate the Process

The three steps should run one after the other;

- Dataflow to load data into staging Lakehoues

- Upsert Customer dimension

- Insert new records into the Sales fact table

Here is the Pipeline that has all three steps as three activities (one is the Dataflow activity, and the other two are SQL Script activity)

Testing the work

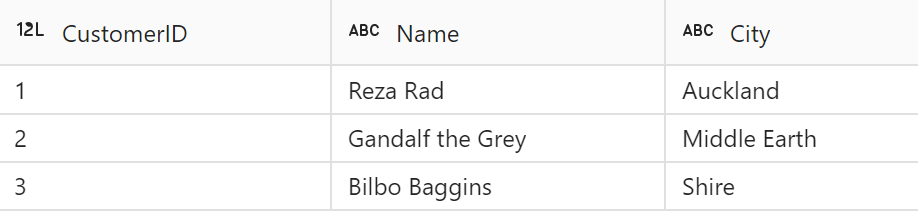

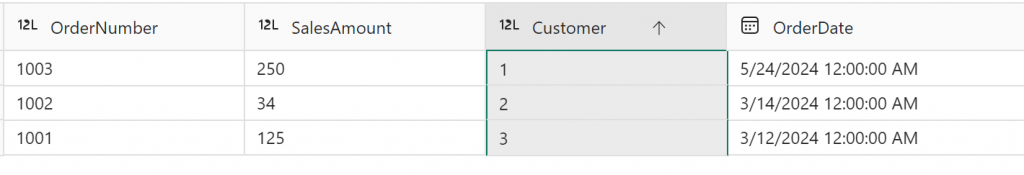

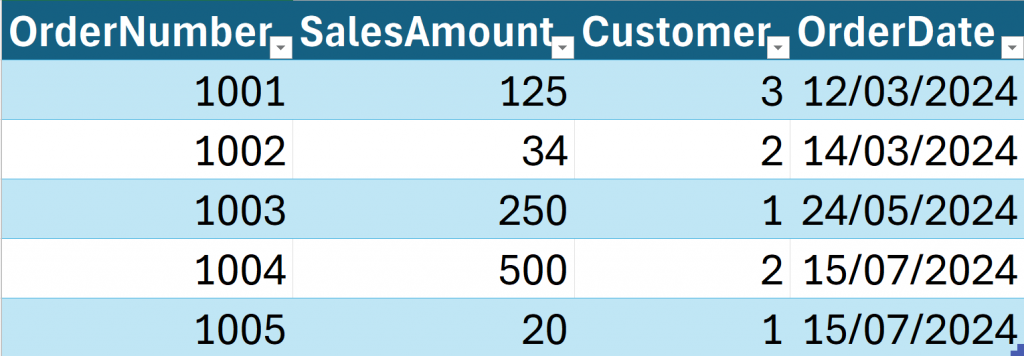

Here are the data in the source tables;

Customer table

Sales table

The initial execution of the pipeline will end with these data in the tables;

Customer dimension

Fact Sales

Then changes appear in the source system:

Customer source table

The changes are the City change for Gandalf the Grey, to Shire! And the new customer: Saruan!

The last two records are new.

The execution of the pipeline will apply the changes below:

Customer dimension table:

Note the two highlighted records showing SCD Type 2 implementation.

Fact Sales table:

The two highlighted records are for the same customer but with different values in their City column, the CustomerKey pointed to the correct customer record.

Finally, here is the Power BI report showing all the data. This report shows both customer cities.

Here are the Fabric objects used for this work

And here is a little diagram showing the process

Summary

SCD Type 2 is one of the most common types of Slowly Changing Dimensions. In this article, I explained one way of implementing it. There are, of course, many different ways that you can implement this in Microsoft Fabric. The key in all those methods is that the dimension table needs the three columns of surrogate key, FromDate, and ToDate. The fact table then relates to the dimension table using the surrogate key (which is looked up in the ETL process considering the natural key and the effective period date).

Please note that many details are omitted in the example mentioned here for simplicity; a real-world implementation of SCD will have more details.

To learn more about some of the concepts mentioned in this article, read the below articles: