Dataflows are useful for many scenarios when dealing with Power BI. One of their big advantages is the data source isolation and anything that comes with that. Benefits of such architecture are many, including datasets independent from the data source changes, and managing security in another layer from the data source. In this article, I’ll walk you through that architecture and explain the benefits of it.

New to dataflows?

If you are new to dataflows, I strongly suggest reading RADACAD’s article series about dataflows as below;

- What is the Common Data Model and Why Should I Care? Part 3 of Dataflow Series in Power BI

- Getting Started With Dataflow in Power BI – Part 2 of Dataflow Series

- What are the Use Cases of Dataflow for You in Power BI?

- Linked Entities and Computed Entities; Dataflows in Power BI Part 4

- How to Use Dataflow to Make the Refresh of Power BI Solution FASTER!

- Workaround for Computed Entity in Power BI Pro: Dataflow in Power BI

- Move Your Shared Tables to Dataflow; Build a Consistent Table in Power BI

- Power BI Architecture for Multi-Developer Tenant Using Dataflows and Shared Datasets

- Refresh Power BI Queries Through Power Platform Dataflows: Unlimited Times with Any Frequency

- Reference from Another Query in Dataflow – No Premium Needed – Power Platform Dataflows

- Dataflow Vs. Dataset: What are the Differences of these two Power BI Components

- Working with Records, Lists, and Values in Power BI Dataflows

- AI in Dataflow, Power BI web service, Cognitive Service -Part1

- AI in Dataflow, Power BI Service, Auto Azure ML – Part2

- AI in Dataflow, Azure ML Studio Integration with Power BI Services – Part 3

Data source isolation architecture

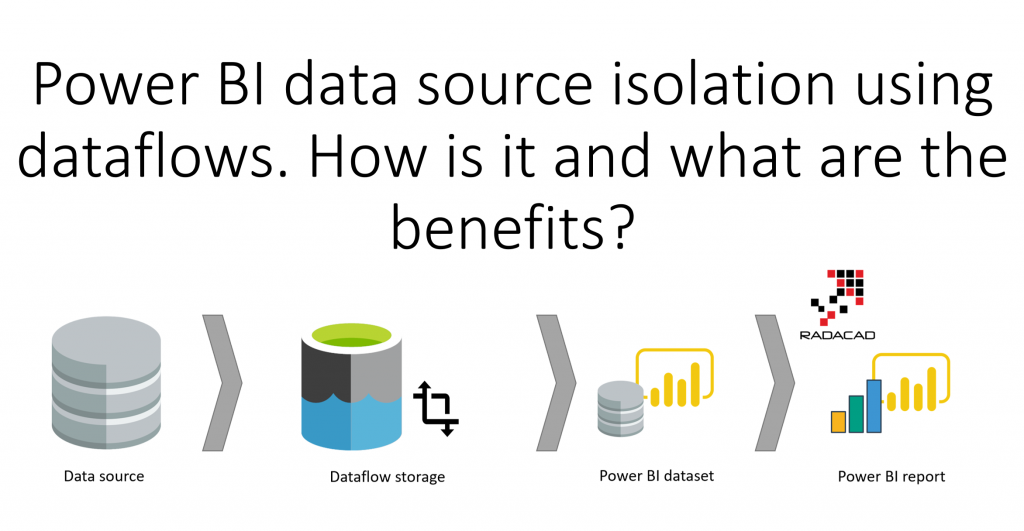

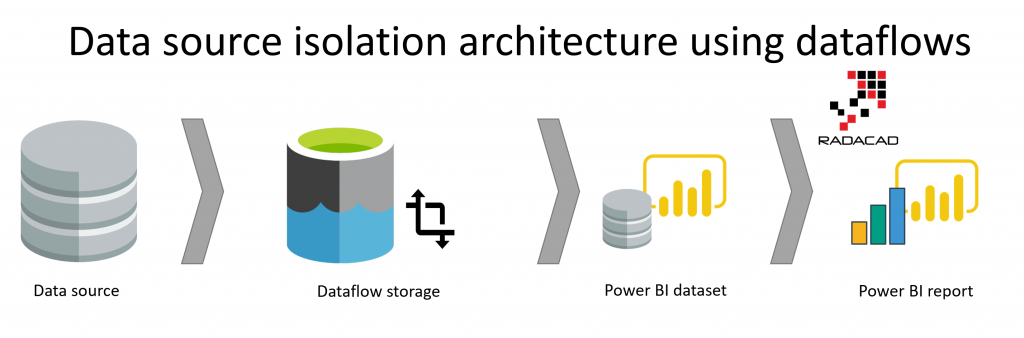

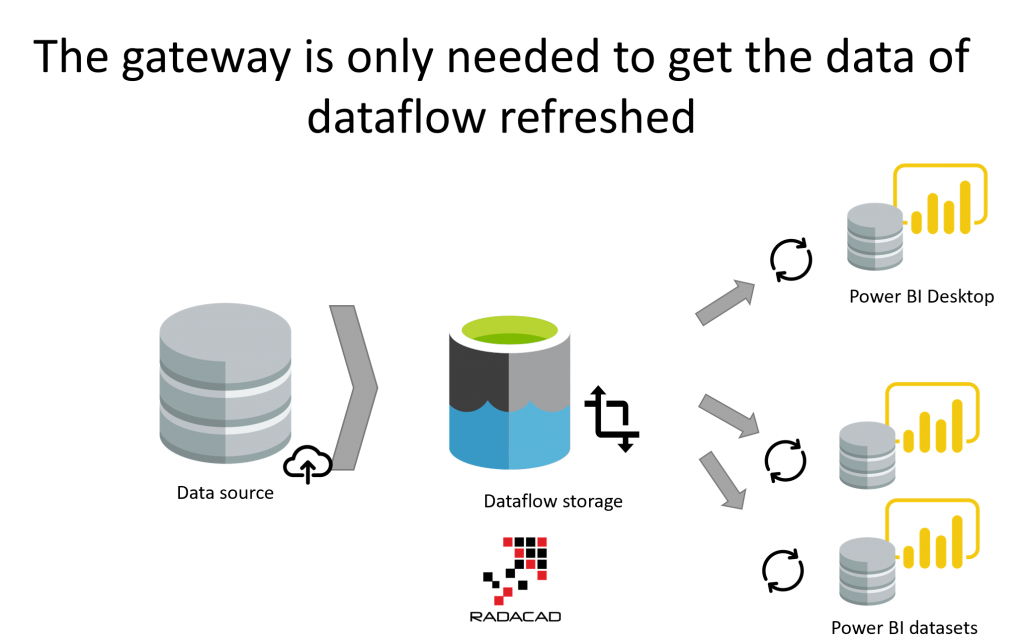

dataflows can be used as a layer between the data source and Power BI datasets. This can be done even if the data source is a SQL Server database, or any other relational data stores. Here is what the architecture looks like;

The architecture above is adding a layer of dataflow storage in between the data source and the Power BI dataset. This layer can also include some transformations (to reduce the amount of rows with filtering, or transforming the data), or it can be merely used as another storage before the Power BI dataset uses that data as a source.

Benefits of data source isolation architecture

There are many benefits to the architecture mentioned above, here are some of those.

- Reduce read from the data source

- The security access for users can be applied to the dataflow

- A smaller view of the data can be shared through the dataflow

- Reduces the gateway requirement for dataset refreshes

- Access to the data source from anywhere with an internet connection (No VPN or on-prem connections is needed)

- Any change/upgrade of the data source will not have an effect on the datasets

Let’s have a closer look at the items above.

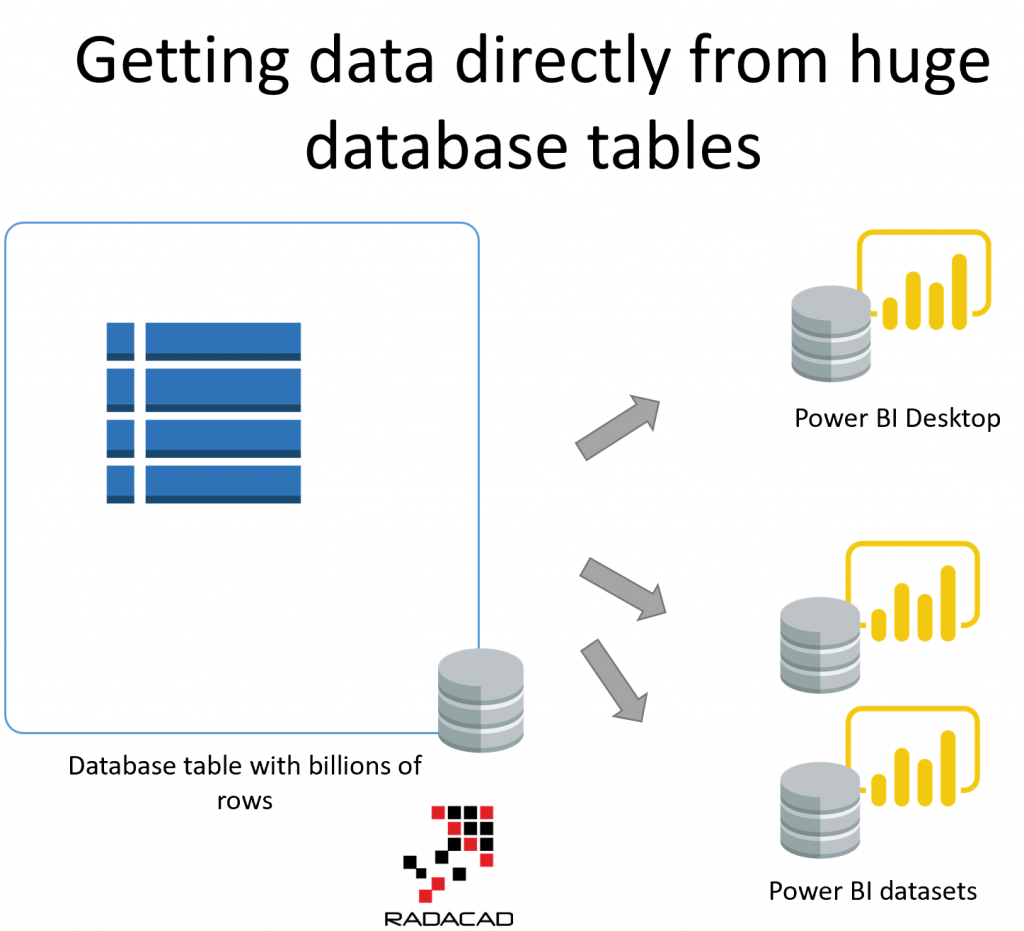

Reduce the read from the data source

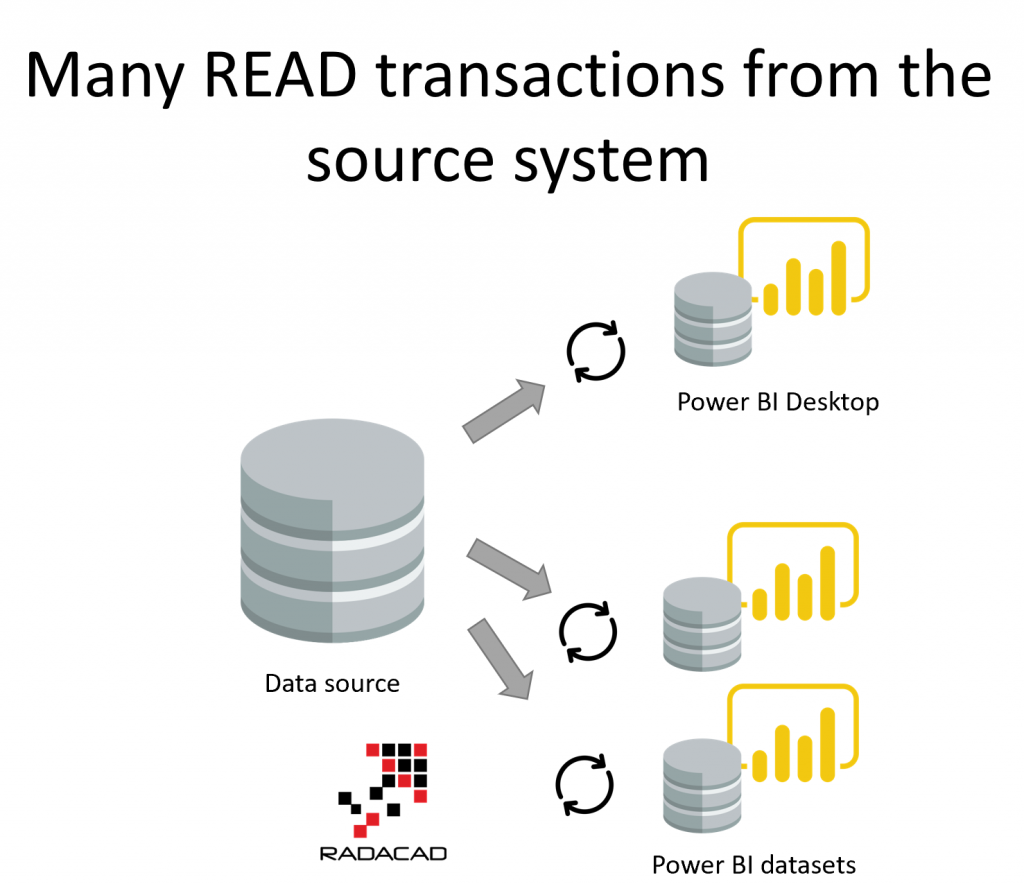

If the Power BI dataset (or report) gets data directly from the data source, then everytime for a refresh it will READ data from the data source. This can be at the scheduled refresh times of the published dataset, or even at the times that a report developer refreshes the data in the Power BI Desktop.

In scenarios that the data is read from an operational source system, reducing the amount of READs from the source system is highly recommended. The last thing you want is the operational system users to feel a performance drop in their live system whenever a Power BI dataset refreshes at their peak workload!

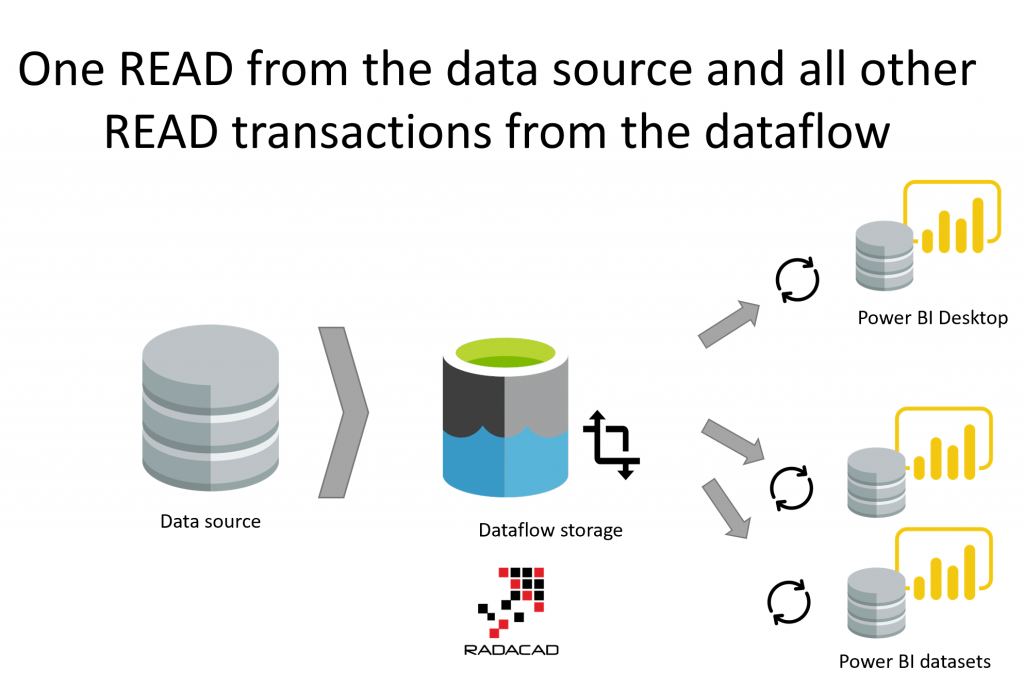

dataflows used as the layer between the data source and the dataset, means datasets will have READ from the dataflow instead of the data source.

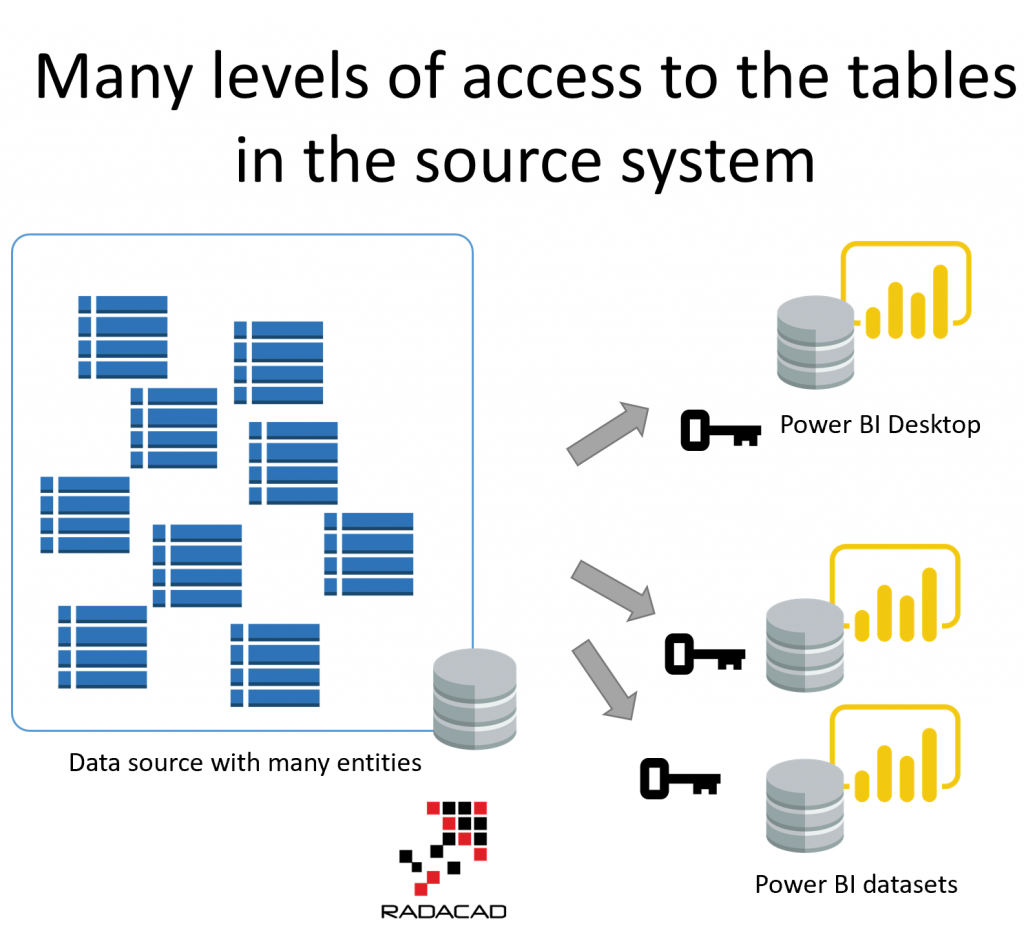

The security access for users can be applied to the dataflow

Often the data source includes more data and entities than what used in the report. Usually getting access to the source system requires going through processes and sign-offs which might take a lot of steps to go through especially because there are more in the data source than needed in the report.

Giving access to a SQL server database with hundreds of tables is something that needs a lot of considerations. However, if there is a dataflow layer on top of the data source with only two of those tables, then it would be much easier to give access to users to that dataflow.

dataflow adds another layer of security to the data.

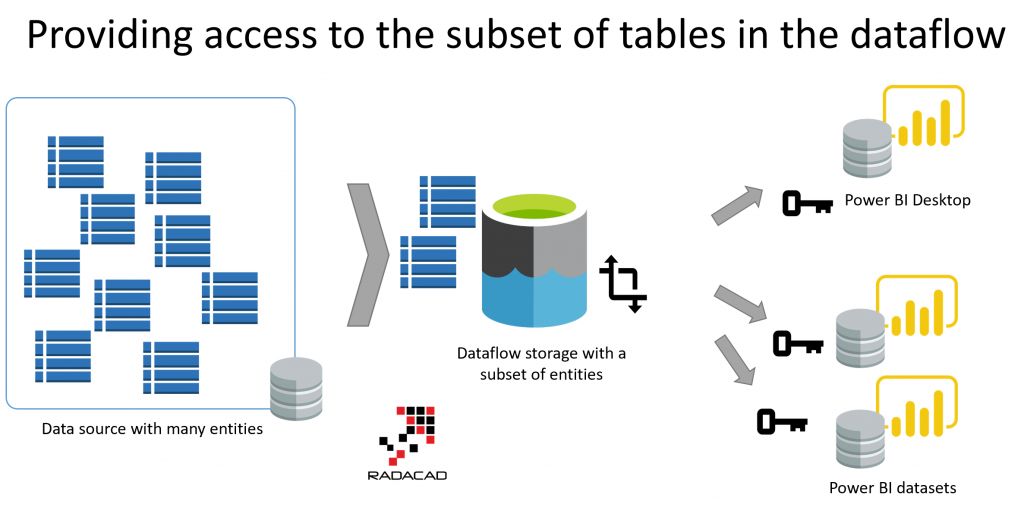

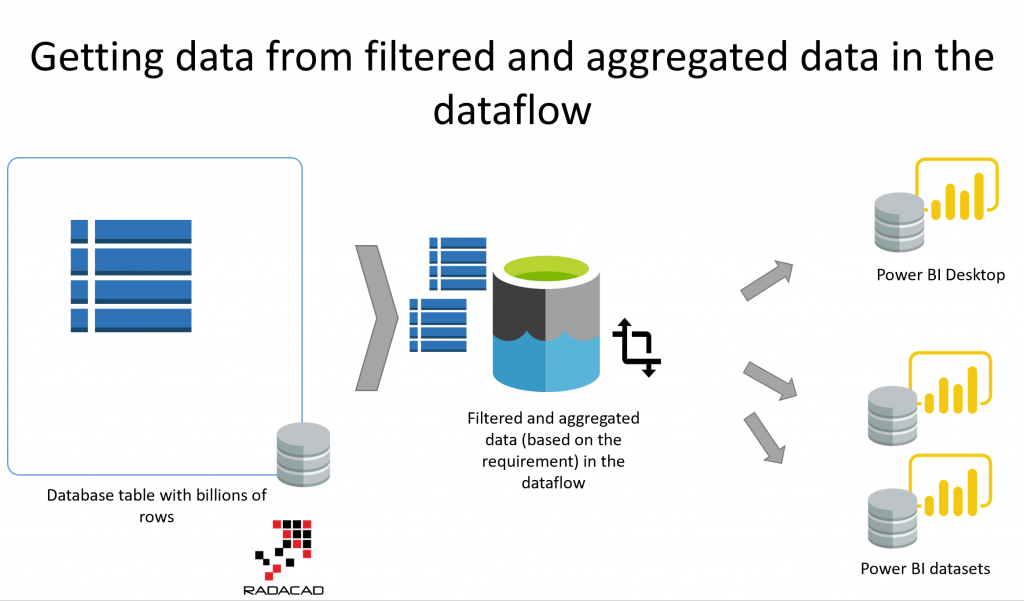

A smaller view of the data can be shared through the dataflow

One of the main concerns of DBAs or database managers when asked to get access to the database table is the amount of data read from the database. If there is a table with 500 millions of rows in the database, and someone tries to import all of that table into Power BI, it might impact the performance of the data source.

Dataflow can be used as a layer on top of the data source, which has only the required data in it. It might be aggregated data or data filtered for the last previous periods needed for analysis.

Reduces the gateway requirement for dataset refreshes

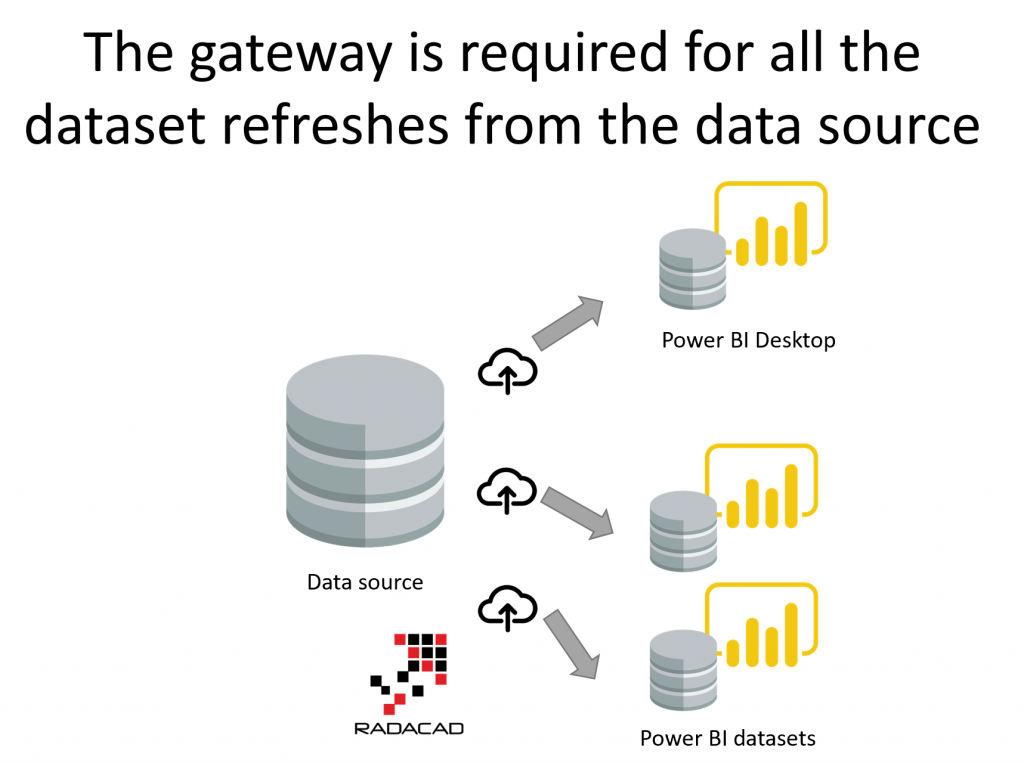

Gateway is bringing an extra element in the refresh of a dataset if the data source is located on-premises. Each dataset that uses that data source would need to go through that gateway for the refresh process.

When dataflow used as a layer between the dataset and the data source, a gateway would be just needed for the dataflow refresh, but not for the datasets. The dataset refreshes as a result can be faster and more reliable.

Access to the data source from anywhere with an internet connection (No VPN or on-prem connections is needed)

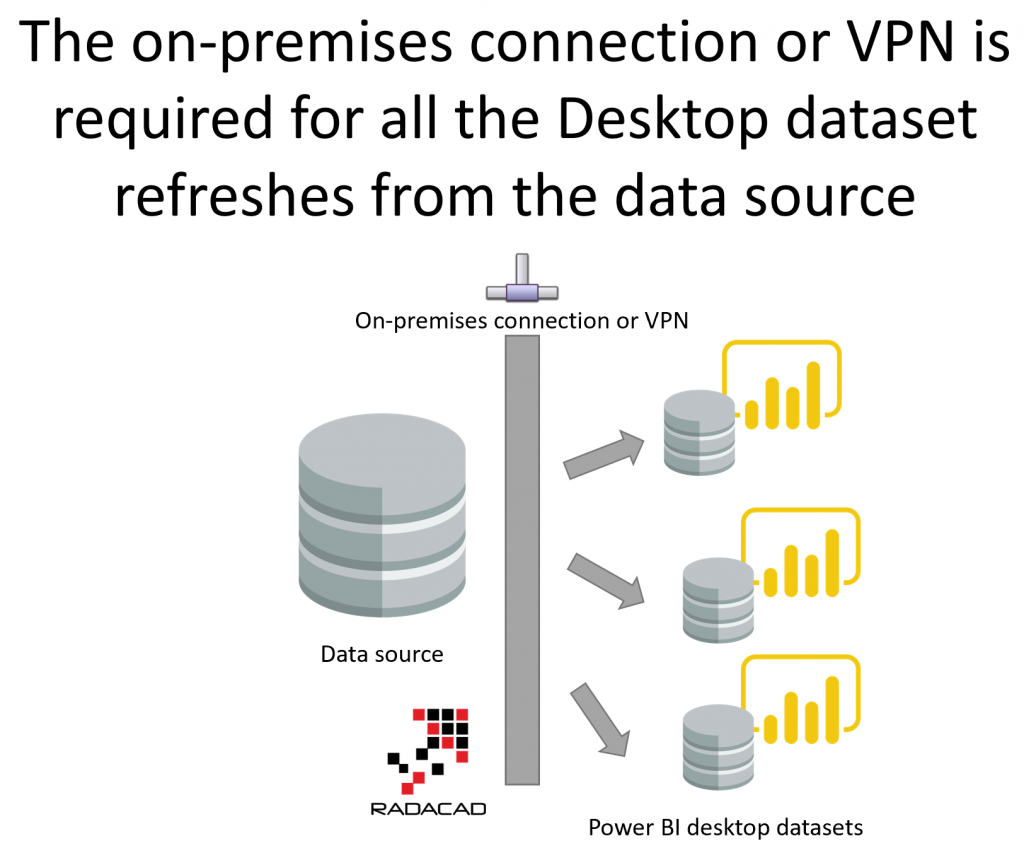

Often report developers, have the need to be connected to a VPN or on-premises network to develop reports that are getting data from an on-premises data source.

If dataflow is used as a layer in between, then report developers just need an internet connection.

Any change/upgrade of the data source will not have an effect on the datasets

Introducing a layer between the data source and the datasets is reducing the metadata dependency to the source system.

If there are 15 Power BI datasets using a SQL Server database table, and the database is now moved to Oracle database, then all of those 15 datasets have to be updated with changes in their get data and their Power Query code.

However, if those 15 Power BI datasets are getting their data from a dataflow, they won’t be impacted at all. if the database system moves to oracle. Only the dataflow metadata and code has to be updated. Maintaining the solution would be much easier this way.

What if I use SQL Server database/data warehouse combined with SSIS or ADF instead of dataflow?

This is a question that I often get from database professionals whenever I talk about dataflow. You might ask; “Reza, you talked about all these benefits of dataflows, but I can re-produce the same with a database (SQL Server for example), and a data transformation tool or service (SSIS or Azure data factory for example). what is the difference?”

This is a great question to ask. My answer is that, don’t bind yourself to a tool or technology. Get the concept and then find a good tool or service to apply it.

The concept mentioned here is to have a layer of data storage between the source data and the Power BI dataset. For many Power BI users without having a database background, dataflow storage and transformation is a great way to achieve that. However, if you are a database professional and good skilled with databases and data integration technologies, then choose your tool and implement it your way.

Summary

Data source isolation is one of the techniques that I highly recommend in a Power BI architecture. It comes with many benefits as listed above, however, it adds a layer in the Power BI development, and it might require more development time in the beginning. However, the effort at the beginning worth the gains in long term.

Have you faced situations that you needed data source isolation? please share your experience below in the comments.